AI Native Daily Paper Digest – 20250102

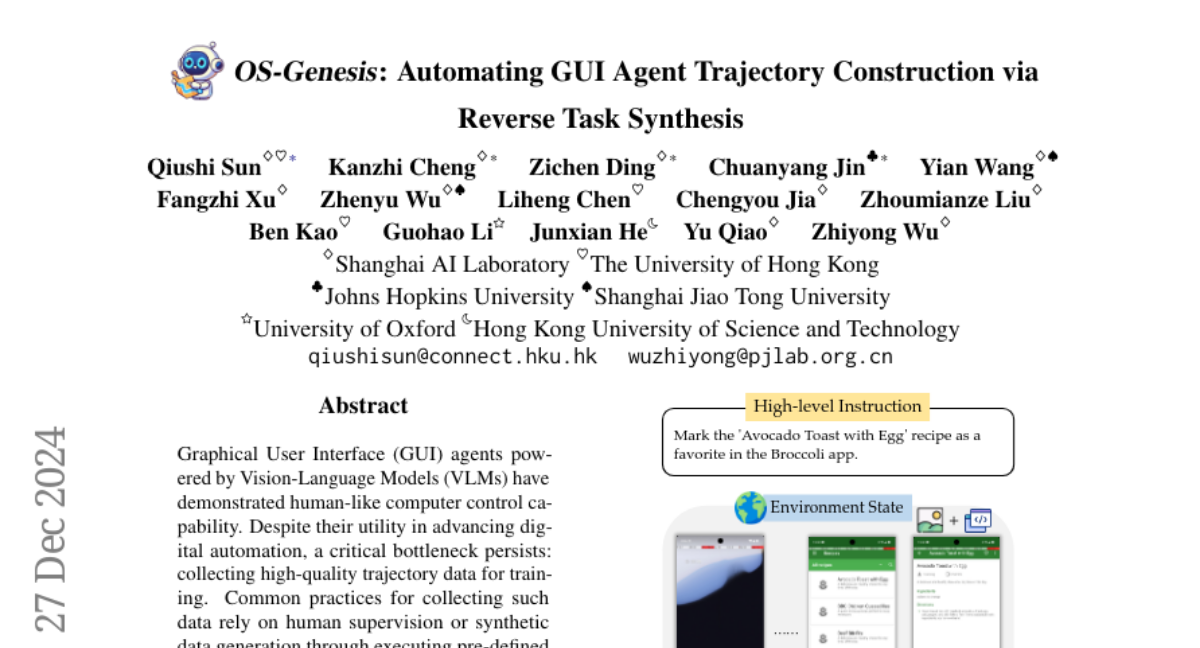

1. OS-Genesis: Automating GUI Agent Trajectory Construction via Reverse Task Synthesis

🔑 Keywords: Vision-Language Models, GUI agents, OS-Genesis, GUI data synthesis

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to overcome the limitations of current data collection methods for training GUI agents by introducing OS-Genesis, a new data synthesis pipeline.

🛠️ Research Methods:

– OS-Genesis reverses the traditional trajectory collection process by enabling agents to interact with environments first, then derive tasks retrospectively to enhance data quality and diversity.

💬 Research Conclusions:

– Training GUI agents with OS-Genesis significantly boosts their performance on challenging online benchmarks and ensures superior data quality and diversity compared to existing methods.

👉 Paper link: https://huggingface.co/papers/2412.19723

2. Xmodel-2 Technical Report

🔑 Keywords: Xmodel-2, large language model, reasoning tasks, state-of-the-art performance

💡 Category: Natural Language Processing

🌟 Research Objective:

– Develop Xmodel-2, a large language model specifically for enhancing reasoning tasks.

🛠️ Research Methods:

– Implement a unified architecture that allows for shared hyperparameters across model scales and the use of the WSD learning rate scheduler to maximize training efficiency.

💬 Research Conclusions:

– Xmodel-2 demonstrates state-of-the-art performance in complex reasoning and agent tasks while maintaining low training costs, with resources available on GitHub.

👉 Paper link: https://huggingface.co/papers/2412.19638

3. HUNYUANPROVER: A Scalable Data Synthesis Framework and Guided Tree Search for Automated Theorem Proving

🔑 Keywords: HunyuanProver, interactive theorem proving, data synthesis, SOTA

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study introduces HunyuanProver, a language model fine-tuned for interactive automatic theorem proving using LEAN4.

🛠️ Research Methods:

– The researchers developed a scalable framework for iterative data synthesis to combat data sparsity issues and integrated guided tree search algorithms for enhanced reasoning.

💬 Research Conclusions:

– HunyuanProver achieved state-of-the-art performance on major benchmarks, notably outperforming current results with a 68.4% pass rate on miniF2F-test. It also successfully proved four IMO statements, and a dataset of 30k synthesized instances will be open-sourced for community benefit.

👉 Paper link: https://huggingface.co/papers/2412.20735

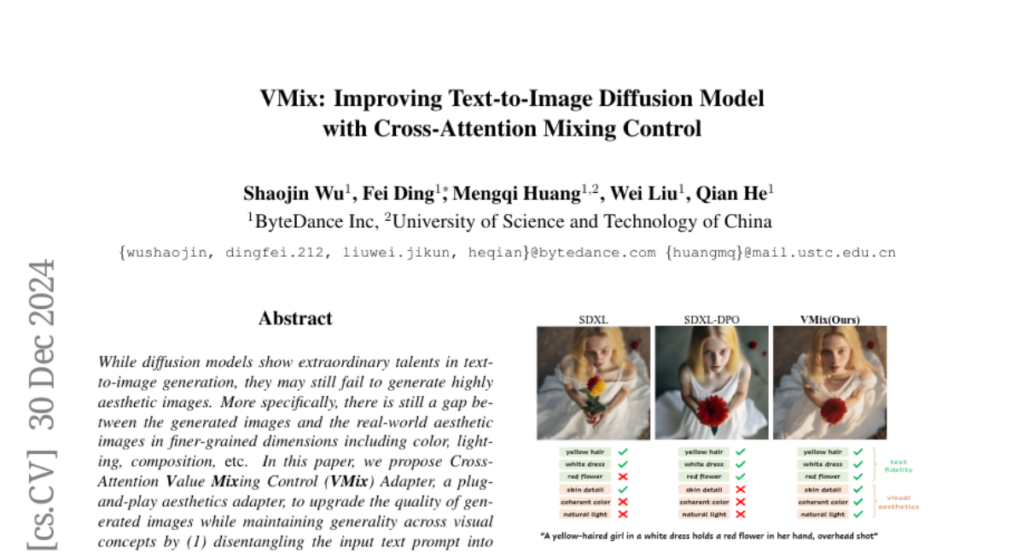

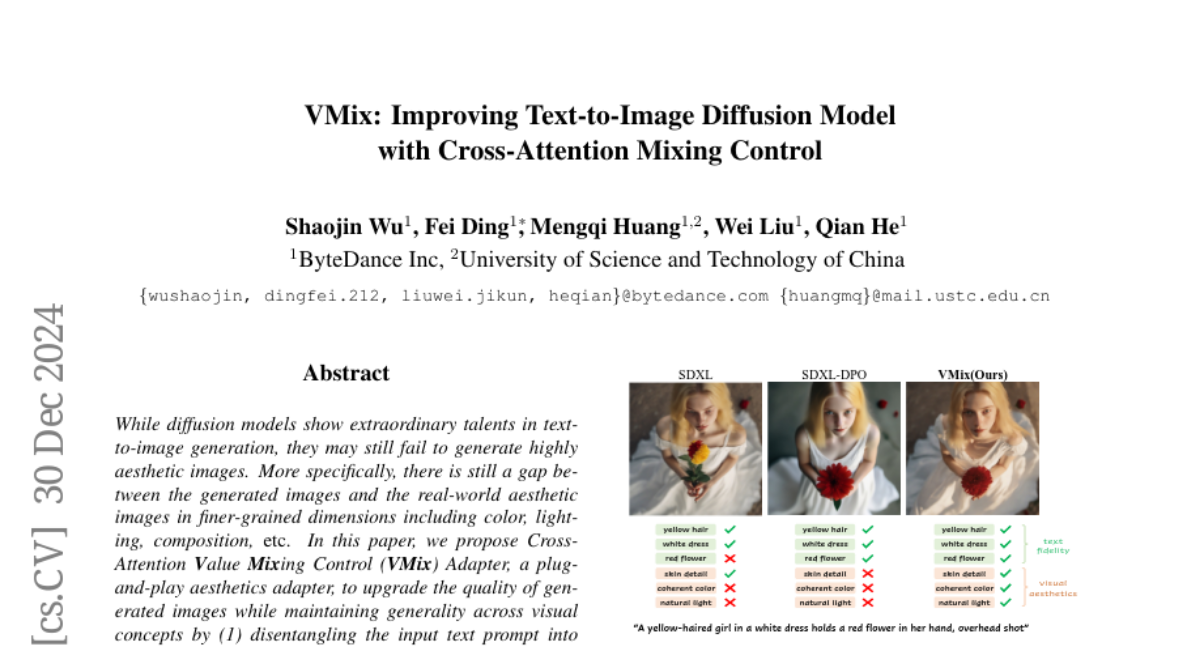

4. VMix: Improving Text-to-Image Diffusion Model with Cross-Attention Mixing Control

🔑 Keywords: Diffusion models, Aesthetic images, Cross-Attention Value Mixing Control, VMix Adapter, Image generation

💡 Category: Generative Models

🌟 Research Objective:

– To improve the aesthetic quality of images generated by diffusion models while maintaining text-image alignment and visual concept generality.

🛠️ Research Methods:

– Introduced the VMix Adapter, which disentangles input text into content and aesthetic descriptions and integrates aesthetic conditions using value-mixed cross-attention in the denoising process.

💬 Research Conclusions:

– VMix enhances the aesthetic quality of generated images and outperforms existing state-of-the-art methods, showing compatibility with community modules like LoRA and ControlNet without retraining.

👉 Paper link: https://huggingface.co/papers/2412.20800

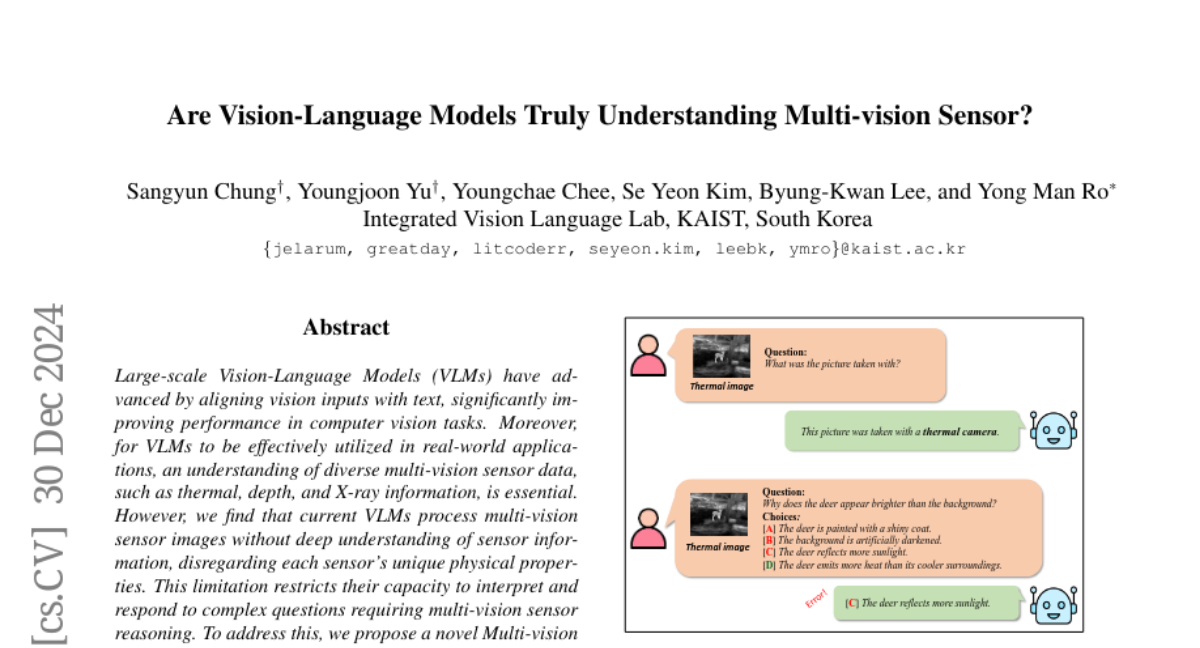

5. Are Vision-Language Models Truly Understanding Multi-vision Sensor?

🔑 Keywords: Vision-Language Models, multi-vision sensor reasoning, Diverse Negative Attributes

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Address the limitation of current Vision-Language Models in understanding diverse multi-vision sensor data.

🛠️ Research Methods:

– Introduce the Multi-vision Sensor Perception and Reasoning (MS-PR) benchmark and Diverse Negative Attributes (DNA) optimization to enhance deep reasoning in VLMs.

💬 Research Conclusions:

– Experimental results demonstrate that the DNA method significantly improves multi-vision sensor reasoning in Vision-Language Models.

👉 Paper link: https://huggingface.co/papers/2412.20750