AI Native Daily Paper Digest – 20250103

1. 2.5 Years in Class: A Multimodal Textbook for Vision-Language Pretraining

🔑 Keywords: Vision-Language Models, Multimodal Textbook, Instructional Videos, Image-Text Alignment

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce a high-quality multimodal textbook corpus for VLM pretraining utilizing instructional videos.

🛠️ Research Methods:

– Systematically gather and curate instructional videos using a taxonomy; extract visual, audio, and textual knowledge to organize an image-text interleaved corpus.

💬 Research Conclusions:

– The new corpus provides superior pretraining performance, enhancing context coherence and knowledge-richness for tasks such as ScienceQA and MathVista, and improves interleaved context awareness in VLMs.

👉 Paper link: https://huggingface.co/papers/2501.00958

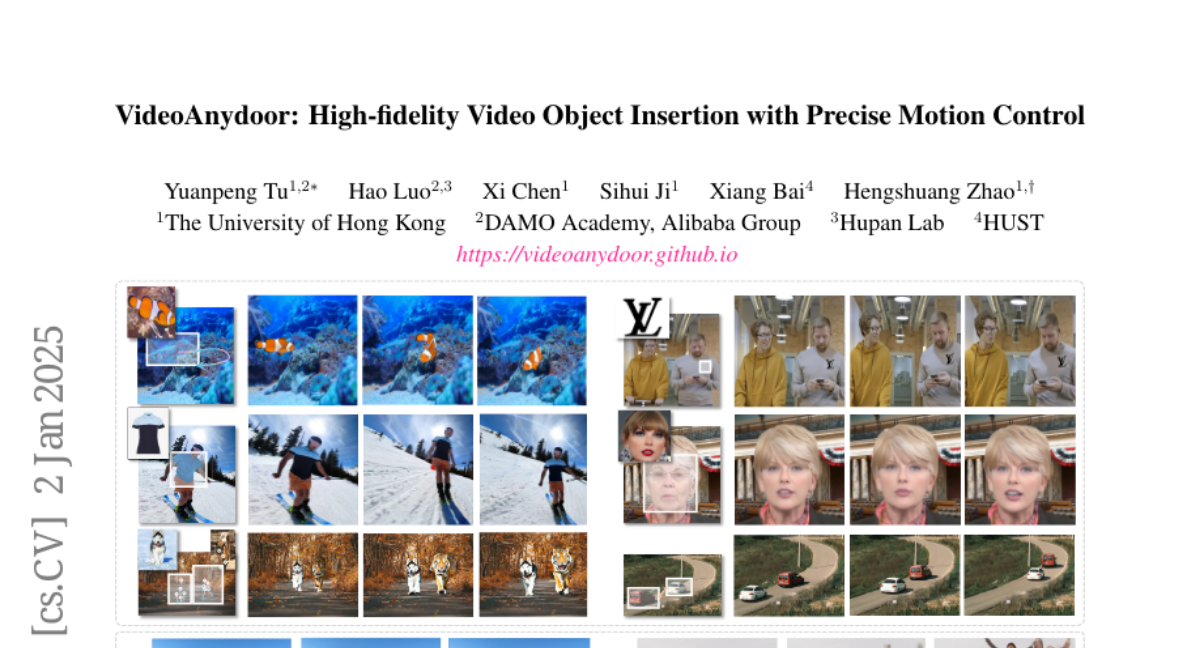

2. VideoAnydoor: High-fidelity Video Object Insertion with Precise Motion Control

🔑 Keywords: VideoAnydoor, zero-shot, motion control, detail preservation, pixel warper

💡 Category: Generative Models

🌟 Research Objective:

– To develop VideoAnydoor, a framework for inserting objects into videos with high-fidelity detail preservation and precise motion control.

🛠️ Research Methods:

– Utilizing an ID extractor and box sequence for global identity and motion control.

– Designing a pixel warper to manipulate pixel details with key-points and trajectories, supported by a diffusion U-Net.

– Implementing a training strategy using both videos and static images with a reweight reconstruction loss.

💬 Research Conclusions:

– VideoAnydoor outperforms existing methods and supports various applications like talking head generation and video virtual try-on without task-specific fine-tuning.

👉 Paper link: https://huggingface.co/papers/2501.01427

3. CodeElo: Benchmarking Competition-level Code Generation of LLMs with Human-comparable Elo Ratings

🔑 Keywords: Large Language Models, CodeElo, Benchmarks, Code Reasoning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The paper aims to develop CodeElo, a standardized competition-level code generation benchmark that addresses the limitations of existing benchmarks.

🛠️ Research Methods:

– The research involves compiling recent CodeForces contest problems, introducing a unique judging method, and developing an Elo rating calculation system comparable to human participants.

💬 Research Conclusions:

– CodeElo provides Elo ratings for popular open-source and proprietary LLMs, with o1-mini and QwQ-32B-Preview models achieving significantly higher ratings compared to others. Analysis also offers insights into performance across different algorithms and programming languages.

👉 Paper link: https://huggingface.co/papers/2501.01257

4. VideoRefer Suite: Advancing Spatial-Temporal Object Understanding with Video LLM

🔑 Keywords: Video Large Language Models, spatial-temporal understanding, object-level video instruction, VideoRefer-700K

💡 Category: Computer Vision

🌟 Research Objective:

– To enhance Video Large Language Models for fine-grained spatial-temporal video understanding and reasoning on object-level details.

🛠️ Research Methods:

– Developed a dataset named VideoRefer-700K using a multi-agent data engine for high-quality video instructions.

– Created a VideoRefer model with a spatial-temporal object encoder and established VideoRefer-Bench for assessment.

💬 Research Conclusions:

– The VideoRefer model significantly improves performance on video referring benchmarks and enhances general video understanding capabilities.

👉 Paper link: https://huggingface.co/papers/2501.00599

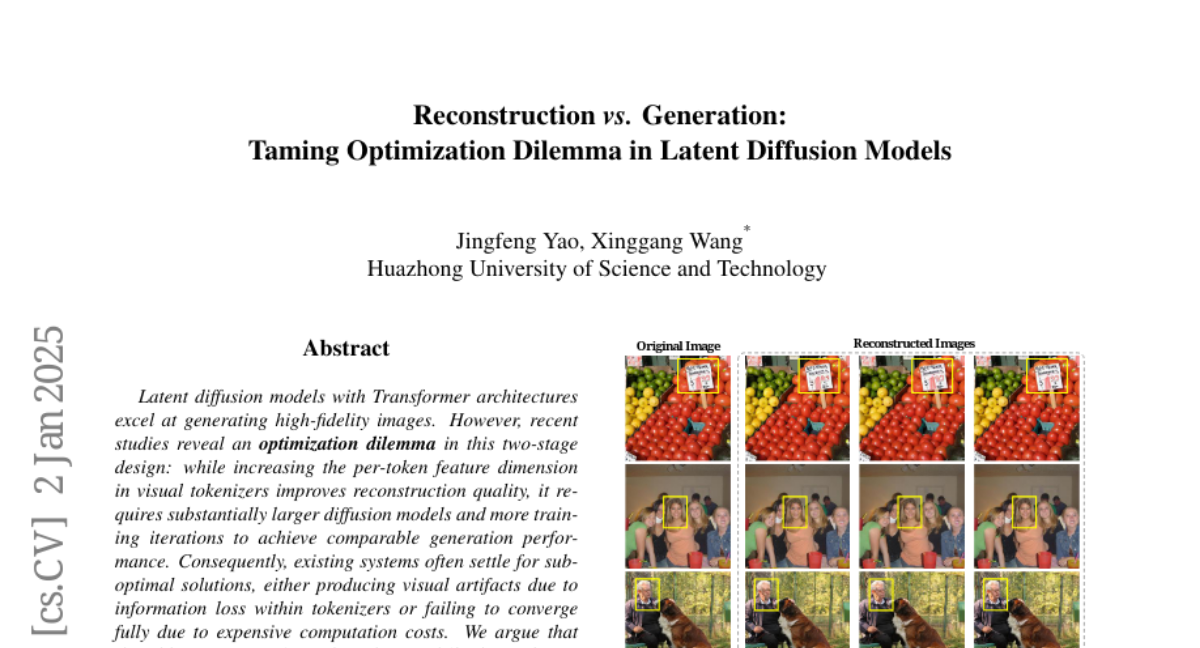

5. Reconstruction vs. Generation: Taming Optimization Dilemma in Latent Diffusion Models

🔑 Keywords: Latent diffusion models, Transformer architectures, Vision foundation models, VA-VAE, LightningDiT

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to overcome the optimization challenges in latent diffusion models with Transformer architectures, enhancing the reconstruction-generation trade-off and convergence speed.

🛠️ Research Methods:

– The study introduces VA-VAE, aligning the latent space with pre-trained vision foundation models, and develops LightningDiT with improved training strategies and architecture designs.

💬 Research Conclusions:

– The proposed solution achieves state-of-the-art performance on ImageNet 256×256 and significantly improves training efficiency, with a notable convergence speedup over the original model.

👉 Paper link: https://huggingface.co/papers/2501.01423

6. ProgCo: Program Helps Self-Correction of Large Language Models

🔑 Keywords: Self-Correction, Large Language Models, Complex Reasoning, Program-driven Verification, Program-driven Refinement

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance the self-correction abilities of large language models by enabling them to self-verify and self-refine their responses.

🛠️ Research Methods:

– Introduction of Program-driven Self-Correction (ProgCo), encompassing program-driven verification (ProgVe) and program-driven refinement (ProgRe), utilizing self-generated pseudo-programs for logic verification and response refinement.

💬 Research Conclusions:

– ProgCo significantly improves self-correction in LLMs, particularly in complex reasoning tasks, and demonstrates enhanced performance when combined with real program tools.

👉 Paper link: https://huggingface.co/papers/2501.01264

7. MapEval: A Map-Based Evaluation of Geo-Spatial Reasoning in Foundation Models

🔑 Keywords: Foundation Models, MapEval, Geo-Spatial Reasoning, Navigation, Claude-3.5-Sonnet

💡 Category: Foundations of AI

🌟 Research Objective:

– To introduce MapEval, a benchmark for assessing AI models’ geo-spatial reasoning abilities through diverse map-based queries.

🛠️ Research Methods:

– Evaluated 28 foundation models using MapEval with 700 multiple-choice questions across 180 cities, focusing on tasks involving geo-spatial contexts.

💬 Research Conclusions:

– No model excelled across all tasks, yet Claude-3.5-Sonnet showed significant competitive performance, indicating current gaps in models’ geo-spatial understanding compared to human capabilities.

👉 Paper link: https://huggingface.co/papers/2501.00316

8. A3: Android Agent Arena for Mobile GUI Agents

🔑 Keywords: Mobile GUI agents, AI agents, large language models, Android Agent Arena, in-the-wild tasks

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To introduce Android Agent Arena (A3), a novel evaluation platform for mobile GUI agents, addressing the gap in comprehensive real-world task performance assessment.

🛠️ Research Methods:

– A3 platform features a flexible action space, enabling compatibility with agents trained on various datasets, and uses an automated business-level evaluation process leveraging large language models.

💬 Research Conclusions:

– A3 provides a robust foundation for evaluating mobile GUI agents in real-world settings with a less labor-intensive evaluation process, including 21 third-party apps and 201 tasks.

👉 Paper link: https://huggingface.co/papers/2501.01149

9. Dynamic Scaling of Unit Tests for Code Reward Modeling

🔑 Keywords: Large Language Models, Code Generation, Unit Tests, Reward Signal

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance the reward signal quality in complex reasoning tasks such as code generation by scaling the number of unit tests.

🛠️ Research Methods:

– Conducted an experiment to explore the impact of scaled unit tests on reward signal quality and proposed a unit test generator, CodeRM-8B, along with a dynamic scaling mechanism based on problem difficulty.

💬 Research Conclusions:

– Scaling the number of unit tests improves the performance of large language models significantly, with an observed positive correlation between test number and reward signal quality, especially in more challenging problems.

👉 Paper link: https://huggingface.co/papers/2501.01054

10. MLLM-as-a-Judge for Image Safety without Human Labeling

🔑 Keywords: Image Content Safety, AI-Generated Content, Multimodal Large Language Models, Zero-shot Learning, Safety Rules

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper investigates the possibility of detecting unsafe images using pre-trained Multimodal Large Language Models (MLLMs) in a zero-shot setting with a predefined set of safety rules.

🛠️ Research Methods:

– The proposed method involves objectifying safety rules, assessing image-rule relevance, and using debiased token probabilities for quick judgments. It may also involve cascaded chain-of-thought processes for deeper reasoning where necessary.

💬 Research Conclusions:

– The proposed MLLM-based approach is highly effective for zero-shot image safety judgment tasks, despite challenges posed by subjective safety rules and model biases.

👉 Paper link: https://huggingface.co/papers/2501.00192

11. Unifying Specialized Visual Encoders for Video Language Models

🔑 Keywords: VideoLLMs, Multi-Encoder, MERV, Video understanding, Zero-shot Perception Test

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance video understanding by leveraging multiple frozen visual encoders to provide a comprehensive set of specialized visual knowledge.

🛠️ Research Methods:

– Developed the MERV method which creates a unified representation of a video by spatio-temporally aligning features from multiple encoders, allowing for enhanced processing in Video Large Language Models.

💬 Research Conclusions:

– MERV outperforms state-of-the-art methods with up to 3.7% better accuracy on video understanding benchmarks and improves zero-shot Perception Test accuracy by 2.2% compared to previous best, with minimal extra parameters and faster training.

👉 Paper link: https://huggingface.co/papers/2501.01426

12. LTX-Video: Realtime Video Latent Diffusion

🔑 Keywords: LTX-Video, transformer-based, Video-VAE, high compression ratio, text-to-video generation

💡 Category: Generative Models

🌟 Research Objective:

– To integrate the roles of Video-VAE and a denoising transformer in a unified model for enhanced video generation efficiency and quality.

🛠️ Research Methods:

– Developed a transformer-based latent diffusion model, LTX-Video, that performs spatiotemporal self-attention in a highly compressed latent space with a compression ratio of 1:192.

💬 Research Conclusions:

– The proposed model enables fast and high-resolution video generation, achieving 5 seconds of 24 fps video at 768×512 resolution in just 2 seconds on an Nvidia H100 GPU, setting new benchmarks in the field.

👉 Paper link: https://huggingface.co/papers/2501.00103

13. Understanding and Mitigating Bottlenecks of State Space Models through the Lens of Recency and Over-smoothing

🔑 Keywords: Structured State Space Models (SSMs), recency bias, over-smoothing, long-sequence dependencies, deep architectures

💡 Category: Machine Learning

🌟 Research Objective:

– The study investigates the limitations and potential of Structured State Space Models (SSMs) as an alternative to transformers, specifically focusing on their capacity to capture long-sequence dependencies.

🛠️ Research Methods:

– Employ empirical studies to reveal the recency bias in SSMs and conduct scaling experiments to observe the effects of deeper architectures. Propose a polarization technique for state transition matrices to address identified challenges.

💬 Research Conclusions:

– Findings demonstrate that SSMs face a fundamental dilemma between recency bias and over-smoothing. The polarization technique enhances associative recall accuracy and leverages deeper architectures, improving SSM performance.

👉 Paper link: https://huggingface.co/papers/2501.00658

14. MapQaTor: A System for Efficient Annotation of Map Query Datasets

🔑 Keywords: Large Language Models, geospatial queries, MapQaTor, map-based QA datasets, geospatial understanding

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce MapQaTor, a web application facilitating the creation of reproducible, traceable map-based QA datasets for geospatial queries.

🛠️ Research Methods:

– Utilized a plug-and-play architecture allowing integration with any maps API. Implemented features like caching API responses to enhance data reliability and centralizing data retrieval, annotation, and visualization.

💬 Research Conclusions:

– MapQaTor significantly expedites the annotation process, enhancing the development of complex geospatial resources and improving LLM-based geospatial reasoning capabilities, evidenced by a speed increase of at least 30 times compared to manual methods.

👉 Paper link: https://huggingface.co/papers/2412.21015

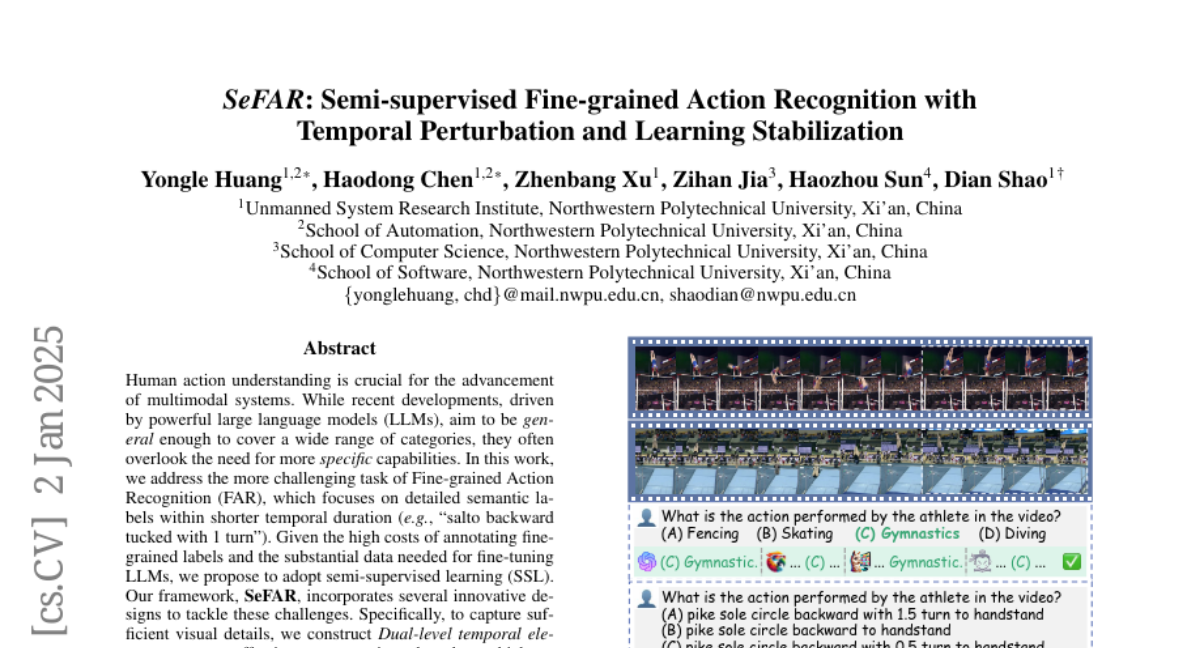

15. SeFAR: Semi-supervised Fine-grained Action Recognition with Temporal Perturbation and Learning Stabilization

🔑 Keywords: Fine-grained Action Recognition, semi-supervised learning, FineGym, FineDiving, multimodal systems

💡 Category: Computer Vision

🌟 Research Objective:

– The paper aims to address the challenging task of Fine-grained Action Recognition (FAR), focusing on detailed semantic labels within shorter temporal durations.

🛠️ Research Methods:

– Introduces a framework called SeFAR using semi-supervised learning (SSL) to tackle FAR, including Dual-level temporal elements for effective representation and an innovative augmentation strategy.

💬 Research Conclusions:

– SeFAR achieves state-of-the-art performance on FAR datasets and outperforms other semi-supervised methods on classical coarse-grained datasets, enhancing the understanding of fine-grained and domain-specific semantics in multimodal models.

👉 Paper link: https://huggingface.co/papers/2501.01245

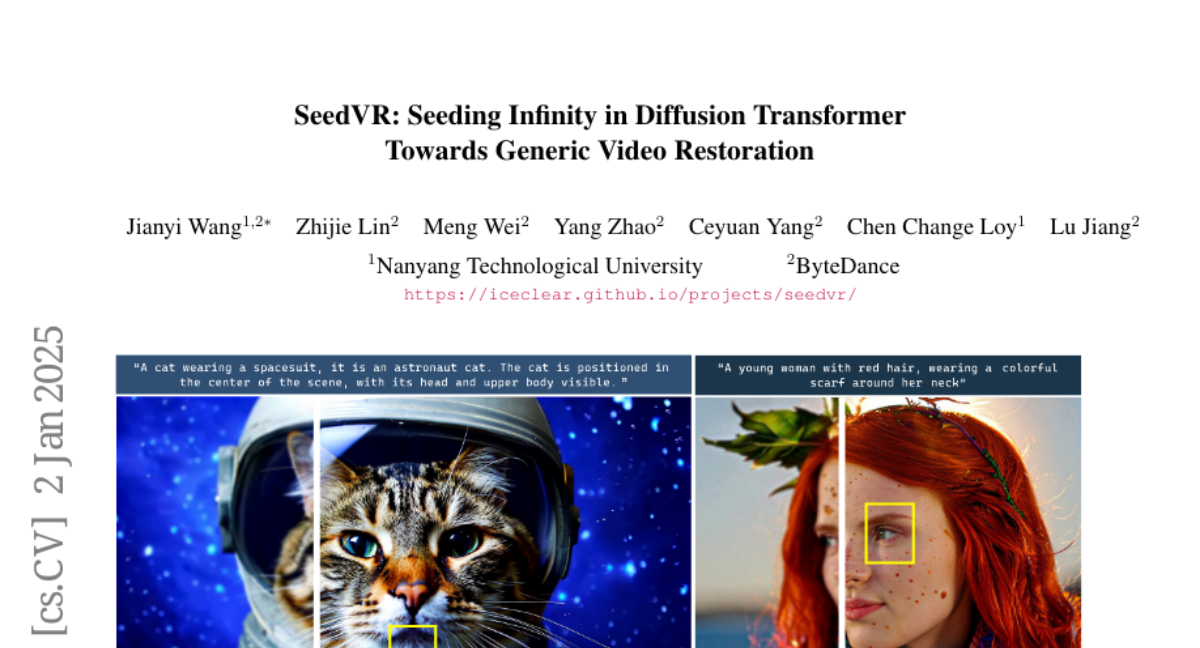

16. SeedVR: Seeding Infinity in Diffusion Transformer Towards Generic Video Restoration

🔑 Keywords: Video Restoration, Diffusion Transformer, Real-World Videos, Temporally Consistent, Shifted Window Attention

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to tackle the challenges of effectively restoring real-world videos with arbitrary length and resolution while maintaining temporal consistency.

🛠️ Research Methods:

– Implementation of SeedVR, a diffusion transformer utilizing shifted window attention and supporting variable-sized windows for effective restoration.

– Incorporation of additional techniques such as causal video autoencoder, mixed image and video training, and progressive training to enhance restoration performance.

💬 Research Conclusions:

– SeedVR demonstrates superior performance over existing methods in handling both synthetic and real-world video benchmarks, overcoming limitations in generation capability and sampling efficiency.

👉 Paper link: https://huggingface.co/papers/2501.01320

17. Rethinking Addressing in Language Models via Contexualized Equivariant Positional Encoding

🔑 Keywords: Transformers, Positional Encoding, Context Awareness, Equivariance

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper introduces conTextualized equivariant Position Embedding (TAPE) to enhance positional embeddings by integrating sequence content across layers, addressing limitations of traditional positional encoding techniques.

🛠️ Research Methods:

– TAPE employs dynamic, context-aware positional encodings that ensure stability through permutation and orthogonal equivariance, easily integrating into pre-trained transformers for efficient fine-tuning.

💬 Research Conclusions:

– TAPE demonstrates superior performance in tasks like language modeling, arithmetic reasoning, and long-context retrieval, surpassing existing positional embedding approaches.

👉 Paper link: https://huggingface.co/papers/2501.00712

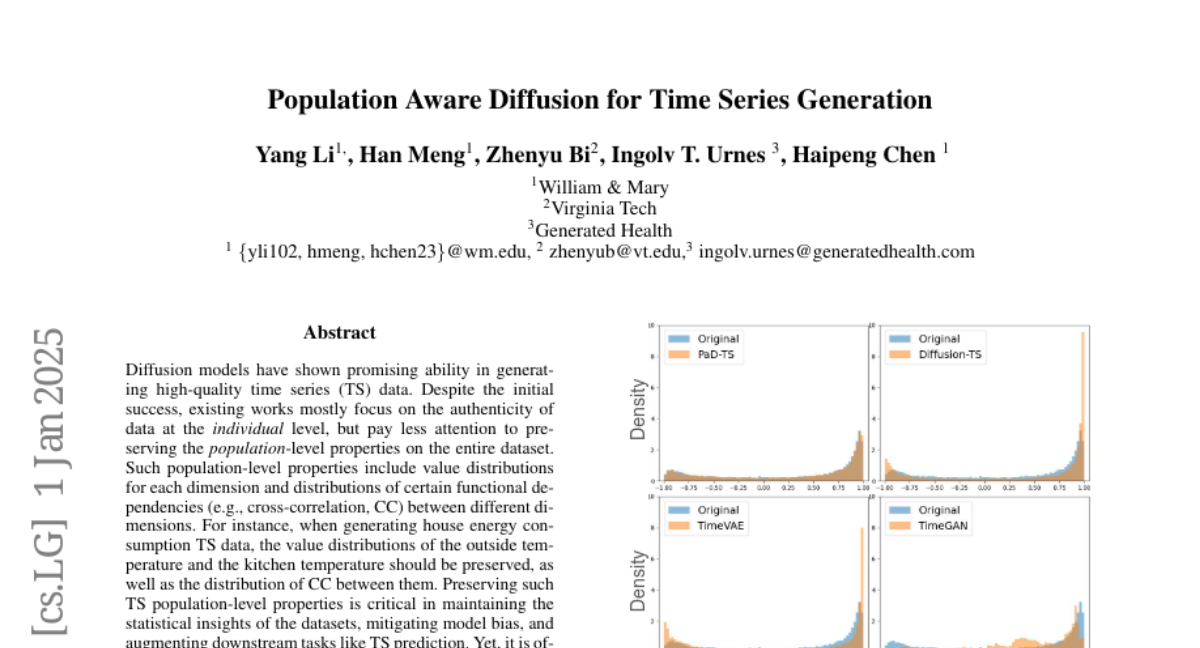

18. Population Aware Diffusion for Time Series Generation

🔑 Keywords: Diffusion models, Time series generation, Population-level properties, Cross-correlation, Data distribution shift

💡 Category: Generative Models

🌟 Research Objective:

– Introduce a new TS generation model, PaD-TS, to better preserve population-level properties in time series data.

🛠️ Research Methods:

– Develop a novel training method that explicitly incorporates TS population-level property preservation.

– Design a dual-channel encoder model architecture to capture the TS data structure more effectively.

💬 Research Conclusions:

– PaD-TS significantly reduces distribution shifts, improving the average CC distribution shift score by 5.9x while maintaining high individual-level authenticity.

👉 Paper link: https://huggingface.co/papers/2501.00910

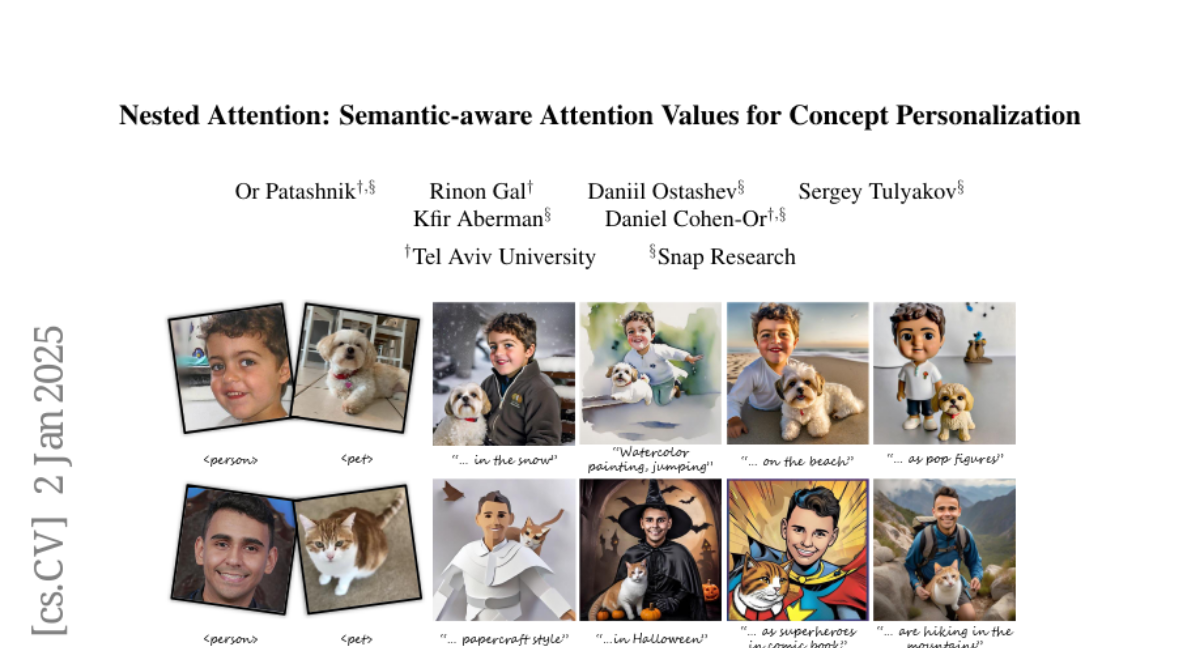

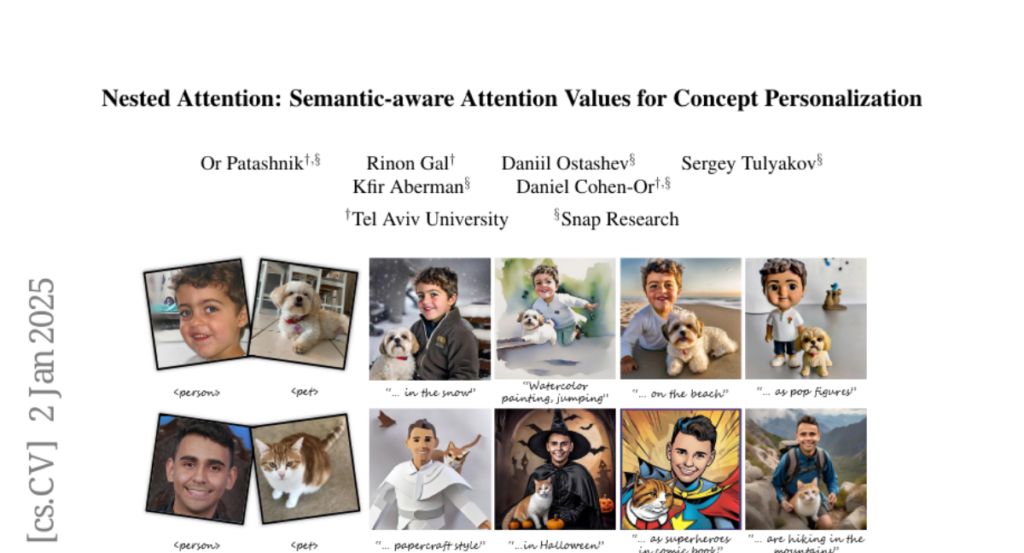

19. Nested Attention: Semantic-aware Attention Values for Concept Personalization

🔑 Keywords: Text-to-Image Models, Nested Attention, Identity Preservation, Cross-Attention Layers

💡 Category: Generative Models

🌟 Research Objective:

– Explore a new mechanism, Nested Attention, to improve expressiveness and balance between identity preservation and input text alignment in text-to-image generation.

🛠️ Research Methods:

– Introducing query-dependent subject values through nested attention layers in an encoder-based personalization method.

💬 Research Conclusions:

– The approach provides high identity preservation and adheres to text prompts, showcasing versatility across various domains while enabling the combination of multiple subjects in a single image.

👉 Paper link: https://huggingface.co/papers/2501.01407