AI Native Daily Paper Digest – 20250108

1. REINFORCE++: A Simple and Efficient Approach for Aligning Large Language Models

🔑 Keywords: Reinforcement Learning, Human Feedback, REINFORCE++, Proximal Policy Optimization, Computational Efficiency

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper introduces REINFORCE++, an enhanced version of the classical REINFORCE algorithm, aimed at optimizing training stability, simplicity, and computational efficiency.

🛠️ Research Methods:

– REINFORCE++ integrates key optimization techniques from Proximal Policy Optimization (PPO) while removing the necessity for a critic network, achieving its goals through empirical evaluation.

💬 Research Conclusions:

– REINFORCE++ demonstrates superior training stability compared to Group Relative Policy Optimization (GRPO) and achieves greater computational efficiency than PPO, maintaining comparable performance levels.

👉 Paper link: https://huggingface.co/papers/2501.03262

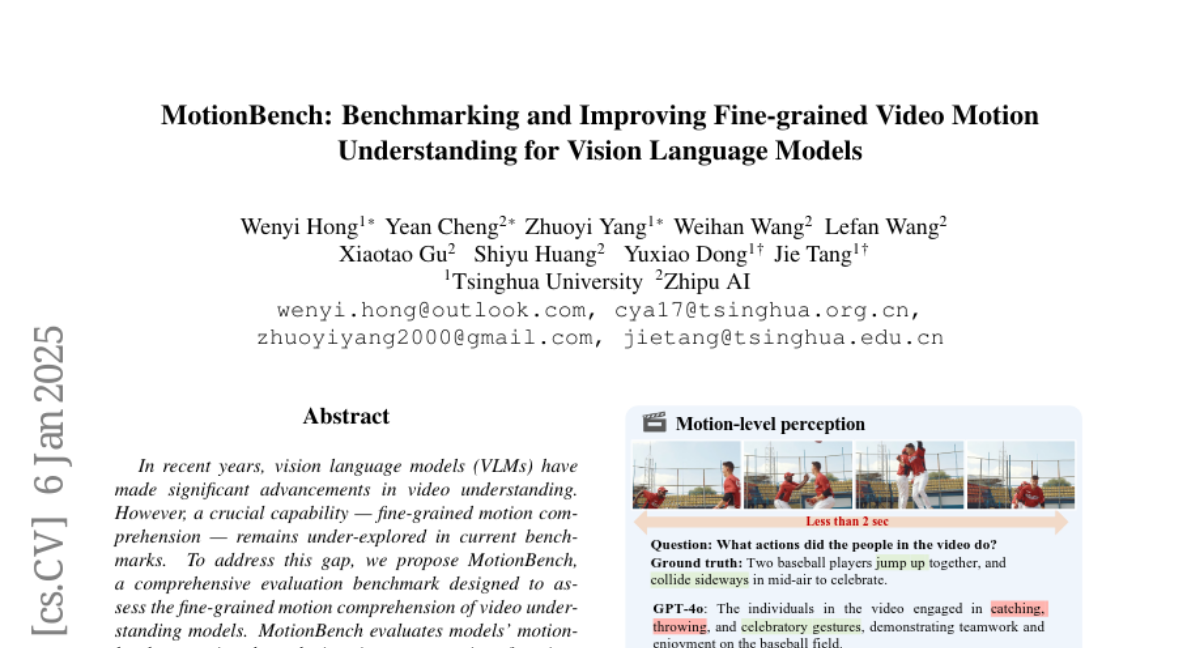

2. MotionBench: Benchmarking and Improving Fine-grained Video Motion Understanding for Vision Language Models

🔑 Keywords: Vision Language Models (VLMs), Video Understanding, Motion Comprehension, MotionBench, Through-Encoder Fusion

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to address the lack of fine-grained motion comprehension in video understanding models by proposing MotionBench, a new benchmark for assessing these capabilities.

🛠️ Research Methods:

– MotionBench evaluates models using six categories of motion-related questions and includes data from diverse real-world video sources. The study proposes the Through-Encoder Fusion method to improve fine-grained motion perception.

💬 Research Conclusions:

– Existing VLMs have limited capabilities in understanding fine-grained motion, but enhancements using higher frame rate inputs and the Through-Encoder Fusion method show potential for improvement, emphasizing the need for better video understanding models.

👉 Paper link: https://huggingface.co/papers/2501.02955

3. Cosmos World Foundation Model Platform for Physical AI

🔑 Keywords: Physical AI, digital twin, world model, open-source, Cosmos World Foundation Model

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To present the Cosmos World Foundation Model Platform for building customized world models for Physical AI applications.

🛠️ Research Methods:

– Utilizes a general-purpose world foundation model that can be fine-tuned for specific downstream applications and includes a video curation pipeline, pre-trained models, and video tokenizers.

💬 Research Conclusions:

– The platform is open-source with permissive licenses, providing tools to aid Physical AI developers in addressing critical societal problems.

👉 Paper link: https://huggingface.co/papers/2501.03575

4. LLaVA-Mini: Efficient Image and Video Large Multimodal Models with One Vision Token

🔑 Keywords: Large Multimodal Models, Vision Tokens, Modality Pre-fusion, Efficiency, LLaVA-Mini

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper introduces LLaVA-Mini, an efficient large multimodal model designed to minimize the number of vision tokens while preserving visual information.

🛠️ Research Methods:

– The study involves analyzing the understanding of vision tokens in large multimodal models and introducing modality pre-fusion to compress vision tokens significantly by fusing visual information into text tokens early in the process.

💬 Research Conclusions:

– LLaVA-Mini utilizes a single vision token to outperform previous models like LLaVA-v1.5 across 18 benchmarks, achieves a 77% reduction in FLOPs, and delivers responses within 40 milliseconds, efficiently processing over 10,000 frames of video with 24GB GPU memory.

👉 Paper link: https://huggingface.co/papers/2501.03895

5. Sa2VA: Marrying SAM2 with LLaVA for Dense Grounded Understanding of Images and Videos

🔑 Keywords: Sa2VA, multi-modal, LLM, segmentation, SAM-2

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research introduces Sa2VA, aimed at unifying the comprehension of both images and videos for diverse tasks such as referring segmentation and conversation using minimal one-shot instruction tuning.

🛠️ Research Methods:

– Combines SAM-2, a foundational video segmentation model, and LLaVA, a vision-language model, into a unified token space for text, image, and video, enhancing grounded multi-modal understanding.

– Ref-SAV dataset, containing over 72k object expressions, is developed to increase model effectiveness in complex scenes.

💬 Research Conclusions:

– Sa2VA demonstrates state-of-the-art performance across various tasks, notably in referring video object segmentation, indicating strong potential for complex real-world applications.

👉 Paper link: https://huggingface.co/papers/2501.04001

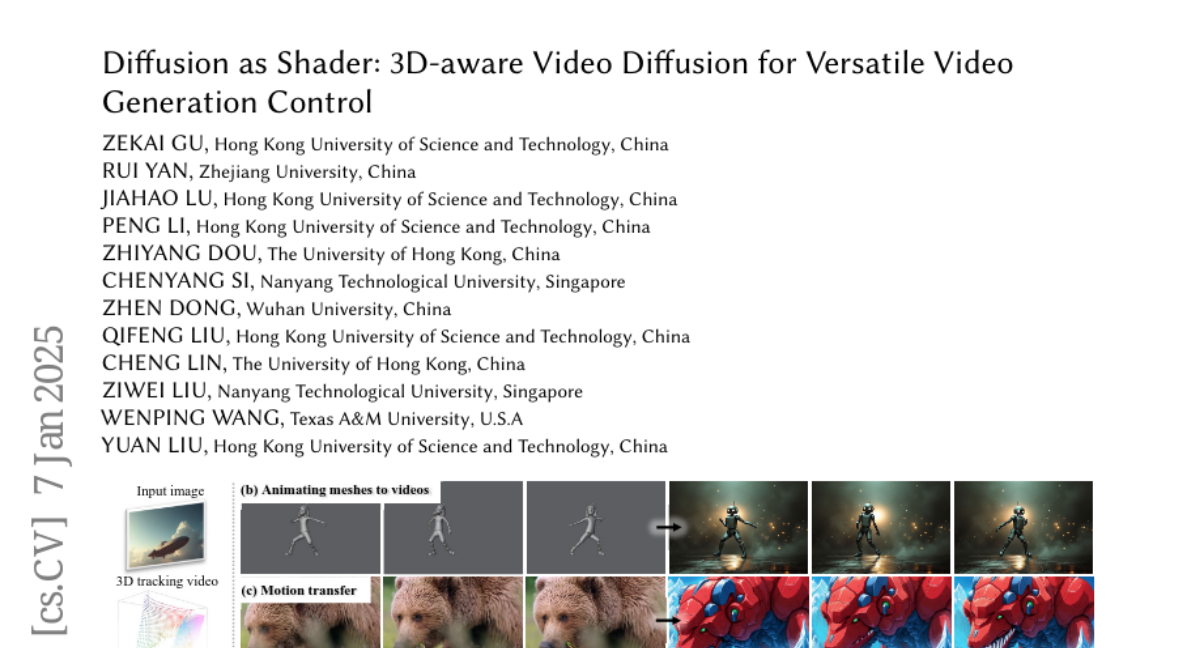

6. Diffusion as Shader: 3D-aware Video Diffusion for Versatile Video Generation Control

🔑 Keywords: Diffusion as Shader, 3D control signals, video generation, temporal consistency

💡 Category: Generative Models

🌟 Research Objective:

– To develop a novel approach, Diffusion as Shader (DaS), that supports multiple video control tasks within a unified architecture.

🛠️ Research Methods:

– Leveraging 3D tracking videos as control inputs to enable a wide range of video control capabilities, ensuring the process is inherently 3D-aware.

💬 Research Conclusions:

– DaS demonstrates strong control capabilities across diverse tasks, achieving significant improvements in temporal consistency and offering versatile video control such as mesh-to-video generation and motion transfer.

👉 Paper link: https://huggingface.co/papers/2501.03847

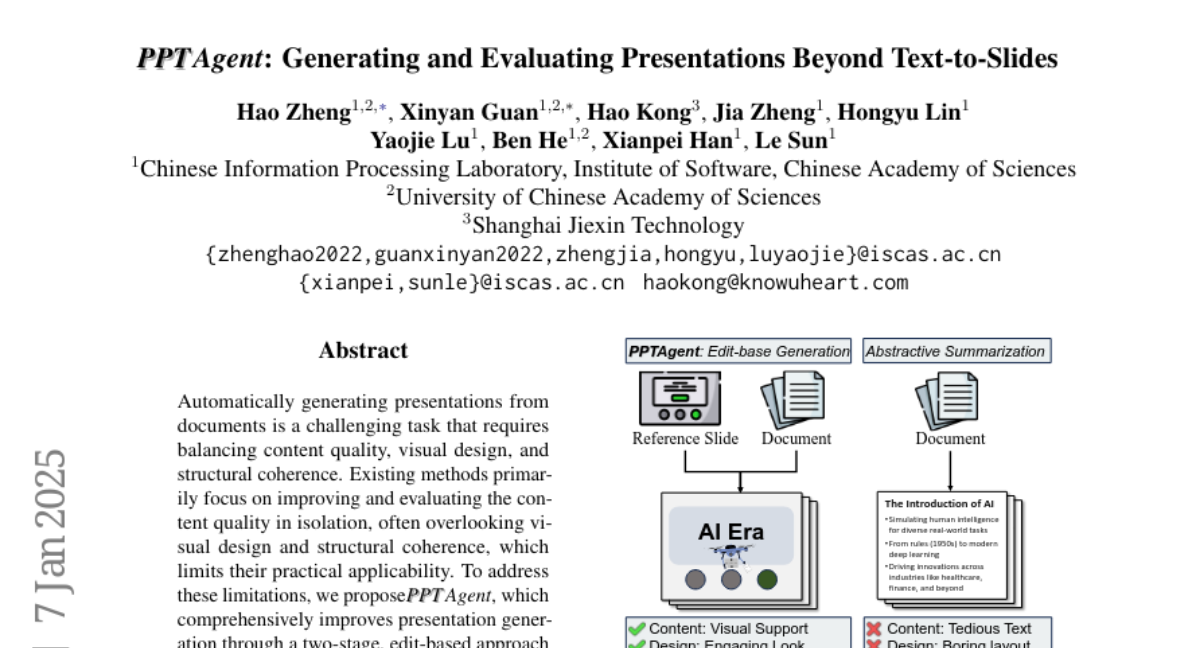

7. PPTAgent: Generating and Evaluating Presentations Beyond Text-to-Slides

🔑 Keywords: PPTAgent, presentation generation, structural coherence, PPTEval

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Propose a novel method, PPTAgent, to enhance presentation generation by focusing on not just content quality but also visual design and structural coherence.

🛠️ Research Methods:

– Introduce a two-stage, edit-based approach inspired by human workflows and the development of PPTEval, an evaluation framework assessing presentations on Content, Design, and Coherence.

💬 Research Conclusions:

– PPTAgent outperforms traditional automatic presentation generation methods in multiple dimensions, improving practical applicability. The code and data are accessible at the provided GitHub link.

👉 Paper link: https://huggingface.co/papers/2501.03936

8. MoDec-GS: Global-to-Local Motion Decomposition and Temporal Interval Adjustment for Compact Dynamic 3D Gaussian Splatting

🔑 Keywords: 3D Gaussian Splatting, Neural Rendering, Dynamic Scenes, Memory-Efficient, Motion Decomposition

💡 Category: Computer Vision

🌟 Research Objective:

– Address storage demands and complexity in representing real-world dynamic motions in neural rendering with a new framework called MoDecGS.

🛠️ Research Methods:

– Development of a memory-efficient Gaussian splatting framework utilizing GlobaltoLocal Motion Decomposition (GLMD), Global Canonical Scaffolds, Local Canonical Scaffolds, Global Anchor Deformation, and Local Gaussian Deformation.

– Introduction of Temporal Interval Adjustment (TIA) to optimize temporal segment assignments during training.

💬 Research Conclusions:

– MoDecGS achieves a 70% reduction in model size compared to state-of-the-art methods for dynamic 3D Gaussian rendering from real-world videos while maintaining or enhancing the rendering quality.

👉 Paper link: https://huggingface.co/papers/2501.03714

9. Magic Mirror: ID-Preserved Video Generation in Video Diffusion Transformers

🔑 Keywords: identity-preserved videos, cinematic-level quality, video diffusion models, dynamic motion, Video Diffusion Transformers

💡 Category: Generative Models

🌟 Research Objective:

– To generate identity-preserved videos with high-quality cinematic presentation and natural dynamic motion.

🛠️ Research Methods:

– The method incorporates a dual-branch facial feature extractor, a lightweight cross-modal adapter, and a two-stage training strategy.

💬 Research Conclusions:

– Magic Mirror achieves a balance between identity consistency and natural motion, outperforming existing methods with minimal additional parameters.

👉 Paper link: https://huggingface.co/papers/2501.03931

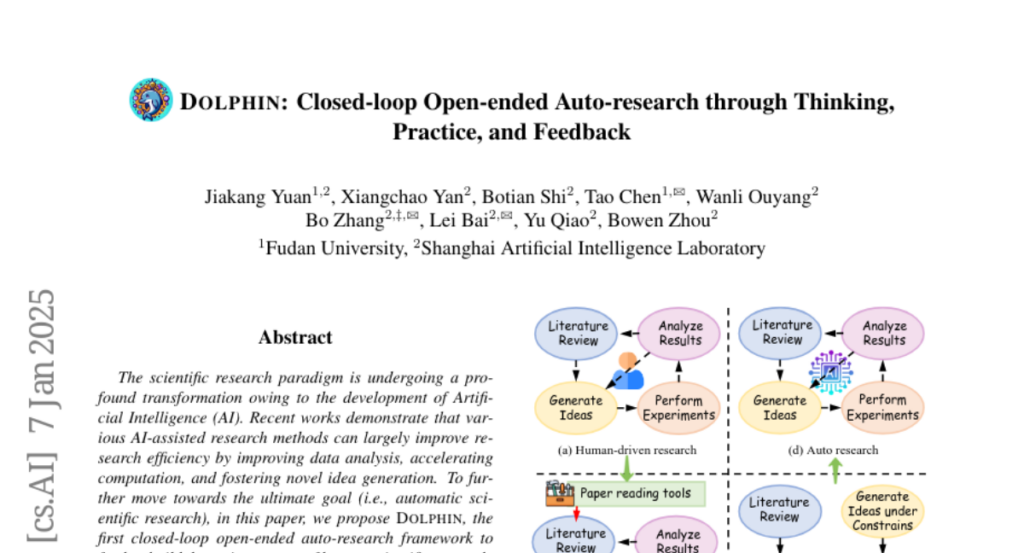

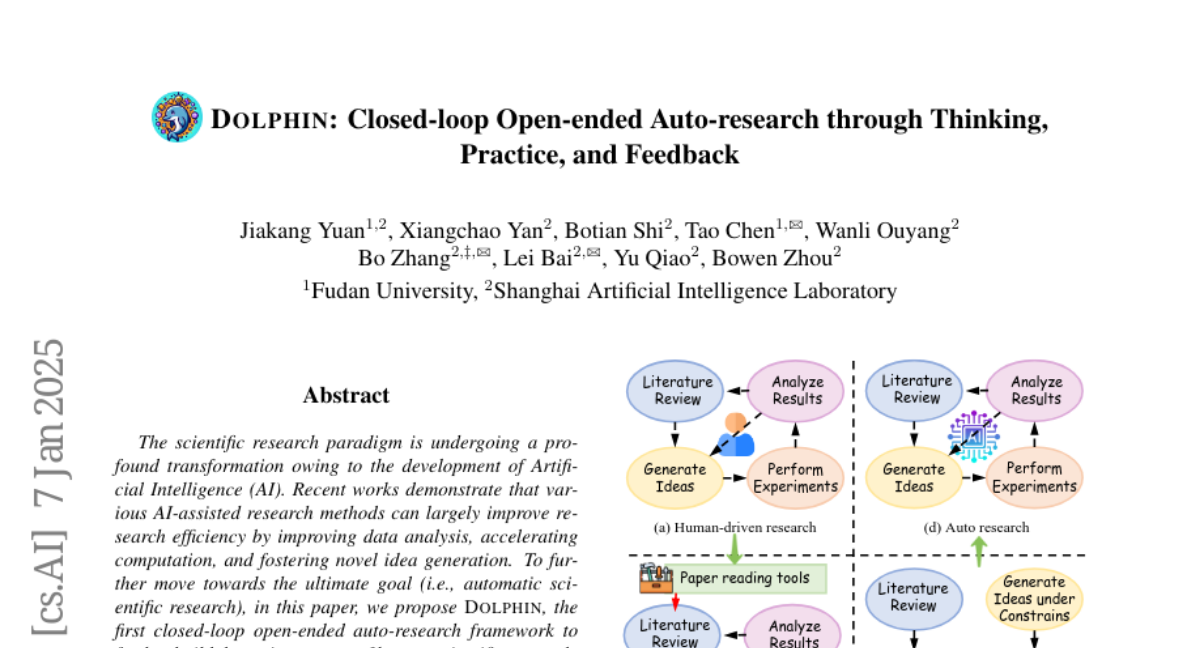

10. Dolphin: Closed-loop Open-ended Auto-research through Thinking, Practice, and Feedback

🔑 Keywords: AI-assisted research, Dolphin, automatic scientific research, novel idea generation

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper aims to develop Dolphin, a framework to automate the scientific research process, advancing towards automatic scientific research.

🛠️ Research Methods:

– Dolphin generates research ideas by ranking relevant papers and uses an exception-traceback-guided approach for automatic code generation and debugging.

– It conducts experiments and analyzes results to inform subsequent idea generation, creating a closed-loop research cycle.

💬 Research Conclusions:

– Dolphin effectively generates continuous novel ideas and conducts experiments, achieving outcomes comparable to state-of-the-art methods in tasks like 2D image classification and 3D point classification.

👉 Paper link: https://huggingface.co/papers/2501.03916

11. Segmenting Text and Learning Their Rewards for Improved RLHF in Language Model

🔑 Keywords: Reinforcement Learning, Human Feedback, Language Models, Reward Model, Token-Level RLHF

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper aims to improve language model alignment with human preferences by developing a segment-level reward model that addresses the limitations of both bandit formulation and dense token-level RLHF.

🛠️ Research Methods:

– It introduces a method for training language models using dynamic text segmentation for segment-level rewards, and it generalizes classical scalar bandit reward normalization techniques into location-aware functions for effective model training.

💬 Research Conclusions:

– The proposed approach performs competitively on popular RLHF benchmarks such as AlpacaEval 2.0, Arena-Hard, and MT-Bench, and ablation studies further validate the method’s effectiveness.

👉 Paper link: https://huggingface.co/papers/2501.02790

12. MagicFace: High-Fidelity Facial Expression Editing with Action-Unit Control

🔑 Keywords: Facial Expression Editing, Action Unit, MagicFace, Diffusion Model, ID Encoder

💡 Category: Computer Vision

🌟 Research Objective:

– To achieve fine-grained, continuous, and interpretable facial expression editing while preserving the identity, pose, background, and detailed facial attributes.

🛠️ Research Methods:

– Use a diffusion model conditioned on AU variations and an ID encoder, leveraging pretrained Stable-Diffusion models and incorporating an efficient Attribute Controller for background and pose consistency.

💬 Research Conclusions:

– The proposed MagicFace model provides high-fidelity expression editing with superior results, allowing animation of arbitrary identities through various AU combinations. The code is available on GitHub.

👉 Paper link: https://huggingface.co/papers/2501.02260

13. Graph-Aware Isomorphic Attention for Adaptive Dynamics in Transformers

🔑 Keywords: Transformer modification, Graph Neural Networks, Sparse GIN-Attention, Generalization

💡 Category: Natural Language Processing

🌟 Research Objective:

– To integrate graph-aware relational reasoning into the Transformer architectures by merging concepts from graph neural networks and language modeling.

🛠️ Research Methods:

– Reformulating the Transformer’s attention mechanism as a graph operation using Graph-Aware Isomorphic Attention, leveraging Graph Isomorphism Networks (GIN) and Principal Neighborhood Aggregation (PNA).

– Introducing Sparse GIN-Attention to improve adaptability with minimal computational overhead.

💬 Research Conclusions:

– The approach reduces the generalization gap and improves learning performance.

– Demonstrates better training dynamics and generalization compared to methods like low-rank adaption (LoRA).

– Offers new insights into hierarchical GIN models for relational reasoning with potential applications across diverse fields.

👉 Paper link: https://huggingface.co/papers/2501.02393

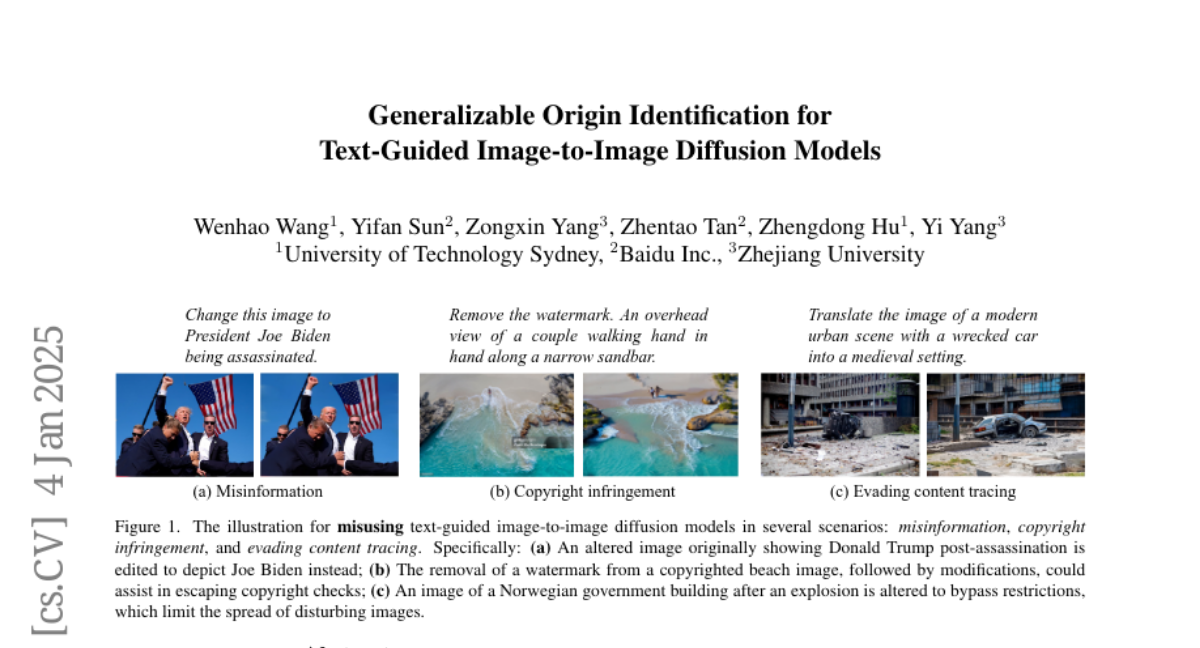

14. Generalizable Origin Identification for Text-Guided Image-to-Image Diffusion Models

🔑 Keywords: Image-to-Image Diffusion, Origin IDentification, Misuse Prevention, Linear Transformation, Variational Autoencoder

💡 Category: Generative Models

🌟 Research Objective:

– Introduce the task of origin IDentification for text-guided Image-to-image Diffusion models (ID^2) to retrieve the original image of a given translated query.

🛠️ Research Methods:

– Develop a dataset called OriPID and prove a method using a linear transformation to minimize the distance between pre-trained Variational Autoencoder (VAE) embeddings, emphasizing model generalizability.

💬 Research Conclusions:

– The proposed method achieves significant generalization performance, outperforming similarity-based methods by 31.6% mean Average Precision (mAP), even when applied across different diffusion models.

👉 Paper link: https://huggingface.co/papers/2501.02376