AI Native Daily Paper Digest – 20250110

1. The GAN is dead; long live the GAN! A Modern GAN Baseline

🔑 Keywords: GAN Loss, Regularization, AI Native, Modernization, R3GAN

💡 Category: Generative Models

🌟 Research Objective:

– To challenge the notion that GANs are inherently difficult to train and to develop a modern GAN baseline using a principled approach.

🛠️ Research Methods:

– Introduction of a regularized relativistic GAN loss with mathematical analysis ensuring local convergence guarantees, replacing empirical tricks and outdated architectures with modern ones.

💬 Research Conclusions:

– The new minimalist baseline, R3GAN, surpasses the capabilities of StyleGAN2 across various datasets and holds its ground against state-of-the-art GANs and diffusion models.

👉 Paper link: https://huggingface.co/papers/2501.05441

2. An Empirical Study of Autoregressive Pre-training from Videos

🔑 Keywords: Autoregressive Video Models, Visual Tokens, Pre-training

💡 Category: Computer Vision

🌟 Research Objective:

– To empirically study autoregressive pre-training from videos using models called Toto.

🛠️ Research Methods:

– Construct a series of autoregressive video models, treat videos as sequences of visual tokens, and train transformer models to predict future tokens. Pre-trained on over 1 trillion visual tokens.

💬 Research Conclusions:

– Autoregressive pre-training yields competitive performance across tasks like image recognition, video classification, and object tracking, with scaling curves similar to language models.

👉 Paper link: https://huggingface.co/papers/2501.05453

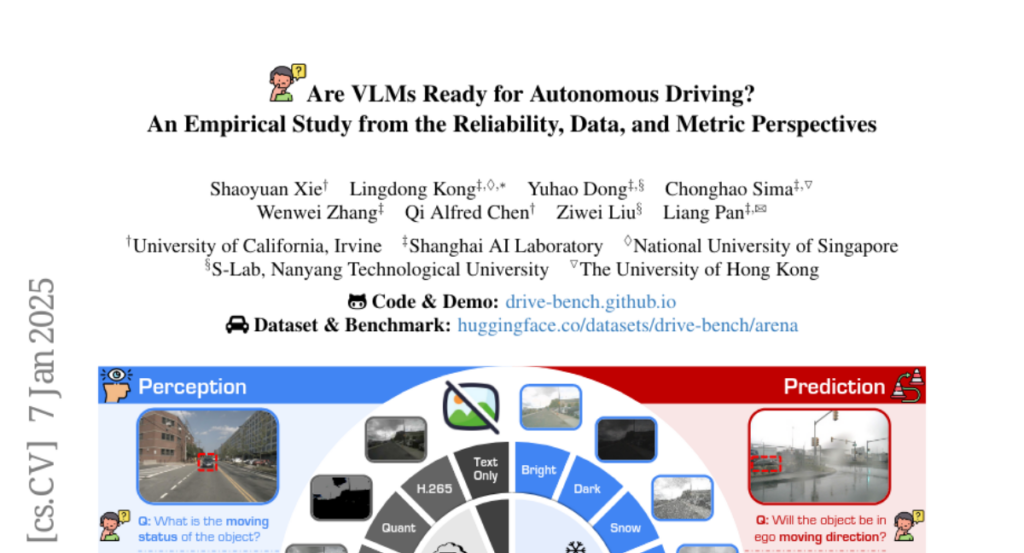

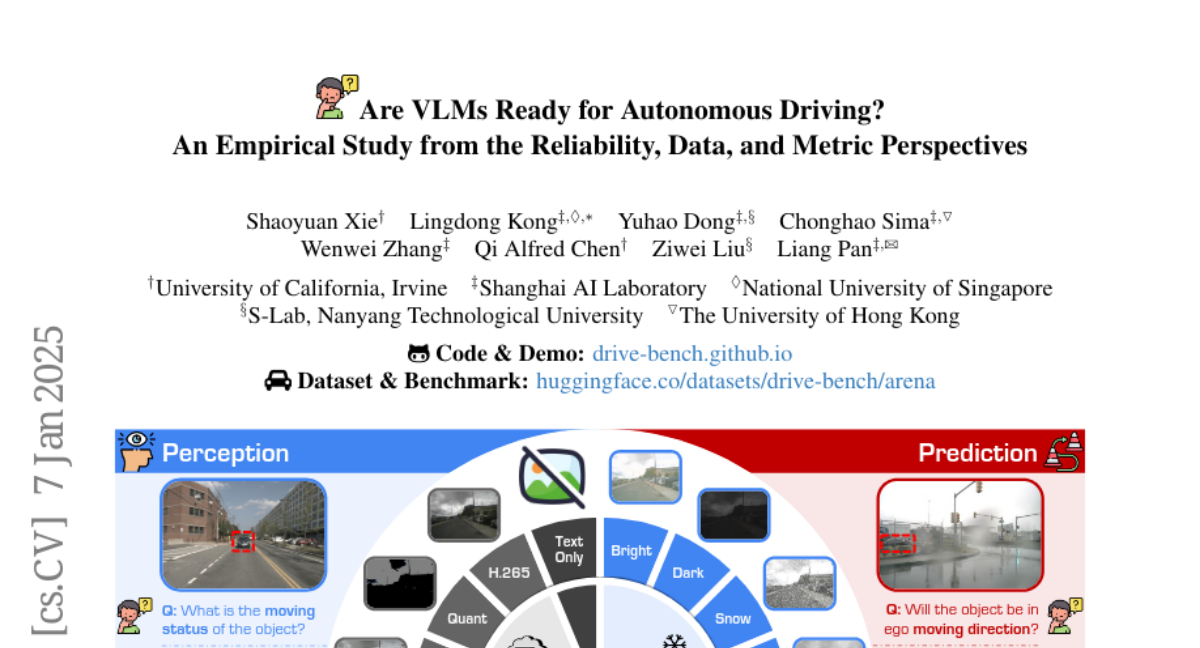

3. Are VLMs Ready for Autonomous Driving? An Empirical Study from the Reliability, Data, and Metric Perspectives

🔑 Keywords: Vision-Language Models, autonomous driving, multi-modal reasoning, evaluation metrics

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduction of DriveBench to evaluate the reliability of Vision-Language Models (VLMs) in autonomous driving, focusing on real-world applications and interpretability.

🛠️ Research Methods:

– Developed a benchmark dataset, DriveBench, that includes 17 settings and 19,200 frames along with question-answer pairs to assess VLM performance across various conditions.

💬 Research Conclusions:

– Findings suggest VLMs often provide responses from general knowledge rather than true visual grounding, posing risks in safety-critical scenarios. Proposed improved evaluation metrics to enhance VLM reliability and leverage VLMs’ awareness of input corruptions.

👉 Paper link: https://huggingface.co/papers/2501.04003

4. Enhancing Human-Like Responses in Large Language Models

🔑 Keywords: Large Language Models, Natural Language Understanding, Conversational Coherence, Emotional Intelligence, Ethical Implications

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper explores advancements in making large language models more human-like by enhancing natural language understanding, conversational coherence, and emotional intelligence.

🛠️ Research Methods:

– Techniques include fine-tuning with diverse datasets, incorporating psychological principles, and designing models to mimic human reasoning patterns.

💬 Research Conclusions:

– Enhancements improve user interactions and open new possibilities for AI applications across different domains; future work will address ethical implications and potential biases.

👉 Paper link: https://huggingface.co/papers/2501.05032

5. Entropy-Guided Attention for Private LLMs

🔑 Keywords: Private Inference, Transformer Architectures, Nonlinearities, Shannon’s Entropy, Entropic Overload

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to establish an information-theoretic framework to optimize transformer architectures for private inference by examining the role of nonlinearities.

🛠️ Research Methods:

– The work leverages Shannon’s entropy to analyze nonlinearities in decoder-only language models, identifying two failure modes, and proposes an entropy-guided attention mechanism along with an entropy regularization technique.

💬 Research Conclusions:

– Nonlinearities are critical for training stability and maintaining attention head diversity, with proposed solutions to mitigate entropy collapse and overload to enhance efficient private inference architectures.

👉 Paper link: https://huggingface.co/papers/2501.03489

6. On Computational Limits and Provably Efficient Criteria of Visual Autoregressive Models: A Fine-Grained Complexity Analysis

🔑 Keywords: Visual Autoregressive Models, Image Generation, Computational Efficiency, Sub-quadratic Time Complexity, Strong Exponential Time Hypothesis

💡 Category: Generative Models

🌟 Research Objective:

– Analyze the computational limits and efficiency of Visual Autoregressive Models with a focus on achieving sub-quadratic time complexity.

🛠️ Research Methods:

– Evaluated the conditions under which VAR computations can be performed efficiently using a fine-grained complexity approach and low-rank approximations.

💬 Research Conclusions:

– Established a threshold for the input matrix norm, demonstrating the theoretical impossibility of achieving sub-quartic time complexity above this threshold under the Strong Exponential Time Hypothesis. Presented efficient constructions aligning with these criteria to enhance scalable image generation.

👉 Paper link: https://huggingface.co/papers/2501.04377

7. Centurio: On Drivers of Multilingual Ability of Large Vision-Language Model

🔑 Keywords: Large Vision-Language Models, Multilingual, Text-in-Image Understanding, Multimodal Learning, Instruction-Tuning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Investigate training strategies for massively multilingual LVLMs to improve performance for multiple languages while maintaining English proficiency.

🛠️ Research Methods:

– Conducted multi-stage experiments on 13 vision-language tasks across 43 languages to determine optimal training language counts and data distribution, including non-English OCR data for pre-training and instruction-tuning.

💬 Research Conclusions:

– Discovered that up to 100 training languages can be included with 25-50% non-English data without degrading English performance. Non-English OCR data is crucial for enhancing multilingual text-in-image understanding. Developed Centurio LVLM, achieving state-of-the-art results across 14 tasks in 56 languages.

👉 Paper link: https://huggingface.co/papers/2501.05122

8. SWE-Fixer: Training Open-Source LLMs for Effective and Efficient GitHub Issue Resolution

🔑 Keywords: Large Language Models (LLMs), SWE-Fixer, GitHub, open-source, software engineering

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce SWE-Fixer, an open-source LLM aimed at resolving real-world GitHub issues effectively and efficiently.

🛠️ Research Methods:

– Utilization of a two-module system comprising a code file retrieval module using BM25 and a lightweight model, and a code editing module for generating patches.

💬 Research Conclusions:

– SWE-Fixer achieves state-of-the-art performance on public benchmarks and will be released along with its dataset and code for public use.

👉 Paper link: https://huggingface.co/papers/2501.05040

9. Building Foundations for Natural Language Processing of Historical Turkish: Resources and Models

🔑 Keywords: Historical Turkish, Named Entity Recognition, Transformer-based Models, Universal Dependencies

💡 Category: Natural Language Processing

🌟 Research Objective:

– Develop foundational resources and models for NLP in the context of historical Turkish, an underexplored area in computational linguistics.

🛠️ Research Methods:

– Introduced the HisTR NER dataset and OTA-BOUN treebank, and created transformer-based models for tasks such as named entity recognition, dependency parsing, and part-of-speech tagging.

💬 Research Conclusions:

– Achieved significant improvements in the computational analysis of historical Turkish texts, with promising results demonstrating the effectiveness of the proposed models, while also identifying challenges related to domain adaptation and historical language variations.

👉 Paper link: https://huggingface.co/papers/2501.04828