AI Native Daily Paper Digest – 20250123

1. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

🔑 Keywords: DeepSeek-R1-Zero, DeepSeek-R1, Reinforcement Learning, Reasoning Models, Open-source

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduction of first-generation reasoning models, focusing on enhancing reasoning capabilities via reinforcement learning techniques.

🛠️ Research Methods:

– Utilized large-scale reinforcement learning for DeepSeek-R1-Zero without supervised fine-tuning; introduced multi-stage training and cold-start data in DeepSeek-R1 to improve readability and reasoning performance.

💬 Research Conclusions:

– DeepSeek-R1 demonstrates reasoning performance comparable to OpenAI-o1-1217; both models and six dense models are open-sourced for community usage.

👉 Paper link: https://huggingface.co/papers/2501.12948

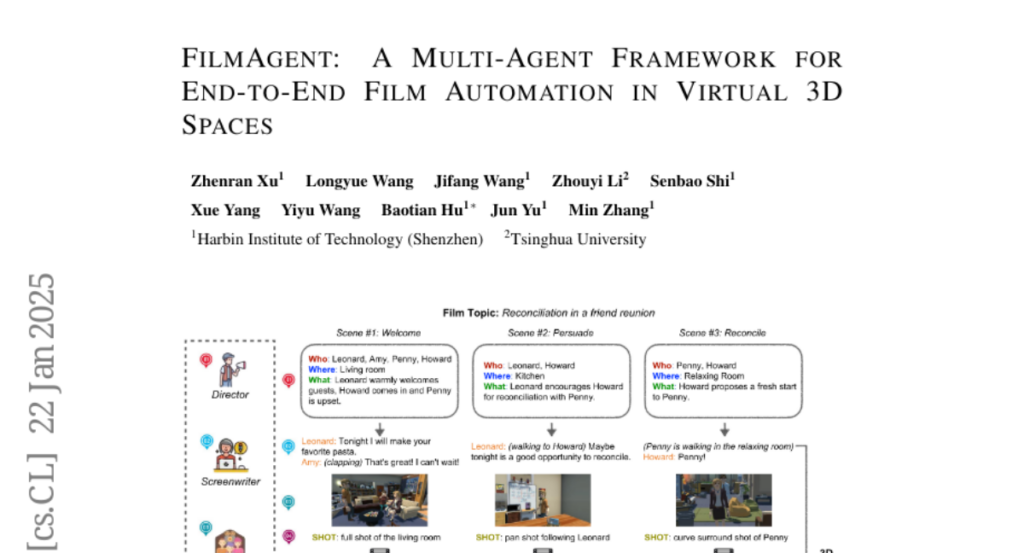

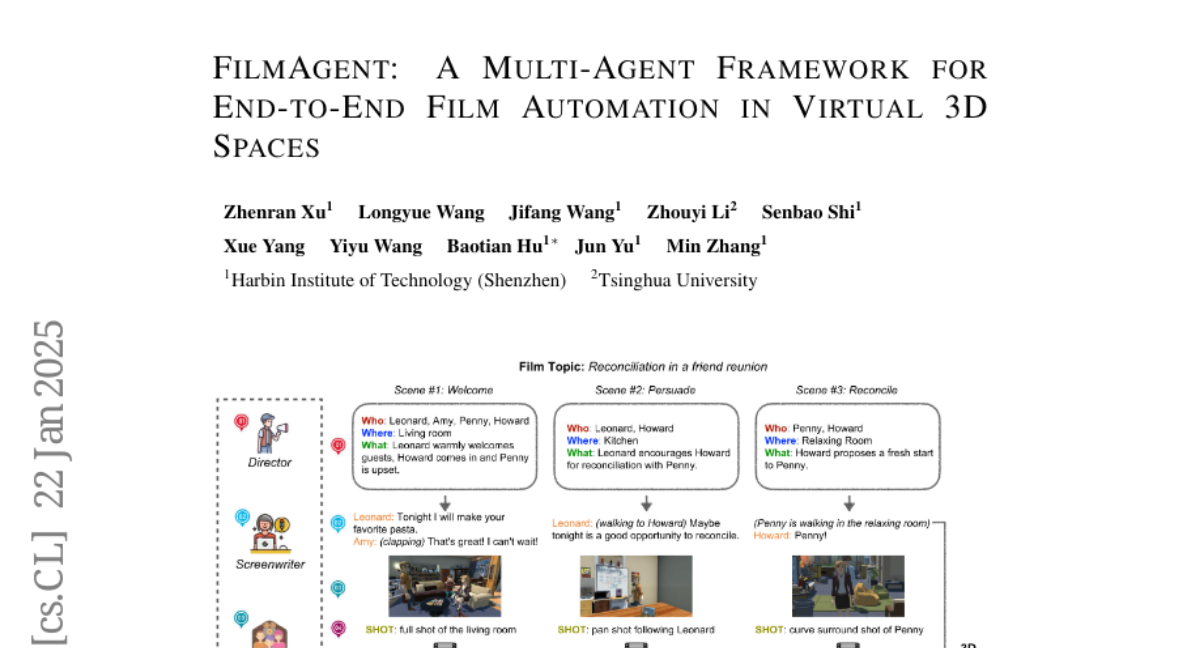

2. FilmAgent: A Multi-Agent Framework for End-to-End Film Automation in Virtual 3D Spaces

🔑 Keywords: FilmAgent, language agent-based societies, multi-agent collaboration, filmmaking, GPT-4o

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce FilmAgent, a novel framework for automating the film production process using language agent-based societies in virtual environments.

🛠️ Research Methods:

– Simulation of crew roles in virtual film production, including iterative feedback and revisions among agents to improve script quality and reduce errors.

💬 Research Conclusions:

– FilmAgent outperforms baseline models in film production tasks, demonstrating the effectiveness of multi-agent collaboration, even when using a less advanced model like GPT-4o.

👉 Paper link: https://huggingface.co/papers/2501.12909

3. VideoLLaMA 3: Frontier Multimodal Foundation Models for Image and Video Understanding

🔑 Keywords: VideoLLaMA3, vision-centric, image-text datasets, video understanding, foundation model

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to propose VideoLLaMA3, an advanced multimodal foundation model focused on both image and video understanding through a vision-centric approach.

🛠️ Research Methods:

– The research employs a four-stage training process: vision-centric alignment, vision-language pretraining, multi-task fine-tuning, and video-centric fine-tuning. These stages involve adapting pretrained vision encoders and constructing large-scale and high-quality image-text datasets.

💬 Research Conclusions:

– VideoLLaMA3 exhibits strong performance on image and video understanding benchmarks due to its vision-centric design, which enables more precise and compact video representation and captures fine-grained image details.

👉 Paper link: https://huggingface.co/papers/2501.13106

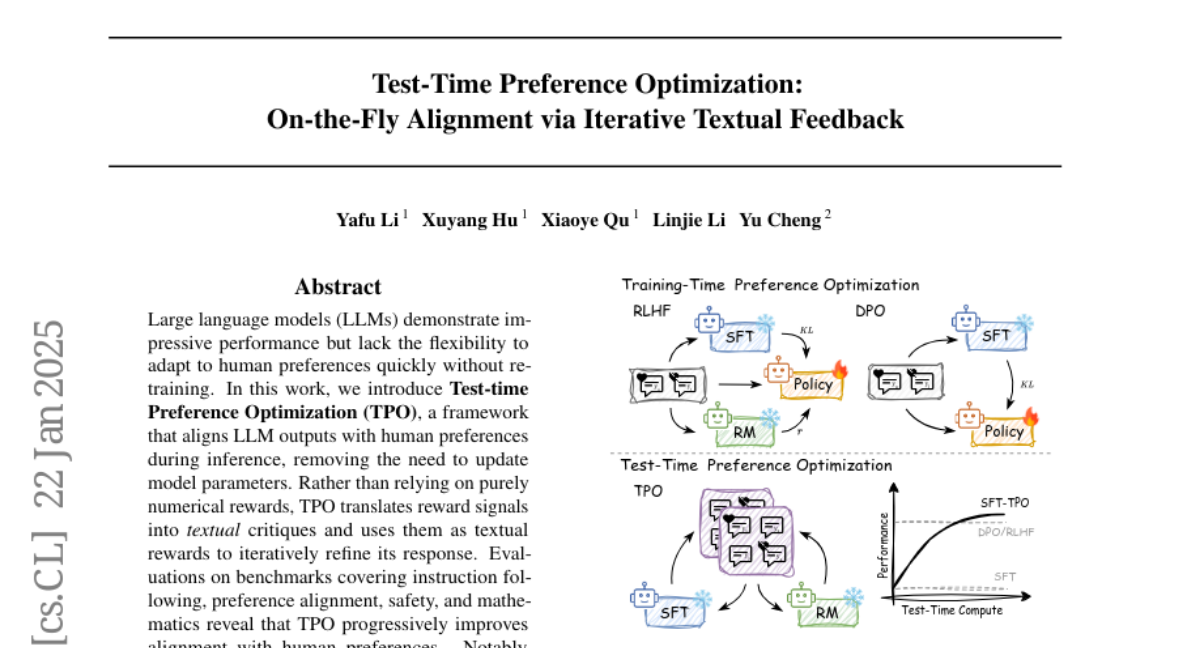

4. Test-Time Preference Optimization: On-the-Fly Alignment via Iterative Textual Feedback

🔑 Keywords: Large language models, Test-time Preference Optimization, Human preferences, Inference

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Test-time Preference Optimization (TPO) to improve Large language models’ alignment with human preferences without retraining.

🛠️ Research Methods:

– Utilize TPO to translate reward signals into textual critiques, iteratively refining responses during inference.

💬 Research Conclusions:

– TPO improves the alignment of LLM outputs with human preferences efficiently at test-time, outperforming some pre-aligned models, and offering a scalable and lightweight alternative for preference optimization.

👉 Paper link: https://huggingface.co/papers/2501.12895

5. Kimi k1.5: Scaling Reinforcement Learning with LLMs

🔑 Keywords: Language model pretraining, Reinforcement Learning, Multi-Modal Learning, Long-CoT techniques, Short-CoT models

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The research seeks to enhance artificial intelligence by scaling training data through reinforcement learning for large language models (LLMs) like Kimi k1.5.

🛠️ Research Methods:

– Employed RL training techniques and multi-modal data recipes, alongside infrastructure optimization, to improve language model capabilities without complex methods like Monte Carlo tree search.

💬 Research Conclusions:

– Achieved state-of-the-art reasoning performances across multiple benchmarks and modalities and significantly outperformed existing short-CoT models, setting a new standard in reasoning results.

👉 Paper link: https://huggingface.co/papers/2501.12599

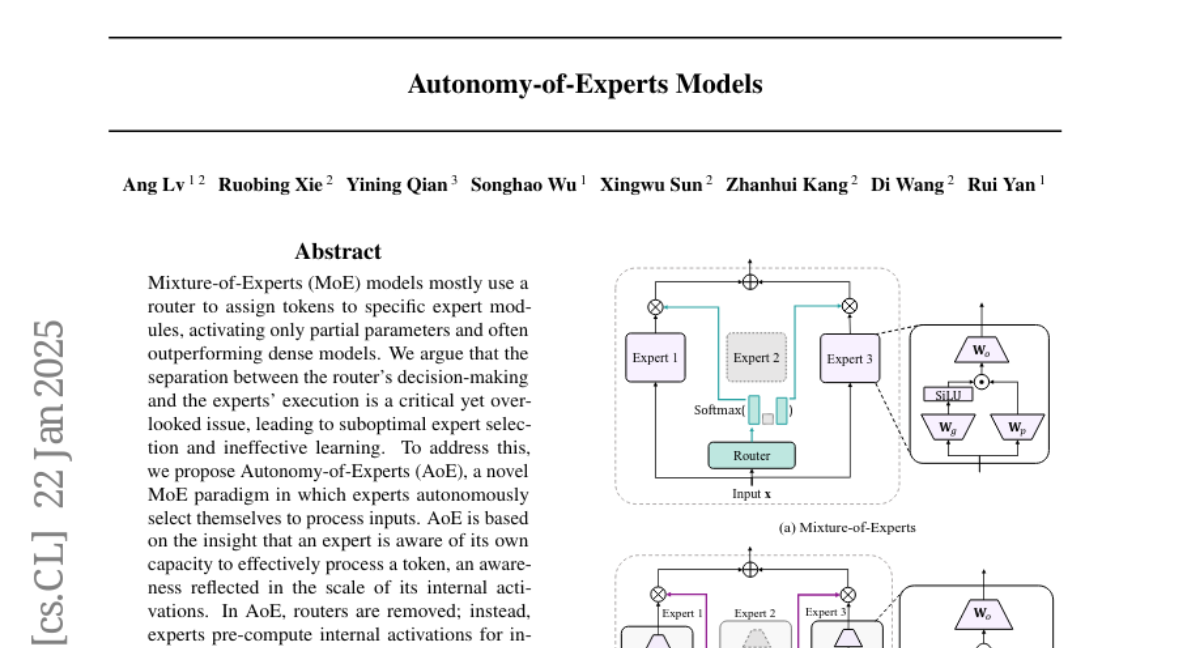

6. Autonomy-of-Experts Models

🔑 Keywords: Mixture-of-Experts, Autonomy-of-Experts, expert selection, language models

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the issue of suboptimal expert selection and ineffective learning in Mixture-of-Experts models by introducing a new paradigm where experts autonomously select themselves.

🛠️ Research Methods:

– Introduced Autonomy-of-Experts paradigm, where experts pre-compute internal activations, rank based on activation norms, and top experts proceed with processing.

– Utilized low-rank weight factorization to reduce the overhead of pre-computing activations.

💬 Research Conclusions:

– The proposed Autonomy-of-Experts paradigm improves expert selection and effective learning, outperforming traditional Mixture-of-Experts models with comparable efficiency.

– Demonstrated the effectiveness by pre-training language models with 700M to 4B parameters.

👉 Paper link: https://huggingface.co/papers/2501.13074

7. Pairwise RM: Perform Best-of-N Sampling with Knockout Tournament

🔑 Keywords: Best-of-N sampling, Pairwise Reward Model, Large Language Models, AI Native, knockout tournament

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to improve the effectiveness of Best-of-N (BoN) sampling for Large Language Models by utilizing a Pairwise Reward Model in combination with a knockout tournament approach.

🛠️ Research Methods:

– A Pairwise Reward Model is proposed to evaluate the correctness of two candidate solutions simultaneously, eliminating the arbitrary scoring of traditional reward models through parallel comparisons and iterative elimination in a knockout tournament setting.

💬 Research Conclusions:

– The experiments showed significant improvements over traditional discriminative reward models, particularly achieving a 40% to 60% relative improvement in performance on challenging problems.

👉 Paper link: https://huggingface.co/papers/2501.13007

8. O1-Pruner: Length-Harmonizing Fine-Tuning for O1-Like Reasoning Pruning

🔑 Keywords: long-thought reasoning, inference overhead, Length-Harmonizing Fine-Tuning, RL-style fine-tuning, mathematical reasoning benchmarks

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to reduce the inference overhead of long-thought LLMs while maintaining their problem-solving accuracy.

🛠️ Research Methods:

– The authors propose Length-Harmonizing Fine-Tuning (O1-Pruner), which uses RL-style fine-tuning to generate shorter reasoning processes under accuracy constraints.

💬 Research Conclusions:

– Experiments show that O1-Pruner significantly reduces inference overhead and improves accuracy on various mathematical reasoning benchmarks.

👉 Paper link: https://huggingface.co/papers/2501.12570

9. IntellAgent: A Multi-Agent Framework for Evaluating Conversational AI Systems

🔑 Keywords: Large Language Models, conversational AI, IntellAgent, policy constraints, framework

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce IntellAgent, a scalable, open-source framework for evaluating conversational AI systems, addressing complexities and variability in multi-turn dialogues and policy constraints.

🛠️ Research Methods:

– Utilizes a graph-based policy model, policy-driven graph modeling, realistic event generation, and interactive user-agent simulations to create synthetic benchmarks.

💬 Research Conclusions:

– IntellAgent offers fine-grained diagnostics and identifies performance gaps, providing actionable insights for optimization and fostering community collaboration through its modular design.

👉 Paper link: https://huggingface.co/papers/2501.11067