AI Native Daily Paper Digest – 20250124

1. SRMT: Shared Memory for Multi-agent Lifelong Pathfinding

🔑 Keywords: Multi-agent reinforcement learning, Cooperative multi-agent problems, Shared Recurrent Memory Transformer, Coordination, Decentralized systems

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to address the challenge of predicting agents’ behavior in multi-agent reinforcement learning (MARL) to achieve effective cooperation.

🛠️ Research Methods:

– Introduced the Shared Recurrent Memory Transformer (SRMT) which extends memory transformers to multi-agent settings by pooling and broadcasting individual memories to coordinate actions without explicit behavior prediction.

💬 Research Conclusions:

– SRMT outperforms traditional reinforcement learning baselines, especially in environments with sparse rewards, and competes effectively with modern MARL, hybrid, and planning algorithms in various tasks, demonstrating enhanced coordination in decentralized systems.

👉 Paper link: https://huggingface.co/papers/2501.13200

2. Sigma: Differential Rescaling of Query, Key and Value for Efficient Language Models

🔑 Keywords: Sigma, DiffQKV attention, inference efficiency, system domain, AIMicius

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce Sigma, a large language model specialized for the system domain, aimed at improving inference efficiency through a novel architecture.

🛠️ Research Methods:

– Developed DiffQKV attention that enhances Query, Key, and Value components differentially.

– Conducted extensive experiments and analyses, pre-trained Sigma on 6T tokens from various sources.

💬 Research Conclusions:

– Sigma showed up to a 33.36% improvement in inference speed compared to conventional methods in long-context scenarios.

– Achieved remarkable performance in system domain benchmark AIMicius, outperforming GPT-4 with a significant margin.

👉 Paper link: https://huggingface.co/papers/2501.13629

3. Improving Video Generation with Human Feedback

🔑 Keywords: Video Generation, Rectified Flow Techniques, Human Feedback, VideoReward, Flow-NRG

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to address unsmooth motion and misalignment in video generation using human feedback to improve video models.

🛠️ Research Methods:

– Construction of a large-scale human preference dataset with pairwise annotations.

– Introduction of VideoReward, a multi-dimensional video reward model with three alignment algorithms for flow-based models extending from diffusion models: Flow-DPO, Flow-RWR, and Flow-NRG.

💬 Research Conclusions:

– VideoReward model outperforms existing models, with Flow-DPO showing superior performance compared to Flow-RWR and standard methods. Flow-NRG allows custom weight assignments for personalized video quality objectives.

👉 Paper link: https://huggingface.co/papers/2501.13918

4. Temporal Preference Optimization for Long-Form Video Understanding

🔑 Keywords: Temporal Preference Optimization, video-LMMs, temporal grounding, LLaVA-Video-TPO

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance temporal grounding capabilities in long-form videos using Temporal Preference Optimization (TPO).

🛠️ Research Methods:

– TPO uses a novel post-training framework with self-training based on preference learning, leveraging curated datasets for localized and comprehensive temporal grounding.

💬 Research Conclusions:

– TPO significantly improves temporal understanding in video-LMMs and reduces dependence on manual annotations, with LLaVA-Video-TPO leading in benchmark performance.

👉 Paper link: https://huggingface.co/papers/2501.13919

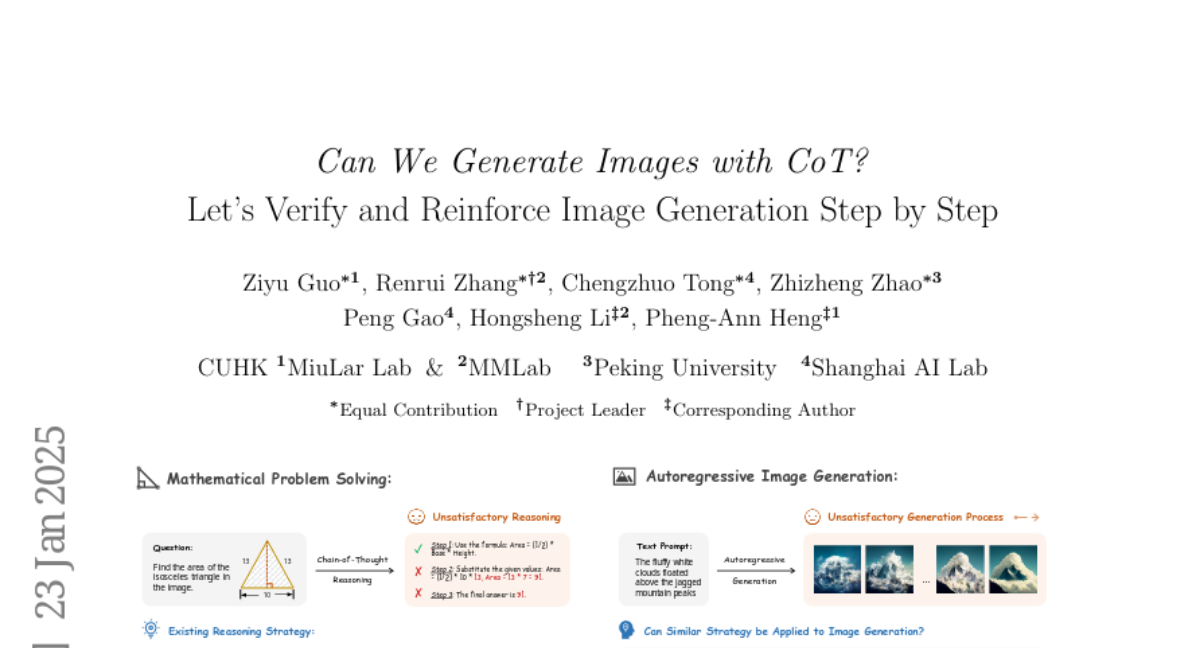

5. Can We Generate Images with CoT? Let’s Verify and Reinforce Image Generation Step by Step

🔑 Keywords: Chain-of-Thought, autoregressive image generation, Direct Preference Optimization, Potential Assessment Reward Model

💡 Category: Generative Models

🌟 Research Objective:

– The paper investigates the application of Chain-of-Thought reasoning to enhance autoregressive image generation.

🛠️ Research Methods:

– The study focuses on three techniques: scaling test-time computation for verification, aligning model preferences with Direct Preference Optimization (DPO), and integrating these techniques for complementary effects. Additionally, it introduces the Potential Assessment Reward Model (PARM) and PARM++ to improve image generation performance.

💬 Research Conclusions:

– Results demonstrate a significant improvement in image generation performance, specifically achieving a +24% improvement on the GenEval benchmark, surpassing Stable Diffusion 3 by +15%. The combined techniques provide a unique pathway for integrating Chain-of-Thought reasoning in image generation.

👉 Paper link: https://huggingface.co/papers/2501.13926

6. IMAGINE-E: Image Generation Intelligence Evaluation of State-of-the-art Text-to-Image Models

🔑 Keywords: Text-to-Image (T2I) models, Diffusion models, FLUX.1, Ideogram2.0, General-purpose applicability

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to comprehensively evaluate the capabilities and limitations of advanced Text-to-Image (T2I) models across various domains to understand their potential as general-purpose AI tools.

🛠️ Research Methods:

– Developed the IMAGINE-E framework to evaluate six prominent models, including FLUX.1, Ideogram2.0, and others, across five key domains: structured output generation, realism and physical consistency, specific domain generation, challenging scenario generation, and multi-style creation tasks.

💬 Research Conclusions:

– Highlights the outstanding performance of FLUX.1 and Ideogram2.0 in structured and specific domain tasks. The study underscores the expanding applications and potential of T2I models, providing valuable insights into their evolution towards general-purpose usability.

👉 Paper link: https://huggingface.co/papers/2501.13920

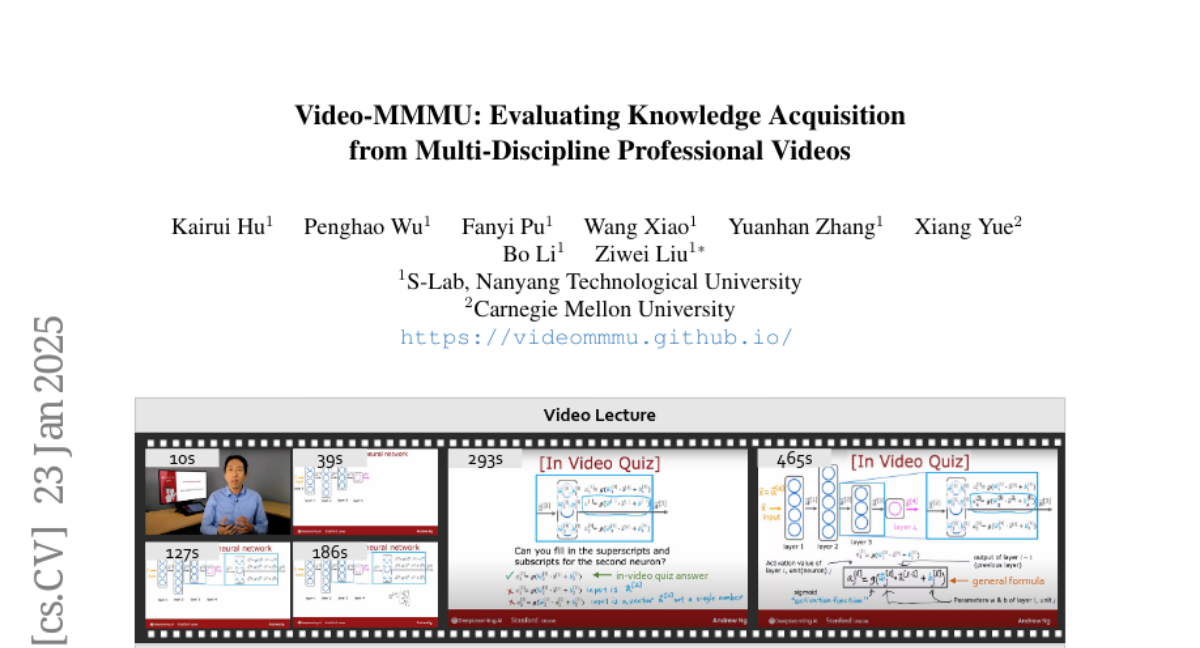

7. Video-MMMU: Evaluating Knowledge Acquisition from Multi-Discipline Professional Videos

🔑 Keywords: Large Multimodal Models, Video-MMMU, knowledge acquisition, cognitive stages

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces Video-MMMU, a benchmark to evaluate the knowledge acquisition abilities of Large Multimodal Models (LMMs) through videos. It aims to address the lack of a systematic evaluation framework for LMMs in acquiring knowledge from multimedia sources.

🛠️ Research Methods:

– The benchmark consists of 300 expert-level videos and 900 human-annotated questions across six disciplines. It evaluates LMMs using stage-aligned question-answer pairs corresponding to perception, comprehension, and adaptation stages.

💬 Research Conclusions:

– The findings reveal that LMM performance declines with increased cognitive demands. There is a notable gap between human and model knowledge acquisition, emphasizing the need for enhanced methods to improve LMMs’ learning and adaptation abilities from video content.

👉 Paper link: https://huggingface.co/papers/2501.13826

8. Step-KTO: Optimizing Mathematical Reasoning through Stepwise Binary Feedback

🔑 Keywords: Large language models, mathematical reasoning, Step-KTO

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop a framework, Step-KTO, that guides LLMs towards more coherent and reliable reasoning processes in mathematical reasoning tasks.

🛠️ Research Methods:

– Implementation of a training framework that combines process-level and outcome-level binary feedback to improve reasoning trajectories of LLMs.

💬 Research Conclusions:

– Step-KTO significantly enhances both the accuracy of final answers and the quality of intermediate reasoning steps in mathematical benchmarks like MATH-500.

👉 Paper link: https://huggingface.co/papers/2501.10799

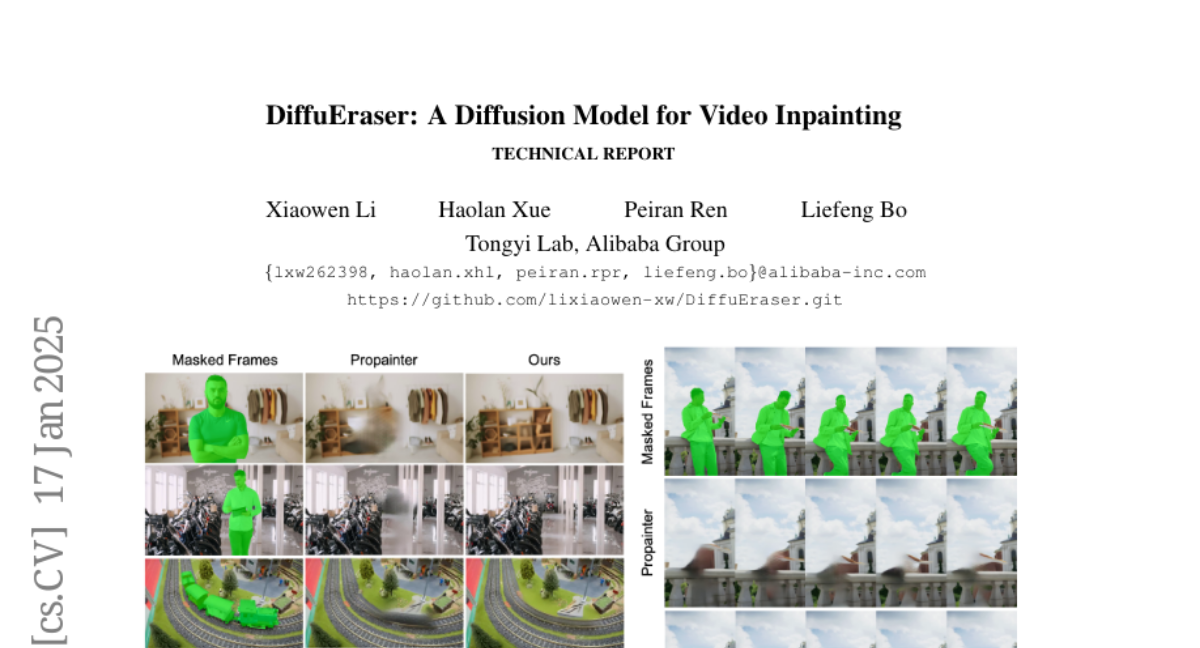

9. DiffuEraser: A Diffusion Model for Video Inpainting

🔑 Keywords: Video Inpainting, Optical Flow, Diffusion Models, Temporal Consistency, Generative Capabilities

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce DiffuEraser, a stable diffusion-based video inpainting model, to enhance detail and structure in masked regions.

🛠️ Research Methods:

– Incorporate prior information for initialization and weak conditioning, expanding temporal receptive fields, and leverage the temporal smoothing of Video Diffusion Models.

💬 Research Conclusions:

– The proposed method surpasses state-of-the-art techniques in content completeness and temporal consistency while maintaining efficiency.

👉 Paper link: https://huggingface.co/papers/2501.10018

10. One-Prompt-One-Story: Free-Lunch Consistent Text-to-Image Generation Using a Single Prompt

🔑 Keywords: Text-to-image generation, Identity-preserving, Context consistency, 1Prompt1Story, Diffusion models

💡 Category: Generative Models

🌟 Research Objective:

– To propose a novel training-free method, 1Prompt1Story, for consistent text-to-image (T2I) generation that preserves identity without extensive training or model modification.

🛠️ Research Methods:

– Leverage inherent context consistency of language models.

– Use techniques like Singular-Value Reweighting and Identity-Preserving Cross-Attention to refine the generation process.

💬 Research Conclusions:

– 1Prompt1Story demonstrated effectiveness over existing methods in ensuring identity preservation and alignment with input prompts in T2I generation.

👉 Paper link: https://huggingface.co/papers/2501.13554

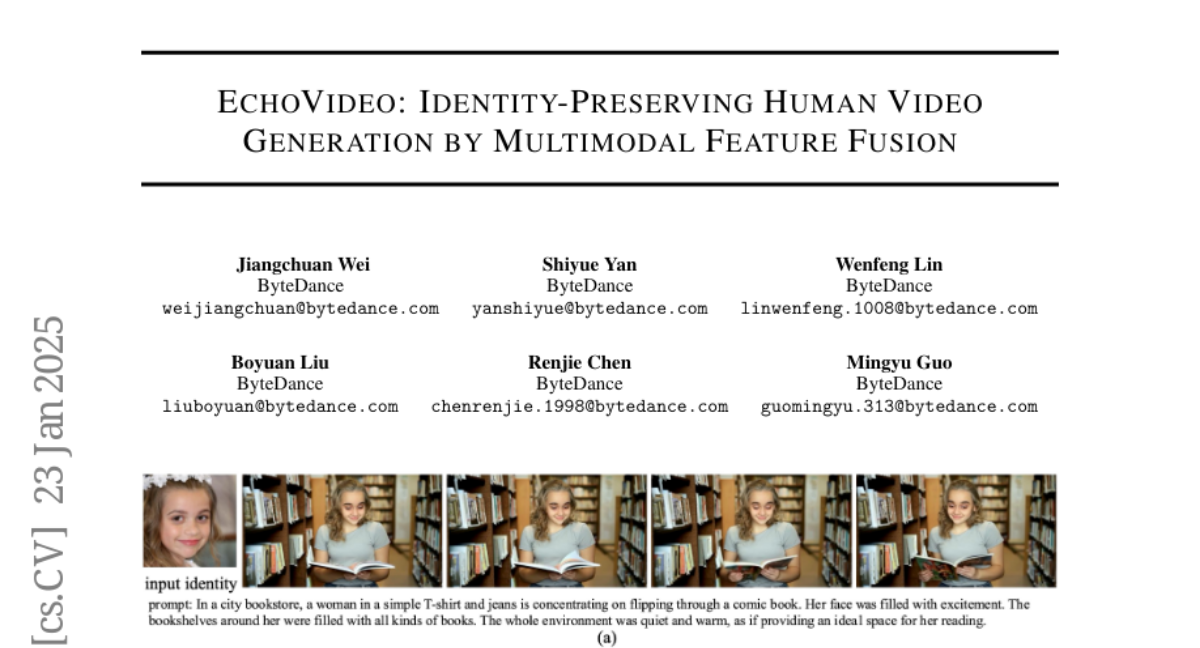

11. EchoVideo: Identity-Preserving Human Video Generation by Multimodal Feature Fusion

🔑 Keywords: Video Generation, Identity-Preserving, EchoVideo, IITF, High-level Semantic Features

💡 Category: Generative Models

🌟 Research Objective:

– To address copy-paste artifacts and low similarity issues in identity-preserving video generation by integrating high-level semantic features from video data.

🛠️ Research Methods:

– Utilization of an Identity Image-Text Fusion Module (IITF) and a two-stage training strategy incorporating stochastic methods for improved video generation.

💬 Research Conclusions:

– EchoVideo preserves facial identities and full-body integrity with high fidelity, achieving excellent quality and controllability in generated videos.

👉 Paper link: https://huggingface.co/papers/2501.13452

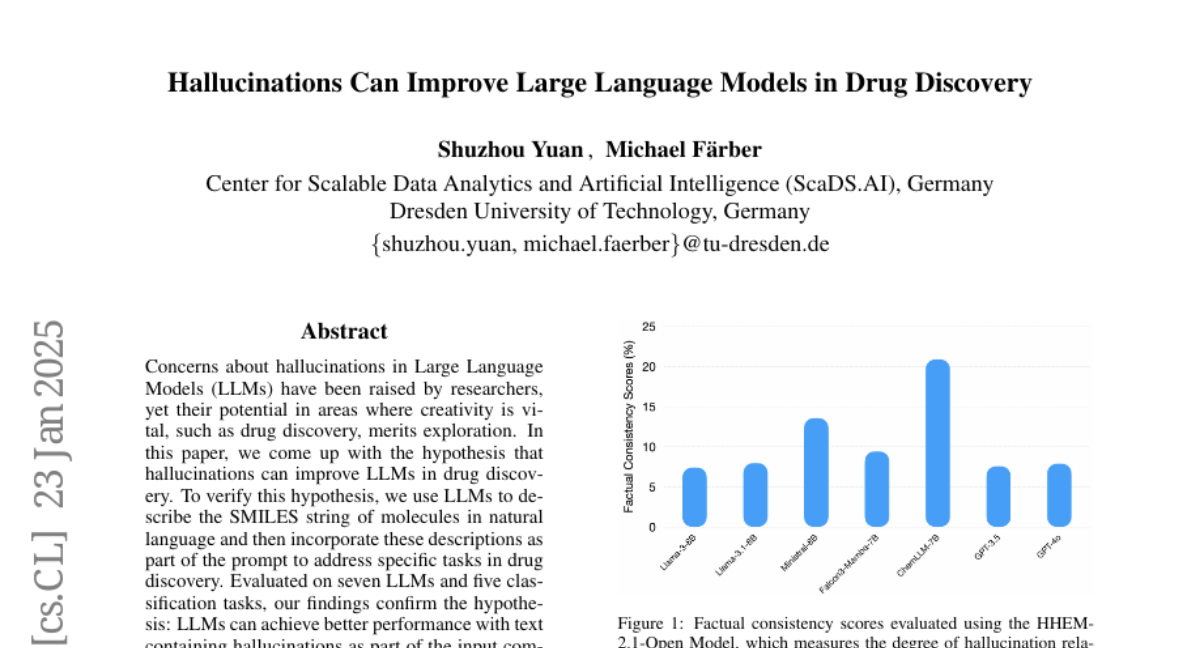

12. Hallucinations Can Improve Large Language Models in Drug Discovery

🔑 Keywords: Hallucinations, Large Language Models, Drug Discovery, SMILES, GPT-4o

💡 Category: AI in Healthcare

🌟 Research Objective:

– To explore the hypothesis that hallucinations can enhance the performance of Large Language Models (LLMs) in the field of drug discovery.

🛠️ Research Methods:

– Used LLMs to convert SMILES strings of molecules into natural language descriptions, incorporating these into prompts for specific drug discovery tasks. Tested on seven LLMs across five classification tasks.

💬 Research Conclusions:

– Findings confirmed the hypothesis, with notable improvements in performance when hallucinations were included, such as an 18.35% gain in ROC-AUC by Llama-3.1-8B over baseline and consistent enhancements from hallucinations generated by GPT-4o.

– Empirical analyses and a case study were conducted to understand factors and reasons underlying performance improvements.

👉 Paper link: https://huggingface.co/papers/2501.13824

13. Debate Helps Weak-to-Strong Generalization

🔑 Keywords: Scalable Oversight, Weak-to-Strong Generalization, Human Supervision, Model Alignment, Debate

💡 Category: Natural Language Processing

🌟 Research Objective:

– To improve the alignment of AI models by combining scalable oversight and weak-to-strong generalization.

🛠️ Research Methods:

– Fine-tuning a weak model using a strong pretrained model, then fine-tuning the strong model with labels generated by the weak model.

– Utilizing debate methods to assist weak models in extracting trustworthy information from strong models.

💬 Research Conclusions:

– The combined approach enhances model alignment, showing potential for debate to aid in weak-to-strong generalization.

– Experiments on OpenAI benchmarks demonstrate improved alignment with the combination method.

👉 Paper link: https://huggingface.co/papers/2501.13124

14. Evolution and The Knightian Blindspot of Machine Learning

🔑 Keywords: Robustness, Knightian uncertainty, open world, evolution, Reinforcement Learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– This paper aims to identify and address the blind spot in machine learning concerning its lack of robustness to an unknown future in an open world, with a focus on Knightian uncertainty.

🛠️ Research Methods:

– The paper contrasts Reinforcement Learning (RL) with biological evolution to illustrate the limitations of current ML formalisms in handling unforeseen situations in complex environments.

💬 Research Conclusions:

– The paper suggests that the fragility of machine learning systems might stem from blind spots in its foundational methods. It advocates for integrating mechanisms found in evolutionary processes to enhance RL’s robustness against unpredictable challenges and improve open-world AI efficiency.

👉 Paper link: https://huggingface.co/papers/2501.13075

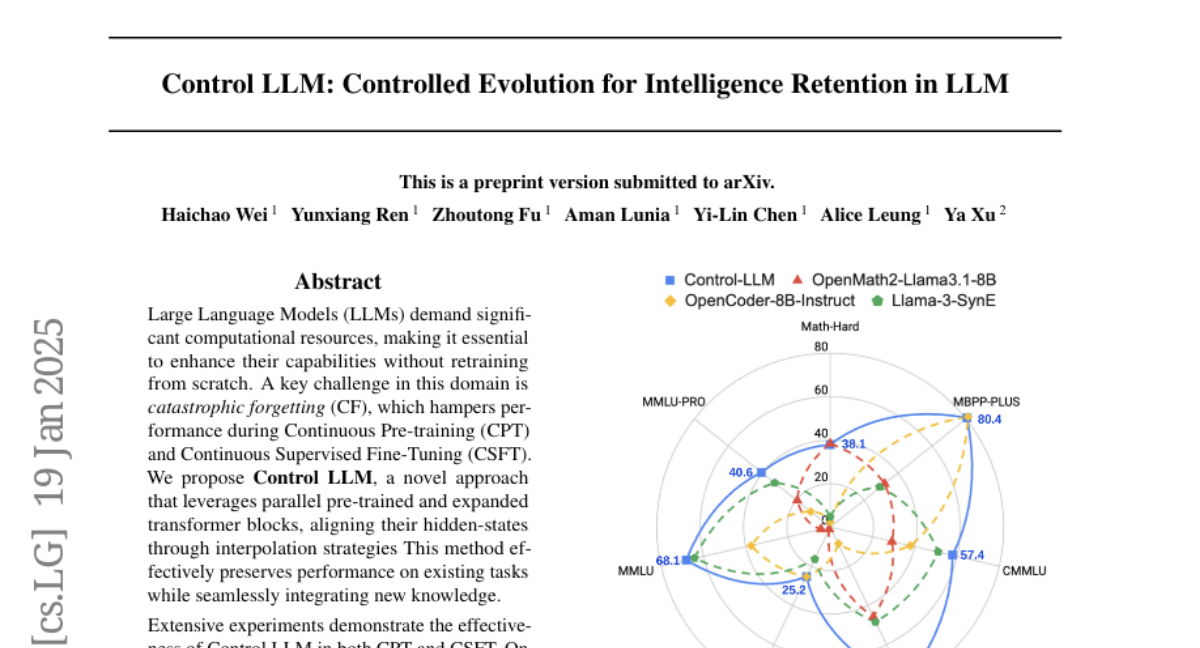

15. Control LLM: Controlled Evolution for Intelligence Retention in LLM

🔑 Keywords: Large Language Models, Continuous Pre-training, Catastrophic Forgetting, Control LLM, LinkedIn

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to enhance the capabilities of Large Language Models (LLMs) without retraining from scratch by addressing catastrophic forgetting during Continuous Pre-training (CPT) and Continuous Supervised Fine-Tuning (CSFT).

🛠️ Research Methods:

– Introduced Control LLM, an approach that uses parallel pre-trained and expanded transformer blocks, with interpolation strategies to align hidden states and preserve performance on existing tasks while integrating new knowledge.

💬 Research Conclusions:

– Control LLM demonstrated significant improvements in mathematical reasoning, coding performance, and multilingual capabilities, achieving state-of-the-art results among open-source models with less data and compute, maintaining strong original capabilities with minimal degradation. It has been successfully implemented in LinkedIn’s GenAI-powered products, with training and evaluation resources being released to the community.

👉 Paper link: https://huggingface.co/papers/2501.10979

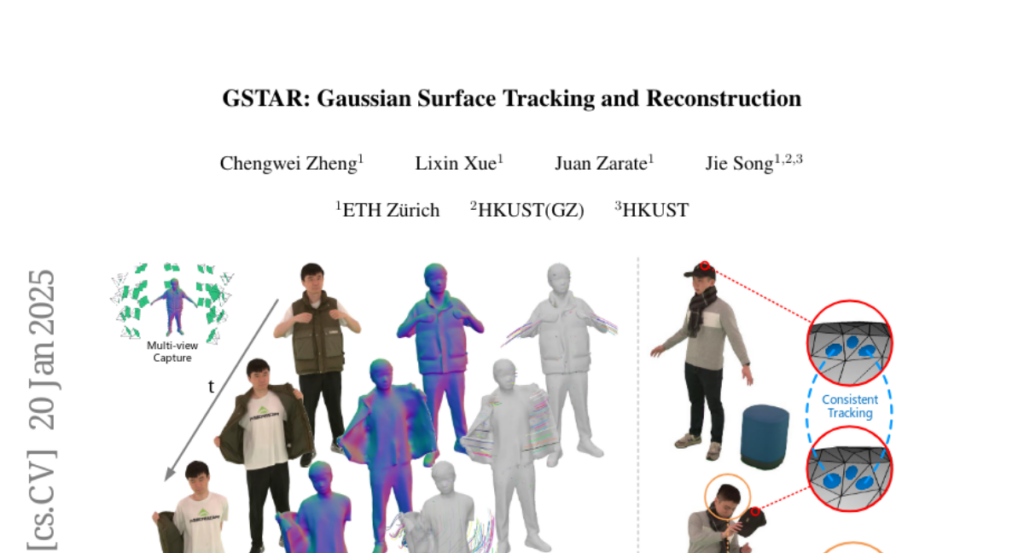

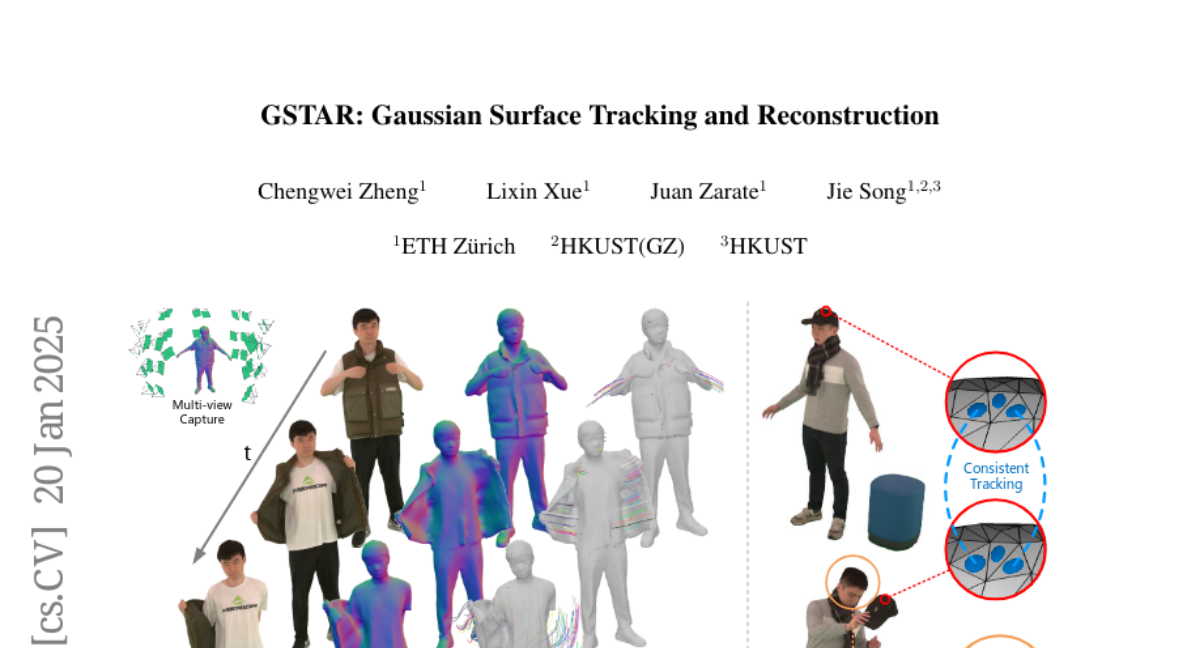

16. GSTAR: Gaussian Surface Tracking and Reconstruction

🔑 Keywords: 3D Gaussian Splatting, Photo-realistic Rendering, Surface Reconstruction, 3D Tracking

💡 Category: Computer Vision

🌟 Research Objective:

– The paper aims to address the challenges of tracking dynamic surfaces with changing topology using a novel method called GSTAR.

🛠️ Research Methods:

– GSTAR involves binding Gaussians to mesh faces to represent dynamic objects, adapting unbinding when topology changes occur, and using a surface-based scene flow method for robust initialization.

💬 Research Conclusions:

– GSTAR effectively tracks and reconstructs dynamic surfaces, enabling a range of applications, and demonstrates improved capabilities over existing methods.

👉 Paper link: https://huggingface.co/papers/2501.10283

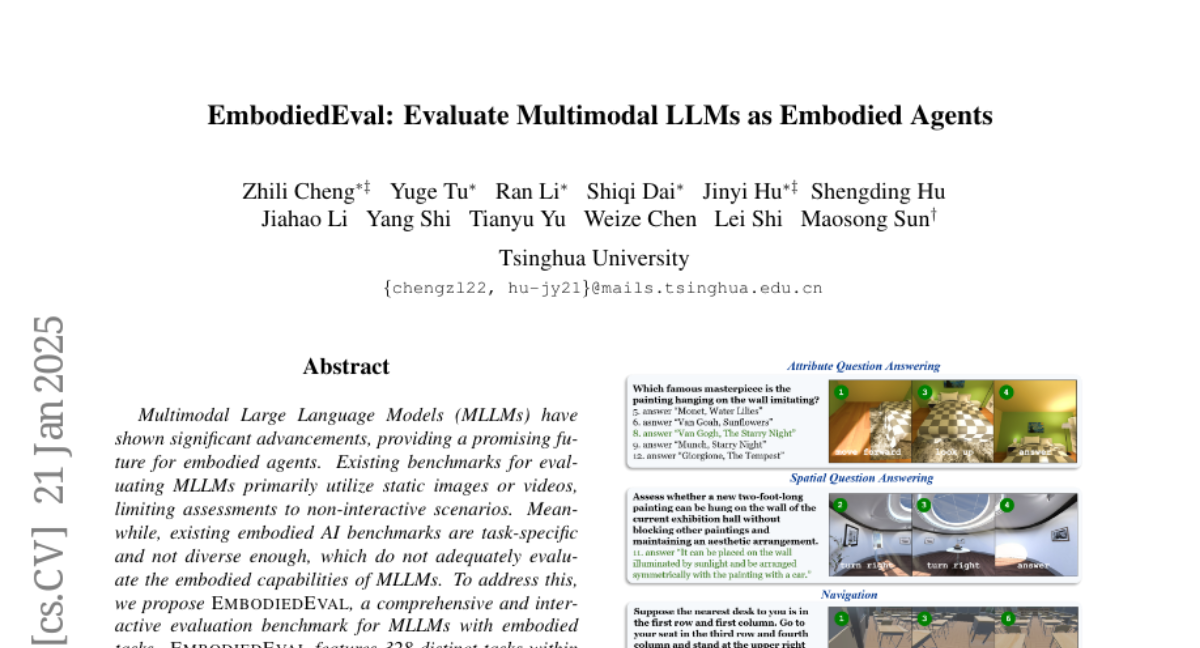

17. EmbodiedEval: Evaluate Multimodal LLMs as Embodied Agents

🔑 Keywords: Multimodal Large Language Models, Embodied AI, EmbodiedEval, Evaluation Benchmark

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To address the limitations of existing benchmarks and propose EmbodiedEval, a comprehensive and interactive evaluation benchmark for evaluating MLLMs in embodied tasks.

🛠️ Research Methods:

– Development of EmbodiedEval with 328 tasks across 125 diverse 3D scenes, covering navigation, object interaction, social interaction, attribute question answering, and spatial question answering.

💬 Research Conclusions:

– State-of-the-art MLLMs show significant shortfalls compared to human levels on embodied tasks. Insights into limitations are provided, with comprehensive evaluation data and framework open-sourced for further research.

👉 Paper link: https://huggingface.co/papers/2501.11858