Meta Unveils LLaMA 3.2: Pioneering AI Native Applications with Its First Multimodal Llama Models

Image from Meta.

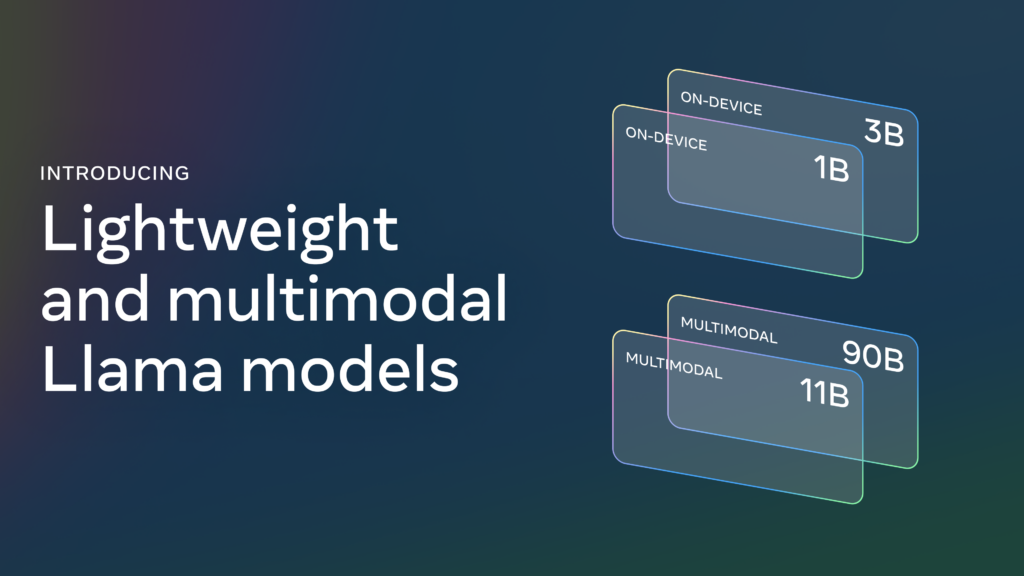

🚀 Meta has unveiled LLaMA 3.2, the first Llama release whose models can process both images and text. It includes small and medium-sized vision LLMs (11B and 90B parameters) and lightweight, text-only models (1B and 3B parameters) that fit on edge and mobile devices, which makes it easier to run AI locally.

LLaMA 3.2 supports a context length of 128K tokens, which is long enough for on-device tasks such as summarizing lengthy documents, following instructions, and rewriting text. When a model runs directly on the device, data such as messages does not have to be sent to the cloud, so responses can arrive faster and user data can stay private. For AI Native applications, this opens the door to quicker, more private user experiences. Explore how this release is shaping the future of AI!

Read more here: https://ai.meta.com/blog/llama-3-2-connect-2024-vision-edge-mobile-devices/.

Join us at the AI Native Foundation Membership Dashboard for the latest insights on AI Native, or follow us on LinkedIn at AI Native Foundation and on Twitter at AINativeF.