AI Native Daily Paper Digest – 20241218

1. Are Your LLMs Capable of Stable Reasoning?

🔑 Keywords: Large Language Models, G-Pass@k, LiveMathBench, Evaluation Metrics

💡 Category: Natural Language Processing

🌟 Research Objective:

– Address the gap between benchmark performances and real-world applications for Large Language Models, particularly in complex reasoning tasks.

🛠️ Research Methods:

– Introduce G-Pass@k, a novel evaluation metric for continuous assessment of model performance.

– Develop LiveMathBench, a dynamic benchmark with challenging mathematical problems to minimize data leakage risks.

💬 Research Conclusions:

– There is substantial room for improvement in LLMs’ “realistic” reasoning capabilities, highlighting the need for robust evaluation methods.

👉 Paper link: https://huggingface.co/papers/2412.13147

2. Multi-Dimensional Insights: Benchmarking Real-World Personalization in Large Multimodal Models

🔑 Keywords: Large Multimodal Models, MDI-Benchmark, Age Stratification, Real-world Applications

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to introduce the MDI-Benchmark to evaluate large multimodal models comprehensively, focusing on aligning them with real-world human needs.

🛠️ Research Methods:

– The researchers developed the MDI-Benchmark comprising over 500 images, employing simple and complex questions to assess the models’ capabilities and stratifying questions by three age categories to evaluate performance across different demographics.

💬 Research Conclusions:

– The MDI-Benchmark has demonstrated that models like GPT-4 achieve 79% accuracy on age-related tasks, indicating room for improvement in real-world scenario applications, and it is poised to open new avenues for personalization in LMMs.

👉 Paper link: https://huggingface.co/papers/2412.12606

3. OmniEval: An Omnidirectional and Automatic RAG Evaluation Benchmark in Financial Domain

🔑 Keywords: Large Language Models, RAG, OmniEval, Financial Domain, Evaluation Metrics

💡 Category: AI in Finance

🌟 Research Objective:

– Introduce OmniEval, a comprehensive benchmark for Retrieval-Augmented Generation (RAG) in the financial domain.

🛠️ Research Methods:

– Developed a multi-dimensional evaluation system with a matrix-based scenario evaluation, multi-stage evaluation, and robust metrics for assessing RAG systems.

💬 Research Conclusions:

– OmniEval provides a comprehensive evaluation on the RAG pipeline, revealing performance variations and opportunities for improvement in vertical domains.

👉 Paper link: https://huggingface.co/papers/2412.13018

4. Compressed Chain of Thought: Efficient Reasoning Through Dense Representations

🔑 Keywords: Chain-of-thought, Contemplation tokens, Compressed Chain-of-Thought, Reasoning improvement

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce and propose Compressed Chain-of-Thought (CCoT) for enhancing reasoning in language models by using variable-length contemplation tokens.

🛠️ Research Methods:

– Utilize compressed representations of reasoning chains as contemplation tokens within pre-existing decoder language models to improve reasoning performance.

💬 Research Conclusions:

– CCoT achieves improved accuracy through reasoning over dense content representations, with adjustable reasoning improvements by controlling the number of generated contemplation tokens.

👉 Paper link: https://huggingface.co/papers/2412.13171

5. Emergence of Abstractions: Concept Encoding and Decoding Mechanism for In-Context Learning in Transformers

🔑 Keywords: In-Context Learning, Transformer, Concept Encoding-Decoding, AI Native, Large Language Models

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explain the process of In-Context Learning (ICL) in autoregressive transformers through a concept encoding-decoding mechanism, analyzing how transformers form and use internal abstractions.

🛠️ Research Methods:

– Analyzed training dynamics of a small transformer on synthetic ICL tasks to observe the emergence of concept encoding and decoding.

– Validated the mechanism across various pretrained models of different scales (Gemma-2 2B/9B/27B, Llama-3.1 8B/70B).

– Utilized mechanistic interventions and controlled finetuning to establish the causal relationship between concept encoding quality and ICL performance.

💬 Research Conclusions:

– Demonstrated that the quality of concept encoding is causally related and predictive of ICL performance.

– Provided empirical insights into better understanding the success and failure modes of large language models through their representations.

👉 Paper link: https://huggingface.co/papers/2412.12276

6. Feather the Throttle: Revisiting Visual Token Pruning for Vision-Language Model Acceleration

🔑 Keywords: Vision-Language Models, Acceleration, Pruning, FEATHER

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To examine the existing acceleration approach for Vision-Language Models and its ability to maintain performance across various tasks.

🛠️ Research Methods:

– Analyzing early pruning of visual tokens and its impact on tasks.

– Proposing FEATHER, a new approach that addresses identified pruning issues and improves performance through ensemble criteria and uniform sampling.

💬 Research Conclusions:

– The current acceleration method’s success is due to the benchmarks’ limitations rather than superior compression of visual information.

– FEATHER significantly improves performance, particularly in localization tasks, with over 5 times the performance improvement.

👉 Paper link: https://huggingface.co/papers/2412.13180

7. Proposer-Agent-Evaluator(PAE): Autonomous Skill Discovery For Foundation Model Internet Agents

🔑 Keywords: Generalist Agent, Foundation Models, Reinforcement Learning, Autonomous Task Proposal, VLM-based Success Evaluator

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To develop a learning system enabling foundation model agents to autonomously discover and practice diverse skills without the limitations of manually annotated instructions.

🛠️ Research Methods:

– Introduction of the Proposer-Agent-Evaluator (PAE) system featuring a context-aware task proposer and a VLM-based success evaluator, validated using real-world and self-hosted websites.

💬 Research Conclusions:

– PAE effectively generalizes human-annotated benchmarks with state-of-the-art performances, making it the first system to apply autonomous task proposal with RL for such agents.

👉 Paper link: https://huggingface.co/papers/2412.13194

8. VisDoM: Multi-Document QA with Visually Rich Elements Using Multimodal Retrieval-Augmented Generation

🔑 Keywords: VisDoMBench, VisDoMRAG, multimodal content, question answering, Retrieval Augmented Generation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to introduce VisDoMBench, a benchmark for evaluating question answering systems in scenarios involving multiple documents with rich multimodal content.

🛠️ Research Methods:

– The authors propose VisDoMRAG, a novel approach utilizing multimodal Retrieval Augmented Generation (RAG) to process and reason both visual and textual information simultaneously.

💬 Research Conclusions:

– Extensive experiments demonstrate that VisDoMRAG enhances the accuracy of multimodal document question answering systems by 12-20% compared to unimodal and long-context large language model baselines.

👉 Paper link: https://huggingface.co/papers/2412.10704

9. MIVE: New Design and Benchmark for Multi-Instance Video Editing

🔑 Keywords: zero-shot video editing, Multi-Instance Video Editing, mask-based framework, editing leakage, MIVE Dataset

💡 Category: Computer Vision

🌟 Research Objective:

– The research introduces MIVE, a zero-shot Multi-Instance Video Editing framework, to address challenges in localized video editing involving multiple objects.

🛠️ Research Methods:

– MIVE includes two modules: Disentangled Multi-instance Sampling (DMS) and Instance-centric Probability Redistribution (IPR), and also introduces the Cross-Instance Accuracy (CIA) Score along with the MIVE Dataset for evaluation.

💬 Research Conclusions:

– MIVE significantly enhances editing faithfulness, accuracy, and leakage prevention over state-of-the-art methods, establishing a new benchmark in the field of multi-instance video editing.

👉 Paper link: https://huggingface.co/papers/2412.12877

10. When to Speak, When to Abstain: Contrastive Decoding with Abstention

🔑 Keywords: Large Language Models, Hallucination, Contrastive Decoding with Abstention

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to address the limitations of Large Language Models (LLMs) when they encounter scenarios lacking relevant knowledge, which can lead to hallucinations and reduce reliability in high-stakes applications.

🛠️ Research Methods:

– The study introduces Contrastive Decoding with Abstention (CDA), a training-free method enabling LLMs to generate responses when relevant knowledge is available and abstain otherwise. This method adaptively assesses and prioritizes relevant knowledge for a given query.

💬 Research Conclusions:

– Experiments on four LLMs across three question-answering datasets show that CDA can effectively achieve accurate generation and abstention, suggesting its potential to enhance the reliability of LLMs and maintain user trust.

👉 Paper link: https://huggingface.co/papers/2412.12527

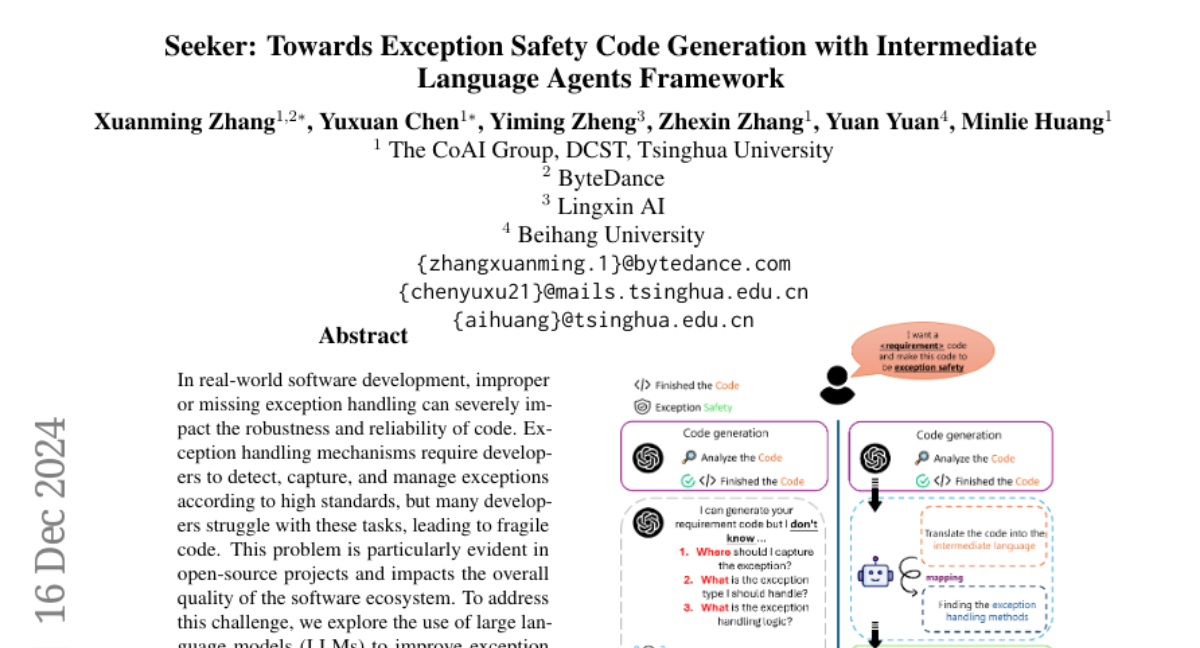

11. Seeker: Towards Exception Safety Code Generation with Intermediate Language Agents Framework

🔑 Keywords: Exception Handling, Large Language Models (LLMs), Seeker Framework, Code Reliability, Software Development

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to explore the use of AI, specifically Large Language Models (LLMs), to improve exception handling practices in software development, enhancing the robustness and reliability of code.

🛠️ Research Methods:

– A systematic approach was employed, wherein a novel multi-agent framework called Seeker was developed, consisting of agents like Scanner, Detector, Predator, Ranker, and Handler to support LLMs in better detecting, capturing, and resolving exceptions.

💬 Research Conclusions:

– The research provides the first systematic insights on leveraging LLMs for enhanced exception handling in real-world development scenarios, addressing key issues like Insensitive Detection of Fragile Code and encouraging better exception management practices across open-source projects.

👉 Paper link: https://huggingface.co/papers/2412.11713

12. SUGAR: Subject-Driven Video Customization in a Zero-Shot Manner

🔑 Keywords: Zero-shot, Video Customization, Style and Motion Alignment, Synthetic Dataset, Identity Preservation

💡 Category: Generative Models

🌟 Research Objective:

– To develop SUGAR, a zero-shot method for subject-driven video customization that aligns generation with user-specified visual attributes.

🛠️ Research Methods:

– Implementation of a scalable pipeline to construct a synthetic dataset with 2.5 million image-video-text triplets.

– Introduction of special attention designs, improved training strategies, and a refined sampling algorithm to enhance model performance.

💬 Research Conclusions:

– SUGAR achieves state-of-the-art results in identity preservation, video dynamics, and video-text alignment without additional test-time cost, showcasing its effectiveness in subject-driven video customization.

👉 Paper link: https://huggingface.co/papers/2412.10533