AI Native Daily Paper Digest – 20250109

1. rStar-Math: Small LLMs Can Master Math Reasoning with Self-Evolved Deep Thinking

🔑 Keywords: Small Language Models, Math Reasoning, Monte Carlo Tree Search, Process Reward Model

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The research aims to show that Small Language Models (SLMs) can match or exceed the math reasoning performance of larger models like OpenAI o1 without the need for distillation from superior models.

🛠️ Research Methods:

– Introduced Monte Carlo Tree Search (MCTS) for deep thinking during math reasoning, guided by a novel process reward model. Used a code-augmented CoT data synthesis method and a self-evolution strategy to train the SLMs.
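
As a rough illustration of how a tree search can be steered by step-level scores, here is a minimal sketch of the standard UCT selection rule used in many MCTS variants. It is not the paper's implementation: the `Node` class, the exploration constant `c`, and the idea of accumulating reward-model scores in `total_value` are assumptions made for this example.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """One candidate reasoning step in the search tree (hypothetical structure)."""
    step: str
    visits: int = 0
    total_value: float = 0.0  # running sum of scores, e.g. from a process reward model
    children: list = field(default_factory=list)


def uct_score(parent: Node, child: Node, c: float = 1.4) -> float:
    """Standard UCT: mean value plus an exploration bonus."""
    if child.visits == 0:
        return math.inf  # unvisited steps are always tried first
    mean_value = child.total_value / child.visits
    bonus = c * math.sqrt(math.log(parent.visits) / child.visits)
    return mean_value + bonus


def select_child(parent: Node) -> Node:
    """Pick the child step with the highest UCT score."""
    return max(parent.children, key=lambda ch: uct_score(parent, ch))


def backpropagate(path: list, reward: float) -> None:
    """Add one visit and the observed reward to every node on the path."""
    for node in path:
        node.visits += 1
        node.total_value += reward
```

Repeating select, expand, score, and backpropagate concentrates visits on steps whose average score is high while still sampling rarely visited alternatives.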

💬 Research Conclusions:

– Achieved state-of-the-art math reasoning in SLMs, significantly boosting performance on benchmarks such as MATH and the American Invitational Mathematics Examination (AIME), and surpassing o1-preview in certain scenarios.

👉 Paper link: https://huggingface.co/papers/2501.04519

2. Towards System 2 Reasoning in LLMs: Learning How to Think With Meta Chain-of-Thought

🔑 Keywords: Meta Chain-of-Thought, Chain-of-Thought, reasoning, reinforcement learning, synthetic data generation

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study introduces Meta Chain-of-Thought (Meta-CoT), which extends traditional Chain-of-Thought by explicitly modeling the underlying reasoning required to arrive at a particular CoT.

🛠️ Research Methods:

– Utilizes process supervision, synthetic data generation, and search algorithms to generate Meta-CoTs.

– Incorporates instruction tuning with linearized search traces and post-training reinforcement learning.

💬 Research Conclusions:

– Presents a pipeline for training LLMs to produce Meta-CoTs, with the goal of improving reasoning capabilities.

– Discusses open questions on scaling, verifier roles, and discovering new reasoning algorithms.

👉 Paper link: https://huggingface.co/papers/2501.04682

3. URSA: Understanding and Verifying Chain-of-thought Reasoning in Multimodal Mathematics

🔑 Keywords: Chain-of-thought, Large Language Models, Multimodal Mathematics, SOTA, URSA-7B

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance Chain-of-thought reasoning capabilities in multimodal mathematical reasoning by addressing the scarcity of high-quality training data.

🛠️ Research Methods:

– Introduced a three-module synthesis strategy involving CoT distillation, trajectory-format rewriting, and format unification, resulting in the MMathCoT-1M dataset.

– Developed a data synthesis strategy that produces the DualMath-1.1M dataset for test-time scaling and enhanced supervision.

💬 Research Conclusions:

– The URSA-7B model achieved state-of-the-art performance on multiple benchmarks after training on MMathCoT-1M.

– Further training turns URSA-7B into URSA-RM-7B, a verifier that improves URSA-7B's test-time performance and shows strong out-of-distribution verification capabilities.
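
One common way a verifier is used at test time is best-of-N selection: sample several candidate solutions and keep the one the verifier scores highest. The sketch below illustrates that general idea only. `best_of_n`, `toy_verifier`, and the candidate strings are hypothetical stand-ins, not anything taken from the URSA paper, where the verifier is a trained model rather than a rule.

```python
from typing import Callable, Sequence


def best_of_n(candidates: Sequence[str], verifier: Callable[[str], float]) -> str:
    """Return the candidate solution with the highest verifier score."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=verifier)


def toy_verifier(solution: str) -> float:
    """Stand-in for a learned verifier: fraction of 'a + b = c' lines that are correct."""
    lines = [ln for ln in solution.splitlines() if "=" in ln]
    if not lines:
        return 0.0
    correct = 0
    for ln in lines:
        left, right = ln.split("=")
        a, b = left.split("+")
        if int(a) + int(b) == int(right):
            correct += 1
    return correct / len(lines)


candidates = [
    "2 + 2 = 5\n5 + 1 = 6",  # first step is wrong
    "2 + 2 = 4\n4 + 1 = 5",  # every step checks out
]
chosen = best_of_n(candidates, toy_verifier)
```

Scoring individual steps rather than only the final answer is what lets a process-level verifier reject a solution that goes wrong partway through.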

👉 Paper link: https://huggingface.co/papers/2501.04686

4. Agent Laboratory: Using LLM Agents as Research Assistants

🔑 Keywords: Agent Laboratory, LLM-based framework, research process, human feedback, research expenses

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To accelerate scientific discovery, reduce research costs, and improve research quality through an autonomous LLM-based framework, Agent Laboratory.

🛠️ Research Methods:

– Use of Agent Laboratory, an autonomous system, to complete the research process including literature review, experimentation, and report writing while incorporating human feedback and guidance.

💬 Research Conclusions:

– Agent Laboratory driven by o1-preview generates the best research outcomes.

– The generated machine learning code achieves state-of-the-art performance compared with existing methods.

– Human feedback at each stage significantly enhances research quality.

– Achieves an 84% reduction in research expenses compared to previous autonomous methods.

👉 Paper link: https://huggingface.co/papers/2501.04227

5. LLM4SR: A Survey on Large Language Models for Scientific Research

🔑 Keywords: Large Language Models, scientific research, hypothesis discovery, experiment planning, scientific writing

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore how Large Language Models (LLMs) are revolutionizing the scientific research process across various stages.

🛠️ Research Methods:

– Systematic survey of LLM applications in hypothesis discovery, experiment planning and implementation, scientific writing, and peer reviewing.

💬 Research Conclusions:

– LLMs have transformative potential in scientific research, showcasing task-specific methodologies and evaluation benchmarks while identifying challenges and proposing future research directions.

👉 Paper link: https://huggingface.co/papers/2501.04306

6. InfiGUIAgent: A Multimodal Generalist GUI Agent with Native Reasoning and Reflection

🔑 Keywords: GUI Agents, Multimodal Large Language Models, Task Automation, Native Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance task automation on computing devices, like computers and mobile phones, using GUI Agents powered by Multimodal Large Language Models (MLLMs).

🛠️ Research Methods:

– The researchers introduced InfiGUIAgent, employing a two-stage supervised fine-tuning pipeline to develop GUI understanding, grounding, and advanced reasoning skills.

💬 Research Conclusions:

– InfiGUIAgent showed competitive performance on various GUI benchmarks, emphasizing the importance of native reasoning abilities in improving GUI interaction for automation tasks.

👉 Paper link: https://huggingface.co/papers/2501.04575

7. GeAR: Generation Augmented Retrieval

🔑 Keywords: Document retrieval, Semantic similarity, Fusion and decoding, Fine-grained information, Large language models

💡 Category: Natural Language Processing

🌟 Research Objective:

– To improve document retrieval accuracy by addressing the limitations of scalar semantic similarity and emphasizing fine-grained semantic relationships.
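
For context, the scalar similarity mentioned above is typically a single number, such as the cosine similarity between a query embedding and a document embedding. The following sketch shows that baseline only, not GeAR itself. The function names and the tiny hand-written vectors are assumptions for illustration, and a real system would obtain the vectors from an encoder model.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_documents(query_vec: Sequence[float],
                   doc_vecs: Dict[str, Sequence[float]]) -> List[str]:
    """Return document ids sorted by descending similarity to the query."""
    scores = {doc_id: cosine_similarity(query_vec, vec)
              for doc_id, vec in doc_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)


ranking = rank_documents(
    [1.0, 0.0],
    {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]},
)
```

Each document is reduced to one score, so the ranking says which document is closest but not which passage inside it matches the query.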

🛠️ Research Methods:

– Introduction of the Generation Augmented Retrieval (GeAR) method, whose fusion and decoding modules generate relevant text from documents based on a fused representation of the query and the document, without adding computational burden when used as a retriever.

💬 Research Conclusions:

– GeAR demonstrates strong retrieval and localization performance across multiple scenarios, providing new insights into retrieval results interpretation. Future research will be facilitated by the release of the framework’s code, data, and models.

👉 Paper link: https://huggingface.co/papers/2501.02772

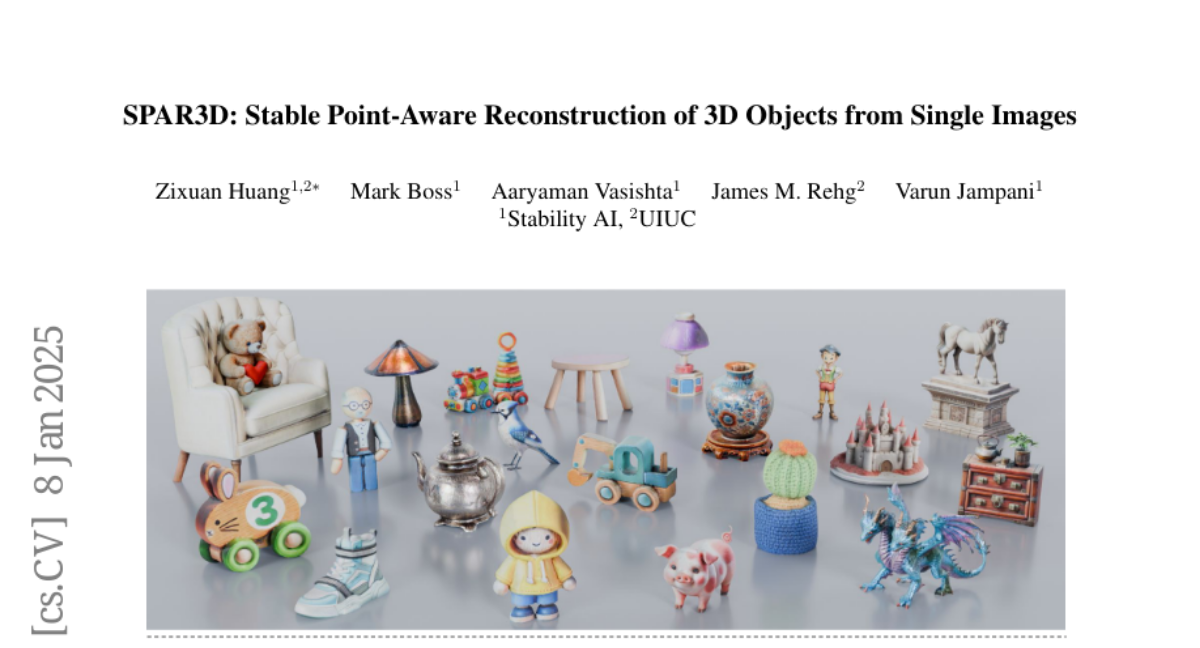

8. SPAR3D: Stable Point-Aware Reconstruction of 3D Objects from Single Images

🔑 Keywords: 3D object reconstruction, regression, generative modeling, SPAR3D, point clouds

💡 Category: Computer Vision

🌟 Research Objective:

– To address the challenges of single-image 3D object reconstruction by integrating regression-based and generative modeling approaches in a novel two-stage design.

🛠️ Research Methods:

– Developed SPAR3D, which combines a lightweight point diffusion model for generating sparse 3D point clouds and a subsequent mesh creation stage using these point clouds and input images to achieve detailed reconstructions.

💬 Research Conclusions:

– SPAR3D significantly surpasses previous state-of-the-art methods in performance, offering a fast inference speed of 0.7 seconds and allowing interactive user edits through intermediate point cloud representation.

👉 Paper link: https://huggingface.co/papers/2501.04689

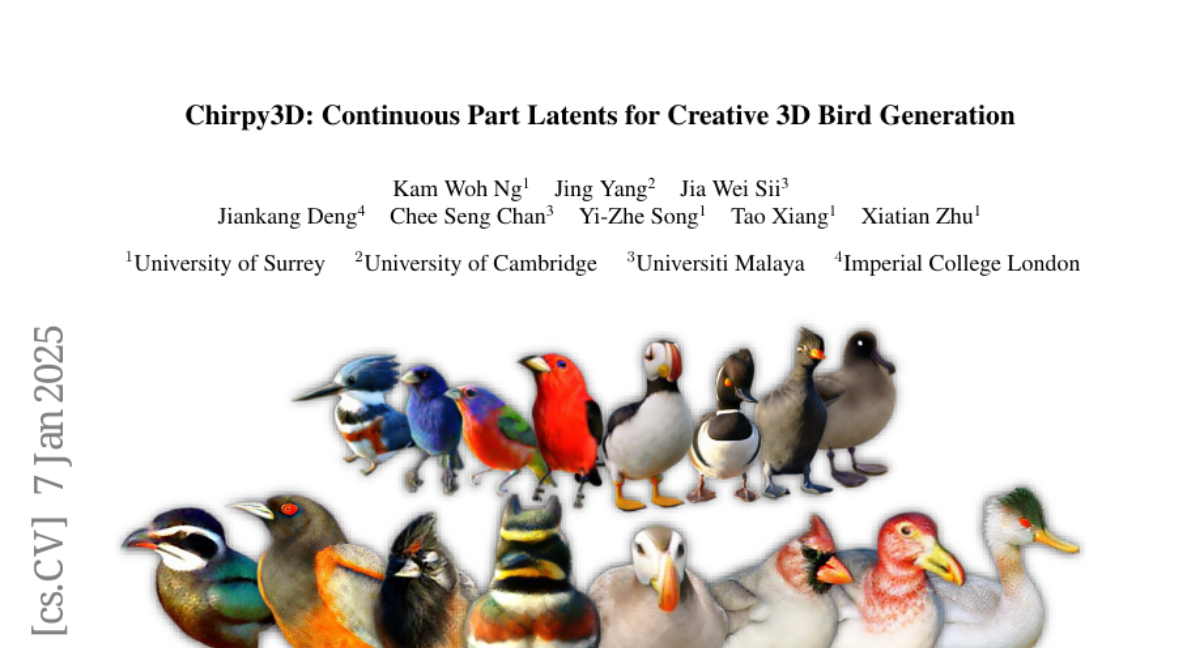

9. Chirpy3D: Continuous Part Latents for Creative 3D Bird Generation

🔑 Keywords: 3D generation, fine-grained understanding, multi-view diffusion

💡 Category: Generative Models

🌟 Research Objective:

– To advance 3D generation techniques, enabling both intricate details and creative novelty in 3D objects.

🛠️ Research Methods:

– Utilizing multi-view diffusion and modeling part latents as continuous distributions, alongside a self-supervised feature consistency loss.

💬 Research Conclusions:

– Successfully developed the first system capable of generating novel 3D objects with species-specific details, with demonstrated applications on birds, extendable beyond current examples.

👉 Paper link: https://huggingface.co/papers/2501.04144

10. DPO Kernels: A Semantically-Aware, Kernel-Enhanced, and Divergence-Rich Paradigm for Direct Preference Optimization

🔑 Keywords: Large Language Models, Direct Preference Optimization, Kernel Methods, Alignment, Robust Generalization

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to enhance the alignment of Large Language Models with diverse values and preferences by addressing the limitations of fixed divergences and limited feature transformations.

🛠️ Research Methods:

– Proposed DPO-Kernels, integrating Kernel Methods such as polynomial, RBF, and spectral kernels for richer transformations. Introduced a hybrid loss for embedding-based and probability-based objectives, alternative divergences for stability, data-driven selection metrics for optimal kernel-divergence pairing, and a hierarchical mixture of kernels.
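
To make the ingredients concrete, the sketch below shows the standard DPO loss for a single preference pair, plus textbook definitions of the RBF and polynomial kernels. How the paper combines kernels with the loss is not reproduced here. The function names, the default `beta`, `gamma`, `degree`, and `coef0` values, and the plain-list vector representation are all assumptions for illustration.

```python
import math
from typing import Sequence


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one pair: -log(sigmoid(margin))."""
    margin = beta * ((logp_chosen - ref_logp_chosen)
                     - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(m)) equals softplus(-m); branch to avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def rbf_kernel(x: Sequence[float], y: Sequence[float], gamma: float = 1.0) -> float:
    """RBF kernel: exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def polynomial_kernel(x: Sequence[float], y: Sequence[float],
                      degree: int = 2, coef0: float = 1.0) -> float:
    """Polynomial kernel: (x . y + coef0) ** degree."""
    dot = sum(a * b for a, b in zip(x, y))
    return (dot + coef0) ** degree
```

The loss shrinks as the policy raises the chosen response's log-probability relative to the reference model and lowers the rejected one's. The kernels offer nonlinear similarity measures between embedding vectors.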

💬 Research Conclusions:

– DPO-Kernels demonstrated state-of-the-art performance on 12 datasets in areas like factuality, safety, and reasoning. It provides robust generalization and serves as a comprehensive resource for further alignment research in Large Language Models.

👉 Paper link: https://huggingface.co/papers/2501.03271

11. EpiCoder: Encompassing Diversity and Complexity in Code Generation

🔑 Keywords: Instruction Tuning, Code LLMs, Feature Tree, EpiCoder, Data Synthesis

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper aims to optimize code large language models (code LLMs) by introducing a novel feature tree-based synthesis framework that enhances data complexity and diversity.

🛠️ Research Methods:

– Employed a feature tree-based framework to model semantic relationships between code elements, allowing for nuanced data generation and fine-tuning of base models, resulting in the EpiCoder series.

💬 Research Conclusions:

– The approach demonstrated state-of-the-art performance in code synthesis and showed significant potential in handling complex repository-level code data, assessed through software engineering principles and LLM-as-a-judge methods.

👉 Paper link: https://huggingface.co/papers/2501.04694

12. Multi-task retriever fine-tuning for domain-specific and efficient RAG

🔑 Keywords: Retrieval-Augmented Generation, Large Language Models, fine-tune, encoder, scalability

💡 Category: Natural Language Processing

🌟 Research Objective:

– Address limitations in Large Language Models by enhancing Retrieval-Augmented Generation applications through fine-tuning retrievers.

🛠️ Research Methods:

– Instruction fine-tune a small retriever encoder on diverse domain-specific tasks to serve various use cases while maintaining low cost, scalability, and speed.

💬 Research Conclusions:

– The encoder demonstrates generalization to out-of-domain settings and unseen retrieval tasks in real-world enterprise scenarios.

👉 Paper link: https://huggingface.co/papers/2501.04652