AI Native Daily Paper Digest – 20250114

1. The Lessons of Developing Process Reward Models in Mathematical Reasoning

🔑 Keywords: Process Reward Models, Large Language Models, Monte Carlo estimation, Evaluation Framework

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Investigate and address challenges in developing effective Process Reward Models (PRMs) for supervising mathematical reasoning in Large Language Models (LLMs).

🛠️ Research Methods:

– Conduct extensive experiments using Monte Carlo estimation and compare its effectiveness against LLM-as-a-judge and human annotation. Implement a consensus filtering mechanism to integrate these methodologies and propose a comprehensive evaluation framework.

💬 Research Conclusions:

– Demonstrated that traditional Monte Carlo estimation for PRMs underperforms compared to other methods. Identified biases in existing evaluation strategies and developed a new state-of-the-art PRM that enhances model performance and data efficiency, offering guidelines for future research in process supervision models.

👉 Paper link: https://huggingface.co/papers/2501.07301

2. Tensor Product Attention Is All You Need

🔑 Keywords: Tensor Product Attention, memory efficiency, sequence modeling, T6, RoPE

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to develop a novel attention mechanism, Tensor Product Attention (TPA), to reduce memory overhead while maintaining model quality in language models handling longer input sequences.

🛠️ Research Methods:

– The researchers propose using tensor decompositions to represent queries, keys, and values compactly, integrating contextual factorization and RoPE, and introduce the Tensor ProducT ATTenTion Transformer (T6) for empirical evaluation against standard Transformer baselines.

💬 Research Conclusions:

– T6 demonstrates superior performance in language modeling tasks compared to standard Transformer models, achieving both improved quality and significant memory efficiency, which enables processing longer sequences with fixed resources, addressing scalability challenges.

👉 Paper link: https://huggingface.co/papers/2501.06425

3. BIOMEDICA: An Open Biomedical Image-Caption Archive, Dataset, and Vision-Language Models Derived from Scientific Literature

🔑 Keywords: Vision-Language Models, BIOMEDICA, CLIP-style models, zero-shot classification, image-text retrieval

💡 Category: AI in Healthcare

🌟 Research Objective:

– The study aims to fill the gap in creating generalist biomedical vision-language models by introducing BIOMEDICA, a scalable framework for producing large-scale, annotated, and publicly accessible multimodal datasets in the biomedical field.

🛠️ Research Methods:

– BIOMEDICA extracts and annotates over 24 million image-text pairs from the PubMed Central Open Access subset. The framework supports continuous pre-training of CLIP-style models via streaming, reducing the need for extensive local data storage.

💬 Research Conclusions:

– The models trained on the BIOMEDICA dataset achieve state-of-the-art performance across 40 biomedical tasks, with significant improvements in zero-shot classification and image-text retrieval, all while using significantly less computational resources.

👉 Paper link: https://huggingface.co/papers/2501.07171

4. $\text{Transformer}^2$: Self-adaptive LLMs

🔑 Keywords: Self-adaptive LLMs, real-time adaptation, reinforcement learning, dynamic AI systems

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to address the limitations of traditional fine-tuning methods by developing \implname, a self-adaptive framework for large language models (LLMs) to adapt to unseen tasks efficiently and in real-time.

🛠️ Research Methods:

– \implname utilizes a two-pass mechanism during inference that includes a dispatch system to identify task properties and employs reinforcement learning-trained “expert” vectors for task-specific behavior adjustment.

💬 Research Conclusions:

– The framework outperforms existing methods like LoRA in terms of efficiency and fewer parameters, demonstrating adaptability and versatility across various LLM architectures and modalities, including vision-language tasks, marking a step towards dynamic AI systems.

👉 Paper link: https://huggingface.co/papers/2501.06252

5. MinMo: A Multimodal Large Language Model for Seamless Voice Interaction

🔑 Keywords: Multimodal Large Language Models, Voice Interaction, Duplex Communication, Speech Generation, AI Native

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce MinMo, a Multimodal Large Language Model, to enhance seamless voice interactions by addressing limitations of previous models.

🛠️ Research Methods:

– Employ multi-stage training for various alignments with 1.4 million hours of speech data to achieve state-of-the-art performance.

💬 Research Conclusions:

– MinMo achieves advanced capabilities in voice comprehension and generation and offers full-duplex communication with improved instruction-following for nuanced speech generation.

👉 Paper link: https://huggingface.co/papers/2501.06282

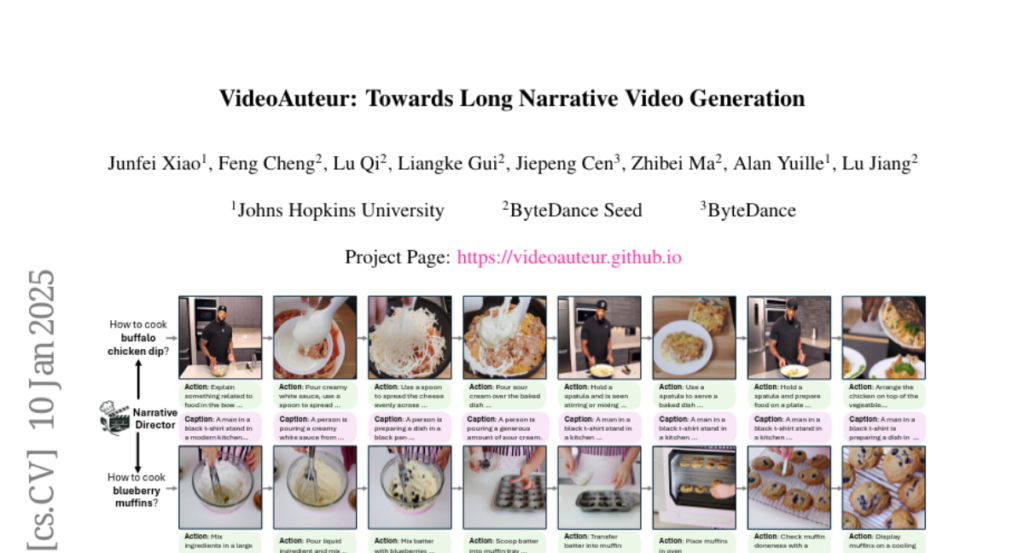

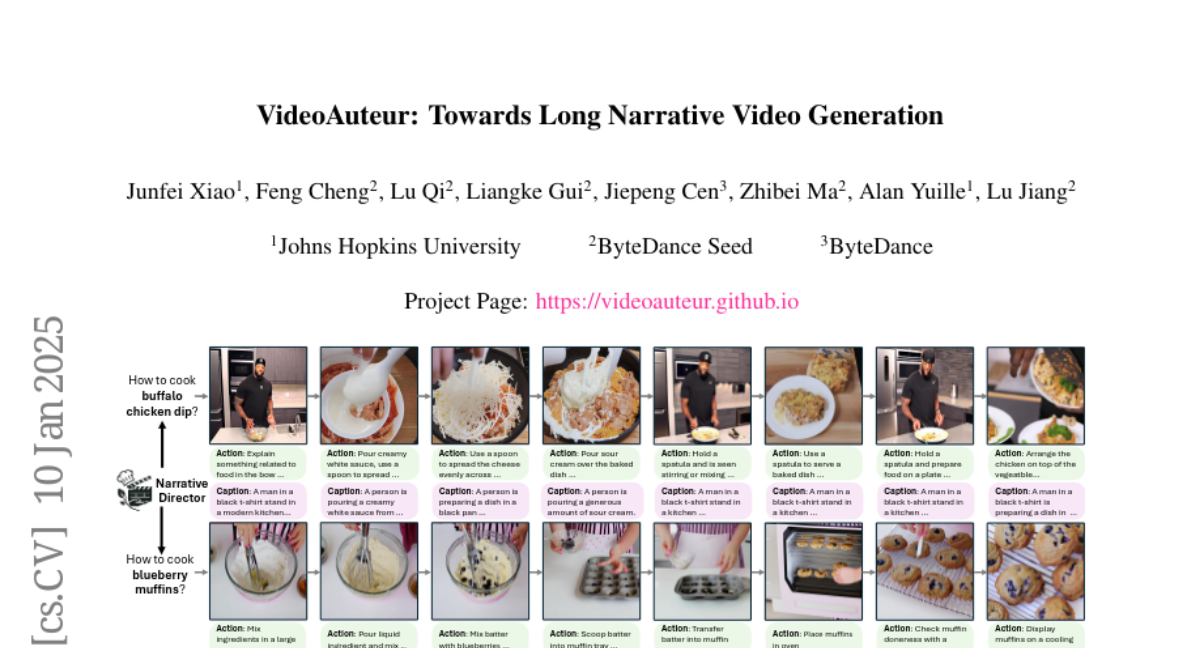

6. VideoAuteur: Towards Long Narrative Video Generation

🔑 Keywords: video generation, long-form narrative, Vision-Language Models, visual embeddings

💡 Category: Generative Models

🌟 Research Objective:

– To advance the generation of long-form narratives in cooking videos by addressing challenges in producing coherent and informative long video sequences.

🛠️ Research Methods:

– Utilization of a large-scale cooking video dataset and validation of this dataset through state-of-the-art Vision-Language Models and video generation models.

– Introduction of a Long Narrative Video Director to improve visual and semantic coherence with finetuning techniques that integrate text and image embeddings.

💬 Research Conclusions:

– The proposed method significantly improves the generation of visually detailed and semantically aligned keyframes, enhancing overall video quality.

👉 Paper link: https://huggingface.co/papers/2501.06173

7. O1 Replication Journey — Part 3: Inference-time Scaling for Medical Reasoning

🔑 Keywords: inference-time scaling, large language models, medical reasoning, hypothetico-deductive method

💡 Category: AI in Healthcare

🌟 Research Objective:

– To explore the potential of inference-time scaling in large language models for enhancing medical reasoning tasks including diagnostic decision-making and treatment planning.

🛠️ Research Methods:

– Conducted extensive experiments on medical benchmarks like MedQA, Medbullets, and JAMA Clinical Challenges to assess performance improvements and reasoning chain complexity.

💬 Research Conclusions:

– Findings reveal performance improvements ranging from 6%-11% with increased inference time, correlation between task complexity and reasoning chain length, and adherence to hypothetico-deductive method principles in differential diagnoses.

👉 Paper link: https://huggingface.co/papers/2501.06458

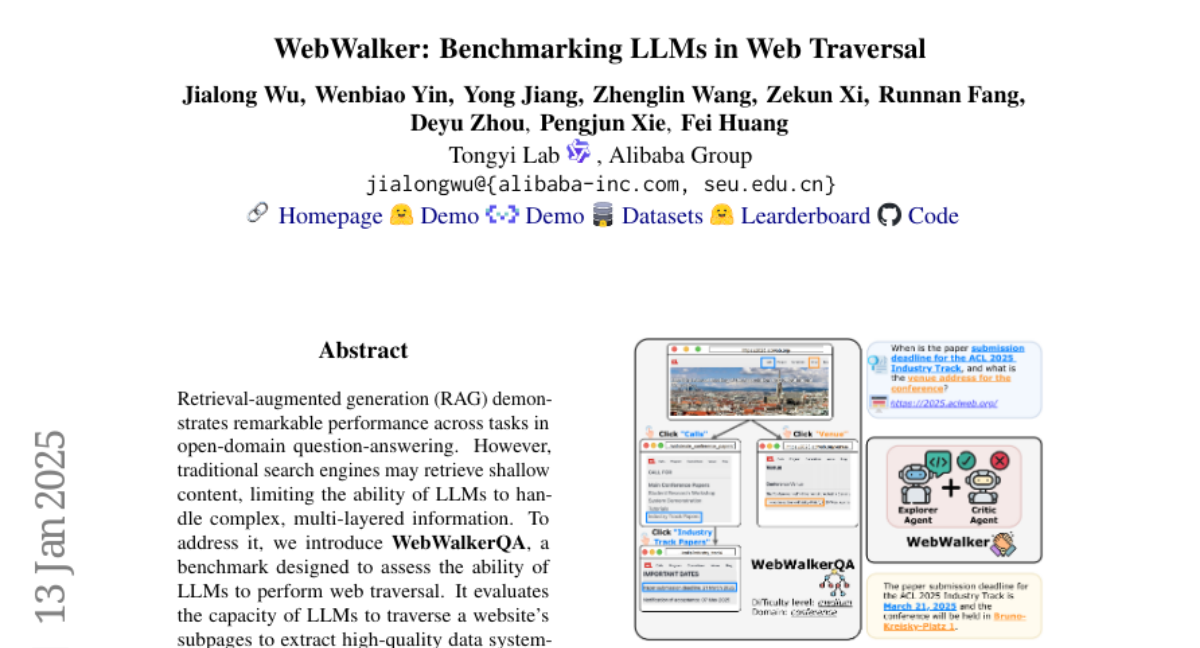

8. WebWalker: Benchmarking LLMs in Web Traversal

🔑 Keywords: Retrieval-Augmented Generation, LLMs, WebWalkerQA, Web Navigation

💡 Category: Natural Language Processing

🌟 Research Objective:

– To evaluate the capability of large language models (LLMs) in performing web traversal for extracting high-quality data.

🛠️ Research Methods:

– Introduction of WebWalker, a multi-agent framework using an explore-critic paradigm to mimic human-like web navigation.

💬 Research Conclusions:

– WebWalkerQA benchmark is designed to be challenging and effective in demonstrating the combined capabilities of RAG and WebWalker in real-world scenarios.

👉 Paper link: https://huggingface.co/papers/2501.07572

9. SPAM: Spike-Aware Adam with Momentum Reset for Stable LLM Training

🔑 Keywords: Large Language Models, Gradient Spikes, Optimization, Spike-Aware Adam, Memory-Efficient

💡 Category: Natural Language Processing

🌟 Research Objective:

– Investigate and address gradient spikes in the training of Large Language Models (LLMs) to enhance training stability and resource efficiency.

🛠️ Research Methods:

– Introduce Spike-Aware Adam with Momentum Reset (SPAM) optimizer to counteract gradient spikes through momentum reset and gradient clipping.

– Conduct extensive experiments across various tasks and model sizes, including pre-training, fine-tuning, reinforcement learning, and time series forecasting.

💬 Research Conclusions:

– SPAM optimizer consistently outperforms Adam and its variants in terms of stability and efficiency.

– Demonstrates superior performance under memory constraints compared to state-of-the-art memory-efficient optimizers.

– Underscores the importance of addressing gradient spikes for efficient LLM training.

👉 Paper link: https://huggingface.co/papers/2501.06842

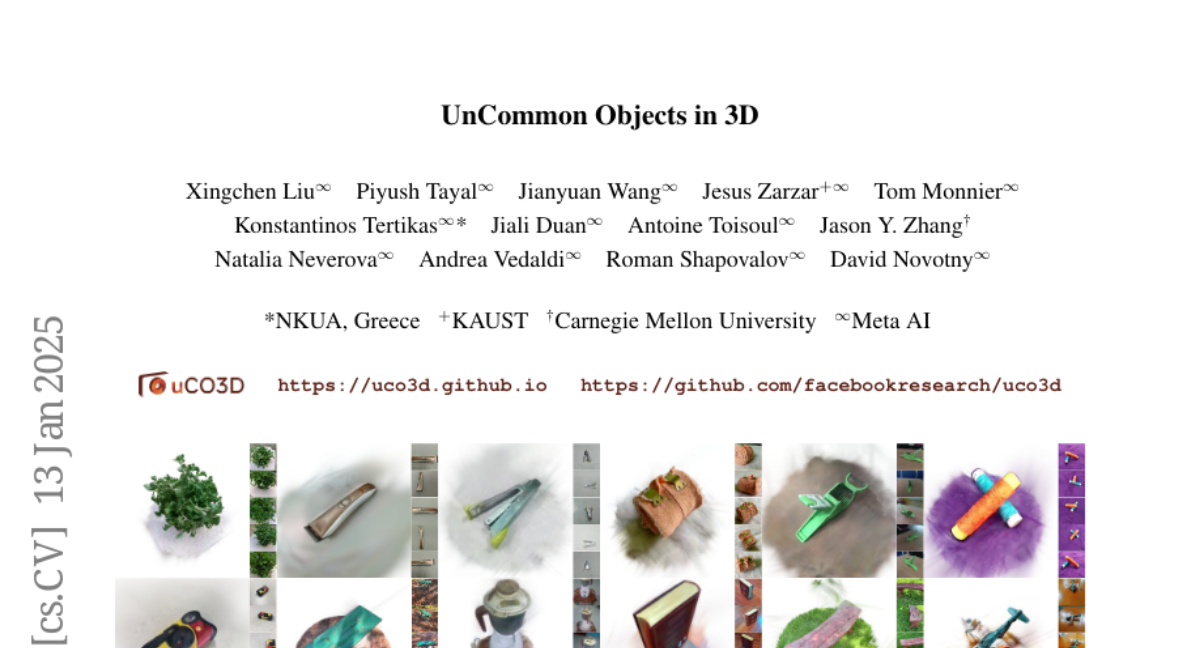

10. UnCommon Objects in 3D

🔑 Keywords: 3D deep learning, 3D generative AI, high-resolution videos, object categories, annotations

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce a new dataset, Uncommon Objects in 3D (uCO3D), for enhancing 3D deep learning and generative AI.

🛠️ Research Methods:

– Collection and annotation of high-resolution videos covering over 1,000 object categories with full-360° coverage.

💬 Research Conclusions:

– uCO3D outperforms existing datasets like MVImgNet and CO3Dv2 in terms of diversity, quality, and effectiveness in training large 3D models.

👉 Paper link: https://huggingface.co/papers/2501.07574

11. ChemAgent: Self-updating Library in Large Language Models Improves Chemical Reasoning

🔑 Keywords: Chemical reasoning, Large language models, ChemAgent, Structured collection, Experience improvement

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to enhance the performance of large language models (LLMs) in chemical reasoning by introducing ChemAgent, a framework that uses a dynamic, self-updating library for improved task decomposition and solution generation.

🛠️ Research Methods:

– The method involves decomposing chemical tasks into sub-tasks and forming them into a structured collection, called memory, to facilitate effective task solving and continuous improvement based on past experiences.

💬 Research Conclusions:

– ChemAgent demonstrates significant performance improvements of up to 46% in chemical reasoning tasks over existing methods, with potential applications in drug discovery and materials science.

👉 Paper link: https://huggingface.co/papers/2501.06590

12. Evaluating Sample Utility for Data Selection by Mimicking Model Weights

🔑 Keywords: Foundation models, Data selection, Mimic Score, Dataset quality, CLIP models

💡 Category: Foundations of AI

🌟 Research Objective:

– To introduce the Mimic Score as a new data quality metric that identifies useful data samples for training new models by evaluating their alignment with a pretrained reference model.

🛠️ Research Methods:

– Development of Grad-Mimic, a data selection framework utilizing the Mimic Score to filter and prioritize valuable samples by assessing their gradient alignment.

– Empirical evaluation across six image datasets to measure performance improvements and effectiveness of the proposed method.

💬 Research Conclusions:

– Mimic Score-guided training consistently boosts performance in image datasets and enhances CLIP model efficacy.

– The proposed method surpasses existing filtering techniques in accurately estimating and improving dataset quality.

👉 Paper link: https://huggingface.co/papers/2501.06708