AI Native Daily Paper Digest – 20250115

1. MiniMax-01: Scaling Foundation Models with Lightning Attention

🔑 Keywords: MiniMax-01, Lightning Attention, Mixture of Experts, Vision-Language Model

💡 Category: Generative Models

🌟 Research Objective:

– To introduce the MiniMax-01 series that offers superior capabilities in processing longer contexts compared to top-tier models.

🛠️ Research Methods:

– Integrated lightning attention with Mixture of Experts (MoE) featuring 32 experts and optimized parallel strategy.

– Developed efficient computation-communication overlap techniques for handling hundreds of billions of parameters.

💬 Research Conclusions:

– MiniMax-01 series matches state-of-the-art models such as GPT-4o and Claude-3.5-Sonnet but provides 20-32 times longer context window.

– Publicly released models available for further study and application.

👉 Paper link: https://huggingface.co/papers/2501.08313

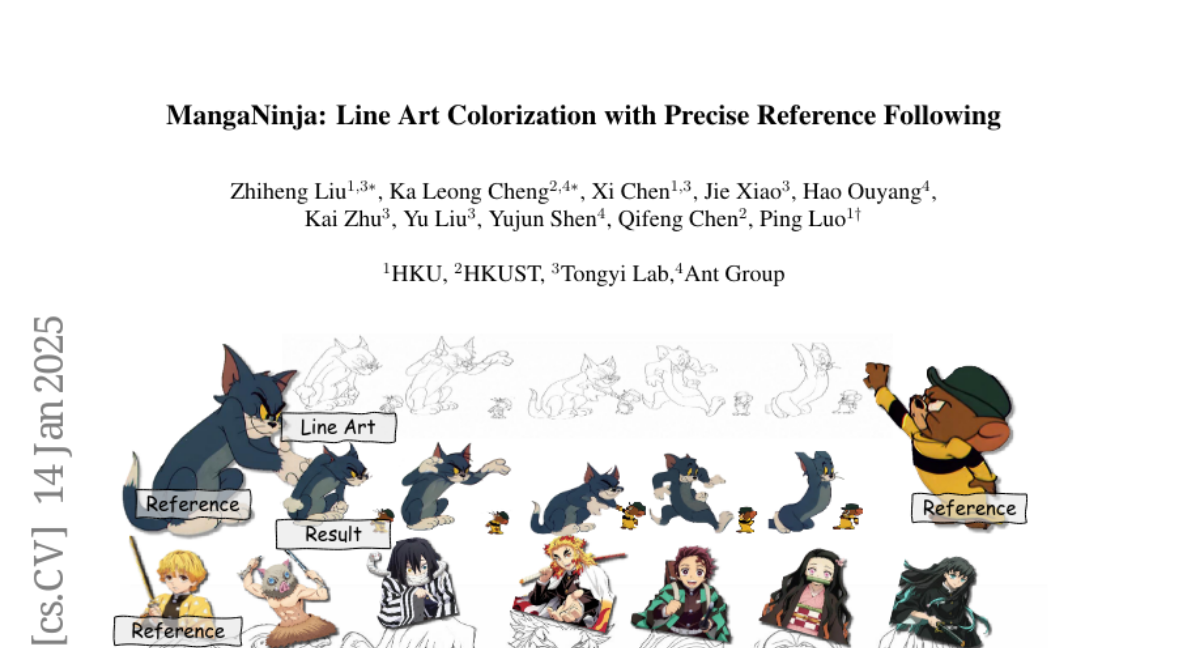

2. MangaNinja: Line Art Colorization with Precise Reference Following

🔑 Keywords: Diffusion models, MangaNinjia, line art colorization, point-driven control, patch shuffling

💡 Category: Generative Models

🌟 Research Objective:

– To enhance the accuracy of reference-guided line art colorization with MangaNinjia by implementing novel techniques.

🛠️ Research Methods:

– Integration of a patch shuffling module for better correspondence learning between reference images and target art.

– Introduction of a point-driven control scheme for precise color matching.

💬 Research Conclusions:

– Experiments confirm MangaNinjia’s superior performance in colorizing line art, demonstrating advantages over existing methods, especially in challenging scenarios.

👉 Paper link: https://huggingface.co/papers/2501.08332

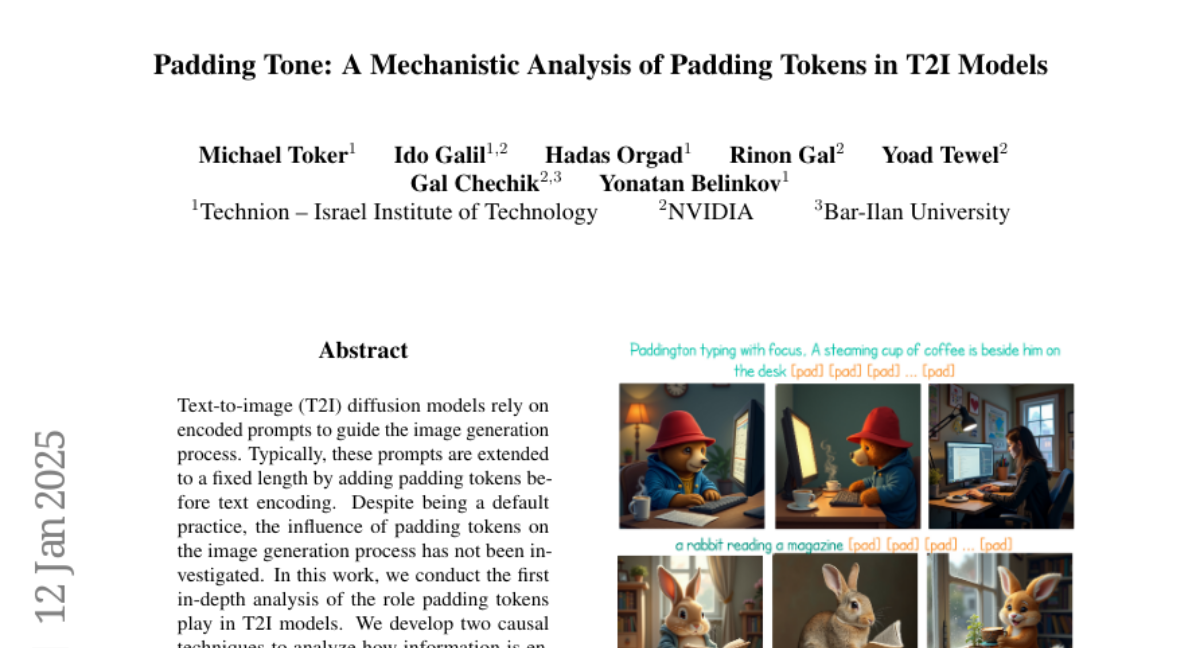

3. Padding Tone: A Mechanistic Analysis of Padding Tokens in T2I Models

🔑 Keywords: Text-to-image, Diffusion models, Padding tokens, Image generation

💡 Category: Generative Models

🌟 Research Objective:

– To analyze the impact of padding tokens on the image generation process in text-to-image diffusion models.

🛠️ Research Methods:

– Developed two causal techniques to assess how information from tokens is encoded throughout the T2I pipeline.

💬 Research Conclusions:

– Found that padding tokens can influence the model’s output in three scenarios: during text encoding, during diffusion, or being ignored, depending on model architecture and training.

– Identified key relationships between the effects of padding tokens and the model’s architecture and training process, offering insights for future T2I model design and training practices.

👉 Paper link: https://huggingface.co/papers/2501.06751

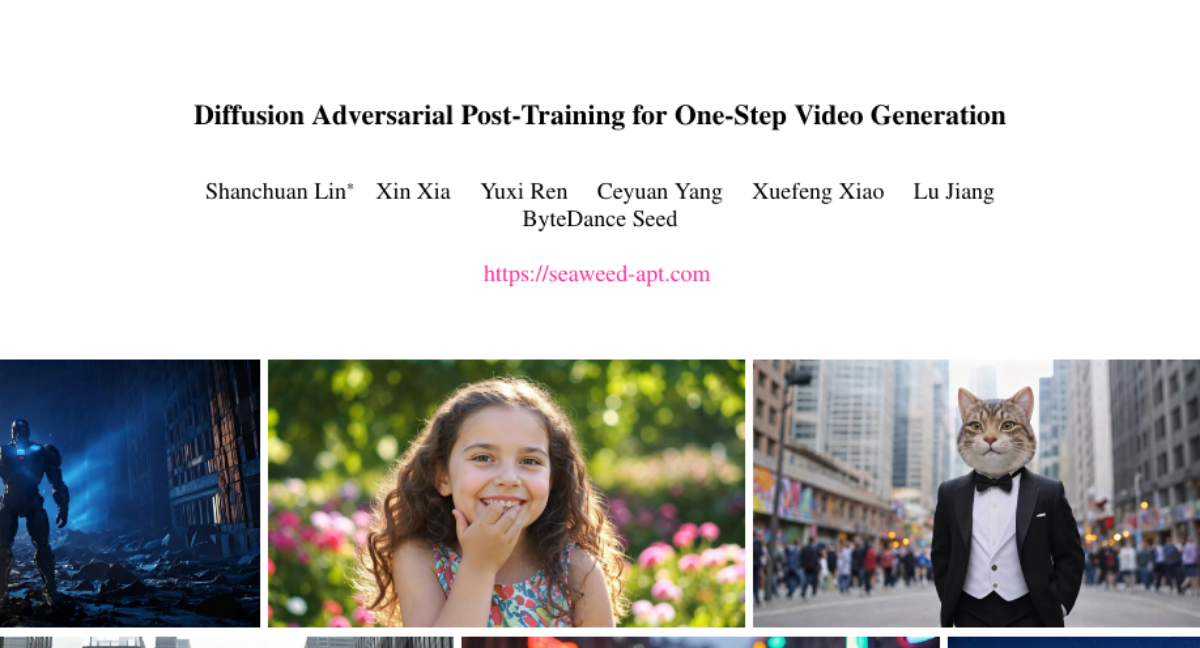

4. Diffusion Adversarial Post-Training for One-Step Video Generation

🔑 Keywords: Diffusion models, Adversarial Post-Training, Video generation

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance the efficiency of one-step video generation while maintaining quality, following on from diffusion pre-training.

🛠️ Research Methods:

– Introduced Adversarial Post-Training (APT) with architectural and procedural improvements, along with an approximated R1 regularization objective for stability and quality.

💬 Research Conclusions:

– The proposed model, Seaweed-APT, achieves real-time video generation of 2-second, 1280×720, 24fps videos and high-quality 1024px images in a single forward evaluation step.

👉 Paper link: https://huggingface.co/papers/2501.08316

5. A Multi-Modal AI Copilot for Single-Cell Analysis with Instruction Following

🔑 Keywords: Large language models, scRNA-seq, AI copilot, Multi-Modal Learning, InstructCell

💡 Category: AI in Healthcare

🌟 Research Objective:

– To address the inefficiencies of conventional single-cell RNA sequencing interaction tools by developing InstructCell, a multi-modal AI copilot.

🛠️ Research Methods:

– Construction of a comprehensive multi-modal instruction dataset pairing text-based instructions with scRNA-seq profiles. Development of a multi-modal cell language architecture to process both modalities.

💬 Research Conclusions:

– InstructCell improves accessibility and efficiency in single-cell data analysis, surpassing current models and facilitating deeper biological insights.

👉 Paper link: https://huggingface.co/papers/2501.08187

6. Omni-RGPT: Unifying Image and Video Region-level Understanding via Token Marks

🔑 Keywords: Omni-RGPT, multimodal large language model, region-level comprehension, Token Mark, RegVID-300k

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces Omni-RGPT, a multimodal large language model focused on enhancing region-level comprehension for both images and videos.

🛠️ Research Methods:

– Utilizes Token Mark to highlight target regions within the visual feature space, connecting visual and text tokens through spatial prompts and incorporating these into video comprehension tasks.

– Introduces a large-scale dataset (RegVID-300k) for region-level video instruction.

💬 Research Conclusions:

– Achieves state-of-the-art results on image and video commonsense reasoning benchmarks with robust performance in captioning and referring expression comprehension tasks.

👉 Paper link: https://huggingface.co/papers/2501.08326

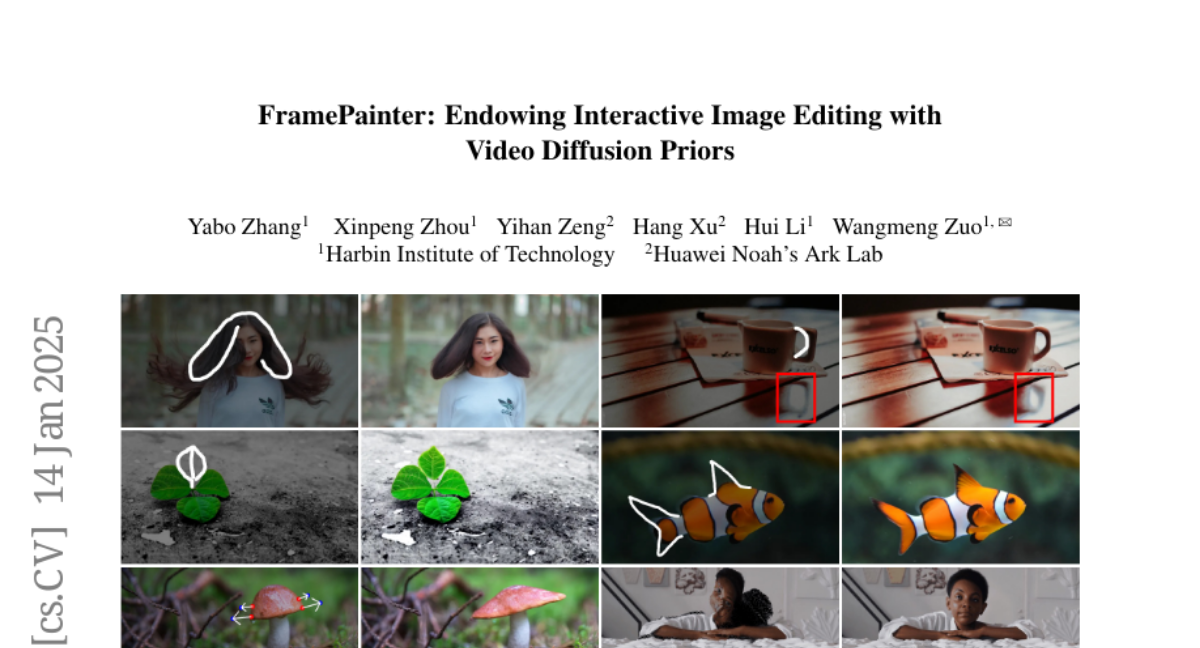

7. FramePainter: Endowing Interactive Image Editing with Video Diffusion Priors

🔑 Keywords: Interactive image editing, video diffusion, FramePainter, temporal consistency, visual interaction

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to reformulate interactive image editing as an image-to-video generation problem to leverage video diffusion priors for reducing training costs and ensuring temporal consistency.

🛠️ Research Methods:

– Introduced FramePainter, which utilizes Stable Video Diffusion with a sparse control encoder and proposes matching attention for handling large motion between frames while maintaining dense correspondence.

💬 Research Conclusions:

– FramePainter demonstrates superior performance over previous methods with minimal training data and is highly effective in diverse editing scenarios, achieving seamless and coherent image edits and generalizing well to novel contexts.

👉 Paper link: https://huggingface.co/papers/2501.08225

8. Democratizing Text-to-Image Masked Generative Models with Compact Text-Aware One-Dimensional Tokens

🔑 Keywords: Image Tokenizers, Text-to-Image Generative Models, TA-TiTok, MaskGen, Open Data

💡 Category: Generative Models

🌟 Research Objective:

– To introduce Text-Aware Transformer-based 1-Dimensional Tokenizer (TA-TiTok) to improve the efficiency and scalability of image tokenizers in text-to-image generative models.

🛠️ Research Methods:

– Developed TA-TiTok, integrating textual information during the decoding stage and employing a simplified one-stage training process, avoiding complex distillation methods.

💬 Research Conclusions:

– TA-TiTok and MaskGen, trained on open data, demonstrate performance on par with models trained on private datasets, promoting accessibility and democratization in the text-to-image generative model field.

👉 Paper link: https://huggingface.co/papers/2501.07730

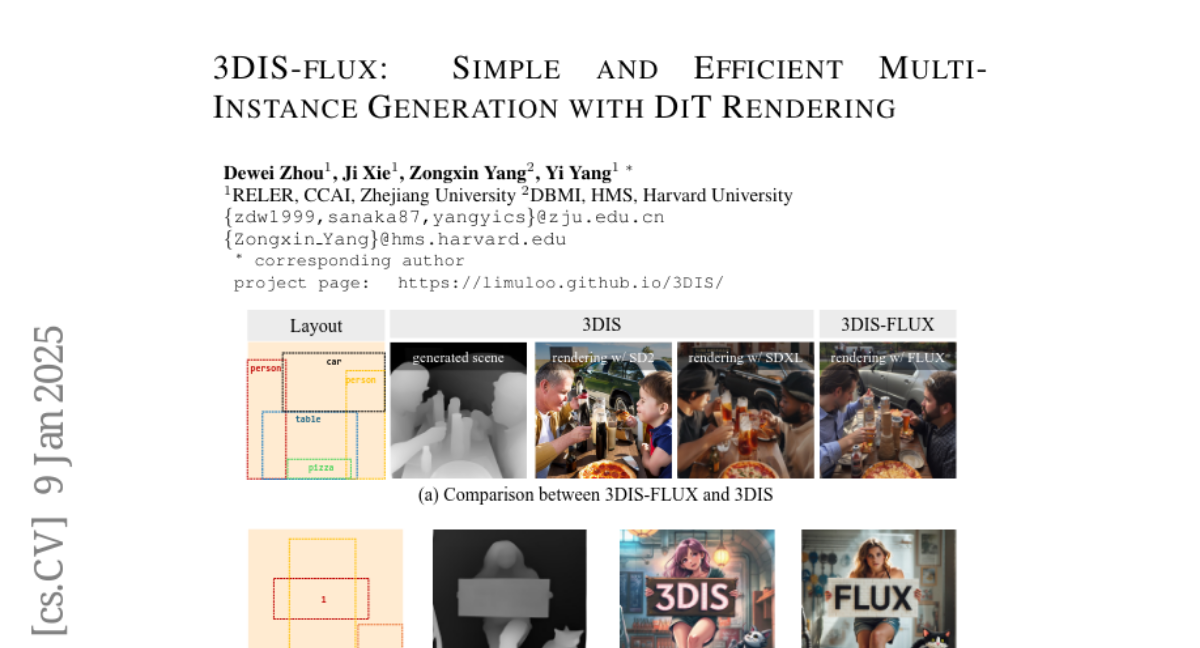

9. 3DIS-FLUX: simple and efficient multi-instance generation with DiT rendering

🔑 Keywords: Multi-instance generation (MIG), 3DIS, FLUX, Depth map controlled image generation

💡 Category: Generative Models

🌟 Research Objective:

– To present an advanced method for controllable text-to-image generation by integrating the FLUX model into the existing 3DIS framework for better rendering capabilities.

🛠️ Research Methods:

– Introducing Depth-Driven Decoupled Instance Synthesis (3DIS) which divides the multi-instance generation process into a two-phase approach: depth-based scene construction and detail rendering using pre-trained models.

– Leveraging the FLUX.1-Depth-dev model for precise depth map controlled image generation by manipulating the Attention Mask in Joint Attention mechanism.

💬 Research Conclusions:

– 3DIS-FLUX, which incorporates the FLUX model, exceeds the original 3DIS method and other state-of-the-art adapter-based methods in terms of performance and image quality.

👉 Paper link: https://huggingface.co/papers/2501.05131

10. PokerBench: Training Large Language Models to become Professional Poker Players

🔑 Keywords: PokerBench, Large Language Models, Poker-playing, Fine-tuning, Game Theory

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce PokerBench as a benchmark to evaluate the poker-playing abilities of large language models (LLMs) in complex, strategic games.

🛠️ Research Methods:

– Developed a dataset of 11,000 poker scenarios in collaboration with trained poker players and evaluated models such as GPT-4, ChatGPT 3.5, Llama, and Gemma series.

💬 Research Conclusions:

– Initial performance of LLMs in poker was suboptimal; however, fine-tuning led to marked improvements. Higher PokerBench scores correspond to higher win rates, though limitations exist, suggesting the need for advanced training methodologies.

👉 Paper link: https://huggingface.co/papers/2501.08328

11. HALoGEN: Fantastic LLM Hallucinations and Where to Find Them

🔑 Keywords: Generative Models, Hallucinations, HALoGEN, Large Language Models, Error Classification

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to create a comprehensive benchmark called HALoGEN to evaluate hallucinations in generative large language models across multiple domains.

🛠️ Research Methods:

– The research involved generating 10,923 prompts and developing automatic high-precision verifiers to evaluate the accuracy of ~150,000 generations from 14 language models across nine domains.

💬 Research Conclusions:

– The findings reveal a high occurrence of hallucinations in even the best-performing models, with up to 86% of generated atomic facts being incorrect in certain domains. The study introduces a novel error classification to distinguish between hallucination types.

👉 Paper link: https://huggingface.co/papers/2501.08292

12. Tarsier2: Advancing Large Vision-Language Models from Detailed Video Description to Comprehensive Video Understanding

🔑 Keywords: Large Vision-Language Model, Video Description, Temporal Alignment, AI Native, Ethical AI

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Tarsier2, a state-of-the-art LVLM, for generating detailed and accurate video descriptions and enhancing general video understanding.

🛠️ Research Methods:

– Scale pre-training data from 11M to 40M video-text pairs, perform fine-grained temporal alignment during supervised fine-tuning, and use model-based sampling with DPO training for optimization.

💬 Research Conclusions:

– Tarsier2-7B consistently outperforms leading models including GPT-4o and Gemini 1.5 Pro in video description tasks and sets new state-of-the-art results across 15 public benchmarks, demonstrating its versatility and robustness.

👉 Paper link: https://huggingface.co/papers/2501.07888

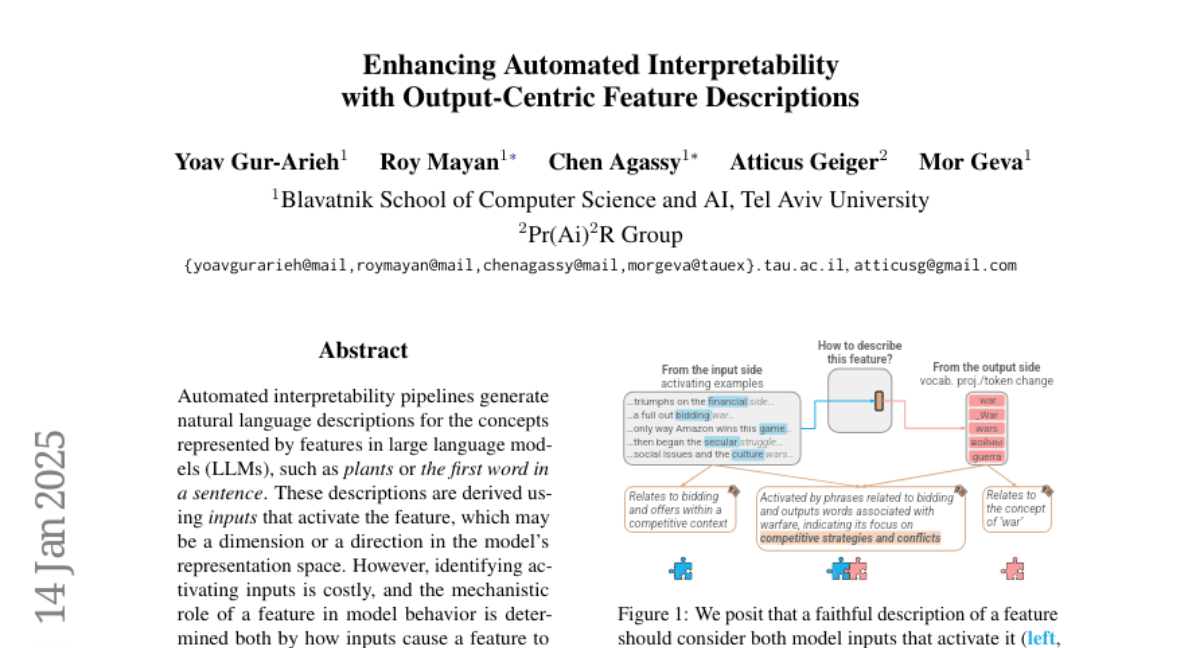

13. Enhancing Automated Interpretability with Output-Centric Feature Descriptions

🔑 Keywords: Automated interpretability, Large language models, Causal effect, Feature descriptions, Output-centric

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose efficient, output-centric methods for automatically generating natural language descriptions for features in large language models that better capture the causal effect of a feature on model outputs.

🛠️ Research Methods:

– Utilized steering evaluations and developed methods that use tokens with higher weights after feature stimulation or the highest weight tokens from the vocabulary “unembedding” head.

💬 Research Conclusions:

– Output-centric descriptions more effectively capture the causal effects on model outputs than input-centric methods, and combining both approaches yields optimal performance. Additionally, output-centric descriptions can identify activating inputs for features previously considered inactive.

👉 Paper link: https://huggingface.co/papers/2501.08319

14. Potential and Perils of Large Language Models as Judges of Unstructured Textual Data

🔑 Keywords: Large Language Models, Text Analysis, Thematic Summarization, AI Native

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate the effectiveness of large language models (LLMs) in accurately representing the perspectives in text-based datasets for thematic summarization.

🛠️ Research Methods:

– Utilized Anthropic Claude model to generate thematic summaries from survey responses with LLM judges including Amazon’s Titan Express, Nova Pro, and Meta’s Llama, compared to human evaluations using Cohen’s kappa, Spearman’s rho, and Krippendorff’s alpha.

💬 Research Conclusions:

– LLMs as judges provide a scalable solution comparable to human raters, yet humans excel in detecting context-specific nuances, emphasizing careful consideration when generalizing LLM judge models across various contexts.

👉 Paper link: https://huggingface.co/papers/2501.08167

15. OpenCSG Chinese Corpus: A Series of High-quality Chinese Datasets for LLM Training

🔑 Keywords: Large Language Models, Chinese LLMs, OpenCSG Chinese Corpus, Pretraining, Performance Improvement

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the challenge of scarcity of high-quality Chinese datasets for Chinese LLMs by proposing the OpenCSG Chinese Corpus.

🛠️ Research Methods:

– Development of multiple high-quality datasets like Fineweb-edu-chinese, Cosmopedia-chinese, and Smoltalk-chinese with distinct characteristics and a focus on scalable and reproducible data curation processes.

💬 Research Conclusions:

– Extensive experimental analyses showed significant performance improvements in tasks, demonstrating the effectiveness of the OpenCSG Chinese Corpus for training Chinese LLMs.

👉 Paper link: https://huggingface.co/papers/2501.08197

16. AfriHate: A Multilingual Collection of Hate Speech and Abusive Language Datasets for African Languages

🔑 Keywords: Hate Speech, Abusive Language, Multilingual, Local Languages, LLMs

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to address the challenges in moderating hate speech and abusive language in the Global South due to the lack of high-quality local language data and the failure to include local communities in the moderation processes.

🛠️ Research Methods:

– The introduction of AfriHate, a multilingual dataset collection in 15 African languages, with instances annotated by native speakers knowledgeable about local cultures. The study also explores classification baselines with and without using Large Language Models (LLMs).

💬 Research Conclusions:

– The research highlights limitations in current moderation approaches and provides a valuable resource in AfriHate, potentially improving the identification and moderation of hate speech and abusive language in underrepresented languages.

👉 Paper link: https://huggingface.co/papers/2501.08284

17. In-situ graph reasoning and knowledge expansion using Graph-PReFLexOR

🔑 Keywords: Transformers, Reinforcement Learning, Graph-PReFLexOR, Symbolic Abstraction, Automated Scientific Discovery

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To develop a framework, Graph-PReFLexOR, that combines graph reasoning with symbolic abstraction for enhanced reasoning and discovery.

🛠️ Research Methods:

– Utilizes reinforcement learning and category theory to encode reasoning as structured mappings with knowledge graphs and isomorphic representations.

💬 Research Conclusions:

– Demonstrates superior reasoning depth and adaptability with applications in hypothesis generation and interdisciplinary discovery, establishing groundwork for autonomous reasoning solutions.

👉 Paper link: https://huggingface.co/papers/2501.08120

18. MatchAnything: Universal Cross-Modality Image Matching with Large-Scale Pre-Training

🔑 Keywords: Image Matching, Multi-Modal, Deep Learning, Cross-Modality, Generalization

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance the performance of image matching across different imaging modalities by overcoming the limitations of scarce annotated cross-modal training data.

🛠️ Research Methods:

– A large-scale pre-training framework using synthetic cross-modal training signals is proposed, leveraging diverse data to train models for recognizing fundamental structures across images.

💬 Research Conclusions:

– The proposed matching model demonstrates exceptional generalizability, outperforming existing methods on more than eight unseen cross-modality registration tasks using the same network weight.

– This advancement broadens the applicability of image matching technologies across various scientific fields, promoting new applications in multi-modality human and artificial intelligence analysis.

👉 Paper link: https://huggingface.co/papers/2501.07556