AI Native Daily Paper Digest – 20250117

1. Inference-Time Scaling for Diffusion Models beyond Scaling Denoising Steps

🔑 Keywords: Generative Models, Large Language Models, Diffusion Models, Inference-time Scaling

💡 Category: Generative Models

🌟 Research Objective:

– Investigate the inference-time scaling behavior of diffusion models and enhance generation performance with increased computation.

🛠️ Research Methods:

– Structured the design space along verifiers for feedback and algorithms to identify better noise candidates in diffusion sampling.

– Conducted extensive experiments on class-conditioned and text-conditioned image generation.

💬 Research Conclusions:

– Increasing inference-time computation significantly improves the quality of samples generated by diffusion models.

– The framework’s components can be tailored for different application scenarios, especially considering the complexity of images.

👉 Paper link: https://huggingface.co/papers/2501.09732

2. OmniThink: Expanding Knowledge Boundaries in Machine Writing through Thinking

🔑 Keywords: OmniThink, retrieval-augmented generation, knowledge density

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance machine writing by overcoming the limitations of retrieval-augmented generation that often results in shallow and repetitive content.

🛠️ Research Methods:

– The authors introduce OmniThink, a framework simulating human-like cognitive processes for improving content generation in machine writing.

💬 Research Conclusions:

– OmniThink effectively increases knowledge density and maintains coherence and depth in generated articles, with positive human evaluations and expert feedback supporting its capability in addressing long-form writing challenges.

👉 Paper link: https://huggingface.co/papers/2501.09751

3. Learnings from Scaling Visual Tokenizers for Reconstruction and Generation

🔑 Keywords: Visual Tokenization, Auto-Encoding, Transformer, Generative Models, ViTok

💡 Category: Generative Models

🌟 Research Objective:

– The study explores the scaling effects in auto-encoders, particularly focusing on how these effects influence reconstruction and generative performance.

🛠️ Research Methods:

– The research replaces the traditional convolutional approach with an enhanced Vision Transformer architecture (ViTok) for tokenization and tests it on large-scale image and video datasets surpassing ImageNet-1K.

💬 Research Conclusions:

– Scaling the auto-encoder’s bottleneck shows strong correlation with reconstruction, while its influence on generation is complex; scaling the decoder improves reconstruction but has mixed effects on generation. ViTok achieves competitive performance with fewer computational resources compared to existing models.

👉 Paper link: https://huggingface.co/papers/2501.09755

4. Exploring the Inquiry-Diagnosis Relationship with Advanced Patient Simulators

🔑 Keywords: Online medical consultation, Large language models, Inquiry phase, Patient simulator, Diagnosis

💡 Category: AI in Healthcare

🌟 Research Objective:

– To explore the relationship between the inquiry and diagnosis phases in online medical consultations, using strategies extracted from real doctor-patient interactions.

🛠️ Research Methods:

– Developing a patient simulator guided by real interaction strategies to simulate patient responses, and conducting experiments to analyze the inquiry-diagnosis relationship.

💬 Research Conclusions:

– Both inquiry and diagnosis are bound by Liebig’s law, meaning poor inquiry quality impacts diagnostic effectiveness, irrespective of diagnostic capability.

– Significant differences exist in model performances during inquiries, categorized into four types to understand performance variations.

👉 Paper link: https://huggingface.co/papers/2501.09484

5. Towards Large Reasoning Models: A Survey of Reinforced Reasoning with Large Language Models

🔑 Keywords: Large Language Models, reasoning processes, reinforcement learning, automated data construction, OpenAI

💡 Category: Natural Language Processing

🌟 Research Objective:

– To present a comprehensive review of recent progress in the reasoning capabilities of Large Language Models.

🛠️ Research Methods:

– Implementing reinforcement learning to enhance reasoning through trial-and-error, and utilizing test-time scaling to improve accuracy.

💬 Research Conclusions:

– The integration of learning-to-reason techniques with test-time scaling points towards the development of Large Reasoning Models, marking a new frontier in LLM research.

👉 Paper link: https://huggingface.co/papers/2501.09686

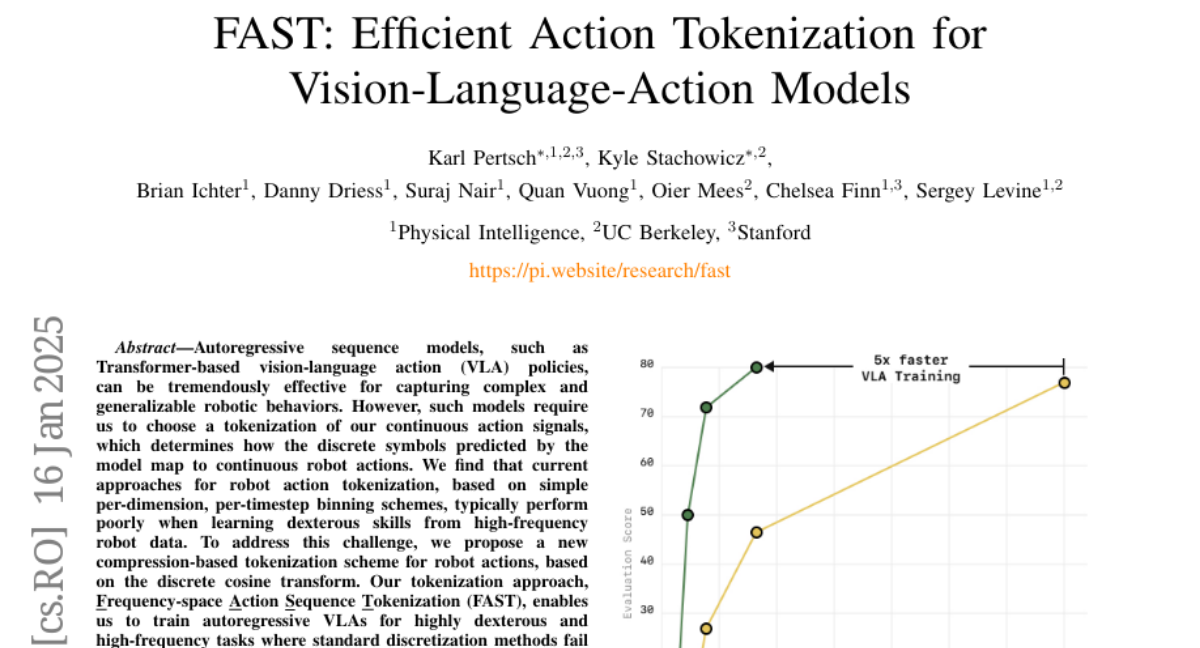

6. FAST: Efficient Action Tokenization for Vision-Language-Action Models

🔑 Keywords: Autoregressive sequence models, Frequency-space Action Sequence Tokenization, robot action tokenization, transformer-based vision-language action policies, high-frequency tasks

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The study aims to develop an effective tokenization scheme for robotic actions to improve learning dexterous skills from high-frequency data.

🛠️ Research Methods:

– The research introduces Frequency-space Action Sequence Tokenization (FAST) using discrete cosine transform for transforming robot actions into discrete symbols.

– Implementation of FAST and release of FAST+ as a universal robot action tokenizer based on training on 1M real robot action trajectories.

💬 Research Conclusions:

– The proposed FAST approach enables training of transformer-based vision-language action policies for complex and high-frequency robotic tasks more efficiently.

– Combination with pi0 VLA shows that the method can handle extensive training datasets, achieving comparable performance to diffusion VLA models while reducing training time significantly.

👉 Paper link: https://huggingface.co/papers/2501.09747

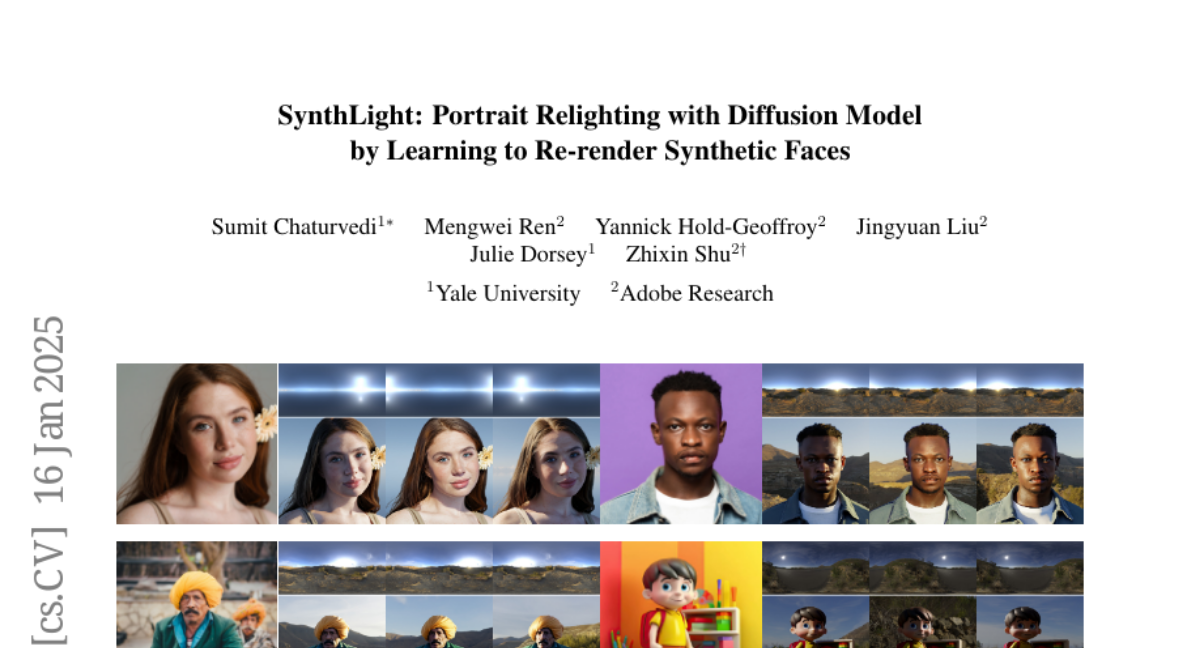

7. SynthLight: Portrait Relighting with Diffusion Model by Learning to Re-render Synthetic Faces

🔑 Keywords: SynthLight, diffusion model, portrait relighting, rendering, classifier-free guidance

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces SynthLight, aimed at advancing the field of portrait relighting through the use of a novel diffusion model.

🛠️ Research Methods:

– The authors utilize a physically-based rendering engine to create a dataset of 3D head assets under various lighting conditions, developing two strategies: multi-task training with real human portraits and an inference time diffusion sampling procedure with classifier-free guidance.

💬 Research Conclusions:

– The proposed method effectively generalizes to diverse real photographs, maintaining subject identity while generating realistic illumination effects. Quantitative evaluation on Light Stage data shows comparable performance to state-of-the-art methods, and qualitative results reveal rich illumination effects.

👉 Paper link: https://huggingface.co/papers/2501.09756

8. The Heap: A Contamination-Free Multilingual Code Dataset for Evaluating Large Language Models

🔑 Keywords: Large Language Models, Code Datasets, Data Contamination, Multilingual Dataset, Deduplicated Data

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Address the scarcity of code for evaluating large language models by providing a large multilingual and deduplicated dataset.

🛠️ Research Methods:

– The creation and release of The Heap, a dataset covering 57 programming languages, deduplicated from other open code datasets.

💬 Research Conclusions:

– The Heap enables fair and efficient evaluation of large language models without the concern of data contamination and extensive data cleaning.

👉 Paper link: https://huggingface.co/papers/2501.09653

9. RLHS: Mitigating Misalignment in RLHF with Hindsight Simulation

🔑 Keywords: Generative AI, Reinforcement Learning, Human Feedback, Misalignment, Hindsight Feedback

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to enhance the alignment of Generative AI systems, like foundation models, with human values by improving feedback mechanisms to ensure helpful and trustworthy behavior.

🛠️ Research Methods:

– Introduced Reinforcement Learning from Hindsight Simulation (RLHS), which simulates plausible consequences and evaluates behaviors with hindsight feedback, applied to Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO).

💬 Research Conclusions:

– RLHS significantly reduces misalignment compared to traditional Reinforcement Learning from Human Feedback (RLHF), improving user satisfaction and goal achievement in an online user study.

👉 Paper link: https://huggingface.co/papers/2501.08617

10. CaPa: Carve-n-Paint Synthesis for Efficient 4K Textured Mesh Generation

🔑 Keywords: generative modeling, 3D generation, CaPa, high-fidelity 3D assets

💡 Category: Generative Models

🌟 Research Objective:

– The central goal is to efficiently synthesize high-quality 3D assets from textual or visual inputs, addressing current issues in 3D generation such as multi-view inconsistency and low fidelity.

🛠️ Research Methods:

– Introduces a carve-and-paint framework, CaPa, that splits the process into two stages: geometry generation using a 3D latent diffusion model and texture synthesis with Spatially Decoupled Attention.

– Utilizes a 3D-aware occlusion inpainting algorithm to enhance texture cohesion and fill untextured regions.

💬 Research Conclusions:

– CaPa generates high-quality 3D assets in under 30 seconds, offering outputs ready for commercial applications while excelling in texture fidelity and geometric stability, setting a new standard for practical 3D asset generation.

👉 Paper link: https://huggingface.co/papers/2501.09433

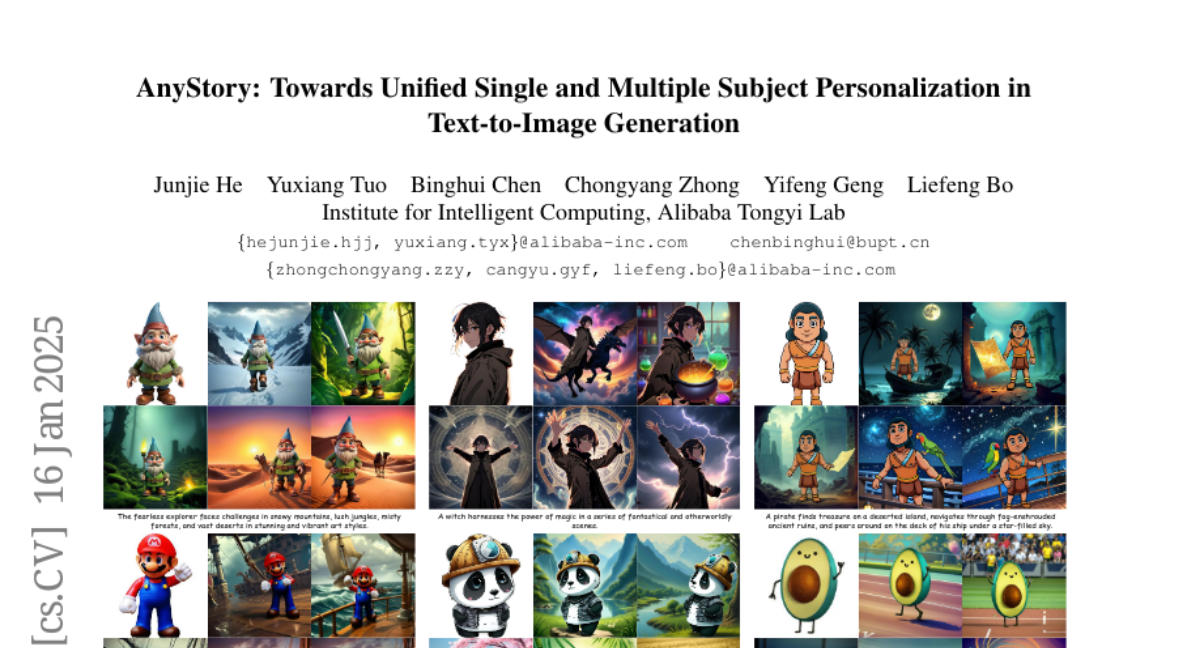

11. AnyStory: Towards Unified Single and Multiple Subject Personalization in Text-to-Image Generation

🔑 Keywords: large-scale generative models, text-to-image generation, personalized subject generation, encode-then-route, ReferenceNet

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to improve high-fidelity personalized image generation for both single and multiple subjects without compromising fidelity.

🛠️ Research Methods:

– Introduce AnyStory, utilizing an “encode-then-route” approach with a universal image encoder called ReferenceNet, and a decoupled instance-aware subject router.

💬 Research Conclusions:

– Demonstrates superior performance in maintaining subject details, aligning with text descriptions, and enabling personalization for multiple subjects.

👉 Paper link: https://huggingface.co/papers/2501.09503

12. Do generative video models learn physical principles from watching videos?

🔑 Keywords: AI video generation, world models, visual realism, Physics-IQ, physical principles

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to explore whether AI video models learn comprehensive world models that understand physical laws or are simply achieving realism through advanced pixel prediction.

🛠️ Research Methods:

– Development of a Physics-IQ benchmark dataset, specifically designed to evaluate the models’ understanding of physical principles such as fluid dynamics, optics, and thermodynamics.

💬 Research Conclusions:

– The study finds that current AI video models exhibit limited physical understanding that is not correlated with visual realism, indicating the potential to learn some physical principles from observation, yet significant challenges remain.

👉 Paper link: https://huggingface.co/papers/2501.09038