AI Native Daily Paper Digest – 20250211

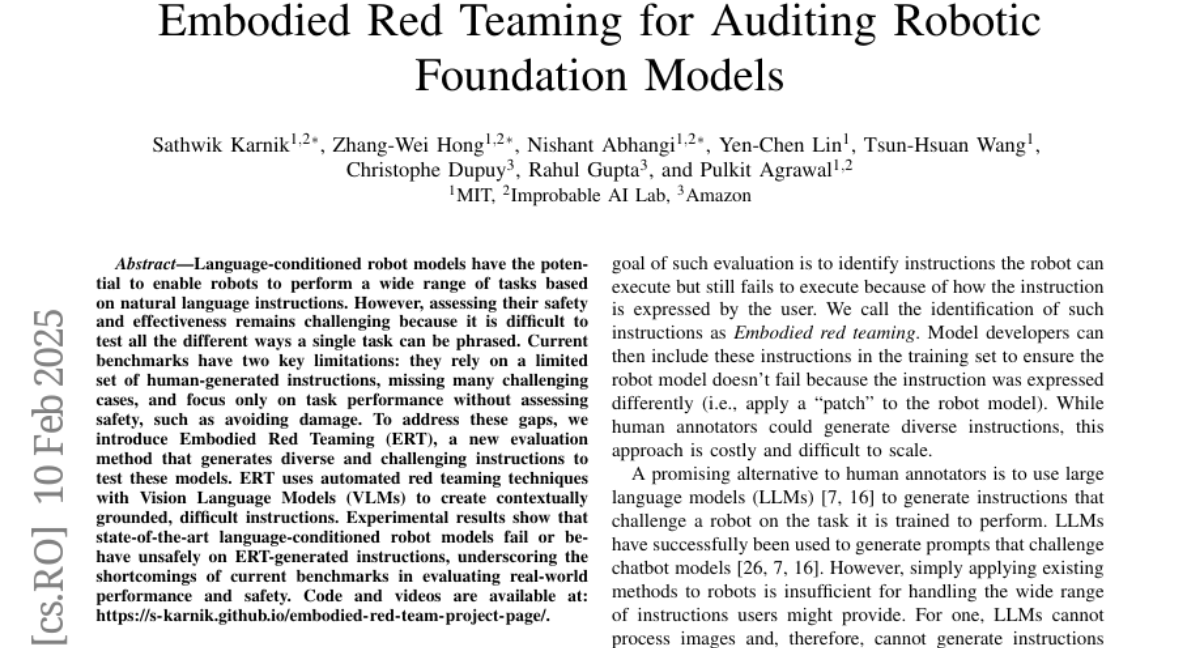

1. SynthDetoxM: Modern LLMs are Few-Shot Parallel Detoxification Data Annotators

🔑 Keywords: multilingual text detoxification, parallel datasets, LLMs, SynthDetoxM, data scarcity

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce a pipeline for generating multilingual parallel detoxification data due to the scarcity of such datasets.

– Present SynthDetoxM, a novel dataset comprising 16,000 high-quality detoxification sentence pairs across multiple languages.

🛠️ Research Methods:

– Utilize nine modern open-source LLMs in a few-shot setting to create synthetic datasets from various toxicity evaluation sources.

💬 Research Conclusions:

– Models trained on the synthetic dataset SynthDetoxM show superior performance to human-annotated datasets and outperform other evaluated LLMs in few-shot settings.

– Released dataset and code aim to support further research in the field of multilingual text detoxification.

👉 Paper link: https://huggingface.co/papers/2502.06394

2. Can 1B LLM Surpass 405B LLM? Rethinking Compute-Optimal Test-Time Scaling

🔑 Keywords: Test-Time Scaling, Large Language Models, Process Reward Models, Policy Models, Inference Efficiency

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore the optimal approach for scaling test-time computation across various policy models and problem difficulty levels.

– To examine the extent to which extended computation can improve the performance of large language models on complex tasks.

🛠️ Research Methods:

– Comprehensive experiments were conducted on the MATH-500 and challenging AIME24 tasks to analyze the impact of TTS on LLM performance.

💬 Research Conclusions:

– The optimal TTS strategy varies based on policy models, PRMs, and task difficulty; small LLMs can outperform larger models when adopting compute-optimal strategies.

– Specific examples include a 1B LLM outperforming a 405B LLM on MATH-500, and smaller models like 0.5B and 3B achieving superior results compared to larger models like GPT-4o, o1, and DeepSeek-R1, highlighting TTS’s potential in enhancing LLM reasoning abilities.

👉 Paper link: https://huggingface.co/papers/2502.06703

3. Exploring the Limit of Outcome Reward for Learning Mathematical Reasoning

🔑 Keywords: Reinforcement Learning, mathematical reasoning, OREAL

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To explore the performance limits of mathematical reasoning using the Outcome Reward-based reinforcement Learning (OREAL) framework.

🛠️ Research Methods:

– Introduction of OREAL framework applying reinforcement learning with binary outcome rewards and a token-level reward model for sampling in reasoning trajectories.

💬 Research Conclusions:

– The OREAL framework allows a 7B model to achieve performance on par with 32B models, reaching a 94.0 pass@1 accuracy on MATH-500, surpassing previous models with a 95.0 pass@1 accuracy.

👉 Paper link: https://huggingface.co/papers/2502.06781

4. Training Language Models for Social Deduction with Multi-Agent Reinforcement Learning

🔑 Keywords: Multi-Agent, Natural Language, Zero-Shot Coordination, Reinforcement Learning, Social Deduction

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Train language models to facilitate natural language communication in multi-agent environments without human demonstrations.

🛠️ Research Methods:

– Decompose communication into listening and speaking tasks.

– Use agent goals as dense reward signals to guide communication.

– Employ multi-agent reinforcement learning to enhance both listening and speaking skills.

💬 Research Conclusions:

– The approach improves discussion quality, such as suspect accusation and evidence provision in social deduction settings, doubling the win rates compared to standard RL methods.

👉 Paper link: https://huggingface.co/papers/2502.06060

5. CODESIM: Multi-Agent Code Generation and Problem Solving through Simulation-Driven Planning and Debugging

🔑 Keywords: Large Language Models, code generation, program synthesis, CodeSim, debugging

💡 Category: AI Systems and Tools

🌟 Research Objective:

– This paper aims to introduce CodeSim, a novel multi-agent code generation framework for enhancing code synthesis processes through a human-like perception approach.

🛠️ Research Methods:

– CodeSim employs a unique method of plan verification and internal debugging via step-by-step simulation of input/output, addressing planning, coding, and debugging stages in program synthesis.

💬 Research Conclusions:

– The framework demonstrates significant code generation capabilities and achieves state-of-the-art results across several benchmarks, with notable percentages in HumanEval, MBPP, APPS, and CodeContests.

– CodeSim shows promise for further improvement when used in conjunction with external debuggers and is open-sourced for future research and development.

👉 Paper link: https://huggingface.co/papers/2502.05664

6. LM2: Large Memory Models

🔑 Keywords: Large Memory Model, Transformer architectures, multi-step reasoning, memory module, cross attention

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce the Large Memory Model (LM2) to enhance Transformer architectures in multi-step reasoning and long context comprehension.

🛠️ Research Methods:

– The LM2 is a decoder-only Transformer enhanced with an auxiliary memory module for better contextual representation using cross attention and gating mechanisms.

💬 Research Conclusions:

– The LM2 outperforms previous models like RMT and Llama-3.2 in tasks requiring multi-hop inference and large-context question-answering, proving the effectiveness and enhanced capabilities of explicit memory integration in Transformers.

👉 Paper link: https://huggingface.co/papers/2502.06049

7. Show-o Turbo: Towards Accelerated Unified Multimodal Understanding and Generation

🔑 Keywords: unified multimodal understanding, Show-o Turbo, denoising, consistency distillation, curriculum learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to address inefficiencies in the Show-o model for text-to-image and image-to-text generation by introducing Show-o Turbo.

🛠️ Research Methods:

– It identifies a unified denoising perspective for generating images and text, extends consistency distillation (CD), and employs a trajectory segmentation strategy with curriculum learning.

💬 Research Conclusions:

– Show-o Turbo achieves better efficiency in text-to-image and image-to-text generation, displaying improved GenEval scores and speedup without significant performance loss, compared to the original Show-o model.

👉 Paper link: https://huggingface.co/papers/2502.05415

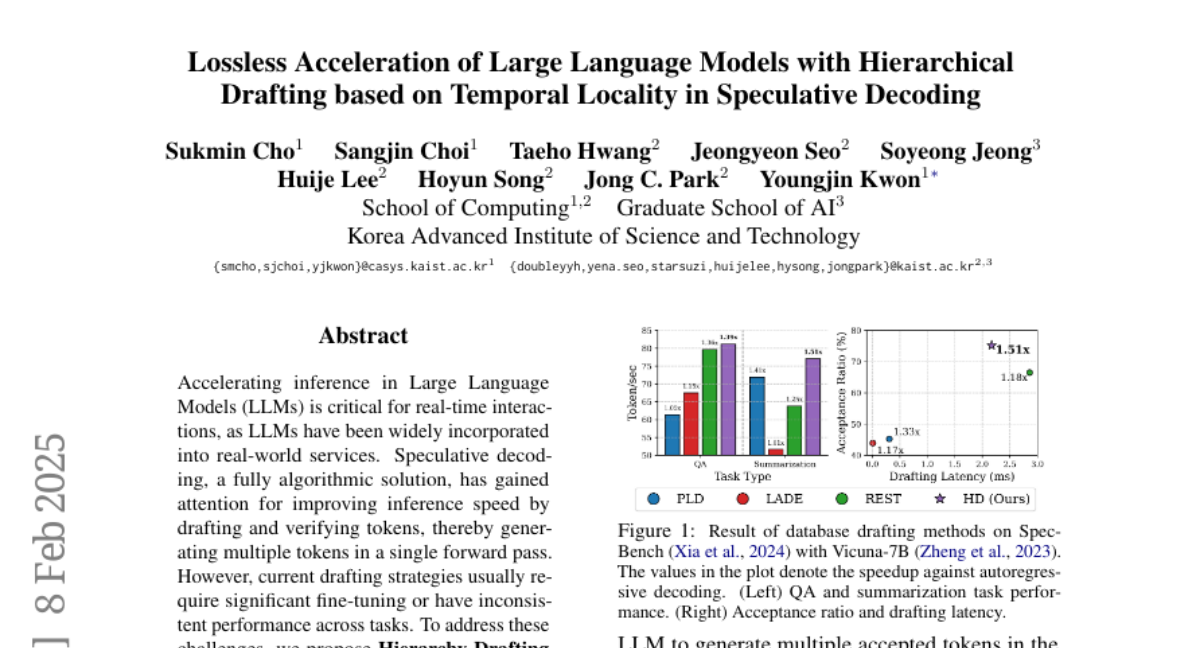

8. Lossless Acceleration of Large Language Models with Hierarchical Drafting based on Temporal Locality in Speculative Decoding

🔑 Keywords: Large Language Models, Inference Speed, Speculative Decoding, Hierarchy Drafting, Database Drafting

💡 Category: Natural Language Processing

🌟 Research Objective:

– Accelerate inference in Large Language Models for real-time interactions using a novel method called Hierarchy Drafting.

🛠️ Research Methods:

– Proposing and implementing the Hierarchy Drafting approach, which organizes token sources into hierarchical databases based on temporal locality, and evaluating its performance using Spec-Bench with models of 7B and 13B parameters.

💬 Research Conclusions:

– Hierarchy Drafting improves inference speed consistently across various tasks, model sizes, and temperatures, outperforming existing methods in robust inference speedups.

👉 Paper link: https://huggingface.co/papers/2502.05609

9. ReasonFlux: Hierarchical LLM Reasoning via Scaling Thought Templates

🔑 Keywords: Hierarchical LLM Reasoning, Thought Templates, Reinforcement Learning, ReasonFlux-32B, Inference Scaling

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To optimize the reasoning search space in LLMs and enhance their mathematical reasoning capabilities using hierarchical LLM reasoning through scalable thought templates.

🛠️ Research Methods:

– Implemented a structured and generic thought template library, conducted hierarchical reinforcement learning on these templates, and developed an adaptive inference scaling system for LLMs.

💬 Research Conclusions:

– The ReasonFlux-32B model showed significant improvements in math reasoning, achieving 91.2% accuracy on the MATH benchmark and outperforming previous powerful LLMs by notable margins on other benchmarks.

👉 Paper link: https://huggingface.co/papers/2502.06772

10. Matryoshka Quantization

🔑 Keywords: Matryoshka Quantization, Quantization, Model weights, int2 precision

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce Matryoshka Quantization (MatQuant) as a method to reduce communication and inference costs of large models while improving precision.

🛠️ Research Methods:

– Develop a multi-scale quantization technique that enables training a single model, which can be served at different precision levels, specifically leveraging the nested structure of integer types.

💬 Research Conclusions:

– MatQuant allows a single model to achieve up to 10% increased accuracy in int2 precision compared to standard quantization methods, showcasing significant advancements in model accuracy at low precision levels.

👉 Paper link: https://huggingface.co/papers/2502.06786

11. The Hidden Life of Tokens: Reducing Hallucination of Large Vision-Language Models via Visual Information Steering

🔑 Keywords: LVLMs, hallucination, visual information, VISTA, decoding strategies

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study investigates hallucination in Large Vision-Language Models (LVLMs) and proposes to understand its internal dynamics through token logits rankings.

🛠️ Research Methods:

– An inference-time intervention framework called VISTA is introduced, leveraging token-logit augmentation to reinforce visual information and promote semantically meaningful decoding without external supervision.

💬 Research Conclusions:

– VISTA effectively reduces hallucination by about 40% on tested open-ended generation tasks and surpasses existing methods in multiple benchmarks and decoding strategies.

👉 Paper link: https://huggingface.co/papers/2502.03628

12. EVEv2: Improved Baselines for Encoder-Free Vision-Language Models

🔑 Keywords: Encoder-free VLMs, Multi-modal systems, EVEv2.0, Data efficiency, Vision-language models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To narrow the performance gap between encoder-free vision-language models (VLMs) and their encoder-based counterparts by thoroughly investigating their characteristics.

🛠️ Research Methods:

– Developing and evaluating strategies for encoder-free VLMs that effectively decompose and hierarchically associate vision and language within a unified model.

💬 Research Conclusions:

– EVEv2.0, an improved family of encoder-free VLMs, shows superior data efficiency and strong vision-reasoning capabilities using a decoder-only architecture across modalities.

👉 Paper link: https://huggingface.co/papers/2502.06788

13. History-Guided Video Diffusion

🔑 Keywords: Classifier-free guidance, Diffusion models, Video diffusion, History Guidance, DFoT

💡 Category: Generative Models

🌟 Research Objective:

– To extend classifier-free guidance to video diffusion for generating video conditioned on variable-length context frames, termed as history.

🛠️ Research Methods:

– Introduction of the Diffusion Forcing Transformer (DFoT) and a theoretically grounded training objective to enable conditioning on a flexible number of history frames.

– Development of a family of guidance methods called History Guidance enabled by DFoT.

💬 Research Conclusions:

– Vanilla history guidance improves video generation quality and temporal consistency.

– An advanced method enhances motion dynamics, allows for compositional generalization, and enables the generation of extremely long videos.

👉 Paper link: https://huggingface.co/papers/2502.06764

14. Lumina-Video: Efficient and Flexible Video Generation with Multi-scale Next-DiT

🔑 Keywords: Diffusion Transformers, Lumina-Video, video generation, Multi-scale Next-DiT, motion score

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance generative models’ capabilities in photorealistic image and video generation, specifically focusing on untapped video synthesis potential using Next-DiT.

🛠️ Research Methods:

– Introduces the Lumina-Video framework, which incorporates a Multi-scale Next-DiT architecture for joint learning of multiple patchifications.

– Implements a progressive training scheme with increasingly higher resolution and FPS, coupled with a multi-source training using mixed natural and synthetic data.

💬 Research Conclusions:

– Lumina-Video achieves high aesthetic quality and motion smoothness, with enhanced training and inference efficiency for video generation.

– Further proposes Lumina-V2A for synchronized audio generation in videos.

👉 Paper link: https://huggingface.co/papers/2502.06782

15. Dual Caption Preference Optimization for Diffusion Models

🔑 Keywords: Human Preference Optimization, Large Language Models, Text-to-Image Diffusion, Dual Caption Preference Optimization

💡 Category: Generative Models

🌟 Research Objective:

– To enhance text-to-image diffusion models by addressing challenges in preference optimization using a novel approach called Dual Caption Preference Optimization (DCPO).

🛠️ Research Methods:

– Introduction of DCPO, utilizing two distinct captions to mitigate the irrelevant prompt issue.

– Development of the Pick-Double Caption dataset with separate captions for preferred and less preferred images.

– Implementation of three caption generation strategies: captioning, perturbation, and hybrid methods.

💬 Research Conclusions:

– DCPO significantly improves image quality and relevance to prompts, surpassing existing models such as Stable Diffusion (SD) 2.1 and others across multiple evaluation metrics.

👉 Paper link: https://huggingface.co/papers/2502.06023

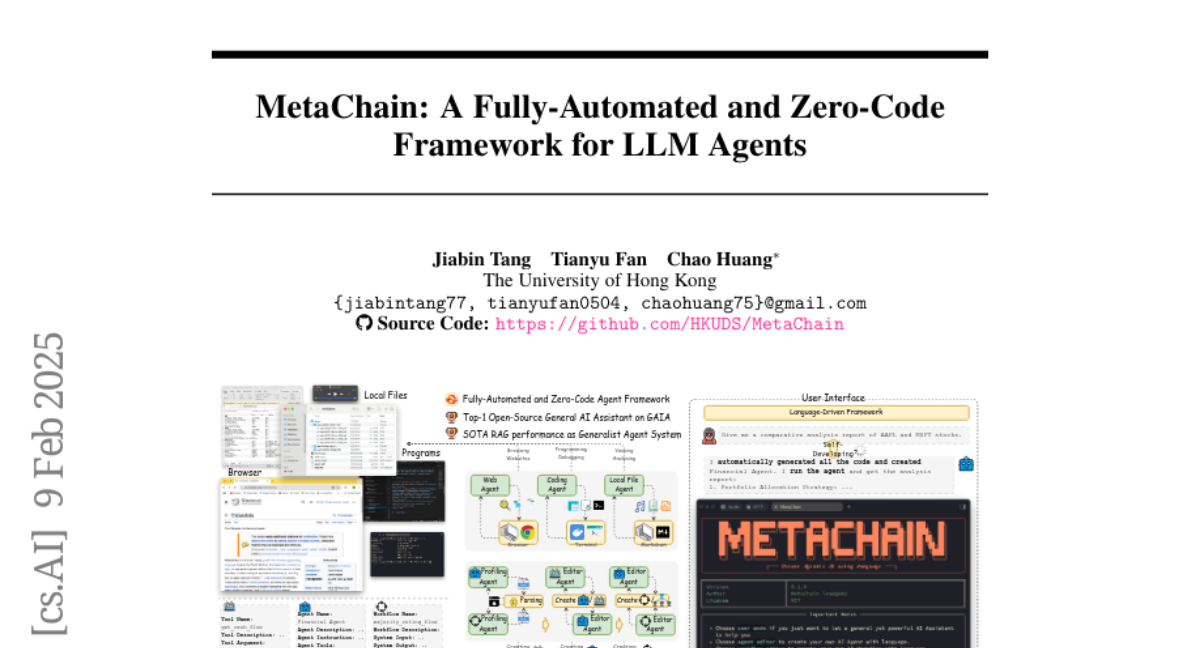

16. MetaChain: A Fully-Automated and Zero-Code Framework for LLM Agents

🔑 Keywords: LLM Agents, Natural Language, MetaChain, Retrieval-Augmented Generation

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To develop a framework, MetaChain, that enables individuals without technical expertise to create LLM agents using natural language.

🛠️ Research Methods:

– Introduction of a Fully-Automated and Self-Developing framework comprising four components: Agentic System Utilities, LLM-powered Actionable Engine, Self-Managing File System, and Self-Play Agent Customization module.

💬 Research Conclusions:

– MetaChain proves effective in generalist multi-agent tasks and excels in Retrieval-Augmented Generation capabilities, outperforming existing state-of-the-art methods.

👉 Paper link: https://huggingface.co/papers/2502.05957

17. Efficient-vDiT: Efficient Video Diffusion Transformers With Attention Tile

🔑 Keywords: Diffusion Transformers, 3D attention, sampling process, consistency distillation, distributed inference

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to address the inefficiency in generating high-fidelity videos using Diffusion Transformers with 3D full attention by reducing complexity and speeding up the process.

🛠️ Research Methods:

– The study proposes a new family of sparse 3D attention that reduces computational complexity and employs multi-step consistency distillation to activate few-step generation capacities, alongside a three-stage training pipeline.

💬 Research Conclusions:

– The approach significantly accelerates the Open-Sora-Plan-1.2 model video generation by 7.4x-7.8x, with distributed inference achieving an additional speedup of 3.91x using 4 GPUs, with a minimal performance trade-off.

👉 Paper link: https://huggingface.co/papers/2502.06155

18. The Curse of Depth in Large Language Models

🔑 Keywords: Curse of Depth, Large Language Models, Pre-Layer Normalization, LayerNorm Scaling, Training Performance

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper introduces and addresses the Curse of Depth in Large Language Models (LLMs), where many layers are less effective than expected due to Pre-Layer Normalization.

🛠️ Research Methods:

– The phenomenon was confirmed across popular LLMs like Llama and Qwen through theoretical and empirical analysis, leading to the introduction of a method called LayerNorm Scaling to mitigate the issue.

💬 Research Conclusions:

– Implementing LayerNorm Scaling improves the pre-training and fine-tuning performance of LLMs by enabling deeper layers to contribute more effectively, as demonstrated in models ranging from 130M to 1B parameters.

👉 Paper link: https://huggingface.co/papers/2502.05795

19. APE: Faster and Longer Context-Augmented Generation via Adaptive Parallel Encoding

🔑 Keywords: Context-augmented generation, Parallel Encoding, Adaptive Parallel Encoding, RAG, ICL

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to improve context-augmented generation (CAG) by addressing computational inefficiencies and performance drops in parallel encoding techniques like RAG and ICL.

🛠️ Research Methods:

– Introduces Adaptive Parallel Encoding (APE) which utilizes shared prefix, attention temperature, and scaling factor to align parallel with sequential encoding.

💬 Research Conclusions:

– APE maintains 98% and 93% of sequential encoding performance on RAG and ICL tasks, respectively, and improves over parallel encoding by 3.6% and 7.9%. It also effectively scales to many-shot CAG, offering a 4.5x speedup and 28x reduction in prefilling time.

👉 Paper link: https://huggingface.co/papers/2502.05431

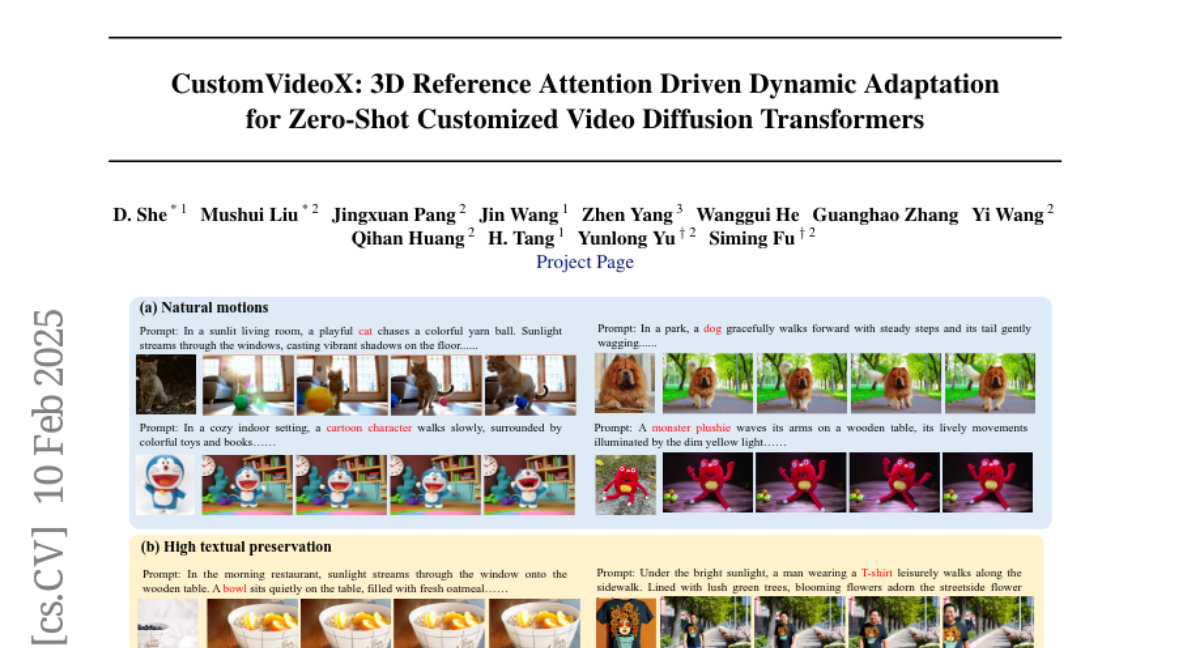

20. CustomVideoX: 3D Reference Attention Driven Dynamic Adaptation for Zero-Shot Customized Video Diffusion Transformers

🔑 Keywords: Customized generation, personalized video generation, video diffusion transformer, 3D Reference Attention, Time-Aware Reference Attention Bias

💡 Category: Generative Models

🌟 Research Objective:

– The primary goal is to enhance personalized video generation using a reference image, addressing challenges like temporal inconsistencies and quality degradation.

🛠️ Research Methods:

– CustomVideoX framework uses a video diffusion transformer and focuses on training LoRA parameters for efficient feature extraction.

– Introduces 3D Reference Attention to improve interaction between reference images and video frames spatially and temporally.

– Implements Time-Aware Reference Attention Bias and Entity Region-Aware Enhancement for better modulation and alignment of reference features.

💬 Research Conclusions:

– CustomVideoX surpasses existing methods in achieving higher video consistency and quality, as demonstrated by a new benchmark, VideoBench.

👉 Paper link: https://huggingface.co/papers/2502.06527

21. DreamDPO: Aligning Text-to-3D Generation with Human Preferences via Direct Preference Optimization

🔑 Keywords: Text-to-3D generation, human preferences, optimization

💡 Category: Generative Models

🌟 Research Objective:

– To integrate human preferences into the 3D generation process using the DreamDPO framework.

🛠️ Research Methods:

– The method involves constructing pairwise examples, aligning them with human preferences via reward models, and optimizing the 3D representation with a preference-driven loss function.

💬 Research Conclusions:

– DreamDPO improves the alignment of generated 3D content with human preferences and achieves higher-quality, more controllable results compared to existing methods.

👉 Paper link: https://huggingface.co/papers/2502.04370

22. Steel-LLM:From Scratch to Open Source — A Personal Journey in Building a Chinese-Centric LLM

🔑 Keywords: Steel-LLM, Chinese language model, open-source, model-building, benchmarks

💡 Category: Natural Language Processing

🌟 Research Objective:

– The primary objective was to develop Steel-LLM, a high-quality, open-source Chinese-centric language model with limited computational resources.

🛠️ Research Methods:

– The model was developed using a 1-billion-parameter architecture with a focus on training primarily on Chinese data and some English data, while emphasizing transparency and sharing practical insights.

💬 Research Conclusions:

– Steel-LLM achieved competitive performance on benchmarks CEVAL and CMMLU, surpassing early models from larger institutions, and the project offers valuable insights for future LLM developers.

👉 Paper link: https://huggingface.co/papers/2502.06635

23. Towards Internet-Scale Training For Agents

🔑 Keywords: LLM, Internet-scale training, human demonstrations, generalization, Llama 3.1

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop a scalable pipeline that enables web navigation agents to train at an Internet-scale without relying heavily on human annotations.

🛠️ Research Methods:

– A three-stage pipeline involving a Large Language Model (LLM) generating tasks for 150k websites, LLM agents completing these tasks, and another LLM reviewing and judging the outcomes.

💬 Research Conclusions:

– The pipeline achieves competitive results compared to human annotations, improving Step Accuracy, and significantly enhancing generalization abilities to diverse real-world websites, outperforming models trained solely on human data.

👉 Paper link: https://huggingface.co/papers/2502.06776

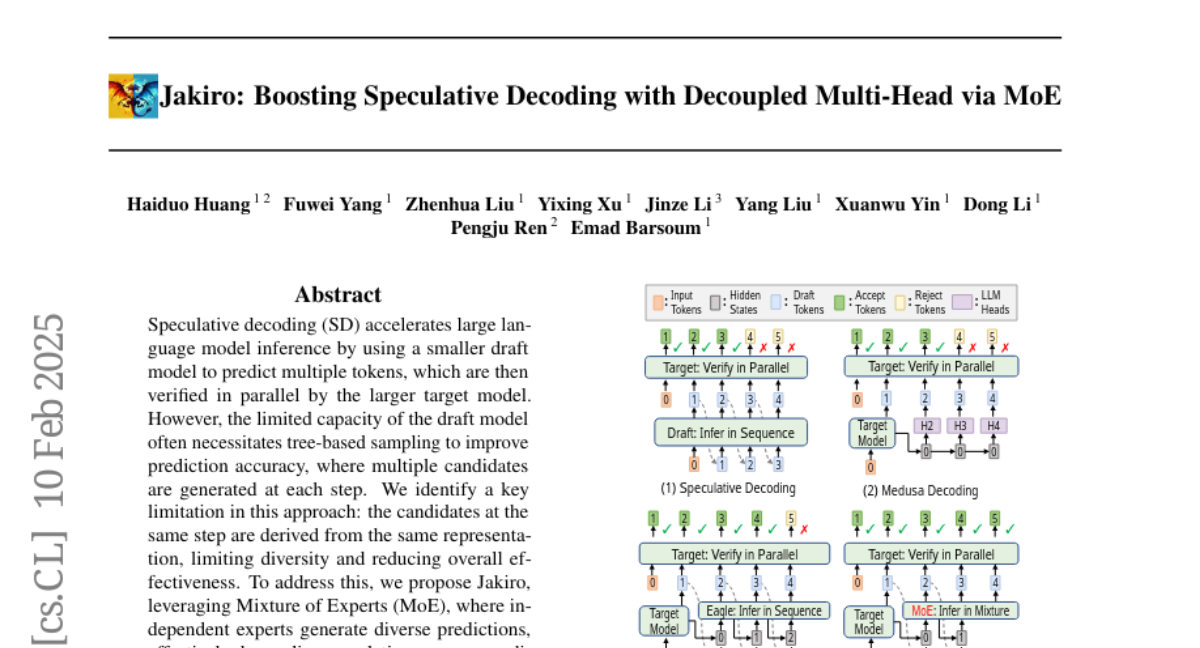

24. Jakiro: Boosting Speculative Decoding with Decoupled Multi-Head via MoE

🔑 Keywords: Speculative decoding, Mixture of Experts, Autoregressive decoding, Prediction accuracy, Inference speedup

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to improve prediction accuracy and inference speed of large language models by addressing limitations in traditional speculative decoding methods.

🛠️ Research Methods:

– The authors propose “Jakiro”, which utilizes a Mixture of Experts (MoE) approach to generate diverse predictions and introduce a hybrid inference strategy combining autoregressive decoding with parallel decoding, enhanced by a contrastive mechanism.

💬 Research Conclusions:

– The proposed method significantly enhances prediction accuracy and inference speed, establishing a new state-of-the-art in speculative decoding as validated by extensive experiments across diverse models.

👉 Paper link: https://huggingface.co/papers/2502.06282

25. Embodied Red Teaming for Auditing Robotic Foundation Models

🔑 Keywords: language-conditioned robot models, safety, effectiveness, Embodied Red Teaming, Vision Language Models

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To evaluate the safety and effectiveness of language-conditioned robot models in performing tasks based on natural language instructions.

🛠️ Research Methods:

– Introduction of Embodied Red Teaming (ERT), an evaluation method using automated red teaming techniques with Vision Language Models to generate diverse and challenging instructions.

💬 Research Conclusions:

– State-of-the-art language-conditioned robot models demonstrate limitations and unsafe behavior on ERT-generated instructions, highlighting the inadequacies of current benchmarks in assessing real-world performance and safety.

👉 Paper link: https://huggingface.co/papers/2411.18676