AI Native Daily Paper Digest – 20250217

1. Region-Adaptive Sampling for Diffusion Transformers

🔑 Keywords: Diffusion models, Diffusion Transformers, RAS, real-time applications, sampling strategy

💡 Category: Generative Models

🌟 Research Objective:

– To enhance real-time performance of Diffusion models by introducing a new sampling strategy.

🛠️ Research Methods:

– Developed RAS, a training-free approach using Diffusion Transformers to dynamically allocate sampling ratios based on model focus in images.

💬 Research Conclusions:

– RAS achieved up to 2.51x speedup with minimal quality degradation, proving effective for efficient diffusion transformers and real-time applications.

👉 Paper link: https://huggingface.co/papers/2502.10389

2. Large Language Diffusion Models

🔑 Keywords: Autoregressive models, LLaDA, Diffusion model, In-context learning

💡 Category: Generative Models

🌟 Research Objective:

– Challenge the conventional view of Autoregressive models as the cornerstone of LLMs and propose an alternative with diffusion models through LLaDA.

🛠️ Research Methods:

– Train LLaDA, a diffusion model, from scratch using a pre-training and supervised fine-tuning paradigm, optimizing through a likelihood bound.

💬 Research Conclusions:

– LLaDA shows strong scalability, outperforming self-constructed ARM baselines, rivals strong LLMs like LLaMA3 8B in in-context learning, and addresses the reversal curse.

👉 Paper link: https://huggingface.co/papers/2502.09992

3. Step-Video-T2V Technical Report: The Practice, Challenges, and Future of Video Foundation Model

🔑 Keywords: Step-Video-T2V, Video Generation, Diffusion Model, Video Foundation Models, Text-to-Video

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces Step-Video-T2V, a text-to-video model designed to enhance video content creation through advanced video foundation models.

🛠️ Research Methods:

– Utilizes a deep compression Variational Autoencoder (Video-VAE) and bilingual text encoders, with a DiT employing 3D full attention for denoising input noise.

💬 Research Conclusions:

– The effectiveness of the model is demonstrated through the Step-Video-T2V-Eval benchmark, showing superior video generation compared to existing models. The authors provide insights into current model limitations and propose future advancements.

👉 Paper link: https://huggingface.co/papers/2502.10248

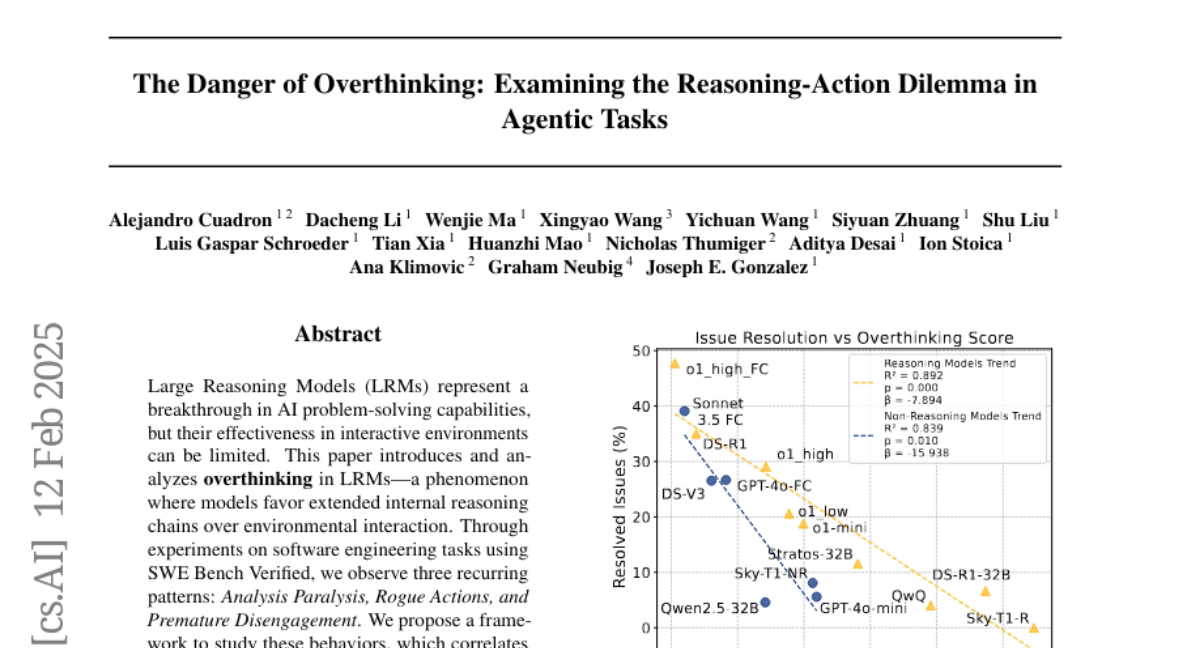

4. The Danger of Overthinking: Examining the Reasoning-Action Dilemma in Agentic Tasks

🔑 Keywords: Large Reasoning Models, Overthinking, AI Native, Reinforcement Learning

💡 Category: Foundations of AI

🌟 Research Objective:

– Investigate and analyze the phenomenon of overthinking in Large Reasoning Models (LRMs) and its impact on performance in interactive environments.

🛠️ Research Methods:

– Conduct experiments on software engineering tasks with SWE Bench Verified, observing recurring patterns and analyzing 4018 trajectories to assess overthinking.

💬 Research Conclusions:

– Identified behaviors like Analysis Paralysis, Rogue Actions, and Premature Disengagement, with higher overthinking scores correlating with decreased performance. Strategies to mitigate overthinking improved model performance by nearly 30% and reduced computational costs by 43%. Open-sourced framework aims to foster further research.

👉 Paper link: https://huggingface.co/papers/2502.08235

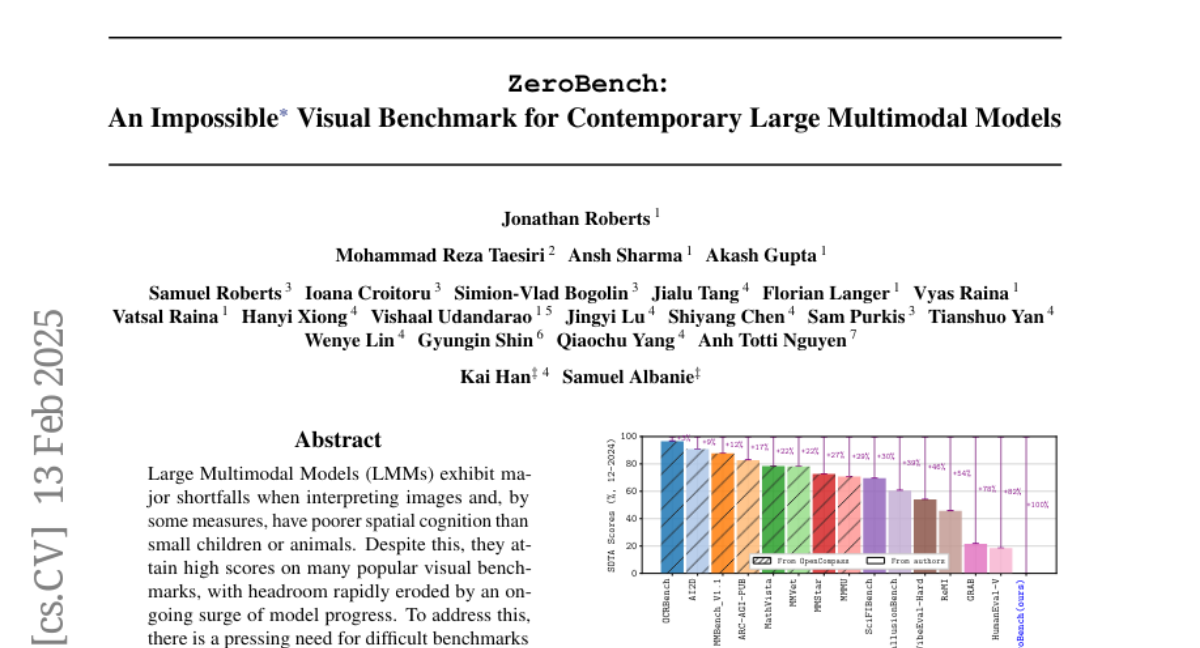

5. ZeroBench: An Impossible Visual Benchmark for Contemporary Large Multimodal Models

🔑 Keywords: Large Multimodal Models, visual reasoning, visual benchmarks, ZeroBench

💡 Category: Computer Vision

🌟 Research Objective:

– To introduce a challenging visual reasoning benchmark, ZeroBench, that modern Large Multimodal Models (LMMs) cannot solve.

🛠️ Research Methods:

– Developed ZeroBench with 100 manually curated questions and 334 subquestions to test the limits of LMMs.

– Evaluated 20 Large Multimodal Models on ZeroBench, all scoring 0.0%.

💬 Research Conclusions:

– Demonstrated that contemporary frontier LMMs fall short in visual reasoning and spatial cognition compared to smaller children or animals.

– Identified the need for more difficult benchmarks to stimulate progress in visual understanding.

👉 Paper link: https://huggingface.co/papers/2502.09696

6. MM-RLHF: The Next Step Forward in Multimodal LLM Alignment

🔑 Keywords: Multimodal Large Language Models, Human Preferences, Reward Models, Dynamic Reward Scaling, Safety Improvement

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Explore whether aligning Multimodal Large Language Models (MLLMs) with human preferences can enhance model capability.

🛠️ Research Methods:

– Introduced MM-RLHF, a dataset with 120k human-annotated preference comparison pairs.

– Proposed a Critique-Based Reward Model and Dynamic Reward Scaling to improve reward model quality and alignment algorithm efficiency.

💬 Research Conclusions:

– Achieved a 19.5% increase in conversational abilities and a 60% improvement in safety for LLaVA-ov-7B, underscoring the efficacy of the proposed approach.

– All resources related to the research have been open-sourced for further exploration and use.

👉 Paper link: https://huggingface.co/papers/2502.10391

7. Diverse Inference and Verification for Advanced Reasoning

🔑 Keywords: Reasoning LLMs, International Mathematical Olympiad, Abstraction and Reasoning Corpus, test-time simulations

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The research aims to address the difficulties reasoning LLMs face with advanced tasks by employing a diverse inference approach combining multiple models and methods.

🛠️ Research Methods:

– A diverse inference approach is used, involving the verification of mathematics and code problems, rejection sampling, test-time simulations, reinforcement learning, and meta-learning with inference feedback.

💬 Research Conclusions:

– The proposed method significantly improves the accuracy of solving challenging problems, such as increasing the accuracy of IMO combinatorics problems to 77.8%, solving 80% of ARC puzzles unsolved by humans, and enhancing HLE question accuracy to 37%.

👉 Paper link: https://huggingface.co/papers/2502.09955

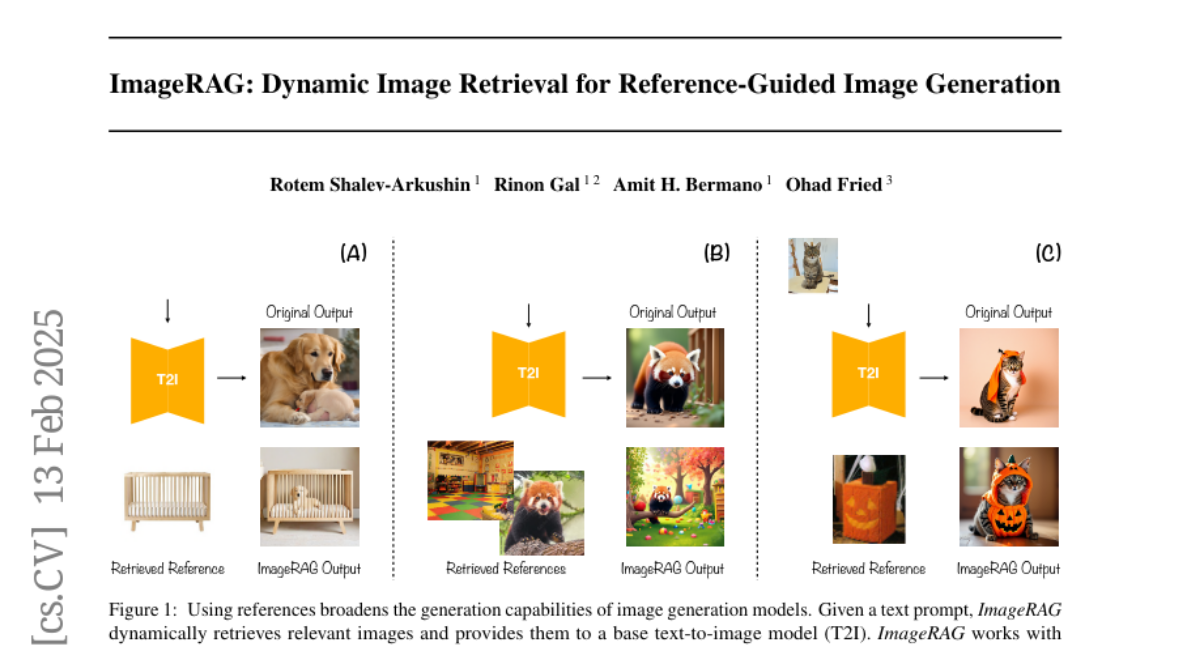

8. ImageRAG: Dynamic Image Retrieval for Reference-Guided Image Generation

🔑 Keywords: Diffusion models, Retrieval-Augmented Generation (RAG), ImageRAG, Image conditioning

💡 Category: Generative Models

🌟 Research Objective:

– Address the challenge of generating rare or unseen concepts in visual content synthesis using Diffusion models.

🛠️ Research Methods:

– Introduction of ImageRAG, utilizing dynamic image retrieval based on text prompts to guide the generation process without requiring RAG-specific training.

💬 Research Conclusions:

– ImageRAG is adaptable across different model types and significantly improves generation of rare and fine-grained concepts.

👉 Paper link: https://huggingface.co/papers/2502.09411

9. Precise Parameter Localization for Textual Generation in Diffusion Models

🔑 Keywords: Diffusion Models, Textual Generation, Cross Attention, Joint Attention, LoRA Fine-Tuning

💡 Category: Generative Models

🌟 Research Objective:

– This study aims to improve the efficiency and performance of textual generation within diffusion models by targeting specific attention layers responsible for text content generation.

🛠️ Research Methods:

– Using attention activation patching, the study identifies that less than 1% of diffusion models’ parameters affect image textual content generation. Subsequently, the cross and joint attention layers are localized for enhancements using LoRA-based fine-tuning.

💬 Research Conclusions:

– The research demonstrates that fine-tuning localized layers enhances text-generation capabilities while maintaining quality and diversity. It also shows applications in text editing within generated images and preventing toxic text generation without additional costs.

👉 Paper link: https://huggingface.co/papers/2502.09935

10. DarwinLM: Evolutionary Structured Pruning of Large Language Models

🔑 Keywords: Large Language Models, structured pruning, evolutionary search, post-compression training

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop a training-aware structured pruning method for compressing large language models that improves speed without compromising performance.

🛠️ Research Methods:

– Introduced \sysname, an evolutionary search-based method that generates multiple offspring models through mutation and selects the best performing ones, incorporating a lightweight multistep training process for performance evaluation.

💬 Research Conclusions:

– Validated through experiments on various models, \sysname achieves state-of-the-art performance in structured pruning, surpassing existing methods like ShearedLlama with significantly lower data requirements during post-compression training.

👉 Paper link: https://huggingface.co/papers/2502.07780

11. FoNE: Precise Single-Token Number Embeddings via Fourier Features

🔑 Keywords: Large Language Models, Fourier Number Embedding, Numerical Tasks

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to improve numerical value representation and processing efficiency in Large Language Models (LLMs) using a novel Fourier Number Embedding (FoNE) method.

🛠️ Research Methods:

– Introducing FoNE, which maps numbers directly into embedding space with Fourier features, enabling each number to be encoded as a single token with two embedding dimensions per digit.

💬 Research Conclusions:

– FoNE significantly accelerates training and inference while reducing computational overhead and achieving higher accuracy in numerical tasks compared to traditional methods. It notably requires less data to reach high accuracy levels and provides 100% accuracy on extensive test datasets for operations like addition, subtraction, and multiplication.

👉 Paper link: https://huggingface.co/papers/2502.09741

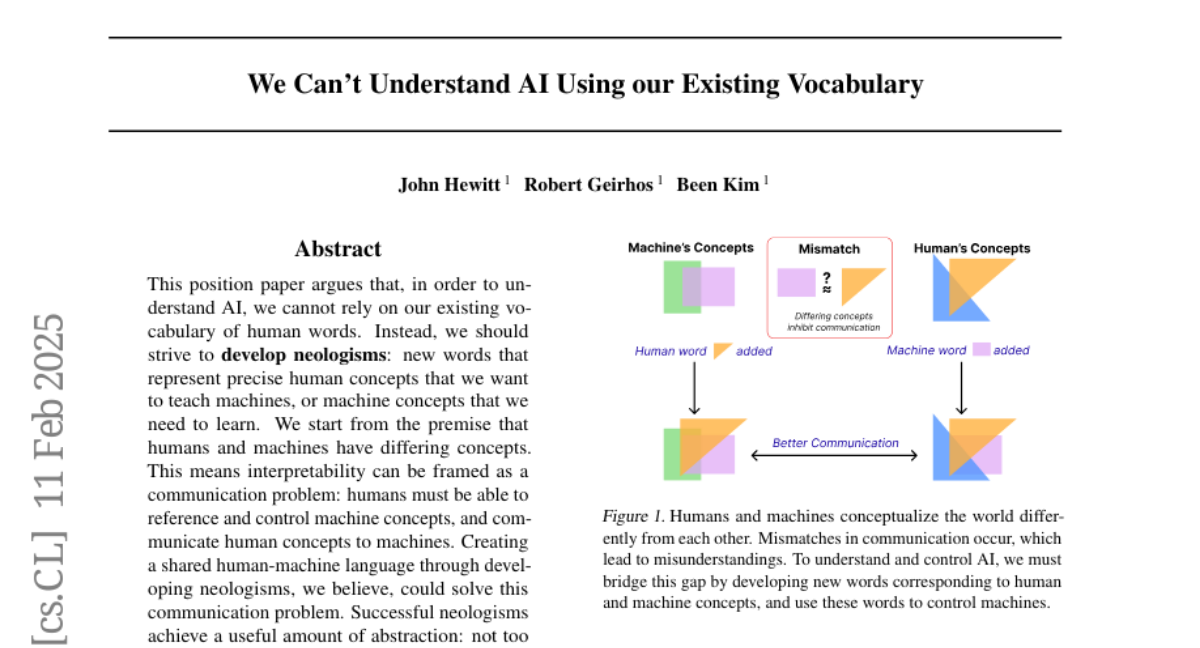

12. We Can’t Understand AI Using our Existing Vocabulary

🔑 Keywords: AI Understanding, Neologisms, Interpretability, Human-Machine Communication

💡 Category: Human-AI Interaction

🌟 Research Objective:

– To explore the need for developing new vocabulary, or neologisms, for effective communication between humans and AI.

🛠️ Research Methods:

– The development of specific neologisms, such as “length neologism” and “diversity neologism,” to demonstrate control and understanding of machine responses.

💬 Research Conclusions:

– Current human vocabulary is insufficient for fully understanding AI, and creating shared human-machine language through neologisms could enhance communication and control over AI systems.

👉 Paper link: https://huggingface.co/papers/2502.07586

13. AdaPTS: Adapting Univariate Foundation Models to Probabilistic Multivariate Time Series Forecasting

🔑 Keywords: Pre-trained foundation models, adapters, multivariate tasks, forecasting accuracy, uncertainty quantification

💡 Category: Machine Learning

🌟 Research Objective:

– To address challenges in using pre-trained foundation models for multivariate time series forecasting by introducing adapters that manage feature dependencies and quantify uncertainty.

🛠️ Research Methods:

– Utilization of feature-space transformations and strategies inspired by representation learning and Bayesian neural networks to implement adapters for projecting multivariate inputs into a latent space.

💬 Research Conclusions:

– The introduction of adapters significantly improves forecasting accuracy and uncertainty quantification, validated through experiments on synthetic and real-world datasets, thus promoting the effective application of time series foundation models in multivariate contexts.

👉 Paper link: https://huggingface.co/papers/2502.10235

14. Small Models, Big Impact: Efficient Corpus and Graph-Based Adaptation of Small Multilingual Language Models for Low-Resource Languages

🔑 Keywords: Low-resource languages, Multilingual models, Adapter-based methods, Sequential Bottleneck, Invertible Bottleneck

💡 Category: Natural Language Processing

🌟 Research Objective:

– Investigate parameter-efficient adapter-based methods for adapting smaller multilingual models to low-resource languages.

🛠️ Research Methods:

– Evaluate three adapter architectures: Sequential Bottleneck, Invertible Bottleneck, and Low-Rank Adaptation using data from GlotCC and ConceptNet.

💬 Research Conclusions:

– Sequential Bottleneck adapters excel in language modeling, while Invertible Bottleneck adapters perform better in downstream tasks. Adapter-based methods match or surpass full fine-tuning with fewer parameters, highlighting the effectiveness of smaller models for low-resource languages.

👉 Paper link: https://huggingface.co/papers/2502.10140

15. Text-guided Sparse Voxel Pruning for Efficient 3D Visual Grounding

🔑 Keywords: 3D visual grounding, sparse convolution, text-guided pruning, completion-based addition

💡 Category: Computer Vision

🌟 Research Objective:

– The paper aims to develop an efficient multi-level convolution architecture for real-time 3D visual grounding.

🛠️ Research Methods:

– The study proposes a novel framework using text-guided pruning (TGP) for iterative sparsification and completion-based addition (CBA) to enhance interaction between 3D scene representations and text features.

💬 Research Conclusions:

– The proposed method doubles the inference speed, achieving state-of-the-art accuracy and outperforms previous methods by 100% FPS and shows significant accuracy improvements on benchmarks like ScanRefer, NR3D, and SR3D.

👉 Paper link: https://huggingface.co/papers/2502.10392

16. Selective Self-to-Supervised Fine-Tuning for Generalization in Large Language Models

🔑 Keywords: Large Language Models, Fine-Tuning, Generalization, S3FT, Supervised Fine-Tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce and evaluate Selective Self-to-Supervised Fine-Tuning (S3FT), a fine-tuning approach aimed at improving generalization in Large Language Models while maintaining performance on specific tasks.

🛠️ Research Methods:

– S3FT leverages multiple valid responses to a query and uses a judge to identify correct model responses from the training set. It then fine-tunes using these responses along with gold responses to reduce model specialization.

💬 Research Conclusions:

– The study demonstrates that S3FT reduces average performance drop by half compared to standard supervised fine-tuning (SFT) on benchmarks like MMLU and TruthfulQA, enhancing generalization capabilities and task performance.

👉 Paper link: https://huggingface.co/papers/2502.08130

17. STMA: A Spatio-Temporal Memory Agent for Long-Horizon Embodied Task Planning

🔑 Keywords: Spatio-Temporal Memory, Task Planning, Embodied Agents

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The aim is to enable agents to execute long-horizon tasks in dynamic environments while maintaining robust decision-making and adaptability using the Spatio-Temporal Memory Agent (STMA).

🛠️ Research Methods:

– Introduction of STMA with three components: spatio-temporal memory module, dynamic knowledge graph, and planner-critic mechanism. Evaluation conducted in the TextWorld environment across 32 tasks.

💬 Research Conclusions:

– STMA shows a 31.25% improvement in success rate and a 24.7% increase in average score over existing models, demonstrating the effectiveness of spatio-temporal memory in enhancing the memory capabilities of embodied agents.

👉 Paper link: https://huggingface.co/papers/2502.10177

18. Jailbreaking to Jailbreak

🔑 Keywords: LLMs, Refusal Training, Jailbreaks, AI Safety

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce a novel method leveraging jailbroken LLMs to enhance attack strategies against refusal-trained language models.

🛠️ Research Methods:

– Utilize a human-operated jailbreak approach to convert refusal-trained LLMs into J_2 attackers capable of self-jailbreaking and red teaming.

💬 Research Conclusions:

– Demonstrate that LLMs like Sonnet 3.5 and Gemini 1.5 pro achieve high attack success rates in strategic red teaming, revealing overlooked vulnerabilities in AI safeguards.

👉 Paper link: https://huggingface.co/papers/2502.09638

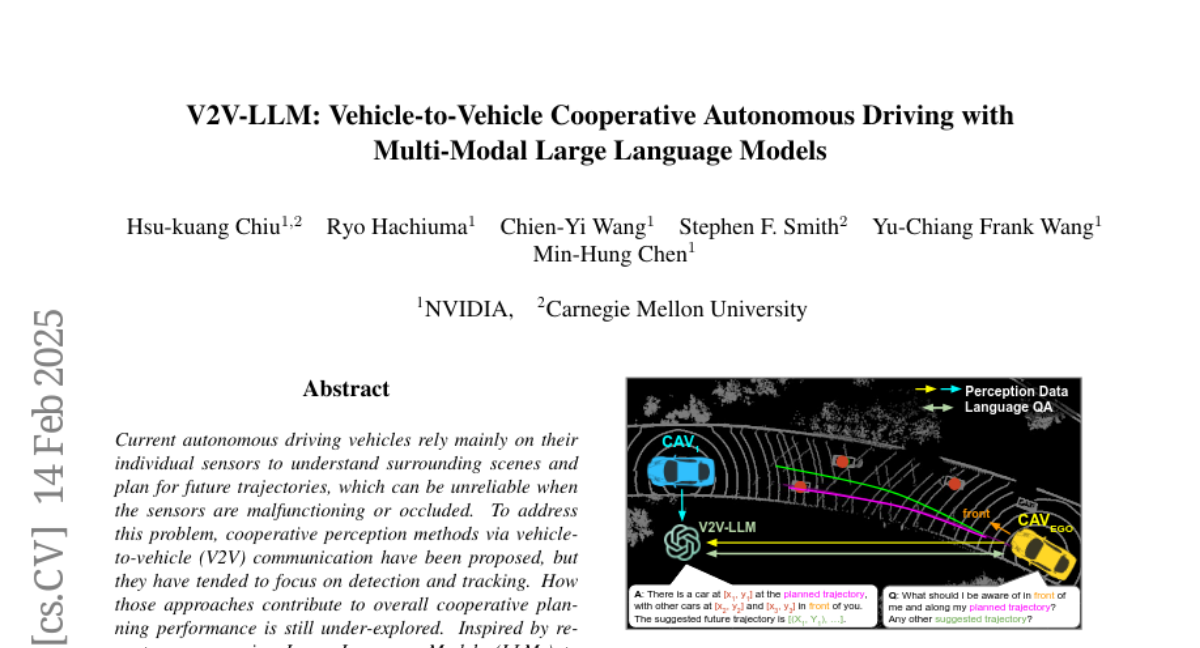

19. V2V-LLM: Vehicle-to-Vehicle Cooperative Autonomous Driving with Multi-Modal Large Language Models

🔑 Keywords: Autonomous Driving, V2V Communication, Large Language Models, Cooperative Perception, Vehicle-to-Vehicle Question-Answering

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To enhance autonomous driving by integrating Large Language Models (LLMs) into cooperative perception methods, focusing on overall cooperative planning performance.

🛠️ Research Methods:

– Development of a novel problem setting and the Vehicle-to-Vehicle Question-Answering (V2V-QA) dataset.

– Introduction of a baseline method, Vehicle-to-Vehicle Large Language Model (V2V-LLM), which integrates perception information from multiple connected autonomous vehicles.

💬 Research Conclusions:

– The proposed V2V-LLM demonstrates a promising unified model architecture that excels in performing various tasks in cooperative autonomous driving compared to other fusion approaches.

– This research opens a new direction to potentially improve the safety of future autonomous driving systems.

👉 Paper link: https://huggingface.co/papers/2502.09980

20. MRS: A Fast Sampler for Mean Reverting Diffusion based on ODE and SDE Solvers

🔑 Keywords: Controllable Generation, Diffusion Models, MR Diffusion, MR Sampler, Image Restoration

💡 Category: Generative Models

🌟 Research Objective:

– To develop an efficient algorithm named MR Sampler that reduces the sampling NFEs in MR Diffusion to make generation controllable and practical.

🛠️ Research Methods:

– Solving reverse-time SDE and PF-ODE associated with MR Diffusion to derive semi-analytical solutions consisting of an analytical function and a neural network parameterized integral.

💬 Research Conclusions:

– MR Sampler achieves a 10 to 20 times speedup in sampling while maintaining high quality across ten image restoration tasks, enhancing the practicality of controllable generation in MR Diffusion.

👉 Paper link: https://huggingface.co/papers/2502.07856

21. Agentic End-to-End De Novo Protein Design for Tailored Dynamics Using a Language Diffusion Model

🔑 Keywords: Generative AI, protein design, vibrational modes, de novo

💡 Category: Generative Models

🌟 Research Objective:

– The paper presents VibeGen, a generative AI framework designed to engineer proteins with specific dynamic properties based on vibrational modes.

🛠️ Research Methods:

– VibeGen uses a dual-model architecture: a protein designer to generate sequences and a predictor to evaluate their dynamic accuracy, validated via full-atom molecular simulations.

💬 Research Conclusions:

– The designed proteins match prescribed vibrational amplitudes and adopt stable, functionally relevant structures, expanding protein design beyond natural evolutionary constraints and suggesting implications for biomolecule engineering.

👉 Paper link: https://huggingface.co/papers/2502.10173

22. Cluster and Predict Latents Patches for Improved Masked Image Modeling

🔑 Keywords: Masked Image Modeling, Self-Supervised Learning, Clustering-based Loss

💡 Category: Computer Vision

🌟 Research Objective:

– To introduce CAPI, a novel framework for Masked Image Modeling (MIM) that improves self-supervised representation learning by predicting latent clusterings.

🛠️ Research Methods:

– Systematic analysis of target representations, loss functions, and architectures; implementation of a clustering-based loss that is stable for training and exhibits good scaling properties.

💬 Research Conclusions:

– The CAPI framework, using a ViT-L backbone, achieves high performance with 83.8% accuracy on ImageNet and 32.1% mIoU on ADE20K, substantially surpassing previous MIM methods and approaching state-of-the-art performance such as DINOv2.

👉 Paper link: https://huggingface.co/papers/2502.08769

23. CLaMP 3: Universal Music Information Retrieval Across Unaligned Modalities and Unseen Languages

🔑 Keywords: Cross-modal generalization, Cross-lingual generalization, Music Information Retrieval, Contrastive Learning, Multimodal

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop CLaMP 3, a framework aimed at addressing cross-modal and cross-lingual generalization challenges in music information retrieval (MIR).

🛠️ Research Methods:

– Utilization of contrastive learning to align various music modalities with multilingual text in a shared representation space.

– Development of a multilingual text encoder adaptable to unseen languages, and creation of M4-RAG, a comprehensive music-text dataset.

💬 Research Conclusions:

– CLaMP 3 demonstrates state-of-the-art performance in multiple MIR tasks, significantly outperforming previous baselines, highlighting its effectiveness in multimodal and multilingual music contexts.

👉 Paper link: https://huggingface.co/papers/2502.10362