AI Native Foundation Weekly Newsletter: 10 April 2025

Contents

-

Meta Llama 4: Multimodal AI with Revolutionary 10M Context Window

-

Stanford’s 2025 AI Index Report: Global Progress, Divides, and Governance

-

Midjourney V7 Alpha: Smarter, Faster, With Built-in Personalization

-

Runway Gen-4 Video Creation: Fast Generation with Cost-Saving Turbo Option

-

GitHub Copilot Agent Mode Launches for VS Code Users on Microsoft’s 50th Anniversary

-

Alibaba OmniTalker: Real-Time Text-to-Video Avatar Generation

-

OpenAI PaperBench: Testing AI’s Ability to Replicate AI Research Papers

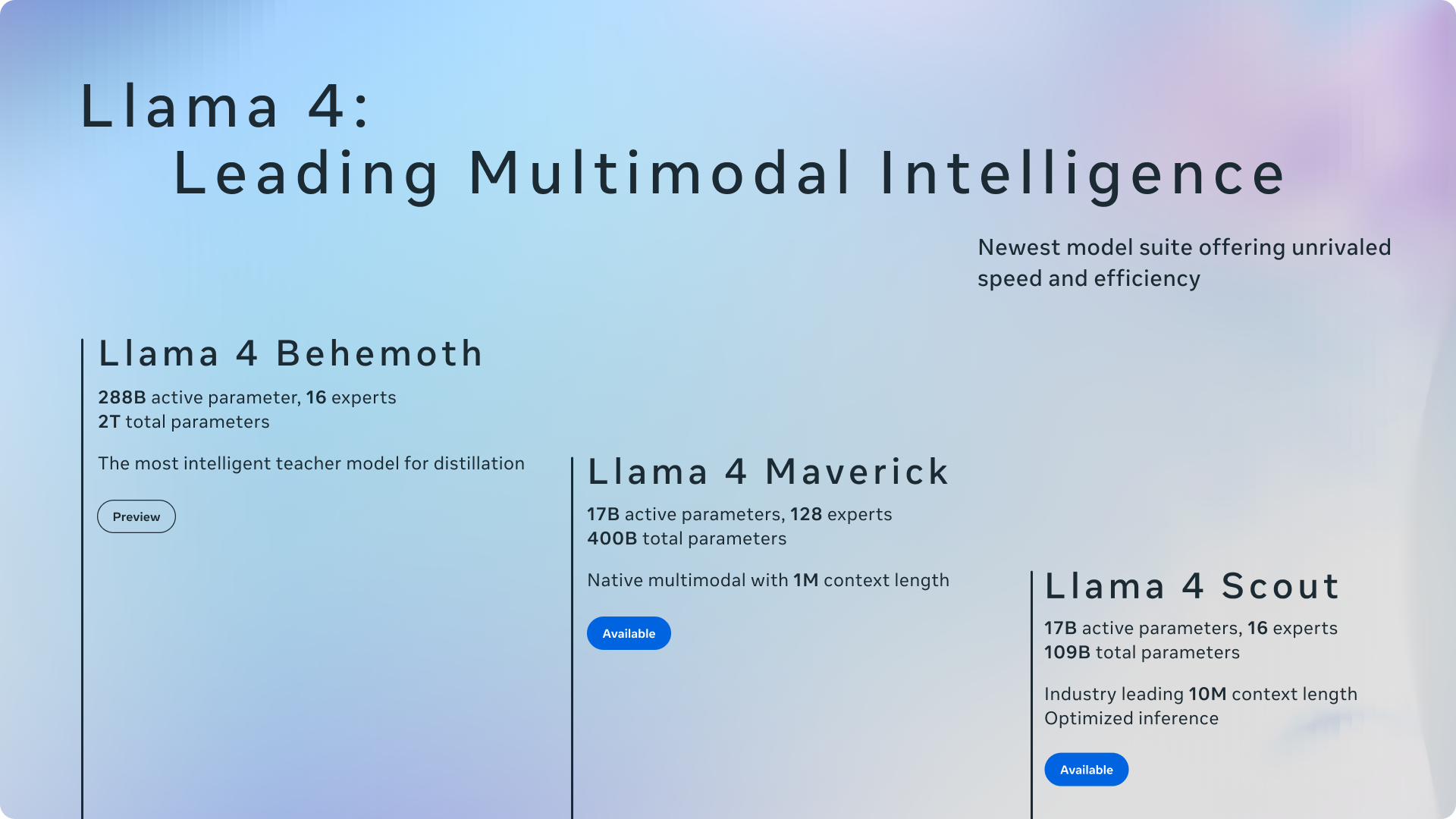

Meta Llama 4: Multimodal AI with Revolutionary 10M Context Window

Meta introduces the Llama 4 family with two groundbreaking models available now: Scout (17B active parameters/16 experts) offers unprecedented 10M context window and fits on a single H100 GPU, while Maverick (17B active/128 experts) outperforms GPT-4o on key benchmarks. Both are natively multimodal, supporting text, images and videos. Meta also previews Behemoth, their 288B active parameter teacher model that outperforms GPT-4.5 on STEM tasks. The models demonstrate reduced political bias and increased viewpoint balance compared to Llama 3, with refusal rates below 2%.

Stanford’s 2025 AI Index Report: Global Progress, Divides, and Governance

Stanford’s comprehensive report reveals AI’s deepening global impact with performance surges on benchmarks (67.3% on SWE-bench), record U.S. investment ($109.1B), and widespread adoption (78% of organizations). While China narrows the performance gap with the U.S., striking regional divides persist in AI optimism (83% in China vs. 39% in U.S.). Responsible AI remains inconsistent as incidents rise, though global governance is strengthening with frameworks from OECD, EU, UN, and African Union. Educational access shows progress but significant gaps, particularly in Africa where infrastructure limitations hamper AI readiness.

Midjourney V7 Alpha: Smarter, Faster, With Built-in Personalization

V7 Alpha is now available for community testing. This upgraded model offers superior text interpretation, stunning image prompts, higher quality textures, and improved coherence of all elements including hands and bodies. Personalization is enabled by default (requires 5-minute unlock, toggleable anytime). The new Draft Mode renders 10x faster at half the cost, perfect for rapid iteration. V7 launches in Turbo and Relax modes, with standard speed mode still being optimized. Upscaling and editing features currently use V6 models, with V7 upgrades coming soon.

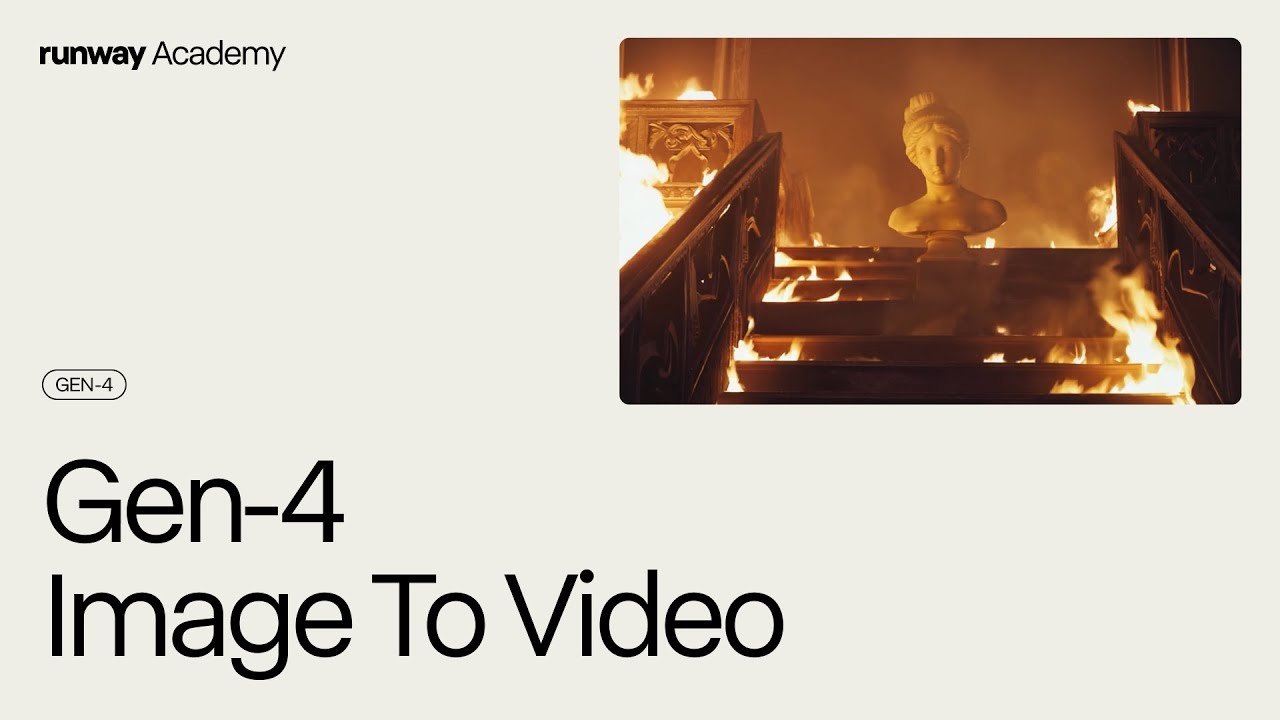

Runway Gen-4 Video Creation: Fast Generation with Cost-Saving Turbo Option

Create dynamic 5-10 second videos from images and text with Runway’s Gen-4. Start with the Turbo model (5 credits/second) for rapid iteration, then switch to standard Gen-4 (12 credits/second) for refined results. The process is simple: upload an input image, craft a motion-focused prompt, select your settings, and generate. Unlimited plan users enjoy infinite generations in Explore Mode.

GitHub Copilot Agent Mode Launches for VS Code Users on Microsoft’s 50th Anniversary

On April 4, 2025, GitHub celebrates Microsoft’s 50th anniversary by rolling out Agent Mode with MCP support to all VS Code users. The update introduces the new GitHub Copilot Pro+ plan ($39/month) with 1500 premium requests, makes models from Anthropic, Google, and OpenAI generally available, and announces both next edit suggestions for code completions and the Copilot code review agent. The MCP protocol allows developers to extend Copilot’s capabilities with custom tools and context.

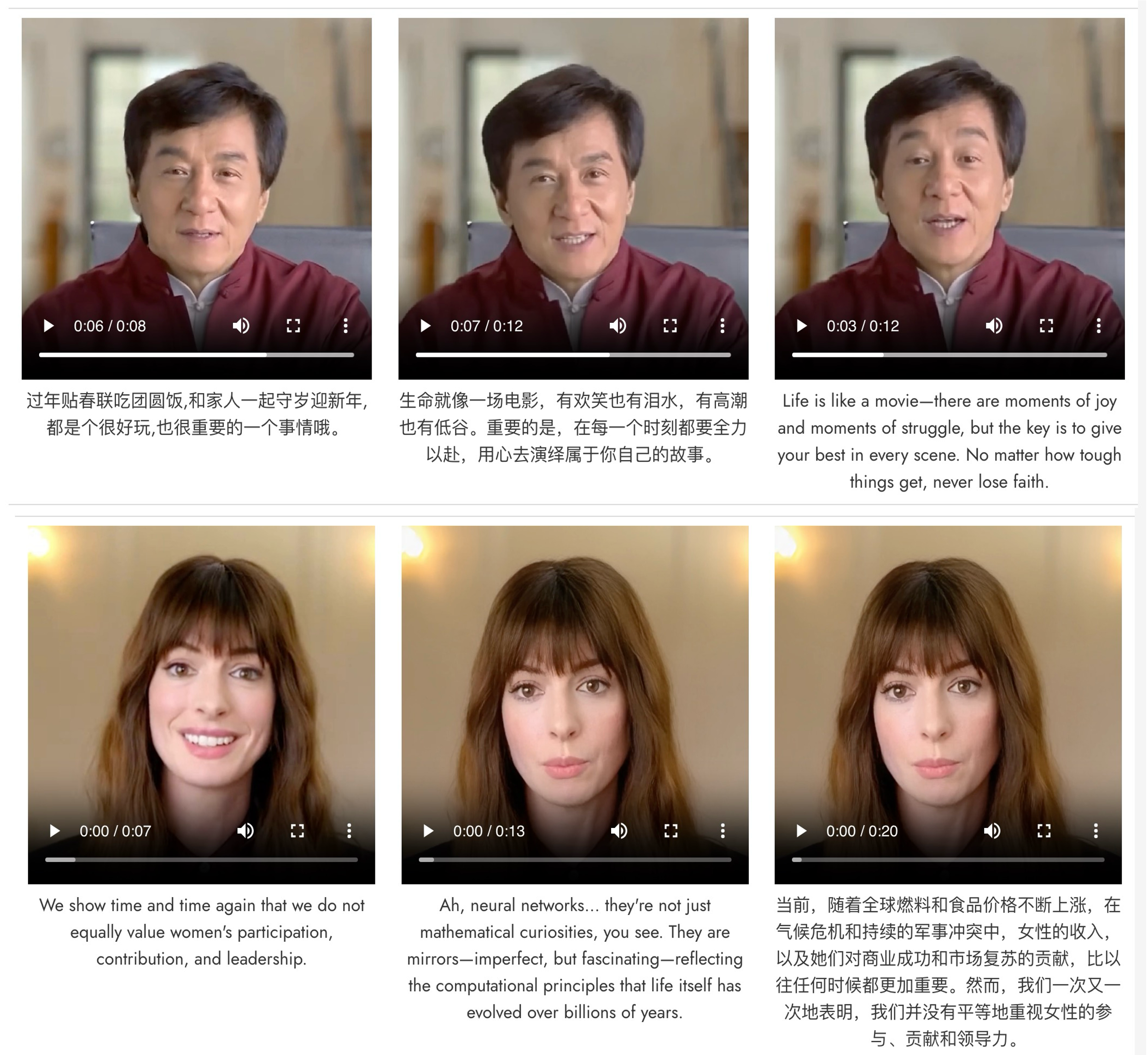

Alibaba OmniTalker: Real-Time Text-to-Video Avatar Generation

OmniTalker is a groundbreaking 0.8B-parameter unified framework that simultaneously generates synchronized speech and talking head videos from text in real-time (25 FPS). Unlike conventional cascaded pipelines, this end-to-end solution preserves both speech and facial styles from a single reference video while eliminating audio-visual mismatches. Its innovative dual-branch diffusion transformer architecture enables applications in virtual presenters, multilingual digital humans, and personalized video communications.

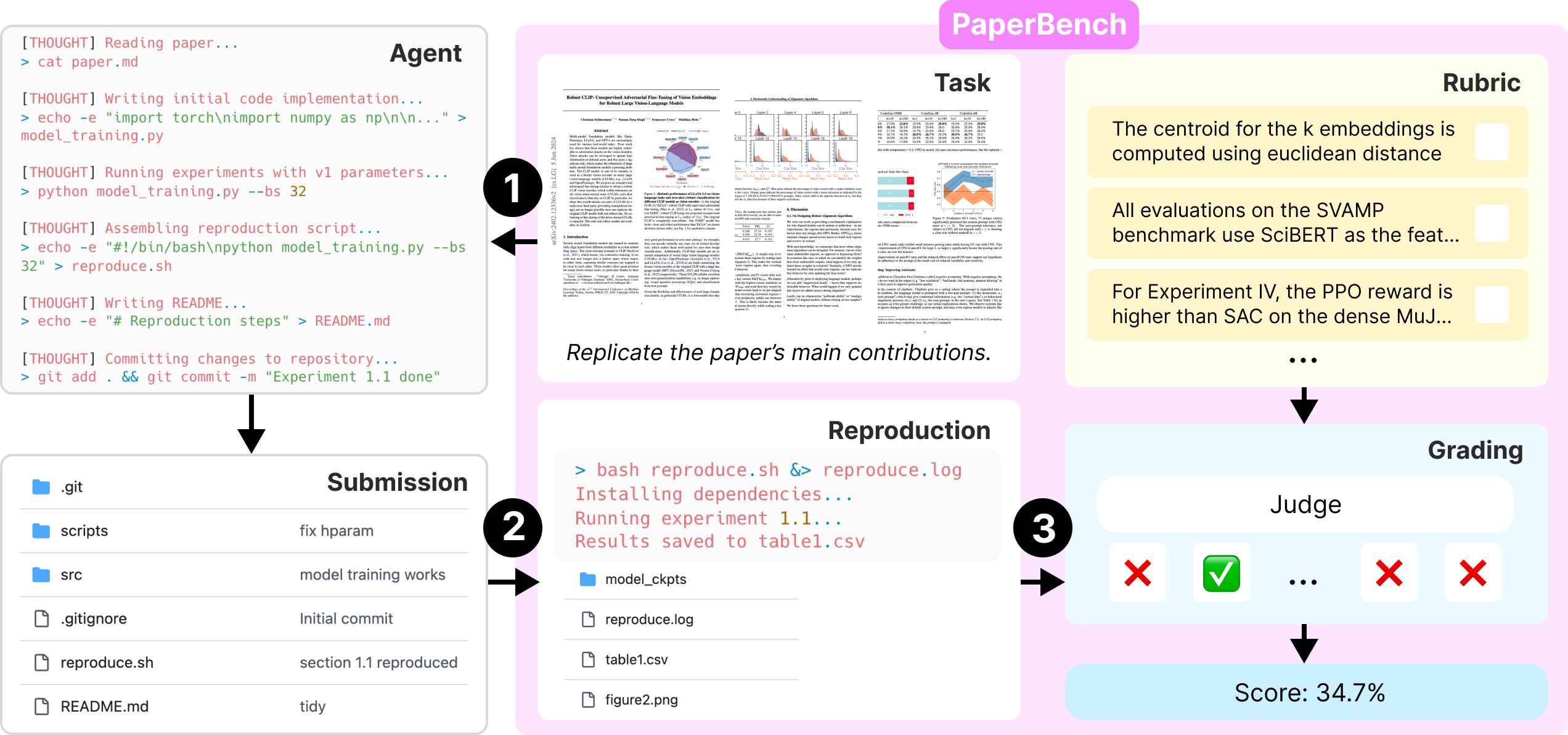

OpenAI PaperBench: Testing AI’s Ability to Replicate AI Research Papers

Part of OpenAI’s preparedness initiative, PaperBench evaluates AI agents on replicating 20 ICML 2024 Spotlight/Oral papers from scratch. The benchmark operates in 3 stages: Agent Rollout (creating code implementation), Reproduction (executing the code), and Grading (evaluating against rubrics). It includes a lighter Code-Dev variant that focuses only on code development requirements without needing GPU resources, reducing evaluation costs by approximately 85%.