AI Native Daily Paper Digest – 20250505

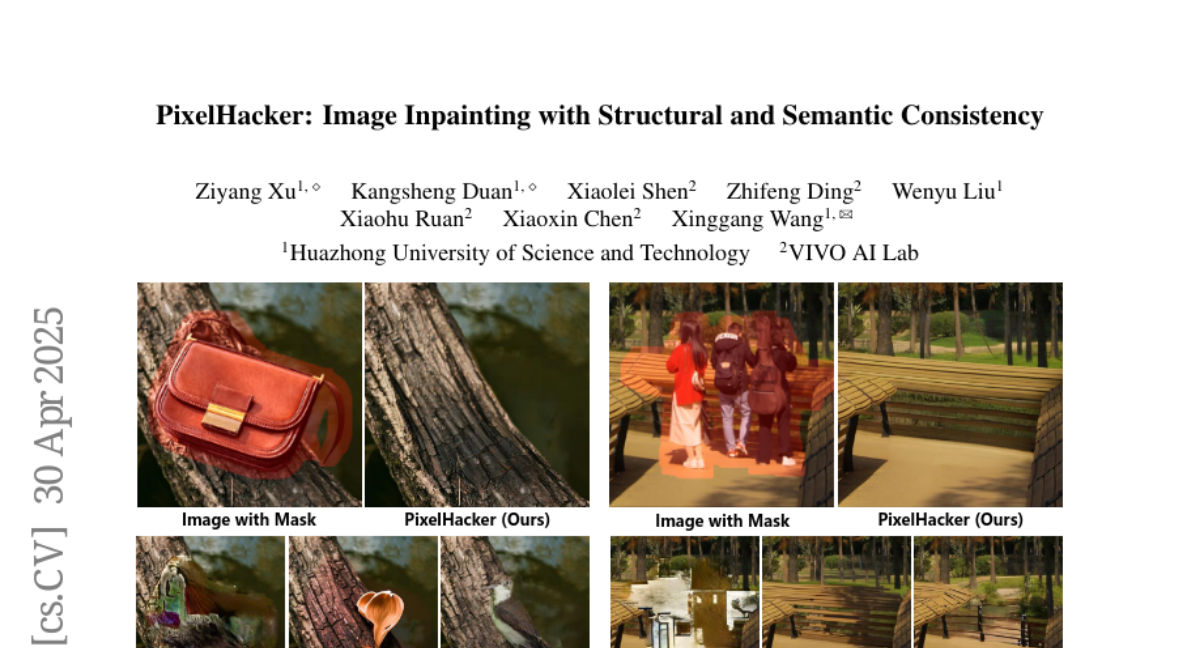

1. PixelHacker: Image Inpainting with Structural and Semantic Consistency

🔑 Keywords: Image Inpainting, Latent Categories Guidance, Diffusion-Based Model, PixelHacker, Linear Attention

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to overcome the limitations of current image inpainting methods on images with complex structures and semantics by designing a new paradigm, latent categories guidance, and a diffusion-based model named PixelHacker.

🛠️ Research Methods:

– Constructs a large dataset of 14 million image-mask pairs, encodes foreground and background representations separately into fixed-size embeddings, and injects these features into the denoising process via linear attention.

💬 Research Conclusions:

– After pre-training on this dataset and fine-tuning on benchmarks, PixelHacker outperforms state-of-the-art methods across various datasets and exhibits remarkable structural and semantic consistency.

👉 Paper link: https://huggingface.co/papers/2504.20438
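
The summary says the foreground and background embeddings enter the denoising process through linear attention but gives no further detail. Below is a minimal sketch of generic kernelized linear attention, assuming the feature map phi(x) = elu(x) + 1 and using it as cross-attention from latent tokens to a small set of conditioning embeddings. Learned query/key/value projections are omitted, and the names `elu_plus_one`, `linear_cross_attention` and `inject_condition` are hypothetical. This is not PixelHacker's implementation.

```python
import math


def elu_plus_one(x):
    # Feature map phi(x) = elu(x) + 1: x + 1 for x > 0, exp(x) otherwise,
    # so every feature is strictly positive and the normalizer never vanishes.
    return x + 1.0 if x > 0 else math.exp(x)


def linear_cross_attention(queries, keys, values):
    """out_i = phi(q_i)^T S / (phi(q_i)^T z), with S and z summarizing all keys/values.

    queries: n latent tokens (d_k-vectors); keys/values: m conditioning
    embeddings (d_k- and d_v-vectors). Cost grows with n + m, not n * m.
    """
    d_k, d_v = len(keys[0]), len(values[0])
    # Summarize the conditioning set once: S = sum_j phi(k_j) v_j^T, z = sum_j phi(k_j).
    S = [[0.0] * d_v for _ in range(d_k)]
    z = [0.0] * d_k
    for k, v in zip(keys, values):
        phi_k = [elu_plus_one(x) for x in k]
        for a in range(d_k):
            z[a] += phi_k[a]
            for b in range(d_v):
                S[a][b] += phi_k[a] * v[b]
    out = []
    for q in queries:
        phi_q = [elu_plus_one(x) for x in q]
        denom = sum(phi_q[a] * z[a] for a in range(d_k))
        out.append([sum(phi_q[a] * S[a][b] for a in range(d_k)) / denom for b in range(d_v)])
    return out


def inject_condition(latents, cond_embeddings):
    # Residual injection (hypothetical): each latent token attends to the
    # conditioning embeddings (keys = values here) and adds the result to itself.
    attended = linear_cross_attention(latents, cond_embeddings, cond_embeddings)
    return [[x + a for x, a in zip(lat, att)] for lat, att in zip(latents, attended)]


# Usage: three 2-d latent tokens and two hypothetical foreground/background embeddings.
latents = [[0.1, -0.2], [0.5, 0.3], [-1.0, 0.0]]
fg_bg = [[1.0, 0.0], [0.0, 1.0]]
updated = inject_condition(latents, fg_bg)
```

Because phi is positive, each attention output is a convex combination of the value vectors, like softmax attention. The key/value summary is computed once and reused for every latent token.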

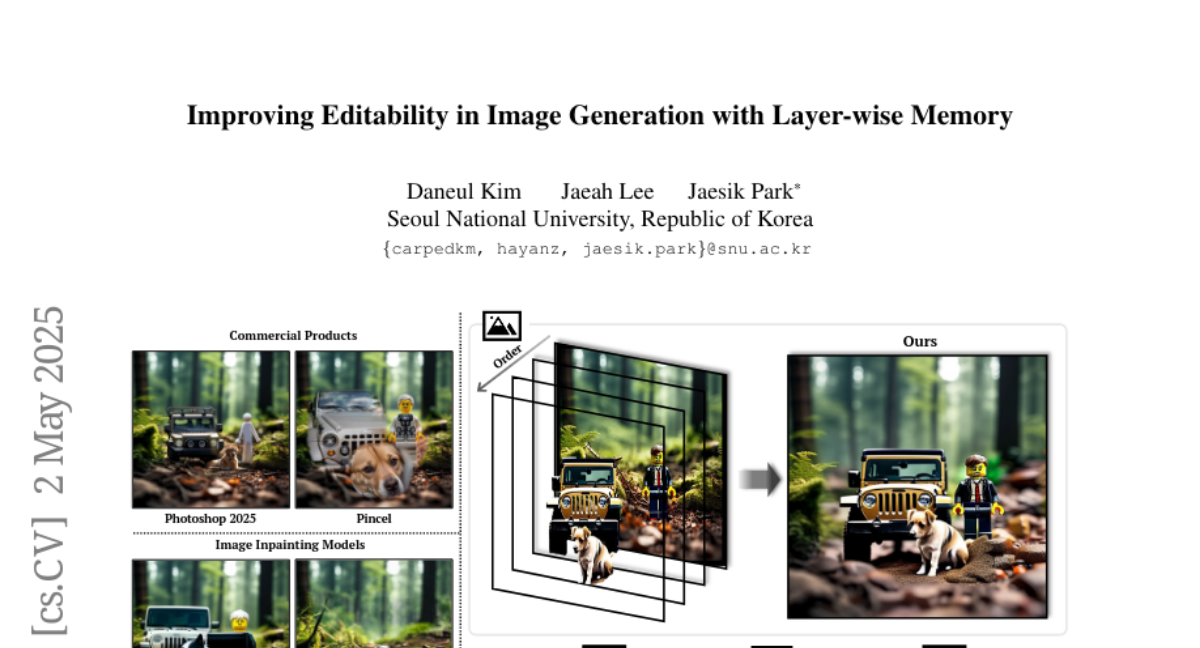

2. Improving Editability in Image Generation with Layer-wise Memory

🔑 Keywords: sequential editing, latent representations, Multi-Query Disentanglement, iterative image editing

💡 Category: Computer Vision

🌟 Research Objective:

– The primary aim is to address the limitations of current image editing approaches in preserving previous edits and naturally integrating new objects during sequential editing.

🛠️ Research Methods:

– The framework accepts rough mask inputs, uses layer-wise memory to store latent representations of previous edits, and introduces Background Consistency Guidance and Multi-Query Disentanglement to keep editing coherent across multiple modifications.

💬 Research Conclusions:

– The proposed method demonstrates superior performance in iterative image editing, producing high-quality results that maintain scene coherence while requiring only rough masks from the user.

👉 Paper link: https://huggingface.co/papers/2505.01079

3. Beyond One-Size-Fits-All: Inversion Learning for Highly Effective NLG Evaluation Prompts

🔑 Keywords: NLG, LLM-based evaluation, prompt design, inversion learning, reverse mappings

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose an inversion learning method that creates effective reverse mappings to improve NLG evaluation efficiency and robustness.

🛠️ Research Methods:

– Develops a technique that learns reverse mappings from LLM outputs back to their input instructions, enabling automatic generation of model-specific evaluation prompts from only a single evaluation sample.

💬 Research Conclusions:

– The proposed method enhances LLM-based evaluation by eliminating manual prompt engineering, thus improving both efficiency and robustness.

👉 Paper link: https://huggingface.co/papers/2504.21117

4. Llama-Nemotron: Efficient Reasoning Models

🔑 Keywords: Llama-Nemotron, reasoning models, Neural Architecture Search, dynamic reasoning toggle, open-source

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To introduce the Llama-Nemotron series, a family of open-source heterogeneous reasoning models designed for exceptional reasoning capabilities and efficient enterprise use.

🛠️ Research Methods:

– The training procedure applies Neural Architecture Search to Llama 3 models, followed by knowledge distillation, continued pretraining, and a reasoning-focused post-training stage consisting of supervised fine-tuning and large-scale reinforcement learning.

💬 Research Conclusions:

– Llama-Nemotron models offer competitive performance with superior inference throughput and memory efficiency. They are the first open-source models to support a dynamic reasoning toggle, and they are released under a commercially permissive open model license agreement to promote open research and model development.

👉 Paper link: https://huggingface.co/papers/2505.00949
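
Knowledge distillation appears above as one training step, but the summary does not say which loss is used. Below is a minimal sketch of the classic temperature-scaled soft-target loss for a single token's logits. It assumes a KL-divergence objective between teacher and student and should not be read as the exact objective used for Llama-Nemotron. `softmax` and `distillation_loss` are illustrative names.

```python
import math


def softmax(logits, temperature=1.0):
    # Temperature-scaled softmax, shifted by the max logit for numerical stability.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    # KL(teacher || student) on temperature-softened distributions, scaled by T^2
    # so gradient magnitudes stay comparable across temperatures.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_teacher, p_student))
    return kl * temperature ** 2


# Usage: next-token logits over a toy 3-word vocabulary.
teacher = [1.0, 2.0, 3.0]
student = [3.0, 2.0, 1.0]
loss = distillation_loss(student, teacher)
```

The loss is zero only when the student reproduces the teacher's softened distribution. A higher temperature exposes more of the teacher's relative preferences among non-top tokens.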

5. Real-World Gaps in AI Governance Research

🔑 Keywords: Generative AI, Model Alignment, Ethical AI, Deployment, Corporate AI Research

💡 Category: AI Ethics and Fairness

🌟 Research Objective:

– The research aims to compare the focus of AI research outputs between leading AI companies and universities, particularly in the areas of safety and reliability.

🛠️ Research Methods:

– The study analyzes 1,178 papers on safety and reliability from a pool of 9,439 generative AI papers authored between January 2020 and March 2025, focusing on pre-deployment and deployment-stage areas.

💬 Research Conclusions:

– Corporate AI research increasingly concentrates on pre-deployment areas such as model alignment and testing, while attention to deployment-stage issues like model bias has declined. Significant research gaps exist in high-risk domains such as healthcare, finance, and misinformation, and better observability into deployed AI is needed to close them.

👉 Paper link: https://huggingface.co/papers/2505.00174

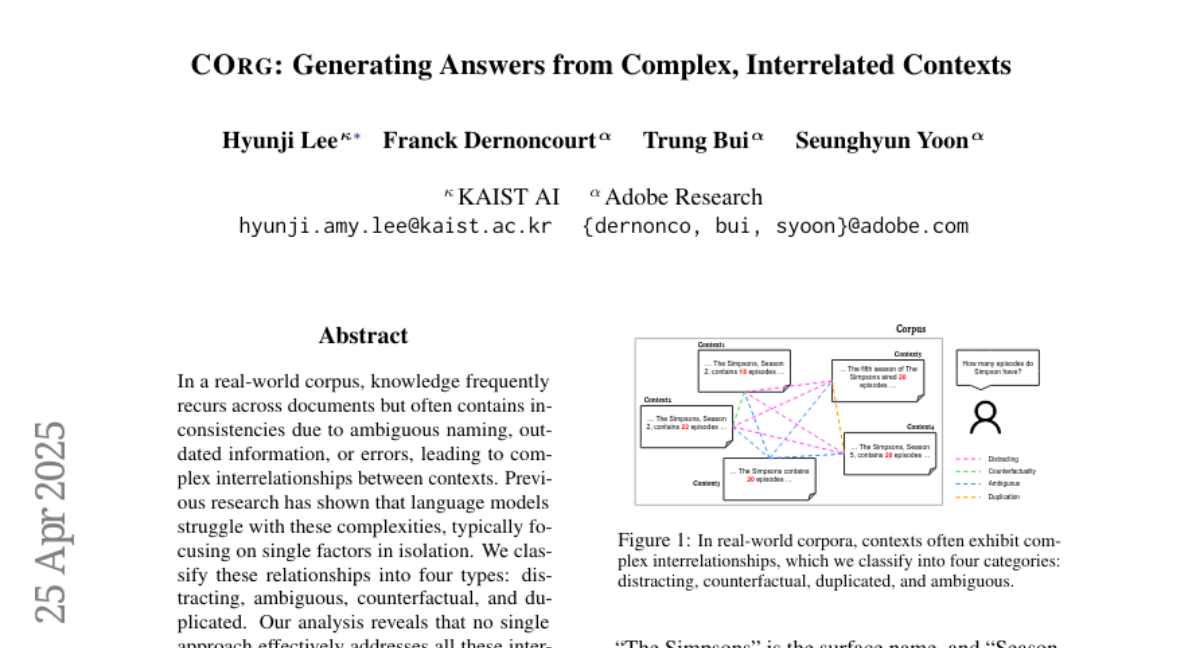

6. CORG: Generating Answers from Complex, Interrelated Contexts

🔑 Keywords: Context Organizer, CORG, graph constructor, reranker, aggregator

💡 Category: Natural Language Processing

🌟 Research Objective:

– To handle knowledge that recurs across real-world corpora, addressing four types of interrelationships between contexts: distracting, ambiguous, counterfactual, and duplicated.

🛠️ Research Methods:

– Introduces CORG, a framework comprising a graph constructor, a reranker, and an aggregator, which organizes contexts into independently processed groups.

💬 Research Conclusions:

– CORG effectively balances performance and efficiency, outperforming existing grouping methods and matching the results of more computationally intensive approaches.

👉 Paper link: https://huggingface.co/papers/2505.00023

7. X-Cross: Dynamic Integration of Language Models for Cross-Domain Sequential Recommendation

🔑 Keywords: X-Cross, recommendation systems, low-rank adapters (LoRA), cross-domain tasks, computational overhead

💡 Category: Machine Learning

🌟 Research Objective:

– The paper introduces X-Cross, a cross-domain sequential-recommendation model designed to adapt to new product domains quickly without requiring extensive retraining.

🛠️ Research Methods:

– Integrates multiple domain-specific language models, each fine-tuned with low-rank adapters (LoRA), and dynamically refines and propagates knowledge across domains; experiments use Amazon datasets.

💬 Research Conclusions:

– X-Cross matches the performance of a model fine-tuned with LoRA while using only 25% of the additional parameters, and it needs significantly less fine-tuning data in cross-domain tasks, improving scalability and reducing computational overhead.

👉 Paper link: https://huggingface.co/papers/2504.20859
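
X-Cross builds on LoRA-adapted language models. Below is a minimal sketch of a single LoRA-adapted linear layer, y = W x + (alpha / r) * B A x, to show where the adapter's parameter savings come from. It does not reproduce X-Cross's cross-domain integration. `matvec`, `LoRALinear` and the deterministic initialization of A are illustrative assumptions.

```python
def matvec(M, x):
    # Plain matrix-vector product for a matrix stored as a list of rows.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W, r, alpha, A=None, B=None):
        self.W = W
        d_out, d_in = len(W), len(W[0])
        self.r = r
        self.scale = alpha / r
        # A (r x d_in) is normally randomly initialized; a small deterministic
        # pattern stands in here so the example is reproducible.
        self.A = A if A is not None else [[0.01 * ((i + j) % 3 - 1) for j in range(d_in)] for i in range(r)]
        # B (d_out x r) starts at zero, so the adapter is initially a no-op.
        self.B = B if B is not None else [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W, x)                   # frozen path
        delta = matvec(self.B, matvec(self.A, x))  # low-rank trainable path
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are trained: r * d_in + d_out * r parameters.
        return self.r * (len(self.W[0]) + len(self.W))


# Usage: a 2x3 frozen layer with a rank-1 adapter.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
tuned = LoRALinear(W, r=1, alpha=2.0, A=[[1.0, 0.0, 0.0]], B=[[1.0], [0.0]])
y = tuned.forward([1.0, 2.0, 3.0])
```

For a 4096 x 4096 projection with r = 8, the adapter trains 65,536 parameters, compared with 16,777,216 for full fine-tuning of that matrix.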

8. TeLoGraF: Temporal Logic Planning via Graph-encoded Flow Matching

🔑 Keywords: STL specifications, Graph Neural Networks, flow-matching, 7DoF Franka Panda robot arm, Ant quadruped navigation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The paper aims to overcome the reliance of existing methods on fixed or parametrized Signal Temporal Logic (STL) specifications by proposing TeLoGraF, a more flexible approach that uses Graph Neural Networks to learn solutions for general STL specifications.

🛠️ Research Methods:

– The authors combine a Graph Neural Network (GNN) encoder with flow matching in TeLoGraF, train it on diverse STL datasets, and demonstrate its performance across simulation environments ranging from simple 2D models to complex robots such as the 7DoF Franka Panda arm and the Ant quadruped.

💬 Research Conclusions:

– TeLoGraF significantly outperforms traditional STL planning algorithms in STL satisfaction rate and inference speed, running 10-100 times faster. It can handle complex STL specifications and remains robust to out-of-distribution ones.

👉 Paper link: https://huggingface.co/papers/2505.00562
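
TeLoGraF pairs a GNN encoder with flow matching. Below is a minimal sketch of generic flow matching on the linear path x_t = (1 - t) x0 + t x1: the regression target for the velocity network, and forward-Euler sampling from t = 0 to t = 1. The `cond` argument stands in for what would be the GNN embedding of an STL specification. `oracle_velocity` is a hypothetical hand-written field (the exact velocity toward one known endpoint), not a trained network. None of these names come from the paper.

```python
def interpolate(x0, x1, t):
    # Linear probability path: x_t = (1 - t) * x0 + t * x1.
    return [(1 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    # Time derivative of the path above, the regression target for v_theta.
    return [b - a for a, b in zip(x0, x1)]


def flow_matching_loss(velocity_fn, x0, x1, t, cond):
    # Mean squared error between predicted and target velocity at one (x0, x1, t) sample.
    xt = interpolate(x0, x1, t)
    pred = velocity_fn(xt, t, cond)
    target = target_velocity(x0, x1)
    return sum((p - u) ** 2 for p, u in zip(pred, target)) / len(target)


def euler_sample(velocity_fn, x0, cond, steps=10):
    # Integrate dx/dt = v(x, t, cond) from t = 0 to t = 1 with forward Euler.
    x, dt = list(x0), 1.0 / steps
    for k in range(steps):
        v = velocity_fn(x, k * dt, cond)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def oracle_velocity(x, t, goal):
    # Hypothetical stand-in for a trained network: exact velocity of the linear
    # path toward a single known endpoint `goal`, which here plays the role of cond.
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, goal)]


# Usage: transport a 2-d noise sample to a target point in 10 Euler steps.
x0 = [0.0, 0.0]
goal = [1.0, -2.0]
final = euler_sample(oracle_velocity, x0, goal, steps=10)
```

In training, `velocity_fn` would be a network regressed onto `target_velocity` over many sampled (x0, x1, t) triples. At inference, only the few Euler steps above are needed, which is one reason flow-matching samplers can be fast.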