AI Native Daily Paper Digest – 20250513

1. Seed1.5-VL Technical Report

🔑 Keywords: Vision-Language Foundation Model, Multimodal Understanding, Mixture-of-Experts, State-of-the-Art Performance, GUI Control

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To advance general-purpose multimodal understanding and reasoning with Seed1.5-VL.

🛠️ Research Methods:

– Developed a 532M-parameter vision encoder integrated with a Mixture-of-Experts LLM totaling 20B active parameters.

– Evaluated across public VLM benchmarks and internal suites.

💬 Research Conclusions:

– Seed1.5-VL achieved state-of-the-art performance on 38 out of 60 public benchmarks.

– Demonstrated superior performance in agent-centric tasks such as GUI control and gameplay, surpassing systems like OpenAI CUA and Claude 3.7.

– Exhibited strong reasoning capabilities effective for multimodal reasoning challenges.

👉 Paper link: https://huggingface.co/papers/2505.07062

2. MiMo: Unlocking the Reasoning Potential of Language Model — From Pretraining to Posttraining

🔑 Keywords: MiMo-7B, reasoning tasks, large language model, pre-training, post-training

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The primary goal is to develop MiMo-7B, a large language model optimized for reasoning tasks.

🛠️ Research Methods:

– Enhancement of data preprocessing and implementation of a three-stage data mixing strategy during pre-training.

– Integration of reinforcement learning with a test-difficulty-driven code-reward scheme in post-training.

💬 Research Conclusions:

– MiMo-7B-Base exhibits exceptional reasoning capabilities, outperforming larger models up to 32B.

– The advanced model, MiMo-7B-RL, excels in mathematics, programming, and general reasoning tasks, surpassing OpenAI o1-mini.

👉 Paper link: https://huggingface.co/papers/2505.07608

3. Step1X-3D: Towards High-Fidelity and Controllable Generation of Textured 3D Assets

🔑 Keywords: Generative AI, 3D Generation, Data Scarcity, Algorithmic Limitations, Open Framework

💡 Category: Generative Models

🌟 Research Objective:

– Step1X-3D addresses the underdevelopment in 3D generative AI by tackling challenges like data scarcity and algorithmic limitations.

🛠️ Research Methods:

– The study introduces a framework featuring a data curation pipeline for a 2M high-quality dataset and a two-stage 3D-native architecture using a combination of VAE-DiT geometry generator and diffusion-based texture synthesis.

💬 Research Conclusions:

– The framework’s state-of-the-art performance surpasses existing open-source methods and bridges 2D and 3D generation techniques, thereby setting new standards for open research in controllable 3D asset generation.

👉 Paper link: https://huggingface.co/papers/2505.07747

4. Learning from Peers in Reasoning Models

🔑 Keywords: Prefix Dominance Trap, Learning from Peers (LeaP), error correction, error tolerance, reasoning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To address the “Prefix Dominance Trap” in Large Reasoning Models (LRMs) by facilitating self-correction through peer interaction.

🛠️ Research Methods:

– Introduction of the Learning from Peers (LeaP) model using a routing mechanism to share reasoning insights among paths, and fine-tuning smaller models into the LeaP-T series to enhance their performance.

💬 Research Conclusions:

– LeaP models demonstrate substantial improvements in reasoning tasks, providing robust error correction and tolerance, with significant performance gains over baseline models and established benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.07787

5. Unified Continuous Generative Models

🔑 Keywords: Continuous Generative Models, Unified Framework, State-of-the-art, Diffusion Transformer, ImageNet

💡 Category: Generative Models

🌟 Research Objective:

– Introduce a unified framework for training, sampling, and analyzing continuous generative models to achieve superior performance.

🛠️ Research Methods:

– Implementation of Unified Continuous Generative Models Trainer and Sampler (UCGM-{T,S}) that demonstrates improved efficiency and performance in generative tasks.

💬 Research Conclusions:

– UCGM achieves state-of-the-art results on ImageNet, with impressive FID scores in reduced steps, showcasing its effectiveness over traditional multi-step and few-step models.

👉 Paper link: https://huggingface.co/papers/2505.07447

6. REFINE-AF: A Task-Agnostic Framework to Align Language Models via Self-Generated Instructions using Reinforcement Learning from Automated Feedback

🔑 Keywords: Large Language Models, human-annotated instruction data, semi-automated framework, Reinforcement Learning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore the efficiency of small open-source Large Language Models (LLMs) in generating instruction datasets with reduced human involvement and costs.

🛠️ Research Methods:

– Utilization of a semi-automated framework with small LLMs such as LLaMA 2-7B, LLama 2-13B, and Mistral 7B.

– Integration of a Reinforcement Learning (RL)-based training algorithm into the LLMs-based framework.

💬 Research Conclusions:

– The RL-based framework shows significant improvements in 63-66% of tasks compared to previous methods, demonstrating effectiveness in task performance enhancement.

👉 Paper link: https://huggingface.co/papers/2505.06548

7. AttentionInfluence: Adopting Attention Head Influence for Weak-to-Strong Pretraining Data Selection

🔑 Keywords: AttentionInfluence, reasoning-intensive pretraining, attention head masking, SmolLM corpus, weak-to-strong scaling

💡 Category: Natural Language Processing

🌟 Research Objective:

– To improve the complex reasoning ability of large language models (LLMs) by utilizing reasoning-intensive pretraining data without introducing domain-specific biases.

🛠️ Research Methods:

– Introduced AttentionInfluence, a training-free method using attention head masking to identify and select data.

– Applied this method to a 1.3B-parameter model on the SmolLM corpus, leading to the pretraining of a larger 7B-parameter model with mixed data.

💬 Research Conclusions:

– Demonstrated significant performance improvements on several knowledge-intensive benchmarks, showcasing an effective weak-to-strong scaling property for data selection strategies.

👉 Paper link: https://huggingface.co/papers/2505.07293

8. DanceGRPO: Unleashing GRPO on Visual Generation

🔑 Keywords: Generative Models, Reinforcement Learning, DanceGRPO, Visual Generation, Diffusion Models

💡 Category: Generative Models

🌟 Research Objective:

– Address the alignment challenges of generative model outputs with human preferences and improve RL-based visual generation methods.

🛠️ Research Methods:

– Introduction of DanceGRPO, a unified RL framework adapting Group Relative Policy Optimization to work across diverse generative paradigms, tasks, foundational models, and reward models.

💬 Research Conclusions:

– DanceGRPO demonstrates significant improvements, outperforming baselines by up to 181%, and establishes itself as a robust solution for scaling Reinforcement Learning from Human Feedback tasks in visual generation.

👉 Paper link: https://huggingface.co/papers/2505.07818

9. WebGen-Bench: Evaluating LLMs on Generating Interactive and Functional Websites from Scratch

🔑 Keywords: LLM-based agents, WebGen-Bench, GPT-4o, web applications, web-navigation agent

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to introduce WebGen-Bench, a novel benchmark to evaluate the ability of LLM-based agents to create multi-file website codebases from scratch.

🛠️ Research Methods:

– Diverse instructions for website generation were compiled using human annotators and GPT-4o, covering major and minor categories of web applications.

– Test cases targeting specific functionalities were generated and manually refined to ensure accuracy. A web-navigation agent was used to automate testing and improve reproducibility.

💬 Research Conclusions:

– Among several evaluated code-agent frameworks, Bolt.diy powered by DeepSeek-R1 achieved only 27.8% accuracy, indicating the challenging nature of the benchmark.

– Training Qwen2.5-Coder-32B-Instruct on Bolt.diy trajectories improved accuracy to 38.2%, outperforming the best proprietary model.

👉 Paper link: https://huggingface.co/papers/2505.03733

10. Skywork-VL Reward: An Effective Reward Model for Multimodal Understanding and Reasoning

🔑 Keywords: Multimodal Reward Model, Vision-Language Models, Reward Model Architecture, Multimodal Reasoning, General-Purpose Reliable Reward Models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Propose Skywork-VL Reward, a model that offers reward signals for multimodal understanding and reasoning tasks.

🛠️ Research Methods:

– Construct a large-scale multimodal preference dataset covering multiple tasks and scenarios.

– Design a reward model architecture based on Qwen2.5-VL-7B-Instruct, integrating a reward head and using multi-stage fine-tuning with pairwise ranking loss on pairwise preference data.

💬 Research Conclusions:

– Skywork-VL Reward achieves state-of-the-art performance on multimodal VL-RewardBench and shows competitive results on the text-only RewardBench benchmark.

– The model advances general-purpose, reliable reward models for multimodal alignment and is publicly released for transparency and reproducibility.

👉 Paper link: https://huggingface.co/papers/2505.07263

11. Learning Dynamics in Continual Pre-Training for Large Language Models

🔑 Keywords: Continual Pre-Training, large language models, distribution shift, learning rate annealing, CPT scaling law

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to explore the learning dynamics in the Continual Pre-Training (CPT) process for large language models, focusing on the evolution of general and domain-specific performance at each training step.

🛠️ Research Methods:

– The researchers analyzed the CPT loss curve, describing its transition using the decoupling effects of distribution shift and learning rate annealing. They derived a CPT scaling law to predict loss at any training step and across various learning rate schedules.

💬 Research Conclusions:

– The formulation provides a comprehensive understanding of critical CPT factors such as loss potential, peak learning rate, and replay ratio. It also allows for the customization of training hyper-parameters to balance general and domain-specific performance, with validation via extensive experiments across different datasets and parameters.

👉 Paper link: https://huggingface.co/papers/2505.07796

12. Reinforced Internal-External Knowledge Synergistic Reasoning for Efficient Adaptive Search Agent

🔑 Keywords: Retrieval-augmented generation, Large Language Models, Search Agent, Internal-External Knowledge Synergy, Reinforcement Learning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The paper aims to address the limitations of current retrieval-augmented generation strategies in large language models by introducing the Reinforced Internal-External Knowledge Synergistic Reasoning Agent (IKEA).

🛠️ Research Methods:

– IKEA utilizes a novel knowledge-boundary aware reward function and a knowledge-boundary aware training dataset to synergize internal and external knowledge, reducing unnecessary retrievals and optimizing search processes.

💬 Research Conclusions:

– IKEA significantly outperforms baseline methods in multiple knowledge reasoning tasks, reducing retrieval frequency and demonstrating strong generalization capabilities.

👉 Paper link: https://huggingface.co/papers/2505.07596

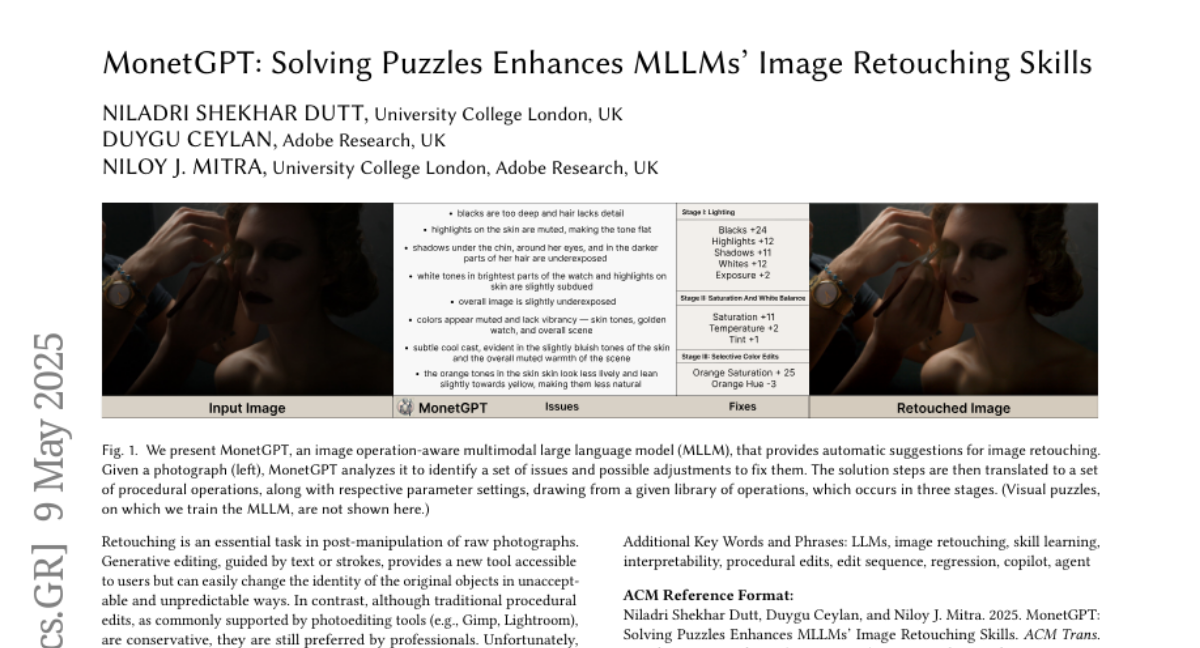

13. MonetGPT: Solving Puzzles Enhances MLLMs’ Image Retouching Skills

🔑 Keywords: Generative editing, Procedural edits, Multimodal large language model, Explainability, Identity preservation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Investigate if a Multimodal large language model (MLLM) can critique raw photographs and suggest suitable procedural edits.

🛠️ Research Methods:

– Train MLLMs to understand image processing operations using specially designed visual puzzles and create a reasoning dataset from expert-edited photos for fine-tuning.

💬 Research Conclusions:

– The operation-aware MLLM can plan and propose edit sequences that maintain object details and resolution, with advantages in explainability and identity preservation compared to existing methods.

👉 Paper link: https://huggingface.co/papers/2505.06176

14. Position: AI Competitions Provide the Gold Standard for Empirical Rigor in GenAI Evaluation

🔑 Keywords: Generative AI, empirical evaluation, AI Competitions, leakage, contamination

💡 Category: Generative Models

🌟 Research Objective:

– Highlight the inadequacy of traditional ML evaluation strategies for modern Generative AI models and propose AI Competitions as a rigorous alternative.

🛠️ Research Methods:

– Discuss the current challenges of evaluating Generative AI models, focusing on unbounded input/output spaces and prediction dependence.

💬 Research Conclusions:

– Propose viewing AI Competitions as the gold standard for evaluating Generative AI models to effectively address issues like leakage and contamination.

👉 Paper link: https://huggingface.co/papers/2505.00612

15. UMoE: Unifying Attention and FFN with Shared Experts

🔑 Keywords: Sparse Mixture of Experts, Transformer models, attention layers, feed-forward network, efficient parameter sharing

💡 Category: Machine Learning

🌟 Research Objective:

– To unify Sparse Mixture of Experts (MoE) designs in attention and FFN layers, enhancing Transformer model performance.

🛠️ Research Methods:

– Introducing a novel reformulation of the attention mechanism, uncovering an FFN-like structure within attention modules.

💬 Research Conclusions:

– The proposed UMoE architecture achieves superior performance in attention-based MoE layers while allowing efficient parameter sharing between FFN and attention components.

👉 Paper link: https://huggingface.co/papers/2505.07260

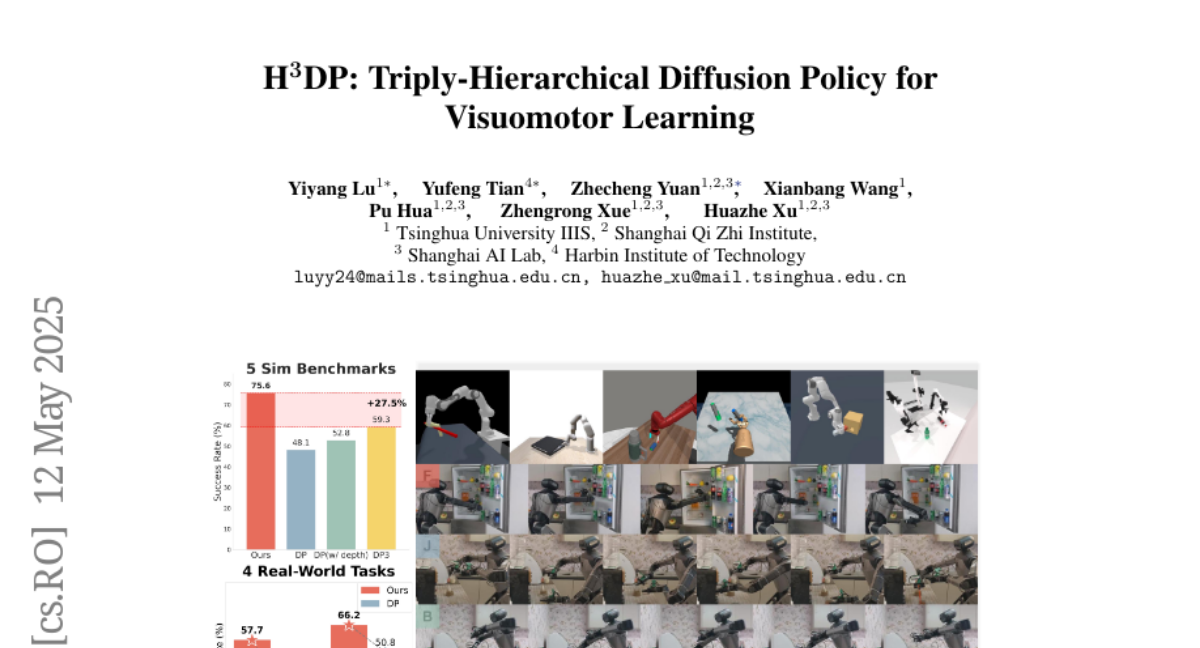

16. H^{3}DP: Triply-Hierarchical Diffusion Policy for Visuomotor Learning

🔑 Keywords: Visuomotor policy learning, Generative models, Visual perception, Action prediction, Hierarchical structures

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce Triply-Hierarchical Diffusion Policy (H^{3}DP) to enhance the integration between visual features and action generation in robotic manipulation.

🛠️ Research Methods:

– Utilize a triply-hierarchical structure, comprising depth-aware input layering, multi-scale visual representations, and a hierarchically conditioned diffusion process to model the action distribution.

💬 Research Conclusions:

– H^{3}DP achieves a 27.5% average relative improvement over baselines across 44 simulation tasks and demonstrates superior performance in 4 real-world bimanual manipulation tasks.

👉 Paper link: https://huggingface.co/papers/2505.07819

17. Document Attribution: Examining Citation Relationships using Large Language Models

🔑 Keywords: Large Language Models, document summarization, attribution, textual entailment, attention mechanism

💡 Category: Natural Language Processing

🌟 Research Objective:

– To ensure the trustworthiness and interpretability of Large Language Models in document-based tasks through effective attribution techniques.

🛠️ Research Methods:

– Proposing a zero-shot approach using textual entailment with flan-ul2 to improve attribution benchmarks.

– Exploring the attention mechanism with flan-t5-small to enhance process accuracy.

💬 Research Conclusions:

– The proposed methods improve reliability in attribution tasks, demonstrating better performance over existing baselines.

👉 Paper link: https://huggingface.co/papers/2505.06324

18. Overflow Prevention Enhances Long-Context Recurrent LLMs

🔑 Keywords: LLMs, long-context processing, recurrent memory, chunk-based inference, LongBench

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate the efficiency of recurrent sub-quadratic models in improving long-context processing.

🛠️ Research Methods:

– Experiments on fixed-size recurrent memory models using a chunk-based inference procedure to process only relevant input portions.

💬 Research Conclusions:

– Chunk-based inference enhances long-context task performance significantly, with improvements ranging from 14% to 51% on various models.

– The method achieves state-of-the-art results on the LongBench v2 benchmark.

– Raises questions on the effectiveness of recurrent models in utilizing long-range dependencies, as chunk-based strategy performs better even in cross-context tasks.

👉 Paper link: https://huggingface.co/papers/2505.07793

19. Continuous Visual Autoregressive Generation via Score Maximization

🔑 Keywords: Visual AutoRegressive modeling, Continuous VAR, strictly proper scoring rules, energy score

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces a Continuous VAR framework for autoregressive generation of continuous visual data without needing vector quantization.

🛠️ Research Methods:

– The framework is based on strictly proper scoring rules, particularly exploring training objectives using the energy score, which is likelihood-free.

💬 Research Conclusions:

– The approach overcomes information loss seen in traditional methods and can extend previous methodologies like GIVT and diffusion loss by using different strictly proper scores.

👉 Paper link: https://huggingface.co/papers/2505.07812

20. Physics-Assisted and Topology-Informed Deep Learning for Weather Prediction

🔑 Keywords: PASSAT, weather prediction, deep learning, advection equation, spherical graph neural network

💡 Category: Machine Learning

🌟 Research Objective:

– To develop a novel Physics-ASSisted And Topology-informed deep learning model called PASSAT for improving weather prediction by addressing the limitations of existing models.

🛠️ Research Methods:

– PASSAT attributes weather evolution to the advection process and Earth-atmosphere interaction, solving equations on a spherical manifold using a spherical graph neural network.

💬 Research Conclusions:

– PASSAT outperforms state-of-the-art deep learning and operational numerical weather prediction models on the 5.625°-resolution ERA5 data set.

👉 Paper link: https://huggingface.co/papers/2505.04918

21. DynamicRAG: Leveraging Outputs of Large Language Model as Feedback for Dynamic Reranking in Retrieval-Augmented Generation

🔑 Keywords: Retrieval-augmented generation (RAG), LLM-based rerankers, reinforcement learning (RL), DynamicRAG, knowledge-intensive tasks

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose DynamicRAG, a novel framework that dynamically adjusts the order and number of documents in retrieval-augmented generation systems to optimize generation quality in knowledge-intensive tasks.

🛠️ Research Methods:

– The reranker in DynamicRAG is modeled as an agent optimized through reinforcement learning, using rewards based on the quality of LLM outputs.

💬 Research Conclusions:

– DynamicRAG achieves state-of-the-art results across seven knowledge-intensive datasets, demonstrating superior performance in knowledge retrieval and generation tasks.

👉 Paper link: https://huggingface.co/papers/2505.07233

22. INTELLECT-2: A Reasoning Model Trained Through Globally Decentralized Reinforcement Learning

🔑 Keywords: INTELLECT-2, Reinforcement Learning, Distributed Asynchronous Training, Decentralized Training, GRPO

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To develop INTELLECT-2, a globally distributed RL training run of a 32 billion parameter language model using an asynchronous and decentralized approach.

🛠️ Research Methods:

– Implementation of a novel training framework, PRIME-RL, supported by new components such as TOPLOC for verifying rollouts and SHARDCAST for efficient policy weight broadcasts.

– Modifications to the GRPO training recipe and data filtering techniques for enhanced training stability.

💬 Research Conclusions:

– Successfully improved the state of the art in reasoning models and open-sourced INTELLECT-2 to foster further research in decentralized training methods.

👉 Paper link: https://huggingface.co/papers/2505.07291

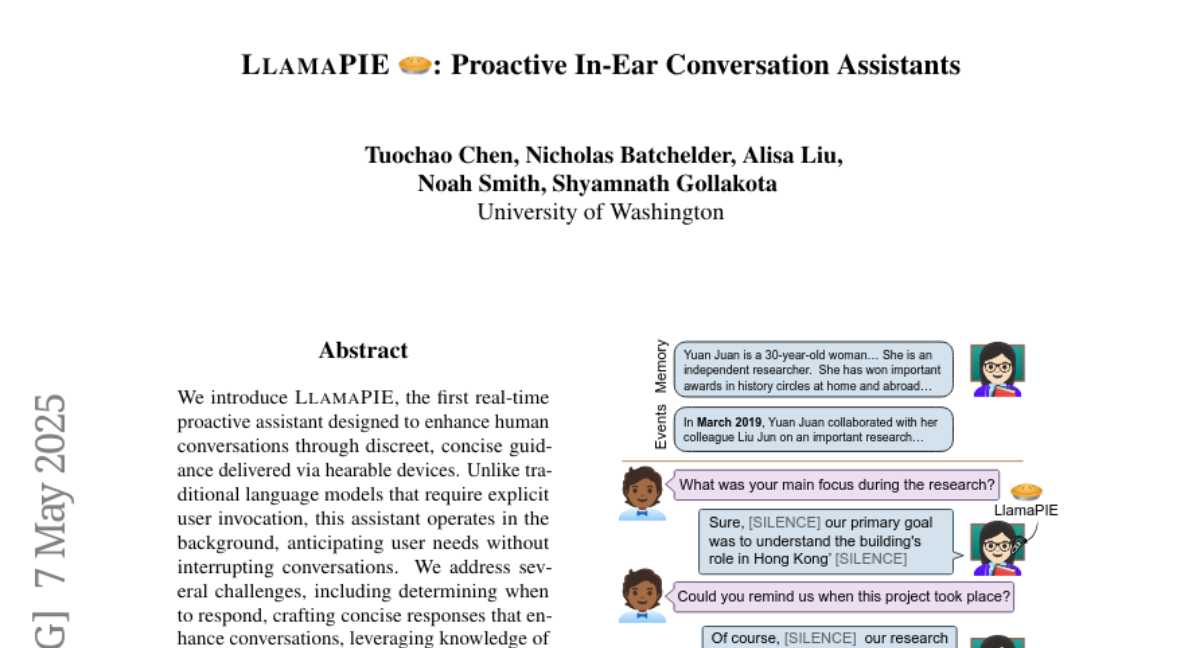

23. LLAMAPIE: Proactive In-Ear Conversation Assistants

🔑 Keywords: LlamaPIE, real-time proactive assistant, hearable devices, semi-synthetic dialogue dataset, two-model pipeline

💡 Category: Human-AI Interaction

🌟 Research Objective:

– Introduce LlamaPIE, the first proactive assistant for enhancing human conversations through hearable devices, operating without explicit user invocation.

🛠️ Research Methods:

– Developed a semi-synthetic dialogue dataset and a two-model pipeline with a smaller decision model and a larger response generation model.

💬 Research Conclusions:

– Evaluations show that LlamaPIE provides effective, unobtrusive assistance, with user studies favorably comparing it to a baseline and a reactive model, suggesting its potential in enhancing live conversations.

👉 Paper link: https://huggingface.co/papers/2505.04066

24. Multi-Objective-Guided Discrete Flow Matching for Controllable Biological Sequence Design

🔑 Keywords: Biomolecule Engineering, Discrete Flow Matching, Multi-Objective, Peptide Generation, DNA Design

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to design biological sequences that meet multiple functional and biophysical criteria using the Multi-Objective-Guided Discrete Flow Matching (MOG-DFM) framework.

🛠️ Research Methods:

– The authors introduced MOG-DFM, a framework that guides pretrained discrete-time flow matching generators towards Pareto-efficient trade-offs across multiple scalar objectives.

– Two unconditional discrete flow matching models, PepDFM and EnhancerDFM, were trained for generating diverse peptides and functional enhancer DNA, respectively.

💬 Research Conclusions:

– MOG-DFM effectively generates peptide binders optimized across multiple properties including hemolysis, non-fouling, solubility, half-life, and binding affinity.

– The framework proves to be a powerful tool for designing DNA sequences with specific enhancer classes and DNA shapes, demonstrating its versatility and effectiveness in biomolecule design.

👉 Paper link: https://huggingface.co/papers/2505.07086

25.