AI Native Daily Paper Digest – 20250516

1. Beyond ‘Aha!’: Toward Systematic Meta-Abilities Alignment in Large Reasoning Models

🔑 Keywords: Large reasoning models, AI Native, Reinforcement Learning, Meta-abilities, Performance Ceiling

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To develop a scalable and reliable reasoning framework for Large Reasoning Models (LRMs) by explicitly aligning them with meta-abilities like deduction, induction, and abduction.

🛠️ Research Methods:

– Implement a three-stage pipeline involving individual alignment, parameter-space merging, and domain-specific reinforcement learning to improve performance.

💬 Research Conclusions:

– The approach boosts performance over 10% relative to instruction-tuned baselines, with an additional 2% average gain across various benchmarks, proving the efficacy of explicit meta-ability alignment.

👉 Paper link: https://huggingface.co/papers/2505.10554

2. System Prompt Optimization with Meta-Learning

🔑 Keywords: Large Language Models, System Prompts, Meta-Learning, Optimization, Rapid Adaptation

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce and tackle bilevel system prompt optimization to create robust system prompts transferable to unseen tasks.

🛠️ Research Methods:

– Propose a meta-learning framework optimizing system prompts across various user prompts and datasets, iteratively updating them for synergy.

💬 Research Conclusions:

– Demonstrated effective generalization of system prompts to different user prompts and rapid adaptation to new tasks with improved performance.

👉 Paper link: https://huggingface.co/papers/2505.09666

3. EnerVerse-AC: Envisioning Embodied Environments with Action Condition

🔑 Keywords: Robotic imitation learning, Action-conditional world model, Future visual observations, Dynamic multi-view image generation, Robotic manipulation evaluation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The paper aims to address the costly and challenging testing and evaluation of robotic imitation learning in dynamic environments by proposing EnerVerse-AC (EVAC), an action-conditional world model.

🛠️ Research Methods:

– EVAC introduces a multi-level action-conditioning mechanism and ray map encoding to enable dynamic multi-view image generation; it also expands training data with diverse failure trajectories.

💬 Research Conclusions:

– EVAC allows realistic and controllable robotic inference without physical robots or complex simulations, significantly reducing costs while maintaining high fidelity in robotic manipulation evaluation.

👉 Paper link: https://huggingface.co/papers/2505.09723

4. Parallel Scaling Law for Language Models

🔑 Keywords: parallel computation, ParScale, scaling law, inference efficiency

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce a new scaling paradigm called parallel scaling (ParScale) that enhances inference efficiency in language models by increasing the model’s parallel computation.

🛠️ Research Methods:

– The application of P diverse and learnable transformations to input, executing model forward passes in parallel, and dynamically aggregating outputs, reusing existing parameters.

💬 Research Conclusions:

– ParScale can significantly improve inference efficiency by showing similarities to parameter scaling but with reduced memory and latency increases. It enables the deployment of powerful models in low-resource scenarios and provides a new perspective on computational roles in machine learning.

👉 Paper link: https://huggingface.co/papers/2505.10475

5. The CoT Encyclopedia: Analyzing, Predicting, and Controlling how a Reasoning Model will Think

🔑 Keywords: Long chain-of-thought (CoT), large language models, reasoning strategies, CoT Encyclopedia, performance gains

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to enhance understanding and analysis of reasoning strategies in large language models by introducing the CoT Encyclopedia, a framework for interpreting and steering model reasoning.

🛠️ Research Methods:

– The study presents a bottom-up approach that automatically extracts diverse reasoning criteria from model-generated CoTs, embeds them into a semantic space, clusters them into representative categories, and derives contrastive rubrics.

💬 Research Conclusions:

– The CoT Encyclopedia framework offers more interpretable and comprehensive analyses compared to existing methods, potentially leading to performance improvements, by predicting and guiding models towards effective reasoning strategies. Additionally, it highlights the significant impact of training data format on reasoning behavior over the data domain.

👉 Paper link: https://huggingface.co/papers/2505.10185

6. EWMBench: Evaluating Scene, Motion, and Semantic Quality in Embodied World Models

🔑 Keywords: Creative AI, Text-to-Video Diffusion Models, Embodied World Models, Evaluation Framework, Semantic Alignment

💡 Category: Generative Models

🌟 Research Objective:

– The paper addresses the challenge of evaluating Embodied World Models (EWMs) beyond perceptual metrics, focusing on ensuring physically grounded and action-consistent behaviors.

🛠️ Research Methods:

– The authors propose the Embodied World Model Benchmark (EWMBench), which evaluates EWMs based on visual scene consistency, motion correctness, and semantic alignment using a curated dataset and a comprehensive evaluation toolkit.

💬 Research Conclusions:

– The EWMBench framework identifies limitations in existing video generation models and offers insights for future advancements in embodied AI tasks. The dataset and tools are openly accessible to the public.

👉 Paper link: https://huggingface.co/papers/2505.09694

7. WorldPM: Scaling Human Preference Modeling

🔑 Keywords: World Preference Modeling, scaling laws, human preferences, generalization performance, preference fine-tuning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To explore scaling laws in preference modeling and propose World Preference Modeling (WorldPM) to emphasize scalability using a unified representation of human preferences.

🛠️ Research Methods:

– Collection and training on preference data from public forums, using model sizes ranging from 1.5B to 72B parameters across various metrics and benchmarks.

💬 Research Conclusions:

– WorldPM enhances generalization performance across varying sizes of human preference datasets and shows significant improvements of 4% to 8% in both in-house and public evaluation sets.

👉 Paper link: https://huggingface.co/papers/2505.10527

8. End-to-End Vision Tokenizer Tuning

🔑 Keywords: Vision Tokenization, End-to-End Tuning, Multimodal Understanding, Visual Embeddings

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance vision tokenization by integrating it end-to-end with target autoregressive tasks to address misalignment issues in existing methods.

🛠️ Research Methods:

– The proposed method, ETT, involves joint optimization of vision tokenization and autoregressive tasks, utilizing visual embeddings from the tokenizer codebook, and can be integrated with minimal architectural adjustments.

💬 Research Conclusions:

– The ETT approach achieves significant performance improvements of 2-6% in multimodal understanding and visual generation tasks compared to baseline models with frozen tokenizers, while maintaining the original reconstruction capabilities.

👉 Paper link: https://huggingface.co/papers/2505.10562

9. MLE-Dojo: Interactive Environments for Empowering LLM Agents in Machine Learning Engineering

🔑 Keywords: MLE-Dojo, Reinforcement Learning, Iterative Experimentation, Large Language Model (LLM), Autonomous Agents

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To introduce MLE-Dojo, a framework for improving autonomous LLM agents in iterative machine learning engineering workflows.

🛠️ Research Methods:

– MLE-Dojo provides an interactive gym-style environment for iterative experimentation, debugging, and solution refinement.

– Utilizes over 200 real-world Kaggle challenges and supports agent training via supervised fine-tuning and reinforcement learning.

💬 Research Conclusions:

– Extensive evaluations show current models make meaningful iterative improvements but still face limitations in generating long-horizon solutions and resolving complex errors efficiently.

– The open-source framework aims to promote community-driven innovation for next-generation machine learning engineering agents.

👉 Paper link: https://huggingface.co/papers/2505.07782

10. Unilogit: Robust Machine Unlearning for LLMs Using Uniform-Target Self-Distillation

🔑 Keywords: Unilogit, self-distillation, Large Language Models, machine unlearning, GDPR

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Unilogit, a self-distillation method for selectively forgetting information in Large Language Models while maintaining model utility.

🛠️ Research Methods:

– Utilizes dynamic adjustment of target logits to achieve uniform probability for target tokens, enhancing self-distillation without additional hyperparameters.

💬 Research Conclusions:

– Unilogit surpasses state-of-the-art methods like NPO and UnDIAL in balancing forgetting and retaining objectives, demonstrating robustness and practical applicability in machine unlearning.

👉 Paper link: https://huggingface.co/papers/2505.06027

11. J1: Incentivizing Thinking in LLM-as-a-Judge via Reinforcement Learning

🔑 Keywords: AI Native, Judgment Tasks, Reinforcement Learning, Chain-of-Thought Reasoning, Judgment Bias

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To improve judgment ability in AI models using a novel reinforcement learning approach, J1, which enhances chain-of-thought reasoning.

🛠️ Research Methods:

– Introduced J1, which converts prompts into judgment tasks with verifiable rewards to promote thinking and reduce judgment bias. Compared Pairwise-J1 vs Pointwise-J1 models, reward strategies, seed prompts, and training variations.

💬 Research Conclusions:

– J1 outperforms existing 8B or 70B models, and even some larger models like o1-mini and DeepSeek-R1, in judgment tasks by better outlining evaluation criteria, comparing with reference answers, and re-evaluating model responses.

👉 Paper link: https://huggingface.co/papers/2505.10320

12. PointArena: Probing Multimodal Grounding Through Language-Guided Pointing

🔑 Keywords: Multimodal Models, PointArena, Pointing Capabilities, Supervised Training, Robotics

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to evaluate multimodal pointing capabilities across diverse reasoning scenarios using a new platform named PointArena.

🛠️ Research Methods:

– Introduction of PointArena, which includes components like Point-Bench for curated datasets, Point-Battle for interactive model comparisons, and Point-Act for real-world evaluations.

💬 Research Conclusions:

– Molmo-72B consistently outperforms other models, and supervised training specifically targeting pointing tasks significantly improves performance. There is a strong correlation between precise pointing capabilities and the effectiveness of models in bridging abstract reasoning with real-world actions.

👉 Paper link: https://huggingface.co/papers/2505.09990

13. Achieving Tokenizer Flexibility in Language Models through Heuristic Adaptation and Supertoken Learning

🔑 Keywords: Pretrained language models, TokenAdapt, Supertokens, zero-shot perplexity, tokenization

💡 Category: Natural Language Processing

🌟 Research Objective:

– To overcome inefficiencies and limitations in pretrained language models’ fixed tokenization schemes, particularly for multilingual or specialized applications, through innovative tokenizer transplantation and pre-tokenization learning methods.

🛠️ Research Methods:

– Developed Tokenadapt, a model-agnostic tokenizer transplantation method, and introduced novel pre-tokenization learning for multi-word Supertokens to enhance compression and reduce fragmentation, combining local and global estimates for semantic preservation.

💬 Research Conclusions:

– TokenAdapt’s hybrid initialization significantly reduces retraining requirements and yields lower perplexity ratios than conventional methods like ReTok and TransTokenizer. Supertokens achieve notable compression gains, and the approach enhances performance across diverse models.

👉 Paper link: https://huggingface.co/papers/2505.09738

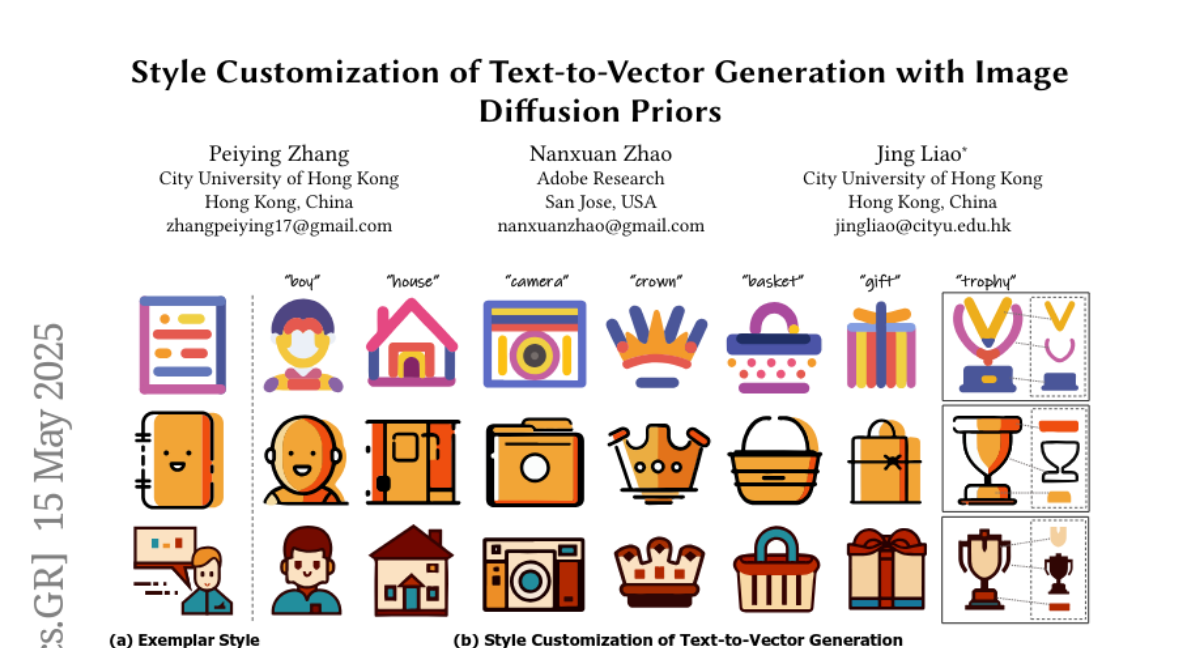

14. Style Customization of Text-to-Vector Generation with Image Diffusion Priors

🔑 Keywords: Scalable Vector Graphics, Text-to-Vector, Style Customization, Structural Regularity, Diffusion Model

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to address the challenge of style customization in scalable vector graphics generation from text prompts, maintaining consistent visual aesthetic and structural regularity.

🛠️ Research Methods:

– A novel two-stage style customization pipeline is proposed, employing a T2V diffusion model with path-level representation and customization using distilled T2I models.

💬 Research Conclusions:

– The proposed method successfully generates high-quality, diverse SVGs in custom styles efficiently, as validated by extensive experiments.

👉 Paper link: https://huggingface.co/papers/2505.10558

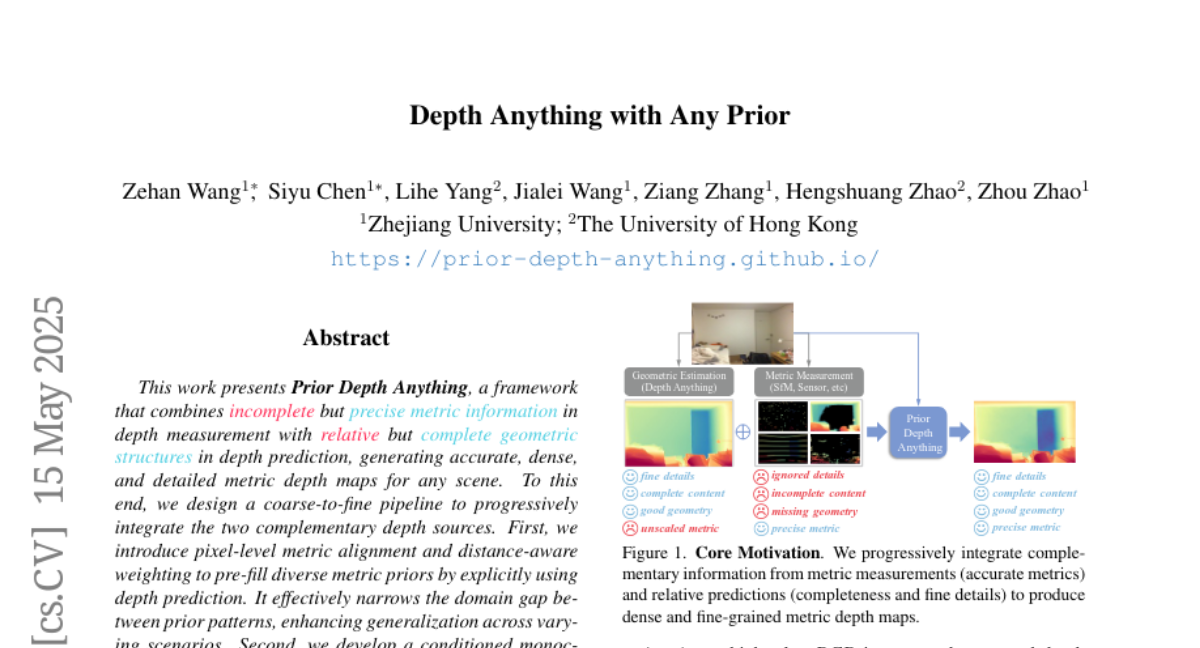

15. Depth Anything with Any Prior

🔑 Keywords: Metric Depth Maps, Coarse-to-Fine Pipeline, Metric Priors, Generalization, Zero-Shot Generalization

💡 Category: Computer Vision

🌟 Research Objective:

– The paper introduces a framework called Prior Depth Anything for generating accurate, dense, and detailed metric depth maps for various scenes by integrating incomplete yet precise metric information with relative complete geometric structures.

🛠️ Research Methods:

– A coarse-to-fine pipeline is designed to combine depth sources, using pixel-level metric alignment and distance-aware weighting, and developing a conditioned monocular depth estimation (MDE) model to refine depth priors.

💬 Research Conclusions:

– The model demonstrates impressive zero-shot generalization across depth-related tasks over 7 real-world datasets, performing well on challenging mixed priors and enabling test-time improvements with flexible accuracy-efficiency trade-offs.

👉 Paper link: https://huggingface.co/papers/2505.10565

16. ReSurgSAM2: Referring Segment Anything in Surgical Video via Credible Long-term Tracking

🔑 Keywords: Surgical Scene Segmentation, Computer-Assisted Surgery, Segment Anything Model 2, Diversity-Driven Memory, Long-Term Tracking

💡 Category: AI in Healthcare

🌟 Research Objective:

– To introduce the ReSurgSAM2, a two-stage surgical referring segmentation framework designed to enhance efficiency and long-term tracking in complex surgical scenarios.

🛠️ Research Methods:

– Utilization of Segment Anything Model 2 for text-referred target detection.

– Implementation of a cross-modal spatial-temporal Mamba for precise detection and segmentation.

– Incorporation of a diversity-driven memory mechanism to ensure consistent long-term tracking.

💬 Research Conclusions:

– ReSurgSAM2 significantly improves accuracy and efficiency in real-time surgical segmentation, achieving a performance of 61.2 FPS.

👉 Paper link: https://huggingface.co/papers/2505.08581

17. OpenThinkIMG: Learning to Think with Images via Visual Tool Reinforcement Learning

🔑 Keywords: LVLMs, OpenThinkIMG, reinforcement learning, adaptive policies, tool-augmented

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To introduce OpenThinkIMG, an open-source framework for enhancing Large Vision-Language Models with adaptive tool usage.

🛠️ Research Methods:

– The use of V-ToolRL, a novel reinforcement learning framework, to train LVLMs to autonomously optimize tool-usage strategies for improved task success.

💬 Research Conclusions:

– OpenThinkIMG significantly enhances tool-augmented visual reasoning, with empirical tests showing substantial performance improvements over existing models and baselines like GPT-4.1.

👉 Paper link: https://huggingface.co/papers/2505.08617

18. QuXAI: Explainers for Hybrid Quantum Machine Learning Models

🔑 Keywords: HQML, XAI, QuXAI, interpretability, Q-MEDLEY

💡 Category: Quantum Machine Learning

🌟 Research Objective:

– Address the explainability gap in hybrid quantum-classical machine learning models by introducing a new framework, QuXAI.

🛠️ Research Methods:

– Development of HQML models incorporating quantum feature maps.

– Utilization of Q-MEDLEY to interpret feature importance and visualize attributions in these systems.

💬 Research Conclusions:

– QuXAI effectively enhances the interpretability and reliability of HQML models, highlighting influential classical aspects and separating noise.

– Demonstrated competitiveness with established XAI techniques and improved confidence in quantum-enhanced AI technologies.

👉 Paper link: https://huggingface.co/papers/2505.10167

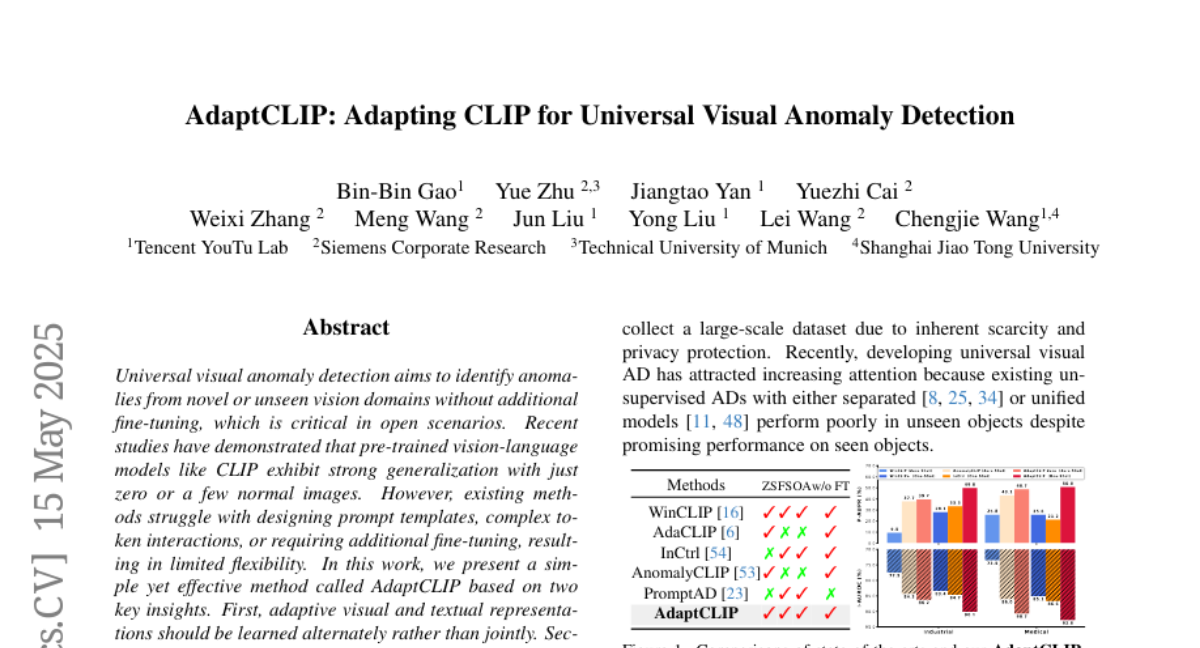

19. AdaptCLIP: Adapting CLIP for Universal Visual Anomaly Detection

🔑 Keywords: Universal Visual Anomaly Detection, AdaptCLIP, CLIP, Zero-/Few-Shot Generalization, Comparative Learning

💡 Category: Computer Vision

🌟 Research Objective:

– To develop a method, AdaptCLIP, for universal visual anomaly detection without additional fine-tuning in novel or unseen vision domains.

🛠️ Research Methods:

– Introduces AdaptCLIP, utilizing adaptive learning of visual and textual representations alternately, and comparative learning that leverages contextual and aligned residual features, with three simple adapters integrated into CLIP models.

💬 Research Conclusions:

– AdaptCLIP offers state-of-the-art performance in 12 anomaly detection benchmarks across industrial and medical domains, with superior flexibility and generalization capabilities.

👉 Paper link: https://huggingface.co/papers/2505.09926

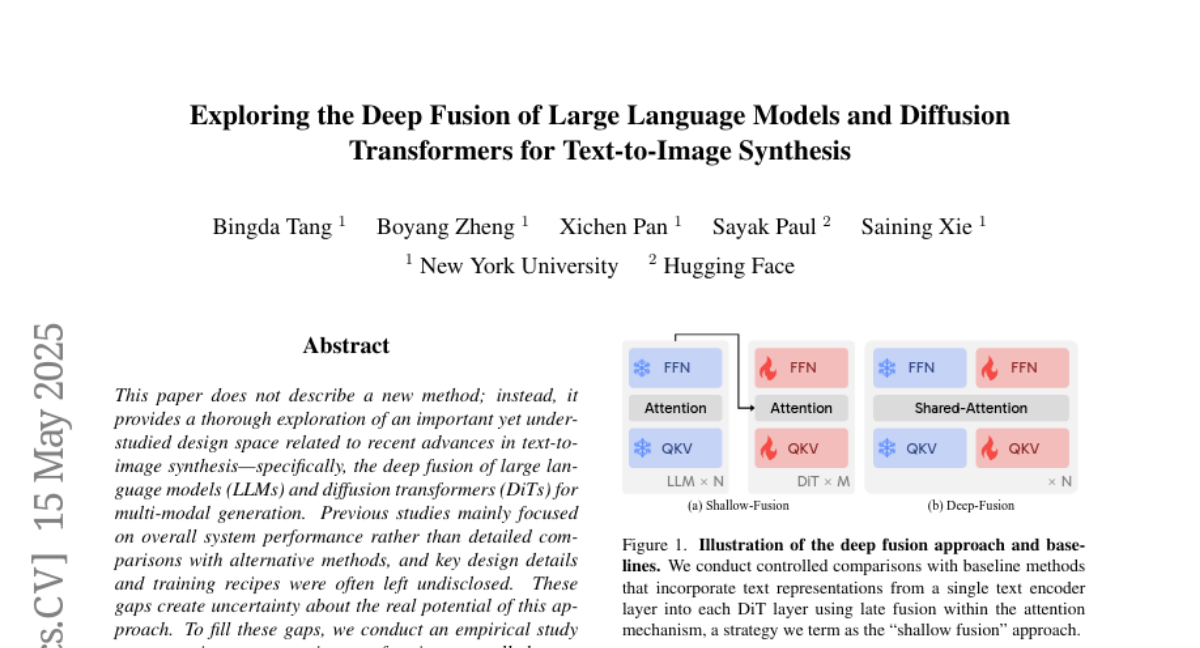

20. Exploring the Deep Fusion of Large Language Models and Diffusion Transformers for Text-to-Image Synthesis

🔑 Keywords: text-to-image synthesis, large language models (LLMs), diffusion transformers (DiTs), multi-modal generation, training recipes

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– This study explores the integration of large language models and diffusion transformers to enhance multi-modal generation, focusing on the text-to-image synthesis domain.

🛠️ Research Methods:

– Conducts empirical studies with controlled comparisons to established baselines and analyzes crucial design choices, providing a clear and reproducible recipe for scalable training.

💬 Research Conclusions:

– The findings aim to fill existing knowledge gaps and offer valuable data and practical guidelines for future research in multi-modal generation.

👉 Paper link: https://huggingface.co/papers/2505.10046

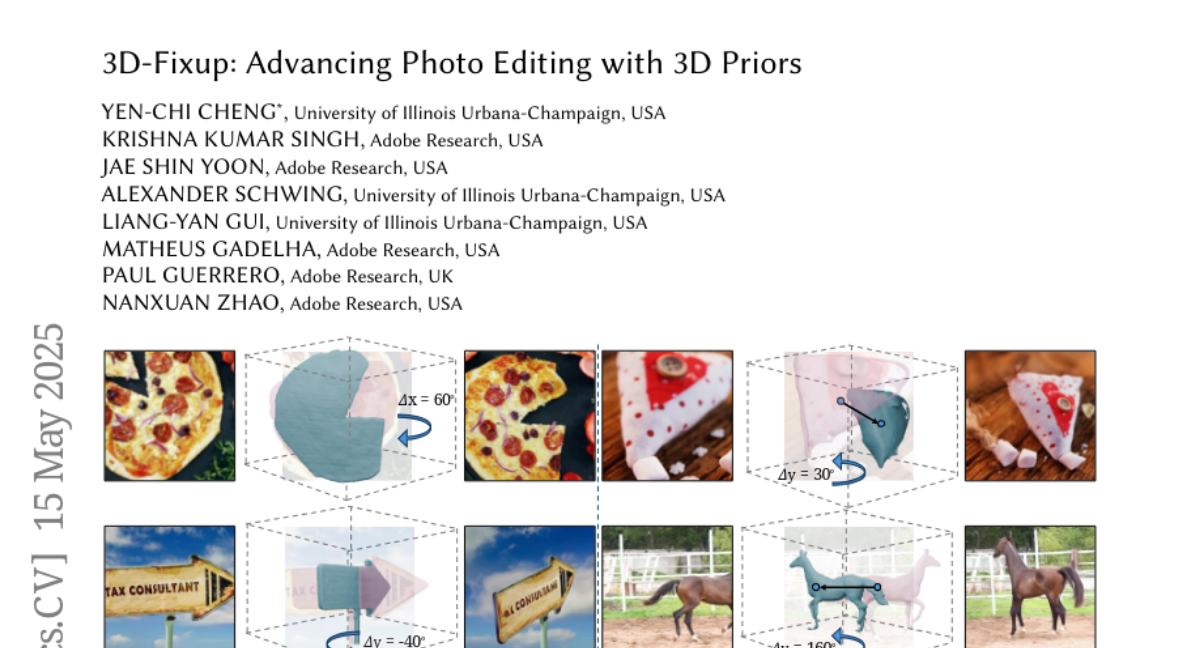

21. 3D-Fixup: Advancing Photo Editing with 3D Priors

🔑 Keywords: diffusion models, 3D-aware image editing, 3D-Fixup, 3D priors, image-to-3D model

💡 Category: Computer Vision

🌟 Research Objective:

– The objective is to overcome challenges in 3D-aware image editing by proposing 3D-Fixup, a new framework that uses learned 3D priors to edit 2D images.

🛠️ Research Methods:

– The study employs a training-based approach leveraging the generative power of diffusion models, incorporating 3D guidance from an image-to-3D model, and utilizes a data generation pipeline for high-quality 3D guidance.

💬 Research Conclusions:

– 3D-Fixup effectively supports complex 3D-aware edits, demonstrating high-quality results, and advances the application of diffusion models in realistic image manipulation. The code is available online for further research and development.

👉 Paper link: https://huggingface.co/papers/2505.10566

22. Few-Shot Anomaly-Driven Generation for Anomaly Classification and Segmentation

🔑 Keywords: Anomaly-driven Generation, Diffusion Model, Weakly-supervised Anomaly Detection, DRAEM, AU-PR Metric

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to address the semantic gap and scarcity of anomaly samples in industrial inspection by proposing the AnoGen method to generate realistic and diverse anomalies.

🛠️ Research Methods:

– The method is implemented in three stages: learning anomaly distribution from real anomalies, guiding a diffusion model using embeddings and bounding boxes to generate anomalies, and employing weakly-supervised detection to train anomaly models.

💬 Research Conclusions:

– Experiments on the MVTec dataset show that the generated anomalies significantly improve performance in anomaly classification and segmentation tasks, with noted improvements in the AU-PR metric.

👉 Paper link: https://huggingface.co/papers/2505.09263

23. Learning to Detect Multi-class Anomalies with Just One Normal Image Prompt

🔑 Keywords: Unsupervised reconstruction, Self-attention transformers, One Normal Image Prompt (OneNIP), Anomaly detection, Anomaly segmentation

💡 Category: Computer Vision

🌟 Research Objective:

– To enhance the efficiency and generalization of self-attention reconstruction models for unified anomaly detection by proposing a novel method called One Normal Image Prompt (OneNIP).

🛠️ Research Methods:

– Introduction of OneNIP for reconstructing normal features and restoring anomaly features using a single normal image prompt.

– Implementation of a supervised refiner to improve pixel-level anomaly segmentation through regression of reconstruction errors using both real normal and synthesized anomalous images.

💬 Research Conclusions:

– OneNIP significantly improves unified anomaly detection performance, outperforming existing methods on industry benchmarks such as MVTec, BTAD, and VisA.

– The proposed method effectively enhances self-attention transformer models by focusing on better anomaly segmentation and detection.

👉 Paper link: https://huggingface.co/papers/2505.09264

24. MetaUAS: Universal Anomaly Segmentation with One-Prompt Meta-Learning

🔑 Keywords: Universal Anomaly Segmentation, Pure Vision Model, MetaUAS, Soft Feature Alignment, One-prompt

💡 Category: Computer Vision

🌟 Research Objective:

– To explore a pure visual foundation model as an alternative to vision-language models for universal visual anomaly segmentation.

🛠️ Research Methods:

– Introduced a novel paradigm that unifies anomaly segmentation into change segmentation using large-scale synthetic image pairs.

– Proposed a one-prompt Meta-learning framework called MetaUAS for Universal Anomaly Segmentation, incorporating a soft feature alignment module.

💬 Research Conclusions:

– Achieved universal anomaly segmentation without reliance on visual-language models or special anomaly detection datasets.

– MetaUAS outperforms previous zero-shot, few-shot, and full-shot anomaly segmentation methods.

👉 Paper link: https://huggingface.co/papers/2505.09265

25. Real2Render2Real: Scaling Robot Data Without Dynamics Simulation or Robot Hardware

🔑 Keywords: Real2Render2Real, robot learning, 3D Gaussian Splatting, vision-language-action models, imitation learning policies

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To introduce Real2Render2Real (R2R2R), an approach to create robot training data without relying on object dynamics simulation or teleoperation.

🛠️ Research Methods:

– Utilizes scans from smartphones and a single human video demonstration to reconstruct 3D object geometry and generate high-fidelity robot demonstrations.

– Employs 3D Gaussian Splatting for asset generation and trajectory synthesis, maintaining compatibility with rendering engines.

💬 Research Conclusions:

– Models trained with R2R2R data from a single human demonstration can achieve performance comparable to those trained on 150 human teleoperation demonstrations.

👉 Paper link: https://huggingface.co/papers/2505.09601

26. AI Agents vs. Agentic AI: A Conceptual Taxonomy, Applications and Challenge

🔑 Keywords: AI Agents, Agentic AI, Large Language Models (LLMs), Generative AI, Autonomous Systems

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to distinguish between AI Agents and Agentic AI by providing a conceptual taxonomy, application mapping, and analysis of their divergent design philosophies and capabilities.

🛠️ Research Methods:

– The research employs a structured analysis of architectural evolution, operational mechanisms, interaction styles, and autonomy levels. It also involves comparative examination across different application domains such as customer support and research automation.

💬 Research Conclusions:

– The paper presents a detailed comparison of AI Agents and Agentic AI, highlighting challenges such as hallucination and coordination failure, and proposes targeted solutions like ReAct loops and causal modeling. The goal is to guide the development of robust, scalable, and explainable AI systems.

👉 Paper link: https://huggingface.co/papers/2505.10468

27. X-Sim: Cross-Embodiment Learning via Real-to-Sim-to-Real

🔑 Keywords: sim-to-real, photorealistic simulation, reinforcement learning (RL), diffusion policy, domain adaptation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce X-Sim, a framework leveraging object motion for training robot manipulation policies from human videos without needing action labels or robot teleoperation data.

🛠️ Research Methods:

– Utilize photorealistic simulations and object-centric rewards to train a reinforcement learning (RL) policy, then convert it into a diffusion policy for real-world application using domain adaptation.

💬 Research Conclusions:

– X-Sim improves task progress by 30% over hand-tracking and sim-to-real baselines, matches behavior cloning with significantly less data, and adapts to new camera viewpoints and test-time changes.

👉 Paper link: https://huggingface.co/papers/2505.07096

28.