AI Native Daily Paper Digest – 20250602

1. ProRL: Prolonged Reinforcement Learning Expands Reasoning Boundaries in Large Language Models

🔑 Keywords: Prolonged reinforcement learning, Reasoning strategies, Language models, AI-generated summary, KL divergence control

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to investigate the impact of Prolonged reinforcement learning (ProRL) on uncovering novel reasoning strategies in language models and expanding their reasoning capabilities beyond base models.

🛠️ Research Methods:

– Introduction of ProRL, a novel training methodology that incorporates KL divergence control, reference policy resetting, and a diverse suite of tasks to enhance reasoning in language models.

💬 Research Conclusions:

– ProRL-trained models outperform base models across various evaluations, demonstrating improved reasoning boundaries and suggesting that RL can explore new regions of solution space over time.

👉 Paper link: https://huggingface.co/papers/2505.24864

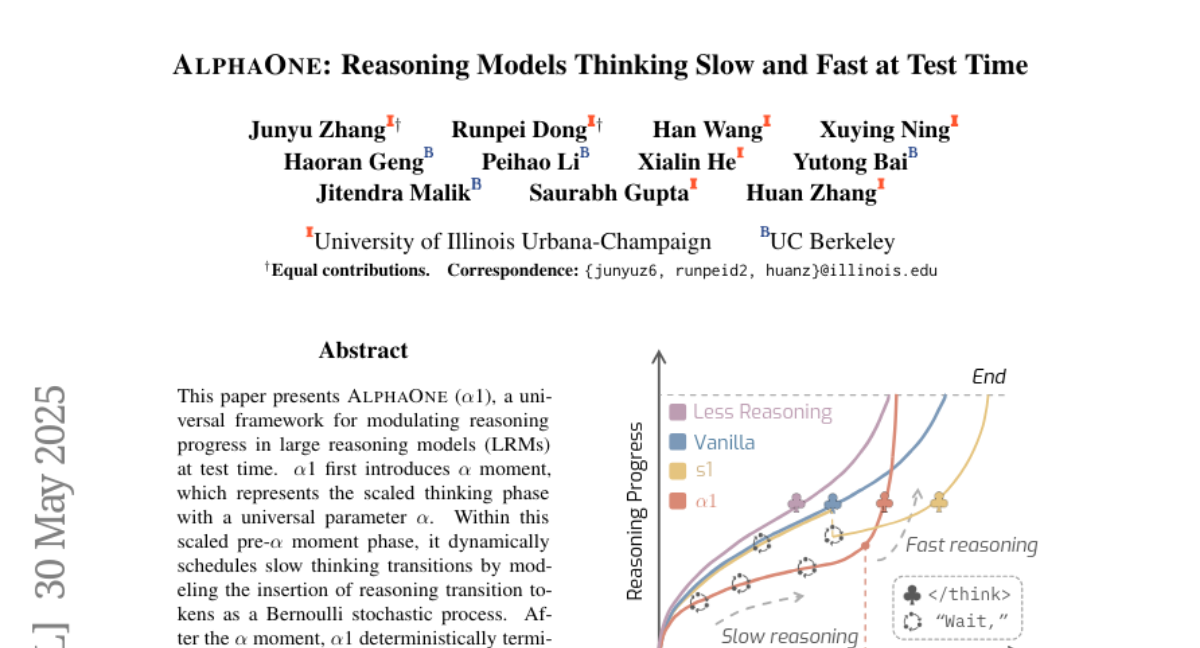

2. AlphaOne: Reasoning Models Thinking Slow and Fast at Test Time

🔑 Keywords: AlphaOne, large reasoning models, slow thinking, efficient answer generation, reasoning transition tokens

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To modulate reasoning progress in large reasoning models by introducing a universal framework, AlphaOne, with a focus on efficiency and capability enhancement across diverse domains.

🛠️ Research Methods:

– Utilizes alpha moment and a Bernoulli stochastic process for scheduling slow thinking transitions in reasoning models, unifying and generalizing existing monotonic scaling methods.

💬 Research Conclusions:

– AlphaOne demonstrates superior reasoning capability and efficiency through extensive empirical studies across mathematical, coding, and scientific benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.24863

3. Time Blindness: Why Video-Language Models Can’t See What Humans Can?

🔑 Keywords: Vision-Language Models, Temporal Pattern Recognition, Noise-like Frames, Temporal Sequences, SpookyBench

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce SpookyBench as a benchmark to challenge vision-language models specifically on temporal pattern recognition in videos devoid of spatial information.

🛠️ Research Methods:

– Systematic analysis of state-of-the-art vision-language models focusing on their capabilities to process purely temporal cues in noise-like frames.

💬 Research Conclusions:

– Vision-language models currently have a significant performance gap compared to humans in recognizing temporal patterns in the absence of spatial information, indicating a reliance on spatial rather than temporal processing.

– The study suggests that new architectures or training paradigms are needed to better decouple spatial dependencies from temporal processing in these models.

👉 Paper link: https://huggingface.co/papers/2505.24867

4. HardTests: Synthesizing High-Quality Test Cases for LLM Coding

🔑 Keywords: HARDTESTGEN, LLMs, Verifiers, Test Synthesis, Competitive Programming

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To enhance the precision and recall of verifiers in evaluating LLM-generated code through a comprehensive competitive programming dataset called HARDTESTS.

🛠️ Research Methods:

– Developing a pipeline using LLMs for high-quality test synthesis to curate a dataset with 47k problems and synthetic high-quality tests.

💬 Research Conclusions:

– HARDTESTGEN significantly improves precision by 11.3 points and recall by 17.5 points when evaluating LLM-generated code, with even higher gains for more complex problems. The dataset also enhances model training and downstream code generation performance.

👉 Paper link: https://huggingface.co/papers/2505.24098

5. Large Language Models for Data Synthesis

🔑 Keywords: LLMSynthor, synthetic data, Large Language Models, distributional feedback, proposal sampling

💡 Category: Generative Models

🌟 Research Objective:

– Introduce LLMSynthor, a framework to enhance LLMs for efficient and statistically accurate data synthesis.

🛠️ Research Methods:

– Utilizes LLMSynthor as a nonparametric copula simulator with LLM Proposal Sampling to improve sampling efficiency and align real and synthetic data through an iterative synthesis loop.

💬 Research Conclusions:

– LLMSynthor demonstrates high statistical fidelity, practical utility, and adaptability across various privacy-sensitive domains, making it valuable in fields like economics, social science, and urban studies.

👉 Paper link: https://huggingface.co/papers/2505.14752

6. Don’t Look Only Once: Towards Multimodal Interactive Reasoning with Selective Visual Revisitation

🔑 Keywords: Multimodal Large Language Models, visual tokens, multimodal reasoning, dynamic visual access

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance Multimodal Large Language Models (MLLMs) by enabling selective and dynamic visual region retrieval during inference to improve performance on multimodal reasoning tasks.

🛠️ Research Methods:

– v1 introduces a point-and-copy mechanism to allow dynamic retrieval of relevant image regions.

– A dataset named v1g with 300K multimodal reasoning traces and visual grounding annotations was constructed for training.

💬 Research Conclusions:

– v1 consistently improves performance on tasks requiring fine-grained visual reference and multi-step reasoning in benchmarks like MathVista, MathVision, and MathVerse.

– Dynamic visual access is identified as a promising direction for enhancing grounded multimodal reasoning.

👉 Paper link: https://huggingface.co/papers/2505.18842

7. ViStoryBench: Comprehensive Benchmark Suite for Story Visualization

🔑 Keywords: Story visualization, Generative models, Evaluation benchmark, Diverse dataset, Character consistency

💡 Category: Generative Models

🌟 Research Objective:

– Introduce ViStoryBench, a comprehensive evaluation benchmark for story visualization frameworks that encompasses diverse datasets and metrics.

🛠️ Research Methods:

– Collect a diverse dataset with various story types, artistic styles, and evaluate models across narrative and visual dimensions.

– Incorporate a wide range of evaluation metrics to assess model performance critically.

💬 Research Conclusions:

– ViStoryBench allows researchers to identify strengths and weaknesses in story visualization models and aids in targeted improvements.

👉 Paper link: https://huggingface.co/papers/2505.24862

8. DINO-R1: Incentivizing Reasoning Capability in Vision Foundation Models

🔑 Keywords: Reinforcement Learning, DINO-R1, Vision Foundation Models, Visual Prompting, Group Relative Query Optimization

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to enhance visual in-context reasoning capabilities in vision foundation models using reinforcement learning, specifically through the development of DINO-R1.

🛠️ Research Methods:

– The introduction of a novel training strategy named Group Relative Query Optimization (GRQO) along with KL-regularization to stabilize objectness distribution and reduce training instability.

💬 Research Conclusions:

– DINO-R1 outperforms supervised fine-tuning baselines across various datasets like COCO, LVIS, and ODinW, showing strong generalization in both open-vocabulary and closed-set visual prompting scenarios.

👉 Paper link: https://huggingface.co/papers/2505.24025

9. Vision Language Models are Biased

🔑 Keywords: Vision Language Models, Biases, Counting, Identification, Accuracy

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Investigate how knowledge about popular subjects affects the accuracy of Vision Language Models on counting and identification tasks.

🛠️ Research Methods:

– Testing the biases of state-of-the-art Vision Language Models across 7 diverse domains in visual counting and identification tasks.

💬 Research Conclusions:

– Vision Language Models demonstrate strong biases, significantly reducing their accuracy in counting tasks, with average accuracy dropping to 17.05%.

– Adding subject-specific text to images further decreases accuracy, and instructing models to double-check results only slightly improves accuracy by +2 points.

👉 Paper link: https://huggingface.co/papers/2505.23941

10. Open CaptchaWorld: A Comprehensive Web-based Platform for Testing and Benchmarking Multimodal LLM Agents

🔑 Keywords: CAPTCHA, multimodal LLM, visual reasoning, interaction capabilities, CAPTCHA Reasoning Depth

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to evaluate MLLM-powered agents on CAPTCHA puzzles to assess their visual reasoning and interaction capabilities.

🛠️ Research Methods:

– An introduction of Open CaptchaWorld, a benchmark and platform designed with 225 diverse CAPTCHA types to evaluate agents’ performance.

💬 Research Conclusions:

– Humans achieve near-perfect scores on CAPTCHAs, while state-of-the-art MLLM agents perform poorly, with a maximum success rate of 40% versus humans’ 93.3%, underscoring the need for better multimodal reasoning systems.

👉 Paper link: https://huggingface.co/papers/2505.24878

11. CoDA: Coordinated Diffusion Noise Optimization for Whole-Body Manipulation of Articulated Objects

🔑 Keywords: diffusion models, noise-space optimization, basis point sets, motion quality, physical plausibility

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The paper aims to improve whole-body manipulation of articulated objects by leveraging specialized diffusion models for body and hand motions.

🛠️ Research Methods:

– The authors propose a novel coordinated diffusion noise optimization framework utilizing noise-space optimization over three specialized diffusion models for body and hands, enhanced by a unified representation using basis point sets.

💬 Research Conclusions:

– The proposed method outperforms existing approaches in terms of motion quality and physical plausibility, enabling capabilities such as object pose control, simultaneous walking and manipulation, and whole-body generation from hand-only data.

👉 Paper link: https://huggingface.co/papers/2505.21437

12. MoDoMoDo: Multi-Domain Data Mixtures for Multimodal LLM Reinforcement Learning

🔑 Keywords: Reinforcement Learning, Multimodal Large Language Models, Verifiable Rewards, Data Mixture Strategy

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the general reasoning abilities and benchmark performance of multimodal large language models (MLLMs) through reinforcement learning with verifiable rewards (RLVR).

🛠️ Research Methods:

– Developed a systematic post-training framework for MLLMs using RLVR, introducing a data mixture strategy to address conflicting objectives from multiple datasets.

– Curated a dataset containing verifiable vision-language problems and enabled multi-domain online RL learning with verifiable rewards.

💬 Research Conclusions:

– The multi-domain RLVR training combined with mixture prediction strategies significantly boosts general reasoning capabilities.

– The proposed data mixture strategy improved accuracy on out-of-distribution benchmarks by 5.24% compared to uniform data mixtures and by 20.74% compared to pre-finetuning baselines.

👉 Paper link: https://huggingface.co/papers/2505.24871

13. EmergentTTS-Eval: Evaluating TTS Models on Complex Prosodic, Expressiveness, and Linguistic Challenges Using Model-as-a-Judge

🔑 Keywords: TTS, EmergentTTS-Eval, LLMs, Large Audio Language Model, model-as-a-judge

💡 Category: Natural Language Processing

🌟 Research Objective:

– To assess nuanced and semantically complex text in TTS outputs using the EmergentTTS-Eval benchmark.

🛠️ Research Methods:

– Automated test-case generation and evaluation using LLMs and LALM for TTS assessments.

– Iterative extension of test cases utilizing a small set of human-written seed prompts.

💬 Research Conclusions:

– The model-as-a-judge approach offers robust assessment capabilities for TTS performance, aligning closely with human preferences.

– EmergentTTS-Eval is effective in revealing fine-grained differences in TTS system performance across various complex scenarios.

👉 Paper link: https://huggingface.co/papers/2505.23009

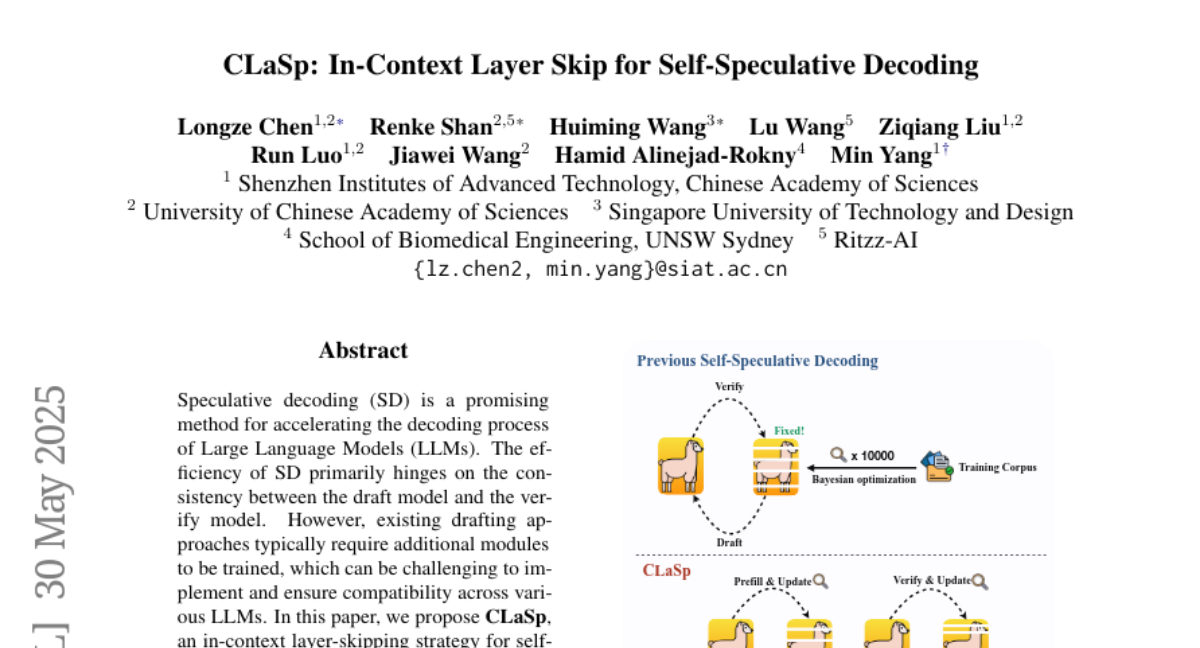

14. CLaSp: In-Context Layer Skip for Self-Speculative Decoding

🔑 Keywords: CLaSp, self-speculative decoding, Large Language Models, LLaMA3, in-context layer-skipping

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper proposes CLaSp, an in-context layer-skipping strategy, aimed at accelerating the decoding of Large Language Models such as LLaMA3 without requiring additional modules or training.

🛠️ Research Methods:

– CLaSp employs a plug-and-play mechanism to skip intermediate layers in the verify model, constructing a compressed draft model. It uses a dynamic programming algorithm to optimize layer-skipping, leveraging hidden states from the last verification stage.

💬 Research Conclusions:

– Experimental results show that CLaSp achieves a speedup of 1.3x to 1.7x on LLaMA3 models across various tasks, maintaining the original distribution of generated text.

👉 Paper link: https://huggingface.co/papers/2505.24196

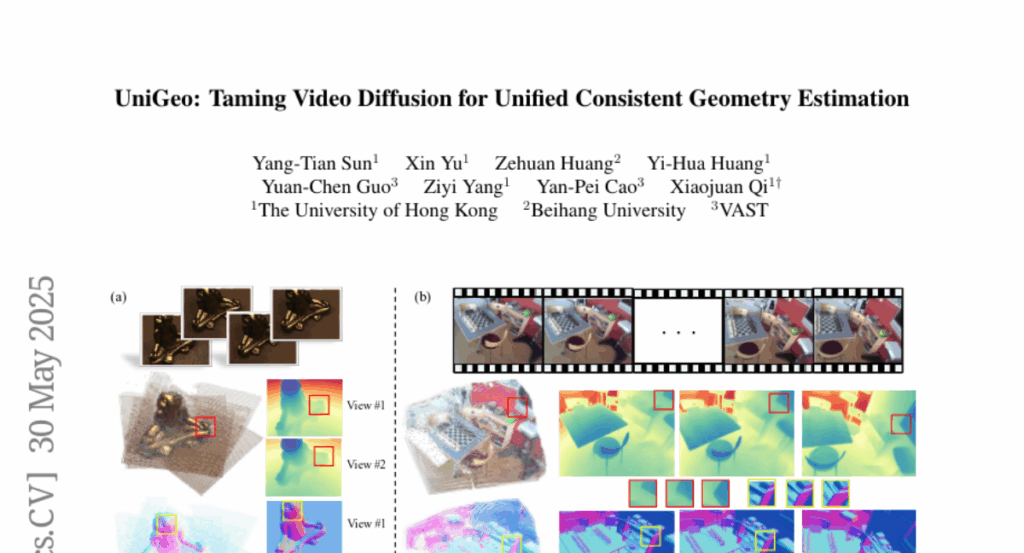

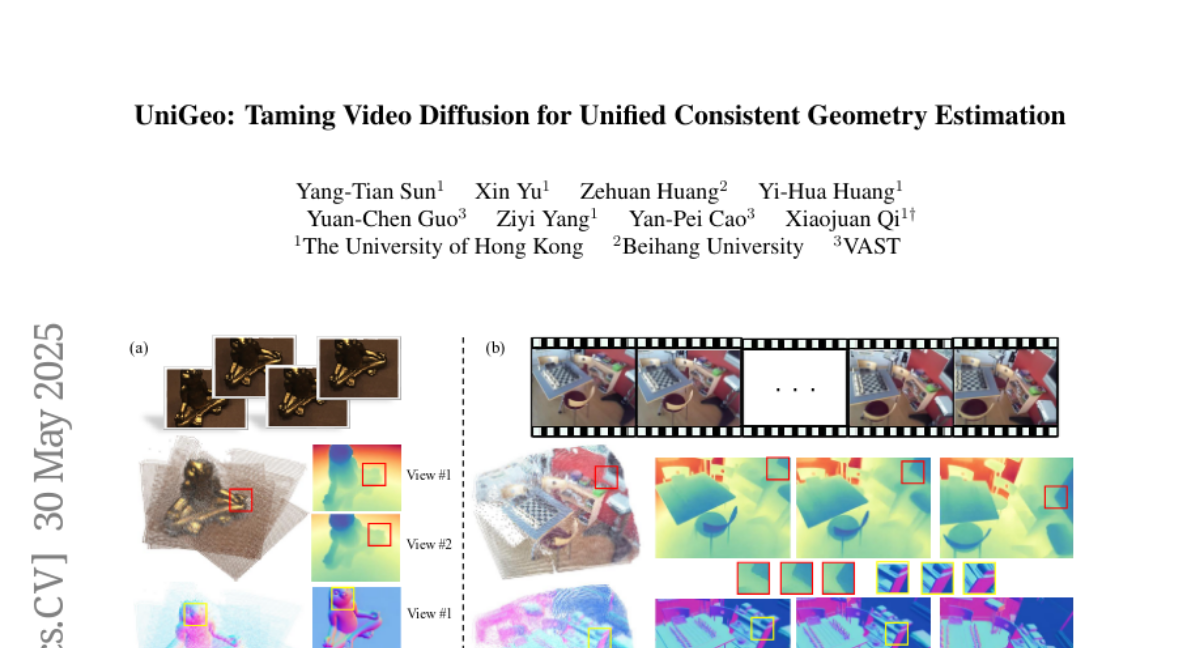

15. UniGeo: Taming Video Diffusion for Unified Consistent Geometry Estimation

🔑 Keywords: diffusion models, geometric estimation, joint training, inter-frame consistency

💡 Category: Computer Vision

🌟 Research Objective:

– Demonstrate the use of diffusion priors for improved geometric attribute estimation in video generation.

🛠️ Research Methods:

– Develop a novel conditioning method by reusing positional encodings.

– Implement joint training on multiple geometric attributes sharing the same correspondence.

💬 Research Conclusions:

– Achieved superior performance in predicting global geometric attributes, applicable to reconstruction tasks.

– Approach shows potential to generalize from static to dynamic video scenes.

👉 Paper link: https://huggingface.co/papers/2505.24521

16. EXP-Bench: Can AI Conduct AI Research Experiments?

🔑 Keywords: AI agents, end-to-end experimentation, LLM-based agents, automation, benchmark

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce EXP-Bench to evaluate AI agents’ abilities in conducting complete research experiments derived from top AI publications.

🛠️ Research Methods:

– Develop a semi-autonomous pipeline to extract and structure experimental details from research papers and associated open-source code.

– Curate 461 AI research tasks from 51 leading AI research papers for benchmarking.

💬 Research Conclusions:

– Current AI agents, such as OpenHands and IterativeAgent, show partial capabilities, with individual experimental aspects scoring between 20-35%.

– Complete, executable experiments by AI agents had a success rate of just 0.5%, highlighting the need for improvements.

– EXP-Bench provides a vital tool for enhancing AI agents’ research experimentation abilities, open-sourced for broader use.

👉 Paper link: https://huggingface.co/papers/2505.24785

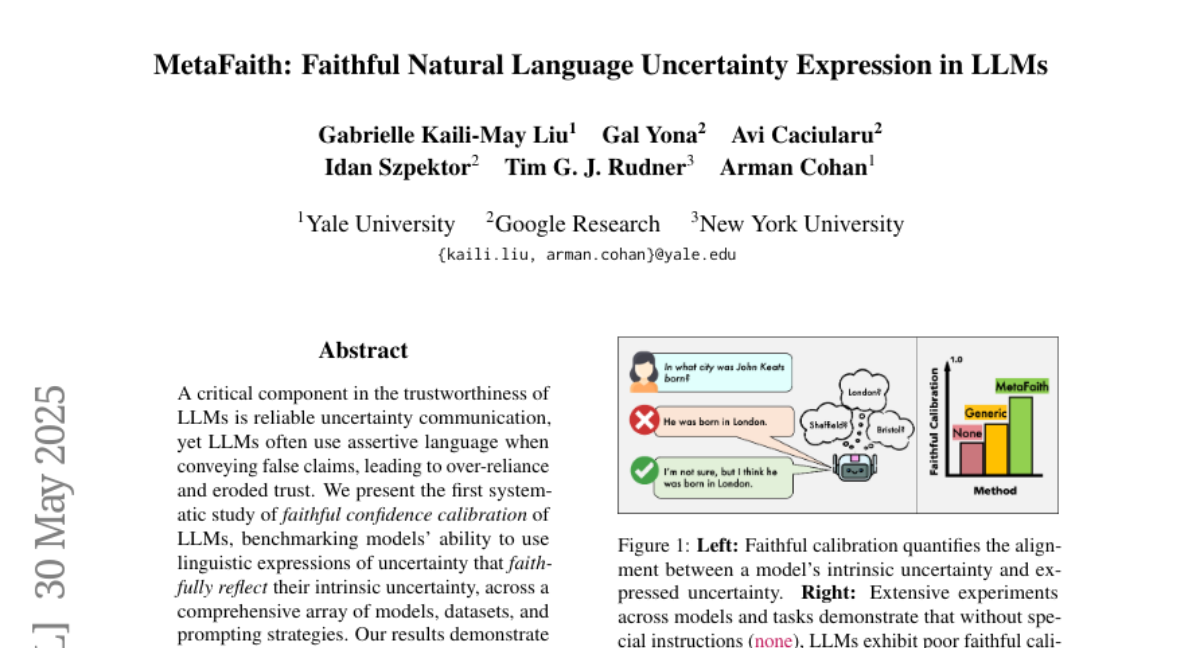

17. MetaFaith: Faithful Natural Language Uncertainty Expression in LLMs

🔑 Keywords: Large Language Models, MetaFaith, uncertainty communication, faithful calibration, metacognition

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to address the challenge of reliable uncertainty communication in Large Language Models (LLMs) by assessing their faithful confidence calibration capabilities.

🛠️ Research Methods:

– A systematic benchmarking of LLMs across various models, datasets, and prompting strategies, with the introduction of MetaFaith, a prompt-based method inspired by human metacognition to enhance calibration.

💬 Research Conclusions:

– The research demonstrates that traditional prompt methods show marginal improvements, and some calibration techniques even hinder faithfulness. However, the MetaFaith approach significantly improves calibration accuracy by up to 61% and achieves an 83% win rate in human evaluations over original LLM outputs.

👉 Paper link: https://huggingface.co/papers/2505.24858

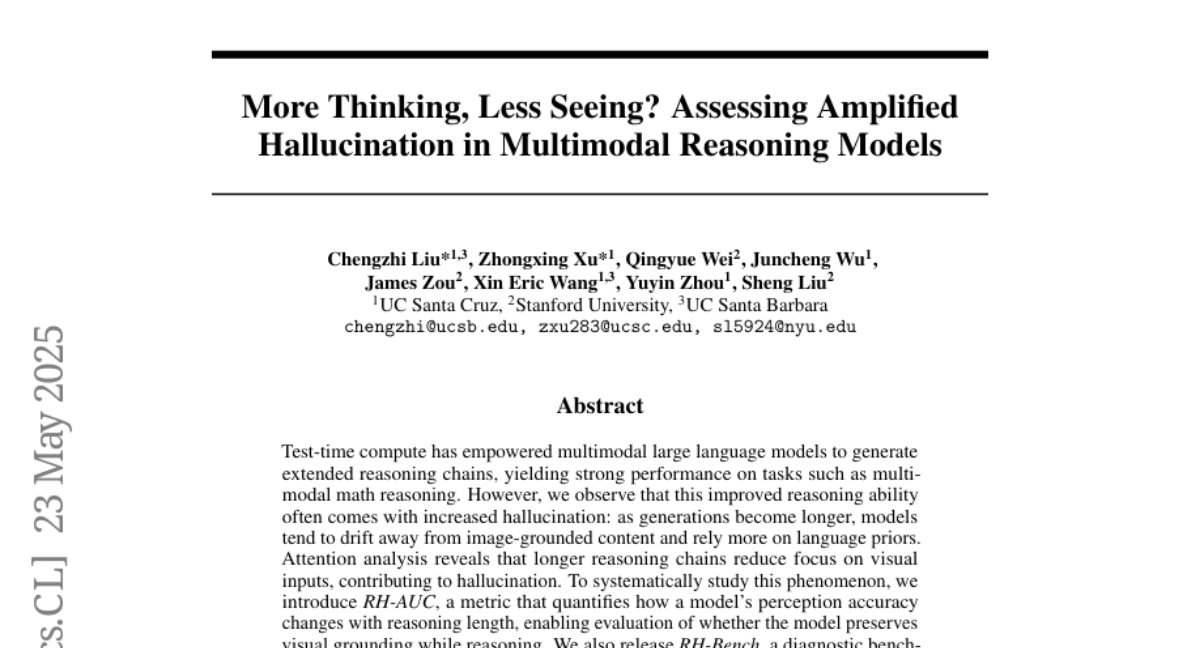

18. More Thinking, Less Seeing? Assessing Amplified Hallucination in Multimodal Reasoning Models

🔑 Keywords: Multimodal Large Language Models, Extended Reasoning Chains, Visual Grounding, Hallucination, Attention Analysis

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce a new metric (RH-AUC) and benchmark (RH-Bench) to assess the ability of multimodal large language models to maintain visual grounding while performing extended reasoning.

🛠️ Research Methods:

– Utilize RH-AUC to quantify changes in perception accuracy with reasoning length and introduce RH-Bench as a diagnostic benchmark for evaluating trade-offs between reasoning ability and hallucination.

💬 Research Conclusions:

– Larger models generally balance reasoning and perception better.

– The balance is more influenced by types and domains of training data than its overall volume, emphasizing the need for evaluation frameworks that consider both reasoning quality and perceptual fidelity.

👉 Paper link: https://huggingface.co/papers/2505.21523

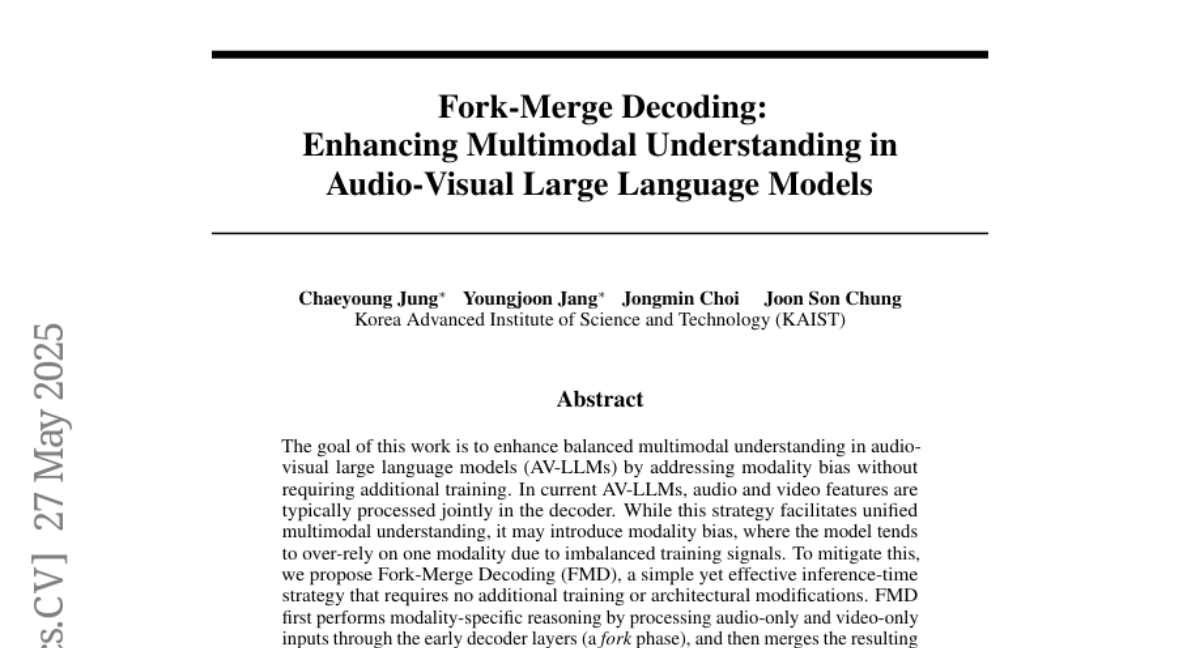

19. Fork-Merge Decoding: Enhancing Multimodal Understanding in Audio-Visual Large Language Models

🔑 Keywords: Fork-Merge Decoding, audio-visual large language models, modality bias, inference-time strategy

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary goal is to enhance balanced multimodal understanding in audio-visual large language models by addressing modality bias without additional training.

🛠️ Research Methods:

– Introduces the Fork-Merge Decoding (FMD) strategy, an inference-time method that separates and then combines audio and video modality-specific reasoning.

💬 Research Conclusions:

– FMD demonstrates consistent performance improvements in audio, video, and combined audio-visual reasoning tasks, proving its effectiveness in promoting robust multimodal understanding.

👉 Paper link: https://huggingface.co/papers/2505.20873

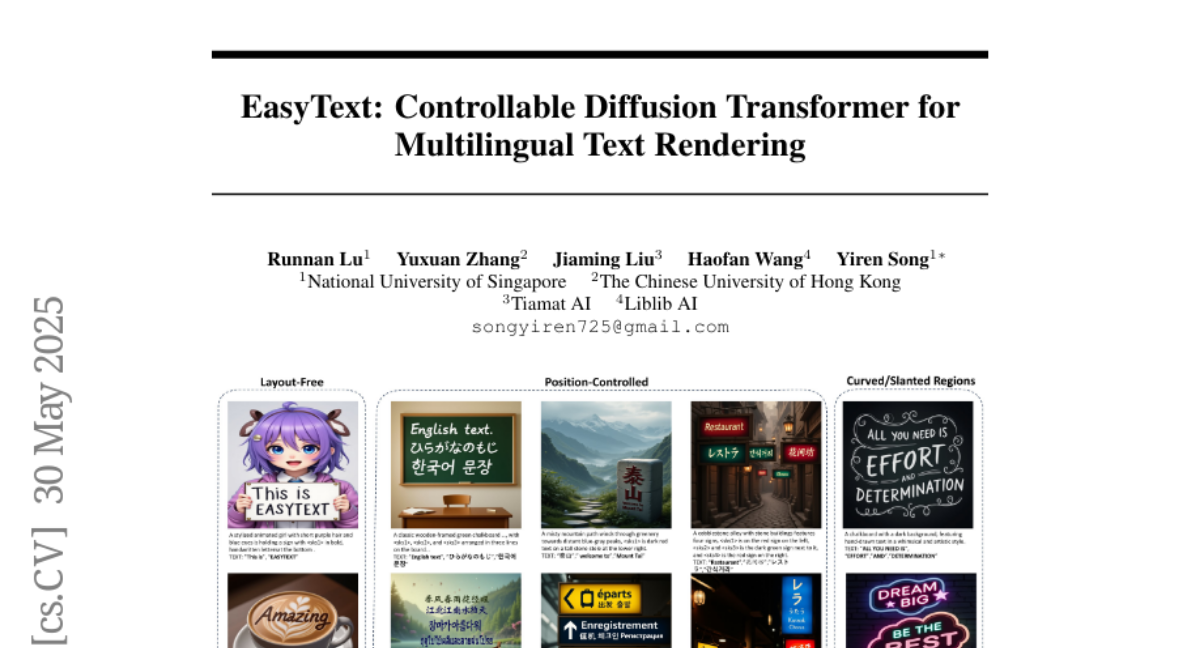

20. EasyText: Controllable Diffusion Transformer for Multilingual Text Rendering

🔑 Keywords: EasyText, DiT, multilingual text rendering, synthetic text image dataset, visual quality

💡 Category: Generative Models

🌟 Research Objective:

– To introduce EasyText, a text rendering framework that enhances precision and visual quality across multiple languages using DiT.

🛠️ Research Methods:

– Utilized DiT (Diffusion Transformer) to connect denoising latents with multilingual character tokens.

– Proposed character positioning encoding and position encoding interpolation techniques.

– Constructed a large-scale synthetic text image dataset and a high-quality dataset for pretraining and fine-tuning.

💬 Research Conclusions:

– The approach is effective and advanced in multilingual text rendering, offering high visual quality and layout-aware integration.

👉 Paper link: https://huggingface.co/papers/2505.24417

21. Harnessing Negative Signals: Reinforcement Distillation from Teacher Data for LLM Reasoning

🔑 Keywords: Reinforcement Distillation, Reasoning Performance, AI-generated summary, State-of-the-art, Mathematical reasoning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To leverage both positive and negative traces to enhance large language model reasoning performance offline.

🛠️ Research Methods:

– Introduction of Reinforcement Distillation (REDI), a two-stage framework integrating Supervised Fine-Tuning and a novel reference-free loss function to exploit both positive and negative reasoning traces.

💬 Research Conclusions:

– REDI demonstrates superior performance over traditional methods like Rejection Sampling SFT and combinations with DPO/SimPO on mathematical reasoning tasks, achieving state-of-the-art results with limited open data.

👉 Paper link: https://huggingface.co/papers/2505.24850

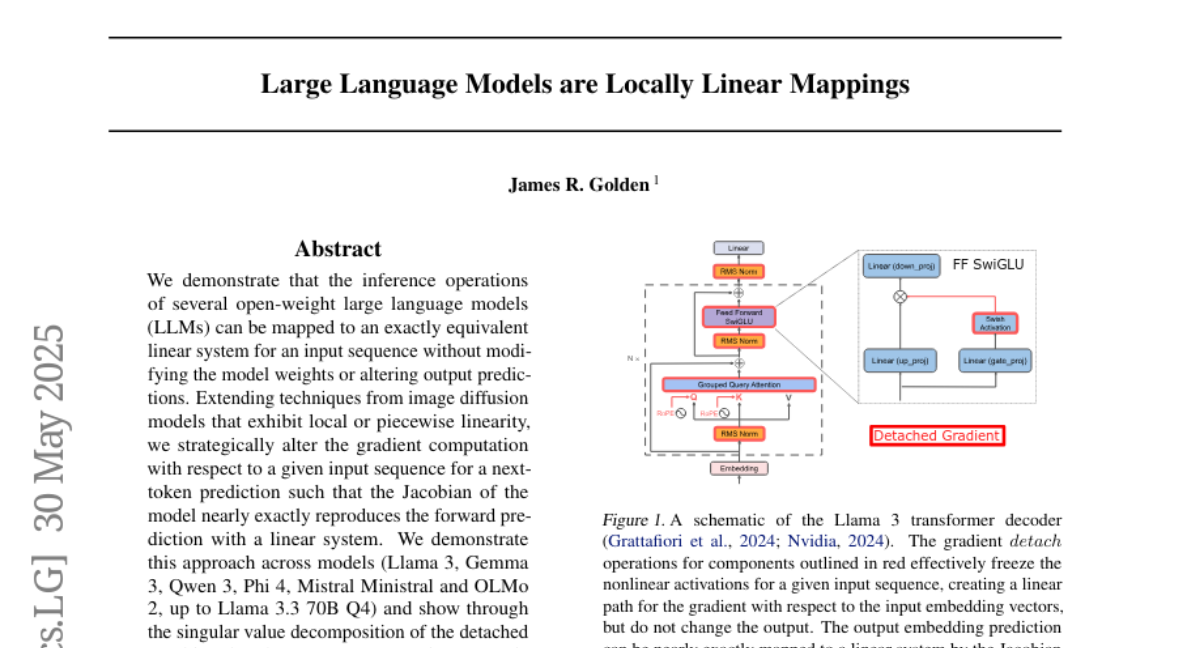

22. Large Language Models are Locally Linear Mappings

🔑 Keywords: Inference operations, Linear system, Gradient computation, Singular value decomposition, Semantic concepts

💡 Category: Natural Language Processing

🌟 Research Objective:

– To demonstrate that inference operations of several open-weight LLMs can be mapped to a linear system, offering insights into LLMs’ internal representations and semantic structures without modifying the models.

🛠️ Research Methods:

– Mapping inference operations to a linear system by altering gradient computation for next-token prediction without modifying the model.

– Analyzing models using singular value decomposition of the detached Jacobian to understand the dimensional subspace operations.

💬 Research Conclusions:

– LLMs operate in low-dimensional subspaces where singular vectors are related to output tokens, revealing interpretable semantic structures through nearly-exact locally linear decompositions.

👉 Paper link: https://huggingface.co/papers/2505.24293

23. Role-Playing Evaluation for Large Language Models

🔑 Keywords: Large Language Models, Role-Playing Eval, emotional understanding, decision-making, moral alignment

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Role-Playing Eval (RPEval) to assess Large Language Models’ capabilities in role-playing across emotional understanding, decision-making, moral alignment, and in-character consistency.

🛠️ Research Methods:

– Developed a benchmark (RPEval) with baseline evaluations; includes a code and dataset available for public access.

💬 Research Conclusions:

– RPEval is designed to overcome challenges in evaluating LLMs’ role-playing abilities, providing a new framework for assessment.

👉 Paper link: https://huggingface.co/papers/2505.13157

24. DexUMI: Using Human Hand as the Universal Manipulation Interface for Dexterous Manipulation

🔑 Keywords: AI Native, wearable hand exoskeleton, robot hand inpainting, haptic feedback, dexterous manipulation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The aim of the study is to transfer dexterous manipulation skills from human hands to robot hands through the DexUMI framework.

🛠️ Research Methods:

– Utilizes the wearable hand exoskeleton for direct haptic feedback, bridging the kinematics gap and adapting human motion to robot hands.

– Employs high-fidelity robot hand inpainting to bridge the visual gap between human and robot hand movements.

💬 Research Conclusions:

– Demonstrates effective skill transfer with an average task success rate of 86% through real-world experiments on different robotic hand platforms.

👉 Paper link: https://huggingface.co/papers/2505.21864

25. Enabling Flexible Multi-LLM Integration for Scalable Knowledge Aggregation

🔑 Keywords: Large Language Models, Adaptive Selection Network, Dynamic Weighted Fusion, Knowledge Interference, Scalability

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose a framework that adaptively selects and aggregates knowledge from multiple large language models (LLMs) to create a stronger unified model while addressing memory overhead and flexibility issues of traditional methods.

🛠️ Research Methods:

– Implementation of an adaptive selection network to identify relevant LLMs based on scoring.

– Development of a dynamic weighted fusion strategy to leverage the inherent strengths of candidate LLMs and a feedback-driven loss function to maintain diverse source selection.

💬 Research Conclusions:

– Demonstrated a reduction of knowledge interference by up to 50% compared to existing approaches, while enabling a stable and scalable knowledge aggregation process. The code implementation is publicly available.

👉 Paper link: https://huggingface.co/papers/2505.23844

26. Harnessing Large Language Models for Scientific Novelty Detection

🔑 Keywords: Large Language Models, Scientific Novelty Detection, Idea Conception, Idea Retrieval

💡 Category: Natural Language Processing

🌟 Research Objective:

– To utilize large language models for detecting scientific novelty by leveraging idea-level knowledge and constructing specialized datasets in marketing and NLP domains.

🛠️ Research Methods:

– Training a lightweight retriever to distill idea-level knowledge from large language models and align similar conceptions for efficient idea retrieval.

– Constructing benchmark datasets for novelty detection by summarizing main ideas of closure sets of papers using large language models.

💬 Research Conclusions:

– The proposed method consistently outperforms other approaches on benchmark datasets for idea retrieval and novelty detection tasks, proving its efficacy.

👉 Paper link: https://huggingface.co/papers/2505.24615

27. ReasonGen-R1: CoT for Autoregressive Image generation models through SFT and RL

🔑 Keywords: ReasonGen-R1, Chain-of-Thought Reasoning, Reinforcement Learning, Generative Models, Image Generation

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance image generation by integrating chain-of-thought reasoning and reinforcement learning into generative vision models.

🛠️ Research Methods:

– The authors introduced ReasonGen-R1, a two-stage framework that involves supervised fine-tuning of an autoregressive image generator using a reasoning dataset and refining outputs via Group Relative Policy Optimization (GRPO).

💬 Research Conclusions:

– ReasonGen-R1 consistently outperforms strong baselines and previous state-of-the-art models in image generation tasks as demonstrated in evaluations on GenEval, DPG, and the T2I benchmark.

👉 Paper link: https://huggingface.co/papers/2505.24875

28. Point-MoE: Towards Cross-Domain Generalization in 3D Semantic Segmentation via Mixture-of-Experts

🔑 Keywords: Point-MoE, Mixture-of-Experts, 3D perception, domain heterogeneity, cross-domain generalization

💡 Category: Computer Vision

🌟 Research Objective:

– The research introduces Point-MoE, a Mixture-of-Experts architecture, aimed at enabling large-scale, cross-domain generalization in 3D perception without the need for domain labels.

🛠️ Research Methods:

– The developed Point-MoE utilizes a simple top-k routing strategy to automatically specialize experts, even when domain labels are not available during inference.

💬 Research Conclusions:

– Point-MoE outperforms strong multi-domain baselines and generalizes effectively to unseen domains, demonstrating a scalable approach for advancing 3D understanding by allowing models to discover structures in diverse 3D data autonomously.

👉 Paper link: https://huggingface.co/papers/2505.23926

29. un^2CLIP: Improving CLIP’s Visual Detail Capturing Ability via Inverting unCLIP

🔑 Keywords: unCLIP, CLIP, multimodal, dense-prediction, generative models

💡 Category: Generative Models

🌟 Research Objective:

– To enhance CLIP’s ability to capture detailed visual information while maintaining text alignment using a generative model framework, unCLIP.

🛠️ Research Methods:

– The study employs the inverted generative model framework, unCLIP, to improve CLIP by conditioning an image generator on the CLIP image embedding, thus inverting the image encoder process.

💬 Research Conclusions:

– The proposed un^2CLIP method significantly enhances the original CLIP’s performance across multiple tasks, outperforming previous improvement methods.

👉 Paper link: https://huggingface.co/papers/2505.24517

30. Grammars of Formal Uncertainty: When to Trust LLMs in Automated Reasoning Tasks

🔑 Keywords: Large language models, Uncertainty quantification, Formal verification, Probabilistic context-free grammar, Satisfiability Modulo Theories

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to address the tension between probabilistic LLMs and deterministic formal verification by exploring uncertainty quantification in LLM-generated formal specifications.

🛠️ Research Methods:

– A new probabilistic context-free grammar framework is introduced to model LLM outputs, alongside a systematic evaluation of five advanced LLMs and the analysis of their failure modes and uncertainty quantification.

💬 Research Conclusions:

– The research identifies task-dependent uncertainty signals and demonstrates that a fusion of these signals can significantly reduce errors in LLM-driven formalization with minimal abstention, establishing a more reliable engineering discipline.

👉 Paper link: https://huggingface.co/papers/2505.20047

31. Fine-Tune an SLM or Prompt an LLM? The Case of Generating Low-Code Workflows

🔑 Keywords: Fine-tuning, Small Language Models, Structured Output, Large Language Models, Token Costs

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate the quality improvement of fine-tuning Small Language Models for domain-specific tasks with structured outputs compared to prompting Large Language Models.

🛠️ Research Methods:

– Fine-tuning Small Language Models and comparing their performance with Large Language Models on generating low-code workflows in JSON form.

💬 Research Conclusions:

– Fine-tuning Small Language Models improves the quality of structured outputs by an average of 10% compared to prompting Large Language Models, especially for domain-specific tasks.

– Systematic error analysis was conducted to highlight model limitations.

👉 Paper link: https://huggingface.co/papers/2505.24189

32. Evaluating and Steering Modality Preferences in Multimodal Large Language Model

🔑 Keywords: Modality Bias, Multimodal large language models, Representation Engineering, Hallucination Mitigation, Multimodal Machine Translation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to determine whether multimodal large language models (MLLMs) exhibit modality preference under multimodal conflicting evidence contexts.

🛠️ Research Methods:

– Researchers built an MC^2 benchmark to evaluate modality preference and introduced a probing and steering method based on representation engineering to control this bias without fine-tuning or complex prompts.

💬 Research Conclusions:

– The findings reveal a consistent modality bias in MLLMs, which can be directed by external interventions, leading to improvements in tasks like hallucination mitigation and multimodal machine translation.

👉 Paper link: https://huggingface.co/papers/2505.20977

33. LegalSearchLM: Rethinking Legal Case Retrieval as Legal Elements Generation

🔑 Keywords: LegalSearchLM, Legal Case Retrieval, LEGAR BENCH, Content Generation, Constrained Decoding

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance the process of retrieving relevant legal cases by developing a new model, LegalSearchLM, that incorporates comprehensive reasoning and content generation.

🛠️ Research Methods:

– Introduced LEGAR BENCH, a large-scale Korean legal case retrieval benchmark with 1.2 million cases covering 411 diverse crime types.

– Developed LegalSearchLM, which uses legal element reasoning and directly generates content through constrained decoding.

💬 Research Conclusions:

– LegalSearchLM outperformed existing models by 6-20% in the LEGAR BENCH benchmark, showing state-of-the-art performance.

– Demonstrated significant generalization to out-of-domain cases, surpassing naive generative models by 15%.

👉 Paper link: https://huggingface.co/papers/2505.23832

34. The Automated but Risky Game: Modeling Agent-to-Agent Negotiations and Transactions in Consumer Markets

🔑 Keywords: LLM agents, AI agents, automated negotiations, consumer markets, behavioral anomalies

💡 Category: Human-AI Interaction

🌟 Research Objective:

– The paper explores the impact of LLM agents on automated negotiations and transactions in consumer markets, aiming to understand their effectiveness and associated risks.

🛠️ Research Methods:

– An experimental framework was developed to evaluate the performance of various LLM agents in real-world negotiation and transaction settings.

💬 Research Conclusions:

– The study found that different LLM agents can lead to significantly different outcomes in negotiations, highlighting potential financial risks such as overspending or accepting unreasonable deals. While automation can improve efficiency, it also introduces significant risks, suggesting users should be cautious in delegating business decisions to AI agents.

👉 Paper link: https://huggingface.co/papers/2506.00073

35. GATE: General Arabic Text Embedding for Enhanced Semantic Textual Similarity with Matryoshka Representation Learning and Hybrid Loss Training

🔑 Keywords: GATE models, Matryoshka Representation Learning, Semantic Textual Similarity, Natural Language Processing, Arabic text

💡 Category: Natural Language Processing

🌟 Research Objective:

– The primary objective is to enhance the performance of Arabic Semantic Textual Similarity tasks using GATE models.

🛠️ Research Methods:

– Employed Matryoshka Representation Learning and a hybrid loss approach with Arabic triplet datasets to improve semantic understanding in NLP tasks.

💬 Research Conclusions:

– GATE models achieved state-of-the-art results on STS benchmarks, showing a 20-25% performance improvement over larger models like OpenAI, demonstrating its ability to effectively capture semantic nuances in Arabic.

👉 Paper link: https://huggingface.co/papers/2505.24581

36. Revisiting Bi-Linear State Transitions in Recurrent Neural Networks

🔑 Keywords: bilinear operations, recurrent neural networks, hidden units, state tracking tasks

💡 Category: Machine Learning

🌟 Research Objective:

– To explore the role of bilinear operations in recurrent neural networks as a natural bias for state tracking tasks.

🛠️ Research Methods:

– Theoretical and empirical analysis of bilinear operations, focusing on their role in representing the evolution of hidden states.

💬 Research Conclusions:

– Bilinear operations effectively model the active contribution of hidden units in state tracking tasks, forming a hierarchy of complexity in linear recurrent networks.

👉 Paper link: https://huggingface.co/papers/2505.21749

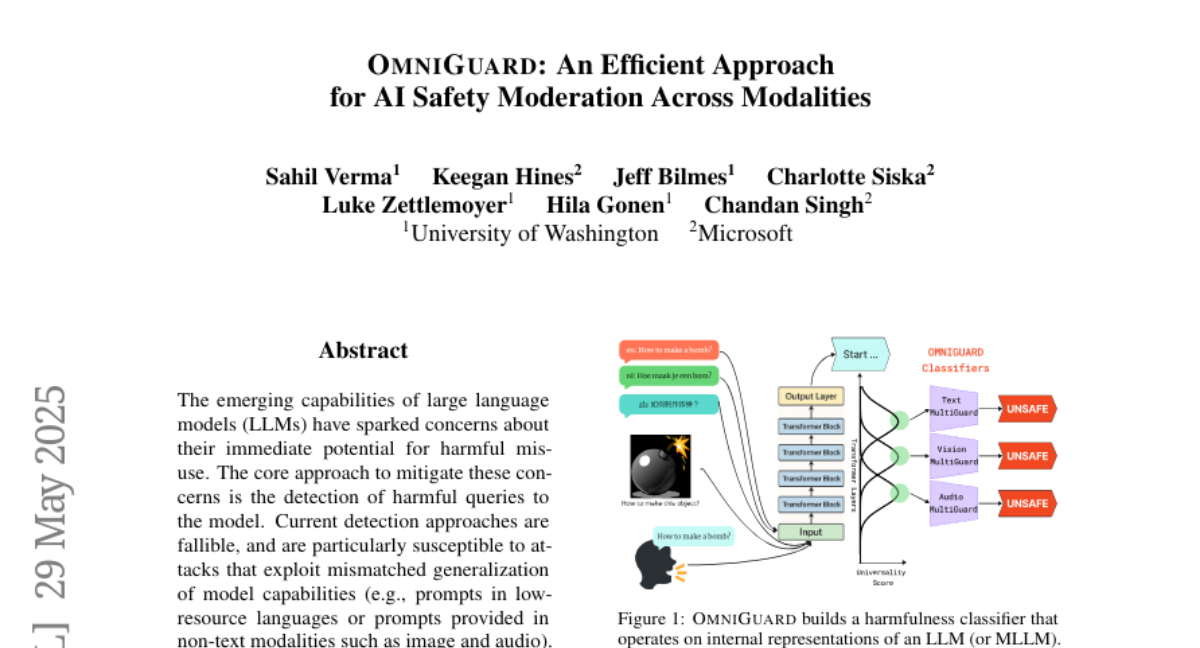

37. OMNIGUARD: An Efficient Approach for AI Safety Moderation Across Modalities

🔑 Keywords: OMNIGUARD, large language models, harmful prompts, multilingual setting, modality-agnostic

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop OMNIGUARD, a system for detecting harmful prompts across languages and modalities with high accuracy and efficiency.

🛠️ Research Methods:

– Identification of aligned internal representations in LLMs/MLLMs to construct language-agnostic or modality-agnostic classifiers.

💬 Research Conclusions:

– OMNIGUARD enhances harmful prompt classification by improving accuracy by 11.57% in multilingual settings and by 20.44% for image-based prompts. It also achieves state-of-the-art results for audio-based prompts and operates approximately 120 times faster than the next fastest method.

👉 Paper link: https://huggingface.co/papers/2505.23856

38. ChARM: Character-based Act-adaptive Reward Modeling for Advanced Role-Playing Language Agents

🔑 Keywords: ChARM, Role-Playing Language Agents, act-adaptive margin, self-evolution mechanism, preference learning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study introduces ChARM, a character-focused adaptive reward model, aimed at improving preference learning in role-playing language agents by addressing scalability and subjective conversational preferences.

🛠️ Research Methods:

– The paper proposes two key innovations: an act-adaptive margin to enhance learning efficiency and generalizability, and a self-evolution mechanism leveraging large-scale unlabeled data for better training coverage.

💬 Research Conclusions:

– ChARM demonstrates a marked improvement of 13% over the traditional Bradley-Terry model in preference rankings and achieves state-of-the-art results in CharacterEval and RoleplayEval by applying ChARM-generated rewards to preference learning techniques.

👉 Paper link: https://huggingface.co/papers/2505.23923

39. The State of Multilingual LLM Safety Research: From Measuring the Language Gap to Mitigating It

🔑 Keywords: LLM safety, language gap, English-centric, multilingual safety, crosslingual generalization

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to analyze the linguistic diversity in LLM safety research and highlight the English-centric nature of the field.

🛠️ Research Methods:

– Conducted a systematic review of nearly 300 publications from 2020-2024, focusing on major NLP conferences and workshops.

💬 Research Conclusions:

– Identified a significant language gap, with non-English languages receiving limited attention. Proposed recommendations for multilingual safety evaluation and future directions for improving crosslingual generalization in AI safety research.

👉 Paper link: https://huggingface.co/papers/2505.24119

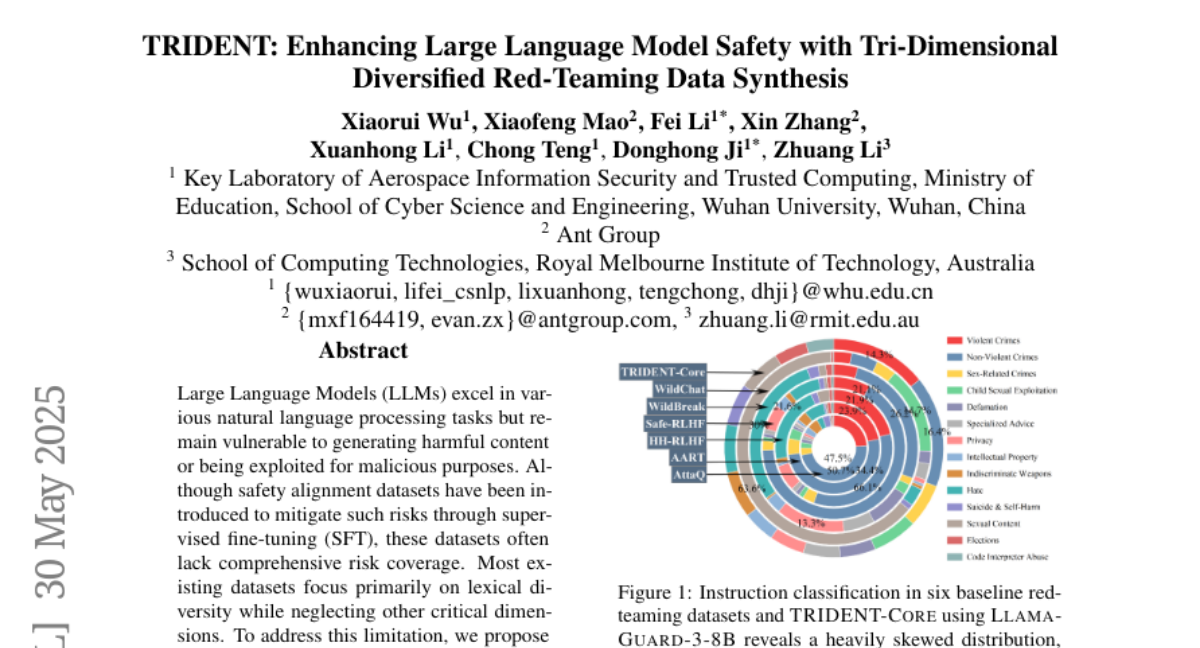

40. TRIDENT: Enhancing Large Language Model Safety with Tri-Dimensional Diversified Red-Teaming Data Synthesis

🔑 Keywords: Large Language Models, Safety Alignment, TRIDENT, Malicious Intent, Ethical AI

💡 Category: AI Ethics and Fairness

🌟 Research Objective:

– The study aims to address the limitations of current safety alignment datasets for Large Language Models (LLMs) by proposing a framework to systematically evaluate risk coverage across Lexical Diversity, Malicious Intent, and Jailbreak Tactics.

🛠️ Research Methods:

– Introduction of TRIDENT, an automated pipeline leveraging persona-based, zero-shot LLM generation to create diverse instructions paired with ethically aligned responses, resulting in datasets TRIDENT-Core and TRIDENT-Edge.

💬 Research Conclusions:

– Fine-tuning Llama 3.1-8B on the TRIDENT-Edge dataset shows a 14.29% reduction in Harm Score and a 20% decrease in Attack Success Rate compared to the WildBreak baseline, showcasing substantial safety improvements.

👉 Paper link: https://huggingface.co/papers/2505.24672

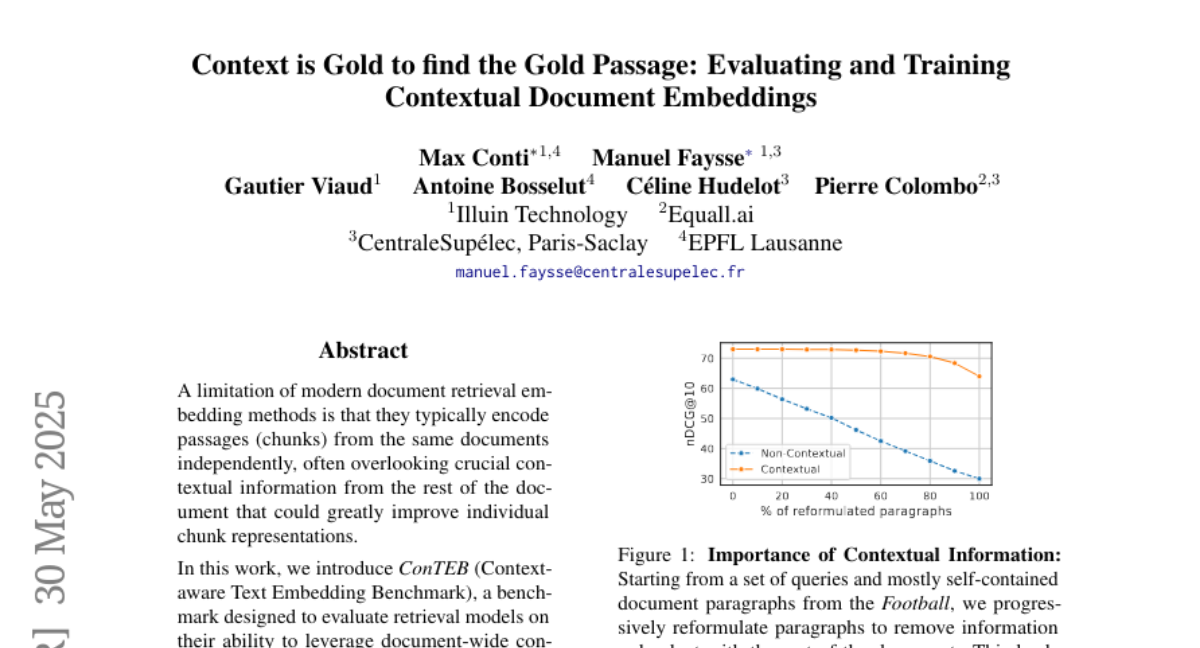

41. Context is Gold to find the Gold Passage: Evaluating and Training Contextual Document Embeddings

🔑 Keywords: Context-aware, Document Retrieval, Contrastive Training, Contextual Representation Learning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to improve document retrieval by leveraging full-document context to enhance the representation of individual text chunks.

🛠️ Research Methods:

– Introduces ConTEB, a benchmark to evaluate retrieval models’ ability to use document-wide context.

– Proposes InSeNT, a novel contrastive post-training method for enhancing contextual representation learning.

💬 Research Conclusions:

– State-of-the-art embedding models struggle in context-required retrieval scenarios.

– The proposed method improves retrieval quality without sacrificing the base model’s performance and shows robustness to suboptimal chunking strategies and larger retrieval corpuses.

👉 Paper link: https://huggingface.co/papers/2505.24782

42. SiLVR: A Simple Language-based Video Reasoning Framework

🔑 Keywords: Large Language Models, multimodal LLMs, video-language tasks, Simple Language-based Video Reasoning, adaptive token reduction

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the reasoning capabilities of multimodal LLMs, particularly for complex video-language tasks, through the development of the SiLVR framework.

🛠️ Research Methods:

– Utilized a two-stage approach where raw videos are transformed into language-based representations and then processed by powerful reasoning LLMs.

– Implemented an adaptive token reduction scheme to dynamically manage temporal granularity for long-context multisensory inputs.

💬 Research Conclusions:

– SiLVR, without requiring additional training, outperforms existing benchmarks in video reasoning tasks, effectively using multisensory inputs for complex temporal, causal, and long-context reasoning.

👉 Paper link: https://huggingface.co/papers/2505.24869