AI Native Daily Paper Digest – 20250618

1. MultiFinBen: A Multilingual, Multimodal, and Difficulty-Aware Benchmark for Financial LLM Evaluation

🔑 Keywords: Multilingual, Multimodal, LLMs, Benchmark, Financial Domain

💡 Category: AI in Finance

🌟 Research Objective:

– Introduce MultiFinBen, a comprehensive multilingual and multimodal benchmark for financial domain tasks.

🛠️ Research Methods:

– Evaluate large language models (LLMs) across various modalities (text, vision, audio) and linguistic settings on domain-specific tasks.

– Propose two novel tasks, PolyFiQA-Easy and PolyFiQA-Expert, which require complex reasoning over mixed-language inputs, plus two OCR-embedded financial QA tasks, EnglishOCR and SpanishOCR.

💬 Research Conclusions:

– Even state-of-the-art models struggle with complex cross-lingual and multimodal tasks in the financial domain.

– MultiFinBen is publicly released to support ongoing advancements in financial studies and applications.

👉 Paper link: https://huggingface.co/papers/2506.14028

2. Scaling Test-time Compute for LLM Agents

🔑 Keywords: test-time scaling, language agents, parallel sampling, verification, diversified rollouts

💡 Category: Natural Language Processing

🌟 Research Objective:

– This study aims to explore how test-time scaling methods affect the performance of LLM-based language agents.

🛠️ Research Methods:

– The research examines various test-time scaling strategies, including parallel sampling, sequential revision, and verification methods, to determine their impact on language agents.

💬 Research Conclusions:

– Applying test-time scaling can significantly improve agent performance.

– Agents benefit from knowing optimal moments to reflect on their actions.

– List-wise verification and result merging approaches are the most effective.

– Enhanced rollout diversity positively affects task performance of agents.

👉 Paper link: https://huggingface.co/papers/2506.12928

3. CMI-Bench: A Comprehensive Benchmark for Evaluating Music Instruction Following

🔑 Keywords: audio-text large language models, music information retrieval, CMI-Bench, instruction-following

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce CMI-Bench, a comprehensive instruction-following benchmark for evaluating audio-text large language models on music information retrieval tasks.

🛠️ Research Methods:

– Reinterpreting traditional music information retrieval annotations as instruction-following formats.

– Use of standardized evaluation metrics for direct comparability with supervised approaches.

💬 Research Conclusions:

– CMI-Bench indicates significant performance gaps between audio-text LLMs and supervised models, revealing cultural, chronological, and gender biases.

– It establishes a unified foundation for evaluating music instruction following, promoting advancements in music-aware LLMs.

👉 Paper link: https://huggingface.co/papers/2506.12285

4. LongLLaDA: Unlocking Long Context Capabilities in Diffusion LLMs

🔑 Keywords: Diffusion LLMs, Auto-regressive LLMs, Long-context tasks, Rotary Position Embedding (RoPE), LongLLaDA

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to investigate the long-context performance of diffusion LLMs compared to auto-regressive LLMs and identify their unique characteristics.

🛠️ Research Methods:

– Systematic investigation and comparison of diffusion LLMs and auto-regressive LLMs, introduction of the LongLLaDA method for extending context windows, and analysis through the Rotary Position Embedding (RoPE) scaling theory.

💬 Research Conclusions:

– Diffusion LLMs maintain stable perplexity during context extrapolation and exhibit a local perception phenomenon in long-context tasks, outperforming auto-regressive models in some cases. The proposed LongLLaDA method effectively extends context windows and provides a new context extrapolation method for diffusion LLMs.

👉 Paper link: https://huggingface.co/papers/2506.14429
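
As a rough illustration of what RoPE scaling changes, the sketch below computes RoPE inverse frequencies with the commonly used NTK-aware base rescaling. The summary above does not spell out the exact scaling rule, so the formula, the function name `rope_inv_freq`, and the example values are assumptions for illustration rather than the paper's implementation.

```python
def rope_inv_freq(head_dim: int, base: float = 10000.0, scale: float = 1.0) -> list[float]:
    """Return the RoPE inverse frequencies for one attention head.

    With scale > 1 the base is enlarged (NTK-aware rescaling), which leaves the
    fastest-rotating dimension untouched and slows the slowest one by `scale`.
    """
    if head_dim % 2 != 0 or head_dim <= 2:
        raise ValueError("head_dim must be an even number greater than 2")
    # NTK-aware rule (assumed here): base' = base * scale^(d / (d - 2)).
    scaled_base = base * scale ** (head_dim / (head_dim - 2))
    return [scaled_base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


original = rope_inv_freq(64)
extended = rope_inv_freq(64, scale=4.0)  # hypothetical 4x context extension
print(original[0], extended[0])    # highest frequency is unchanged
print(original[-1], extended[-1])  # lowest frequency shrinks by the scale factor
```

High-frequency dimensions, which encode local order, are preserved, while low-frequency dimensions are stretched to cover longer distances.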

5. Reinforcement Learning with Verifiable Rewards Implicitly Incentivizes Correct Reasoning in Base LLMs

🔑 Keywords: RLVR, Large Language Models, CoT-Pass@K, Machine Reasoning, Training Dynamics

💡 Category: Machine Learning

🌟 Research Objective:

– To resolve the apparent paradox that RLVR-tuned models underperform their base models on Pass@K, by analyzing the limitations of that metric.

🛠️ Research Methods:

– Introduction of a more precise evaluation metric, CoT-Pass@K, which demands correct reasoning paths alongside correct final answers.

– Empirical analysis of training dynamics to validate enhanced reasoning capabilities of RLVR.

💬 Research Conclusions:

– RLVR successfully incentivizes logical integrity and generalization of correct reasoning across all values of K, highlighting its potential to advance machine reasoning.

👉 Paper link: https://huggingface.co/papers/2506.14245
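
To make the difference between the two metrics concrete, here is a minimal sketch built on the widely used unbiased Pass@K estimator, 1 - C(n-c, k) / C(n, k), for n samples of which c are correct. Treating CoT-Pass@K as the same estimator applied to the count of samples whose reasoning and final answer are both correct is an assumption drawn from the summary above, not the authors' code; the function names and sample counts are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of P(at least one of k drawn samples is correct)."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k incorrect samples exist.
    return 1.0 - comb(n - c, k) / comb(n, k)


def cot_pass_at_k(n: int, c_cot_and_answer: int, k: int) -> float:
    """Same estimator, counting only samples with correct reasoning AND answer."""
    return pass_at_k(n, c_cot_and_answer, k)


# Hypothetical problem: 16 samples, 6 reach the right answer,
# but only 2 of those also have a correct chain of thought.
print(pass_at_k(16, 6, 4))
print(cot_pass_at_k(16, 2, 4))
```

Because the stricter count can only be smaller or equal, CoT-Pass@K never exceeds Pass@K for the same samples; the gap reflects answers reached through flawed reasoning.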

6. Xolver: Multi-Agent Reasoning with Holistic Experience Learning Just Like an Olympiad Team

🔑 Keywords: Xolver, multi-agent reasoning, large language models, experience-aware language agents, iterative refinement

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance the performance of large language models (LLMs) on complex reasoning tasks by integrating persistent memory and diverse experiential modalities.

🛠️ Research Methods:

– Introduction of Xolver, a training-free multi-agent reasoning framework that incorporates external and self-retrieval, tool use, collaborative interactions, and agent-driven evaluation to create an evolving memory of holistic experience.

💬 Research Conclusions:

– Xolver consistently outperforms specialized reasoning agents and sets new best results on the evaluated benchmarks, demonstrating the importance of holistic experience learning for expert-level reasoning.

👉 Paper link: https://huggingface.co/papers/2506.14234

7. Efficient Medical VIE via Reinforcement Learning

🔑 Keywords: Reinforcement Learning, Medical VIE, Fine-tuning, Qwen2.5-VL-7B, Precision-recall reward mechanism

💡 Category: AI in Healthcare

🌟 Research Objective:

– The study aims to enhance the state-of-the-art performance in medical Visual Information Extraction (VIE) using a limited number of annotated samples.

🛠️ Research Methods:

– The research utilizes a Reinforcement Learning with Verifiable Rewards (RLVR) framework, fine-tuning Qwen2.5-VL-7B to balance precision and recall, using innovative sampling strategies, and focusing on dataset diversity.

💬 Research Conclusions:

– The RLVR framework significantly improves F1, precision, and recall on tasks similar to the medical training data, but struggles on dissimilar tasks, highlighting the need for domain-specific optimization.

👉 Paper link: https://huggingface.co/papers/2506.13363
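
A reward that balances precision and recall can be sketched as an F1 score over extracted fields. The field-level exact matching, the function name `f1_reward`, and the example record below are assumptions for illustration; the summary does not describe the paper's actual reward formula.

```python
def f1_reward(predicted: dict[str, str], reference: dict[str, str]) -> float:
    """Score an extraction by the F1 of its exactly matching key-value pairs."""
    pred_items = set(predicted.items())
    ref_items = set(reference.items())
    hits = len(pred_items & ref_items)
    if hits == 0:
        return 0.0
    precision = hits / len(pred_items)  # penalizes hallucinated fields
    recall = hits / len(ref_items)      # penalizes missed fields
    return 2 * precision * recall / (precision + recall)


# Hypothetical report: one field right, one wrong value, one extra field.
prediction = {"drug": "aspirin", "dose": "10 mg", "route": "oral"}
reference = {"drug": "aspirin", "dose": "20 mg"}
print(f1_reward(prediction, reference))
```

Using F1 rather than accuracy alone means the model is not rewarded for emitting many speculative fields, nor for extracting only the easiest ones.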

8. Stream-Omni: Simultaneous Multimodal Interactions with Large Language-Vision-Speech Model

🔑 Keywords: Stream-Omni, large multimodal models, modality alignments, vision-grounded speech interaction, sequence-dimension concatenation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To efficiently integrate text, vision, and speech modalities through more purposeful modality alignments for flexible multimodal interaction.

🛠️ Research Methods:

– Utilization of Stream-Omni, leveraging sequence-dimension concatenation for vision-text alignment and CTC-based layer-dimension mapping for speech-text alignment.

💬 Research Conclusions:

– Stream-Omni achieves strong performance on visual understanding, speech interaction, and vision-grounded speech interaction tasks with less data, offering a comprehensive multimodal experience.

👉 Paper link: https://huggingface.co/papers/2506.13642
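
For readers unfamiliar with CTC, the core collapsing rule it relies on is short enough to sketch: merge consecutive repeated labels, then drop blanks. This is the standard CTC decoding rule, not Stream-Omni's layer-dimension mapping itself; the function name and token ids are illustrative.

```python
from itertools import groupby


def ctc_collapse(frame_labels: list[int], blank: int = 0) -> list[int]:
    """Collapse per-frame labels: merge consecutive repeats, then remove blanks."""
    return [label for label, _ in groupby(frame_labels) if label != blank]


# Eight speech frames map to three text tokens; the blank between the two 3s
# keeps them as separate tokens.
print(ctc_collapse([0, 3, 3, 0, 3, 5, 5, 0]))
```

This many-to-one mapping is what lets a long sequence of speech frames align with a much shorter text token sequence.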

9. Reasoning with Exploration: An Entropy Perspective

🔑 Keywords: Entropy, Reinforcement Learning, Exploratory Reasoning, Advantage Function, Language Models

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to enhance exploratory reasoning in language models by introducing an entropy-based term to the advantage function in reinforcement learning.

🛠️ Research Methods:

– Empirical analysis is conducted to reveal correlations between high-entropy regions and exploratory reasoning actions in language models. The standard RL setup is then minimally modified by adding an entropy-based term to the advantage function.

💬 Research Conclusions:

– The method significantly improves performance on complex reasoning tasks, achieving gains on the Pass@K metric, highlighting the potential for longer and deeper reasoning chains within language models.

👉 Paper link: https://huggingface.co/papers/2506.14758
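
One way to picture an entropy-based advantage term is sketched below. The specific form (a scaled per-token entropy bonus, clipped so it never exceeds a fraction of the advantage's magnitude) and the parameter names `alpha` and `kappa` are assumptions for illustration; the summary above only states that an entropy-based term is added to the advantage function.

```python
import math


def token_entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def shaped_advantage(advantage: float, entropy: float,
                     alpha: float = 0.4, kappa: float = 2.0) -> float:
    """Add a clipped entropy bonus to a per-token advantage.

    The clip keeps the bonus below |advantage| / kappa, so with kappa > 1 it
    can never flip the advantage's sign. In a real training loop the entropy
    would be treated as a constant (no gradient flows through it).
    """
    bonus = min(alpha * entropy, abs(advantage) / kappa)
    return advantage + bonus


uncertain = token_entropy([0.25, 0.25, 0.25, 0.25])  # high-entropy token
confident = token_entropy([0.97, 0.01, 0.01, 0.01])  # low-entropy token
print(shaped_advantage(1.0, uncertain), shaped_advantage(1.0, confident))
```

Under these assumptions, tokens where the policy is uncertain receive a larger boost than tokens it predicts confidently, which is one plausible reading of rewarding exploratory reasoning steps.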

10. Can LLMs Generate High-Quality Test Cases for Algorithm Problems? TestCase-Eval: A Systematic Evaluation of Fault Coverage and Exposure

🔑 Keywords: TestCase-Eval, LLMs, Fault Coverage, Fault Exposure, algorithm problems

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce TestCase-Eval for systematic evaluation of LLMs in generating comprehensive and targeted test cases for algorithm problems.

🛠️ Research Methods:

– The benchmark includes 500 algorithm problems and 100,000 human-crafted solutions from the Codeforces platform, focusing on evaluating Fault Coverage and Fault Exposure.

💬 Research Conclusions:

– Provides a comprehensive assessment of 19 state-of-the-art open-source and proprietary LLMs, offering insights into their strengths and limitations in generating effective test cases.

👉 Paper link: https://huggingface.co/papers/2506.12278

11. QFFT, Question-Free Fine-Tuning for Adaptive Reasoning

🔑 Keywords: Question-Free Fine-Tuning, Long Chain-of-Thought, Fine-Tuning, Supervised Fine-Tuning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to make reasoning models more efficient and adaptive by introducing Question-Free Fine-Tuning (QFFT), which lets a model combine Short and Long Chain-of-Thought reasoning patterns.

🛠️ Research Methods:

– The authors propose a fine-tuning method that omits the input questions during training and learns only from Long Chain-of-Thought responses, so the model can adaptively use both reasoning patterns.

💬 Research Conclusions:

– Experiments indicate that QFFT decreases response length by over 50% while maintaining similar performance levels to Supervised Fine-Tuning and performs better in noisy, out-of-domain, and low-resource scenarios.

👉 Paper link: https://huggingface.co/papers/2506.12860

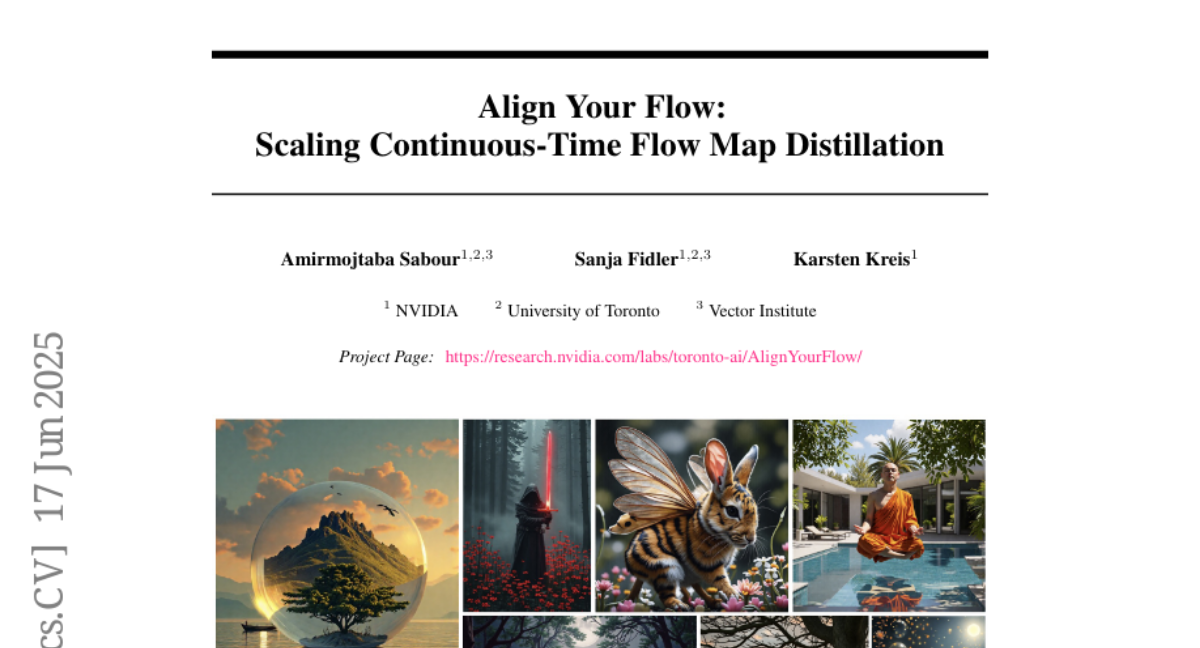

12. Align Your Flow: Scaling Continuous-Time Flow Map Distillation

🔑 Keywords: Flow maps, Consistency models, Autoguidance, Adversarial finetuning

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to introduce new continuous-time objectives and training techniques for flow maps to achieve state-of-the-art performance in few-step image and text-to-image generation.

🛠️ Research Methods:

– The researchers propose flow maps that generalize existing models by connecting any two noise levels in a single step, along with new training techniques and objectives. They use autoguidance for performance improvement and adversarial finetuning for enhancing model capabilities.

💬 Research Conclusions:

– The Align Your Flow models demonstrate state-of-the-art performance on image generation benchmarks with small, efficient neural networks, and outperform existing non-adversarially trained models in text-conditioned synthesis.

👉 Paper link: https://huggingface.co/papers/2506.14603

13. Guaranteed Guess: A Language Modeling Approach for CISC-to-RISC Transpilation with Testing Guarantees

🔑 Keywords: ISA-centric transpilation, large language models, software testing, CISC-to-RISC translation

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The objective was to develop an ISA-centric transpilation pipeline that effectively translates low-level programs between complex and reduced hardware architectures to improve code portability and longevity.

🛠️ Research Methods:

– This research utilized a novel pipeline called GG, which combines pre-trained large language models with rigorous software testing constructs to produce candidate translations between instruction set architectures.

💬 Research Conclusions:

– The GG approach demonstrated high functional and semantic correctness in translations with 99% accuracy on HumanEval and 49% on BringupBench programs. Additionally, it outperformed the Rosetta 2 framework, with faster runtime, better energy efficiency, and improved memory usage, proving its effectiveness in real-world applications.

👉 Paper link: https://huggingface.co/papers/2506.14606

14. V-JEPA 2: Self-Supervised Video Models Enable Understanding, Prediction and Planning

🔑 Keywords: self-supervised learning, motion understanding, human action anticipation, video question-answering, robotic planning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop a model capable of understanding, predicting, and planning in the physical world using self-supervised learning from internet video data and minimal robot interaction.

🛠️ Research Methods:

– Utilized an action-free joint-embedding-predictive architecture (V-JEPA 2) pre-trained on a vast video and image dataset, and aligned it with a large language model to enhance video question-answering capabilities.

💬 Research Conclusions:

– Showcased state-of-the-art performance in motion understanding, human action anticipation, and multiple video question-answering tasks. Demonstrated effective robotic planning by deploying the model zero-shot on robotic arms without task-specific training.

👉 Paper link: https://huggingface.co/papers/2506.09985

15. CRITICTOOL: Evaluating Self-Critique Capabilities of Large Language Models in Tool-Calling Error Scenarios

🔑 Keywords: CRITICTOOL, large language models, tool learning, function-calling process, tool reflection ability

💡 Category: Natural Language Processing

🌟 Research Objective:

– Evaluate and enhance the robustness of large language models in handling errors during tool usage.

🛠️ Research Methods:

– Introduced CRITICTOOL, a comprehensive critique evaluation benchmark, based on a novel evolutionary strategy for dataset construction.

💬 Research Conclusions:

– Validated the generalization and effectiveness of the benchmark strategy through extensive experiments, providing new insights into tool learning in large language models.

👉 Paper link: https://huggingface.co/papers/2506.13977

16. EfficientVLA: Training-Free Acceleration and Compression for Vision-Language-Action Models

🔑 Keywords: Vision-Language-Action models, inference acceleration, pruning, visual tokens, caching

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To accelerate Vision-Language-Action models by addressing computational and memory bottlenecks.

🛠️ Research Methods:

– Implementing EfficientVLA framework by pruning language layers, optimizing visual token selection, and caching intermediate features in diffusion-based action head.

💬 Research Conclusions:

– Achieved a 1.93x inference speedup and reduced FLOPs to 28.9% with minimal impact on success rate (0.6% drop) in the SIMPLER benchmark.

👉 Paper link: https://huggingface.co/papers/2506.10100

17. xbench: Tracking Agents Productivity Scaling with Profession-Aligned Real-World Evaluations

🔑 Keywords: AI agent capabilities, real-world productivity, Technology-Market Fit

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To create a profession-aligned evaluation suite (xbench) that bridges the gap between AI agent capabilities and their economic value in professional settings.

🛠️ Research Methods:

– Evaluation tasks are defined by industry professionals focusing on commercially significant domains, such as Recruitment and Marketing.

💬 Research Conclusions:

– xbench provides metrics that correlate with productivity value and offer initial benchmarks for evaluating AI agents’ capabilities in real-world professional scenarios.

👉 Paper link: https://huggingface.co/papers/2506.13651

18. VideoMolmo: Spatio-Temporal Grounding Meets Pointing

🔑 Keywords: VideoMolmo, spatio-temporal localization, temporal attention mechanism, SAM2

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance spatio-temporal pointing accuracy and reasoning capabilities in real-world scenarios through a multimodal model, VideoMolmo, which incorporates advanced attention mechanisms and mask fusion.

🛠️ Research Methods:

– VideoMolmo’s architecture utilizes a temporal attention mechanism and a novel temporal mask fusion pipeline with SAM2 for bidirectional point propagation. It further simplifies the task for large language models by using a two-step decomposition process.

💬 Research Conclusions:

– VideoMolmo significantly improves spatio-temporal pointing accuracy and reasoning capabilities compared to existing models, evaluated on a curated dataset and a new benchmark, VPoS-Bench, spanning various real-world scenarios.

👉 Paper link: https://huggingface.co/papers/2506.05336

19. Ambient Diffusion Omni: Training Good Models with Bad Data

🔑 Keywords: Ambient Diffusion Omni, diffusion models, ImageNet FID, noise damping

💡 Category: Generative Models

🌟 Research Objective:

– Enhance diffusion models using low-quality synthetic images and exploit natural image properties to improve ImageNet FID and text-to-image quality.

🛠️ Research Methods:

– Develop the Ambient Diffusion Omni framework leveraging spectral power law decay and locality; validate using synthetically corrupted images.

💬 Research Conclusions:

– The resulting diffusion models achieve state-of-the-art ImageNet FID, with significant improvements in image quality and diversity for generative modeling.

👉 Paper link: https://huggingface.co/papers/2506.10038

20. Taming Polysemanticity in LLMs: Provable Feature Recovery via Sparse Autoencoders

🔑 Keywords: Sparse Autoencoders, feature recovery, statistical framework, bias adaptation, theoretical recovery guarantees

💡 Category: Foundations of AI

🌟 Research Objective:

– To enhance Sparse Autoencoders for effectively recovering monosemantic features in Large Language Models with theoretical guarantees.

🛠️ Research Methods:

– Development of a novel statistical framework introducing feature identifiability by modeling polysemantic features as sparse mixtures of monosemantic concepts.

– Introduction of a new SAE training algorithm using bias adaptation to ensure appropriate activation sparsity.

💬 Research Conclusions:

– The proposed algorithm theoretically recovers all monosemantic features under the new statistical model, and the empirical variant Group Bias Adaptation demonstrates superior performance on benchmark tests.

👉 Paper link: https://huggingface.co/papers/2506.14002

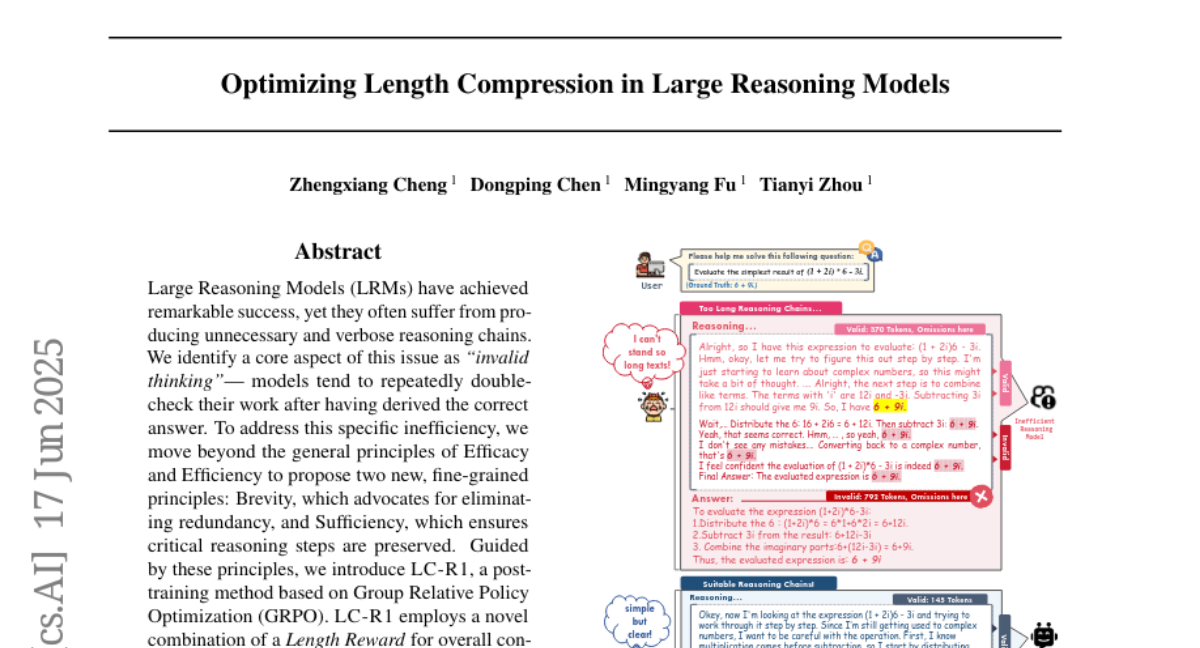

21. Optimizing Length Compression in Large Reasoning Models

🔑 Keywords: Large Reasoning Models, Brevity, Sufficiency, LC-R1, Post-training Method

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The objective is to reduce unnecessary reasoning in Large Reasoning Models with minimal accuracy loss by using principles like Brevity and Sufficiency.

🛠️ Research Methods:

– Introduction of a post-training method called LC-R1 based on Group Relative Policy Optimization, utilizing a Length Reward for conciseness and a Compress Reward to remove invalid thinking.

💬 Research Conclusions:

– LC-R1 reduces reasoning sequence length by approximately 50% with an accuracy drop of only about 2%, demonstrating its robustness and efficiency.

👉 Paper link: https://huggingface.co/papers/2506.14755

22. Router-R1: Teaching LLMs Multi-Round Routing and Aggregation via Reinforcement Learning

🔑 Keywords: Reinforcement Learning, multi-LLM routing, generalization, cost management

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To improve multi-LLM routing and optimize performance-cost trade-offs through a reinforcement learning-based framework.

🛠️ Research Methods:

– Development of Router-R1, a framework that treats multi-LLM routing as a sequential decision process using “think” and “route” actions.

– Utilization of a rule-based reward system to guide learning focusing on format rewards, final outcome rewards, and cost rewards.

💬 Research Conclusions:

– Router-R1 demonstrates superior performance over strong baselines, effectively balancing performance and cost, and generalizing well to unseen models.

👉 Paper link: https://huggingface.co/papers/2506.09033
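
The three reward components named above (format, final outcome, cost) could be combined along the following lines. Everything concrete here is hypothetical: the penalty of -1.0 for malformed output, the linear cost term, the `cost_weight` default, and the function name are illustrative choices, not values from the paper.

```python
def routing_reward(format_ok: bool, answer_correct: bool,
                   call_cost: float, cost_weight: float = 0.1) -> float:
    """Combine format, outcome, and cost signals into one scalar reward."""
    if not format_ok:
        # Malformed "think"/"route" output: penalize and ignore the rest.
        return -1.0
    outcome = 1.0 if answer_correct else 0.0
    # Charge for the models that were called, so cheaper routes score higher.
    return outcome - cost_weight * call_cost


# Two correct trajectories: one routed to a cheap model, one to an expensive one.
print(routing_reward(True, True, call_cost=0.5))
print(routing_reward(True, True, call_cost=4.0))
```

Raising `cost_weight` in this sketch shifts the trade-off toward cheaper routes, which mirrors the performance-cost balance the summary describes.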

23. Mixture-of-Experts Meets In-Context Reinforcement Learning

🔑 Keywords: In-context reinforcement learning, mixture-of-experts, transformer-based decision models, multi-modality, task diversity

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance in-context reinforcement learning (ICRL) by addressing multi-modality and task diversity challenges using the T2MIR framework.

🛠️ Research Methods:

– Developed T2MIR, incorporating Token- and Task-wise MoE into transformer-based decision models.

– Introduced a contrastive learning method to improve task-wise routing using mutual information maximization.

💬 Research Conclusions:

– T2MIR significantly improves in-context learning capacity and outperforms various baselines, offering scalable architectural enhancements for ICRL.

👉 Paper link: https://huggingface.co/papers/2506.05426

24. CAMS: A CityGPT-Powered Agentic Framework for Urban Human Mobility Simulation

🔑 Keywords: Human mobility simulation, Large language models, Urban spaces, Agentic framework, CityGPT

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance human mobility simulation by integrating an agentic framework with urban-knowledgeable large language models, addressing limitations of traditional data-driven approaches.

🛠️ Research Methods:

– The proposed CAMS framework comprises three modules: MobExtractor for mobility patterns, GeoGenerator for geospatial knowledge using CityGPT, and TrajEnhancer for trajectory generation with real preference alignment.

💬 Research Conclusions:

– CAMS demonstrates superior performance in realistic trajectory generation without external geospatial information and establishes a new paradigm in human mobility simulation by effectively modeling individual and collective mobility patterns.

👉 Paper link: https://huggingface.co/papers/2506.13599

25. Treasure Hunt: Real-time Targeting of the Long Tail using Training-Time Markers

🔑 Keywords: long-tail, prompt engineering, training protocols, generation attributes

💡 Category: Machine Learning

🌟 Research Objective:

– The research aims to optimize training protocols to enhance the performance and controllability of models on underrepresented use cases during inference.

🛠️ Research Methods:

– A detailed taxonomy of data characteristics and task provenance is developed to control and condition generation attributes during inference. A base model is fine-tuned to automatically infer these markers.

💬 Research Conclusions:

– The proposed approach significantly improves model performance, achieving up to a 9.1% gain in underrepresented domains and up to 14.1% improvements in specific tasks like CodeRepair. It also yields absolute improvements of 35.3% in certain evaluations.

👉 Paper link: https://huggingface.co/papers/2506.14702

26. Ring-lite: Scalable Reasoning via C3PO-Stabilized Reinforcement Learning for LLMs

🔑 Keywords: MoE architecture, reinforcement learning, optimization instability, entropy loss, multi-domain data integration

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper presents Ring-lite, a MoE-based large language model optimized via reinforcement learning to achieve efficient and robust reasoning capabilities.

🛠️ Research Methods:

– Introduces a joint training pipeline integrating distillation with RL.

– Proposes Constrained Contextual Computation Policy Optimization (C3PO) to enhance training stability and improve computational throughput.

– Uses entropy loss for selecting distillation checkpoints in RL training to improve performance-efficiency trade-offs.

💬 Research Conclusions:

– Matches the performance of state-of-the-art reasoning models while activating fewer parameters.

– Addresses optimization instability challenges in MoE RL training.

– Successfully integrates multi-domain data to address domain conflicts in mixed datasets.

👉 Paper link: https://huggingface.co/papers/2506.14731

27. From Bytes to Ideas: Language Modeling with Autoregressive U-Nets

🔑 Keywords: autoregressive U-Net, multi-scale view, character-level tasks, low-resource languages, semantic patterns

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research introduces an autoregressive U-Net that embeds its own tokens to provide a multi-scale view of text sequences and improve handling of character-level tasks and low-resource languages.

🛠️ Research Methods:

– The model processes raw bytes and pools them into increasingly larger word groups, enabling predictions based on broader semantic patterns at deeper stages.

💬 Research Conclusions:

– By embedding tokenization within the model, the approach matches strong Byte Pair Encoding baselines with shallow hierarchies, and deeper hierarchies show a promising trend.

– The approach allows the system to efficiently manage character-level tasks and knowledge transfer across low-resource languages.

👉 Paper link: https://huggingface.co/papers/2506.14761

28. Universal Jailbreak Suffixes Are Strong Attention Hijackers

🔑 Keywords: Suffix-based jailbreaks, Large language models, Adversarial suffixes, Safety alignment

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate suffix-based jailbreaks that exploit adversarial suffixes to attack large language models, focusing on optimizing these suffixes to bypass safety alignment.

🛠️ Research Methods:

– Analysis of the GCG attack’s effectiveness, identifying the key mechanism related to information flow from adversarial suffixes, and quantifying its impact on hijacking the contextualization process.

💬 Research Conclusions:

– The universality of GCG suffixes is tied to attack efficacy; it can be enhanced or mitigated efficiently, at no additional computational cost. Code and data are released for further research.

👉 Paper link: https://huggingface.co/papers/2506.12880

29. TR2M: Transferring Monocular Relative Depth to Metric Depth with Language Descriptions and Scale-Oriented Contrast

🔑 Keywords: Multimodal inputs, Metric depth, Relative depth, Cross-modality attention, Contrastive learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop a framework (TR2M) to transfer relative depth estimation to metric depth with reduced scale uncertainty.

🛠️ Research Methods:

– Utilize both text descriptions and images as inputs with a cross-modality attention module and contrastive learning to enhance metric depth estimation.

💬 Research Conclusions:

– TR2M demonstrates superior performance in converting relative depth to metric depth across various datasets, showing robust zero-shot capabilities.

👉 Paper link: https://huggingface.co/papers/2506.13387

30. Alignment Quality Index (AQI) : Beyond Refusals: AQI as an Intrinsic Alignment Diagnostic via Latent Geometry, Cluster Divergence, and Layer wise Pooled Representations

🔑 Keywords: Alignment Quality Index (AQI), large language models (LLMs), latent space, alignment faking, LITMUS dataset

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper introduces the Alignment Quality Index (AQI) as a novel metric to assess the alignment of large language models by analyzing latent space activations.

🛠️ Research Methods:

– AQI employs geometric and prompt-invariant measures like the Davies-Bouldin Score, Dunn Index, Xie-Beni Index, and Calinski-Harabasz Index to capture clustering quality and detect misalignments or jailbreak risks.

– Empirical tests are conducted using the LITMUS dataset across models trained under different conditions like DPO, GRPO, and RLHF.

💬 Research Conclusions:

– The study demonstrates AQI’s effectiveness in identifying hidden vulnerabilities that are not captured by traditional refusal metrics, thereby offering robust auditing for AI safety.

– The public release of the implementation aims to encourage further research in enhancing LLM alignment evaluation.

👉 Paper link: https://huggingface.co/papers/2506.13901
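
To show what a cluster-quality score over latent activations looks like, here is a minimal sketch of one of the indices named above, the Dunn Index, in its simplest variant (smallest distance between points of different clusters divided by the largest within-cluster diameter). The two-dimensional points and the "safe"/"unsafe" labels are made up for illustration; how AQI pools layers and combines indices is not covered here.

```python
from itertools import combinations
from math import dist

Point = tuple[float, ...]


def dunn_index(clusters: list[list[Point]]) -> float:
    """Ratio of minimum between-cluster distance to maximum cluster diameter.

    Needs at least two clusters, and at least one cluster with two distinct
    points. Higher values mean tighter, better separated clusters.
    """
    min_between = min(
        dist(a, b)
        for first, second in combinations(clusters, 2)
        for a in first
        for b in second
    )
    max_diameter = max(
        dist(a, b) for cluster in clusters for a, b in combinations(cluster, 2)
    )
    return min_between / max_diameter


# Hypothetical activations for safe and unsafe completions.
safe = [(0.0, 0.0), (0.0, 1.0)]
unsafe = [(5.0, 0.0), (5.0, 1.0)]
print(dunn_index([safe, unsafe]))
```

A high score here would indicate that the two kinds of completions occupy well separated regions of the latent space, while a low score would signal overlap.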

31. Graph Counselor: Adaptive Graph Exploration via Multi-Agent Synergy to Enhance LLM Reasoning

🔑 Keywords: Large Language Models, multi-agent collaboration, adaptive reasoning, GraphRAG, semantic consistency

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to enhance Large Language Models by improving factual accuracy and generation quality in specialized domains through innovative knowledge integration techniques.

🛠️ Research Methods:

– The Graph Counselor method is developed, featuring multi-agent collaboration and the Adaptive Graph Information Extraction Module (AGIEM) to address inefficiencies in existing GraphRAG methods.

– Utilizes the Self-Reflection with Multiple Perspectives (SR) module to improve reasoning accuracy and semantic consistency through self-reflection and backward reasoning mechanisms.

💬 Research Conclusions:

– Graph Counselor demonstrates superior performance over existing methods in graph reasoning tasks, showcasing enhanced reasoning accuracy and generalization ability.

👉 Paper link: https://huggingface.co/papers/2506.03939

32. EMLoC: Emulator-based Memory-efficient Fine-tuning with LoRA Correction

🔑 Keywords: EMLoC, fine-tuning, LoRA, Emulator-based Memory-efficient

💡 Category: Machine Learning

🌟 Research Objective:

– Introduce EMLoC, a memory-efficient fine-tuning framework allowing large model adaptation within inference memory constraints.

🛠️ Research Methods:

– Utilize activation-aware SVD and LoRA within a lightweight emulator, compensating for misalignment between the original model and emulator with a novel algorithm.

💬 Research Conclusions:

– EMLoC outperforms other methods on multiple datasets, enabling practical fine-tuning of a 38B model on a single 24GB GPU without quantization.

👉 Paper link: https://huggingface.co/papers/2506.12015

33. DynaGuide: Steering Diffusion Polices with Active Dynamic Guidance

🔑 Keywords: DynaGuide, diffusion policies, external dynamics model, goal-conditioning, robustness

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop DynaGuide, a steering method that improves diffusion policies with adaptability to multiple objectives and robustness.

🛠️ Research Methods:

– Use an external dynamics model during the diffusion denoising process to separate the dynamics model from the base policy.

– Conduct simulated and real experiments, including articulated CALVIN tasks, to compare DynaGuide with other steering approaches.

💬 Research Conclusions:

– DynaGuide outperforms goal-conditioning, especially with low-quality objectives, achieving a 70% steering success rate and surpassing traditional methods by 5.4x.

– Successfully implements novel behaviors in off-the-shelf real robot policies by steering preferences towards specific objects.

👉 Paper link: https://huggingface.co/papers/2506.13922

34. VisText-Mosquito: A Multimodal Dataset and Benchmark for AI-Based Mosquito Breeding Site Detection and Reasoning

🔑 Keywords: Multimodal dataset, Object detection, Segmentation, YOLOv9s, AI-based detection

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces VisText-Mosquito, a multimodal dataset to support automated detection and analysis of mosquito breeding sites.

🛠️ Research Methods:

– Utilizes models including YOLOv9s and YOLOv11n-Seg for detection and segmentation tasks, and a fine-tuned BLIP model for reasoning generation.

💬 Research Conclusions:

– YOLOv9s achieved high precision and mAP@50 for object detection, while YOLOv11n-Seg showed strong segmentation performance. The BLIP model demonstrated effective reasoning text generation. Data and code are available on GitHub.

👉 Paper link: https://huggingface.co/papers/2506.14629

35.