AI Native Daily Paper Digest – 20250703

1. Kwai Keye-VL Technical Report

🔑 Keywords: Multimodal Large Language Models, short-video understanding, vision-language alignment

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the comprehension of dynamic, information-dense short-form videos by developing the Kwai Keye-VL model with robust vision-language capabilities.

🛠️ Research Methods:

– Utilization of a large dataset exceeding 600 billion tokens with a focus on video, innovative training and post-training recipes, and a reinforcement learning phase to refine reasoning capabilities and model behavior.

💬 Research Conclusions:

– Keye-VL achieves state-of-the-art results on public video benchmarks, surpasses general image-based tasks, and shows significant advantages on the new KC-MMBench tailored for short-video scenarios.

👉 Paper link: https://huggingface.co/papers/2507.01949

2. LongAnimation: Long Animation Generation with Dynamic Global-Local Memory

🔑 Keywords: Animation colorization, Video generation model, Long-term color consistency

💡 Category: Generative Models

🌟 Research Objective:

– To achieve ideal long-term color consistency in animation through a dynamic global-local paradigm.

🛠️ Research Methods:

– Proposed LongAnimation, featuring SketchDiT, Dynamic Global-Local Memory, and Color Consistency Reward.

💬 Research Conclusions:

– LongAnimation is effective in maintaining both short-term and long-term color consistency in open-domain animation colorization tasks.

👉 Paper link: https://huggingface.co/papers/2507.01945

3. Depth Anything at Any Condition

🔑 Keywords: Depth Estimation, Monocular Depth Estimation, Adverse Weather, Zero-shot Capabilities, Unsupervised Learning

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce DepthAnything-AC, a monocular depth estimation model capable of effective performance in various complex environmental conditions.

🛠️ Research Methods:

– Utilize unsupervised consistency regularization finetuning with minimal unlabeled data.

– Introduce a Spatial Distance Constraint to improve semantic boundaries and detail accuracy.

💬 Research Conclusions:

– The model demonstrates zero-shot capabilities across diverse benchmarks, effectively handling real-world adverse weather and synthetic corruption.

👉 Paper link: https://huggingface.co/papers/2507.01634

4. A Survey on Vision-Language-Action Models: An Action Tokenization Perspective

🔑 Keywords: Vision-Language-Action (VLA), action tokens, multimodal understanding, AI Native

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The survey aims to categorize and interpret existing VLA research through the lens of action tokenization, and provide insights for future directions and improvements.

🛠️ Research Methods:

– The research involves a systematic review and analysis of how VLA models utilize action tokens, categorizing them into different types including language description, code, affordance, and more.

💬 Research Conclusions:

– The study distills the strengths and limitations of each type of action token and highlights underexplored yet promising directions for VLA models, contributing guidance for future research towards general-purpose intelligence.

👉 Paper link: https://huggingface.co/papers/2507.01925

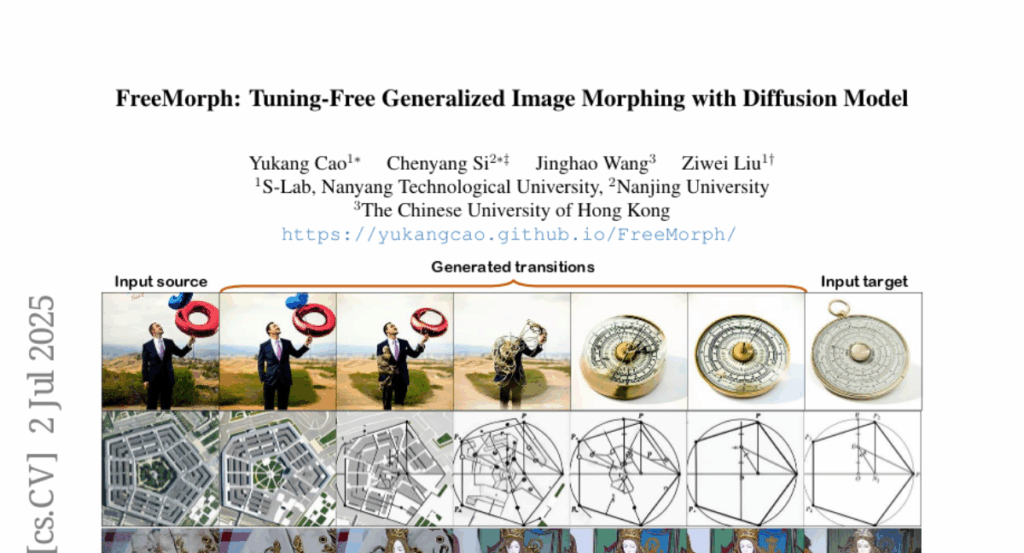

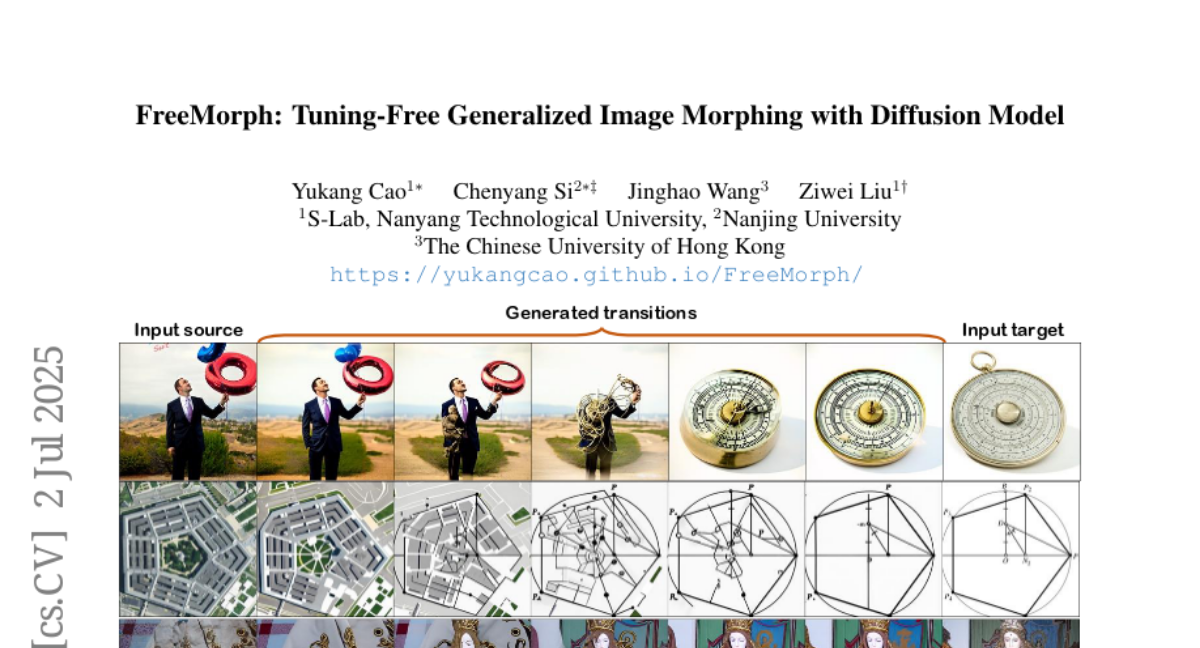

5. FreeMorph: Tuning-Free Generalized Image Morphing with Diffusion Model

🔑 Keywords: FreeMorph, image morphing, tuning-free, self-attention, diffusion models

💡 Category: Generative Models

🌟 Research Objective:

– Introduce FreeMorph, a tuning-free method aimed at improving image morphing for inputs with various semantics or layouts without per-instance training.

🛠️ Research Methods:

– Developed a guidance-aware spherical interpolation design to incorporate explicit guidance and address identity loss through modified self-attention modules.

– Introduced a step-oriented variation trend to ensure controlled and consistent transitions across input images.

💬 Research Conclusions:

– FreeMorph is significantly more efficient, being 10x ~ 50x faster than existing methods, and establishes a new state-of-the-art for image morphing.

👉 Paper link: https://huggingface.co/papers/2507.01953

6. Locality-aware Parallel Decoding for Efficient Autoregressive Image Generation

🔑 Keywords: Locality-aware Parallel Decoding, autoregressive image generation, Flexible Parallelized Autoregressive Modeling, Locality-aware Generation Ordering, ImageNet

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to accelerate autoregressive image generation processes while maintaining quality.

🛠️ Research Methods:

– The paper introduces two key techniques: Flexible Parallelized Autoregressive Modeling, which allows for arbitrary generation ordering and degrees of parallelization, and Locality-aware Generation Ordering, which minimizes intra-group dependencies and maximizes contextual support.

💬 Research Conclusions:

– The techniques significantly reduce generation steps and latency compared to previous models without compromising the generation quality on the ImageNet benchmark.

👉 Paper link: https://huggingface.co/papers/2507.01957

7. μ^2Tokenizer: Differentiable Multi-Scale Multi-Modal Tokenizer for Radiology Report Generation

🔑 Keywords: Automated radiology report generation, RRG, mu^2LLM, AI in Healthcare

💡 Category: AI in Healthcare

🌟 Research Objective:

– The paper aims to improve the accuracy and efficiency of radiology report generation from clinical imaging by addressing inherent complexities and evaluation challenges.

🛠️ Research Methods:

– Introduction of mu^2LLM, a multiscale multimodal large language model incorporating a mu^2Tokenizer, integrating multi-modal features for enhanced report generation using direct preference optimization guided by GREEN-RedLlama.

💬 Research Conclusions:

– The experimental results demonstrate that the proposed method outperforms existing approaches in generating reports from CT image datasets, showing high potential even with limited data.

👉 Paper link: https://huggingface.co/papers/2507.00316

8. MARVIS: Modality Adaptive Reasoning over VISualizations

🔑 Keywords: Foundation Models, Vision-Language Models, Modality Adaptive Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance the versatility of machine learning models by enabling small vision-language models to accurately predict across various data modalities without specialized training.

🛠️ Research Methods:

– Introduction of MARVIS, a training-free method transforming latent embedding spaces into visual representations to leverage spatial and fine-grained reasoning capabilities of vision-language models.

💬 Research Conclusions:

– MARVIS achieves competitive performance on vision, audio, biological, and tabular domains with a single 3B parameter model, outperforming Gemini by 16% on average, without requiring domain-specific training or exposing personally identifiable information.

👉 Paper link: https://huggingface.co/papers/2507.01544

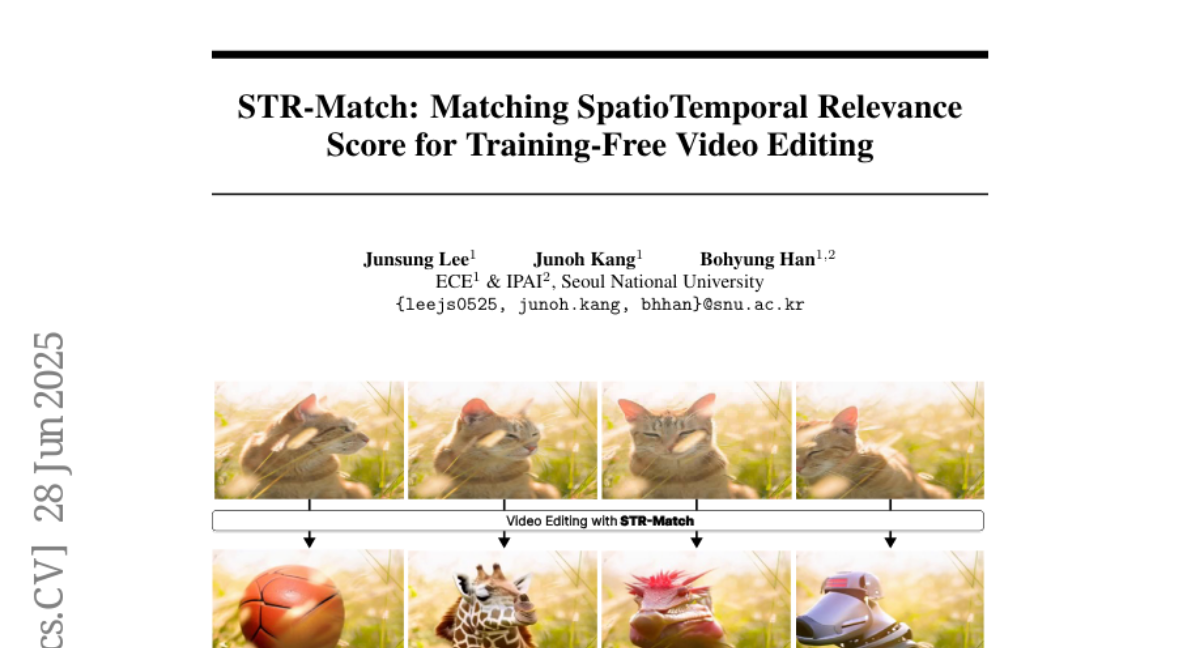

9. STR-Match: Matching SpatioTemporal Relevance Score for Training-Free Video Editing

🔑 Keywords: STR-Match, T2V diffusion models, latent optimization, spatial attention, temporal attention

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to develop STR-Match, a training-free video editing algorithm that enhances video editing by ensuring spatiotemporal coherence and visual appeal through novel STR score.

🛠️ Research Methods:

– The method utilizes latent optimization guided by a novel STR score, employing 2D spatial and 1D temporal attention within T2V diffusion models to capture spatiotemporal pixel relevance without relying on costly 3D attention mechanisms.

💬 Research Conclusions:

– STR-Match significantly outperforms existing video editing methods in visual quality and spatiotemporal consistency, maintaining performance even during significant domain transformations and preserving key visual attributes.

👉 Paper link: https://huggingface.co/papers/2506.22868

10. JAM-Flow: Joint Audio-Motion Synthesis with Flow Matching

🔑 Keywords: JAM-Flow, Multi-Modal Diffusion Transformer, facial motion, speech, generative modeling

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective of this research is to introduce JAM-Flow, a unified framework that simultaneously synthesizes and conditions facial motion and speech.

🛠️ Research Methods:

– Utilization of flow matching and a novel Multi-Modal Diffusion Transformer (MM-DiT) architecture, integrating specialized Motion-DiT and Audio-DiT modules, with selective joint attention layers and temporally aligned positional embeddings.

💬 Research Conclusions:

– JAM-Flow advances multi-modal generative modeling by offering a practical solution for holistic audio-visual synthesis, capable of handling tasks like synchronized talking head generation and audio-driven animation within a single model.

👉 Paper link: https://huggingface.co/papers/2506.23552

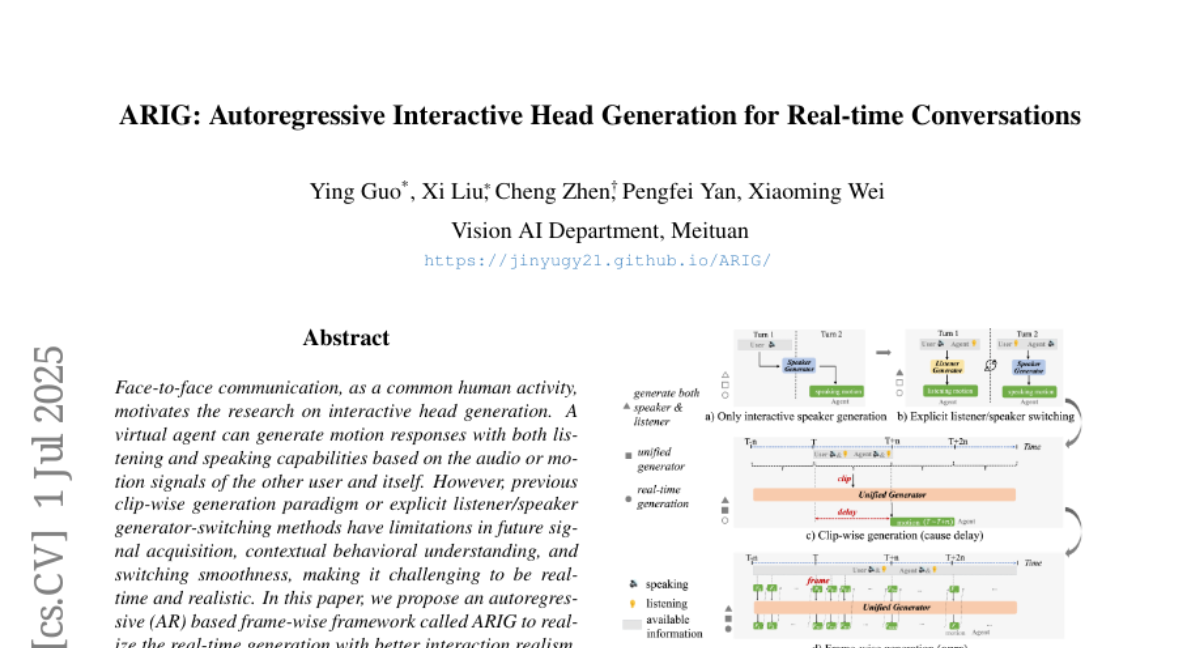

11. ARIG: Autoregressive Interactive Head Generation for Real-time Conversations

🔑 Keywords: Interactive head generation, Autoregressive framework, Real-time generation, Interactive behavior understanding, Conversational state understanding

💡 Category: Human-AI Interaction

🌟 Research Objective:

– To develop a real-time, realistic interactive head generation system using an autoregressive frame-wise framework called ARIG.

🛠️ Research Methods:

– Utilized a non-vector-quantized autoregressive process for motion prediction in continuous space.

– Emphasized interactive behavior and conversational state understanding using dual-track dual-modal signals.

💬 Research Conclusions:

– The proposed ARIG framework effectively enhances real-time generation and interaction realism, as validated by extensive experiments.

👉 Paper link: https://huggingface.co/papers/2507.00472

12.