AI Native Daily Paper Digest – 20250704

1. WebSailor: Navigating Super-human Reasoning for Web Agent

🔑 Keywords: WebSailor, LLM, proprietary agents, reasoning capabilities, complex information-seeking tasks

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance LLMs by improving their reasoning capabilities in complex information-seeking tasks to match proprietary agents.

🛠️ Research Methods:

– Utilization of structured sampling, information obfuscation, and an efficient RL algorithm known as Duplicating Sampling Policy Optimization (DUPO).

💬 Research Conclusions:

– WebSailor significantly outperforms all open-source agents in complex information-seeking tasks, effectively matching the performance of proprietary agents.

👉 Paper link: https://huggingface.co/papers/2507.02592

2. LangScene-X: Reconstruct Generalizable 3D Language-Embedded Scenes with TriMap Video Diffusion

🔑 Keywords: LangScene-X, 3D scene reconstruction, TriMap video diffusion model, Language Quantized Compressor

💡 Category: Generative Models

🌟 Research Objective:

– Introduce LangScene-X to generate 3D consistent information from sparse views for scene reconstruction and understanding.

🛠️ Research Methods:

– Utilize TriMap video diffusion model for generating appearance, geometry, and semantics.

– Employ Language Quantized Compressor for encoding language embeddings, enabling cross-scene generalization.

💬 Research Conclusions:

– LangScene-X demonstrates superior quality and generalizability over state-of-the-art methods in experiments with real-world data.

👉 Paper link: https://huggingface.co/papers/2507.02813

3. Heeding the Inner Voice: Aligning ControlNet Training via Intermediate Features Feedback

🔑 Keywords: InnerControl, text-to-image diffusion models, ControlNet, alignment loss, spatial consistency

💡 Category: Generative Models

🌟 Research Objective:

– To enhance spatial control and improve generation quality in text-to-image diffusion models by enforcing spatial consistency across all diffusion steps.

🛠️ Research Methods:

– The implementation of InnerControl uses lightweight convolutional probes to reconstruct input control signals from intermediate UNet features at each denoising step.

💬 Research Conclusions:

– InnerControl minimizes discrepancies throughout the entire diffusion process, improving control fidelity and generation quality, achieving state-of-the-art performance.

👉 Paper link: https://huggingface.co/papers/2507.02321

4. Skywork-Reward-V2: Scaling Preference Data Curation via Human-AI Synergy

🔑 Keywords: AI Native, Reinforcement Learning, Human-AI synergy, Preference Datasets

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The objective was to improve the quality and performance of open reward models in reinforcement learning from human feedback by addressing limitations in current preference datasets and utilizing a large-scale dataset, SynPref-40M.

🛠️ Research Methods:

– A synergistic human-AI curation pipeline was established, combining human annotation quality with AI scalability, and training involved a suite of models called Skywork-Reward-V2 on a subset of 26 million preference pairs.

💬 Research Conclusions:

– The Skywork-Reward-V2 models demonstrated state-of-the-art performance across seven major benchmarks, showing versatility, alignment with human preferences, and highlighting the significant potential of human-AI curation synergy in enhancing data quality.

👉 Paper link: https://huggingface.co/papers/2507.01352

5. IntFold: A Controllable Foundation Model for General and Specialized Biomolecular Structure Prediction

🔑 Keywords: IntFold, biomolecular structure prediction, attention kernel, AlphaFold3, confidence head

💡 Category: Foundations of AI

🌟 Research Objective:

– Introduce IntFold, a controllable foundation model for general and specialized biomolecular structure prediction.

🛠️ Research Methods:

– Utilize a customized attention kernel and adapters for predictive tasks.

– Implement a novel confidence head for docking quality assessment.

💬 Research Conclusions:

– IntFold achieves predictive accuracy on par with or exceeding state-of-the-art models like AlphaFold3.

– Capable of advanced predictions, including allosteric states, constrained structures, and binding affinity assessment.

– Provides nuanced docking quality assessments, especially for complex targets like antibody-antigen complexes.

👉 Paper link: https://huggingface.co/papers/2507.02025

6. Thinking with Images for Multimodal Reasoning: Foundations, Methods, and Future Frontiers

🔑 Keywords: Multimodal reasoning, Chain-of-Thought, dynamic mental sketchpad, AI Native, cognitive workspace

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to map the evolution of multimodal reasoning models towards a more integrated use of visual information, transitioning from static to dynamic roles in cognitive processes.

🛠️ Research Methods:

– The research provides a comprehensive review of foundational principles, outlines a three-stage framework of multimodal reasoning, and analyzes evaluation benchmarks and applications.

💬 Research Conclusions:

– The paper highlights a paradigm shift in AI from static image interaction to dynamic cognitive workspaces and identifies challenges and future directions in achieving more powerful and human-aligned multimodal AI.

👉 Paper link: https://huggingface.co/papers/2506.23918

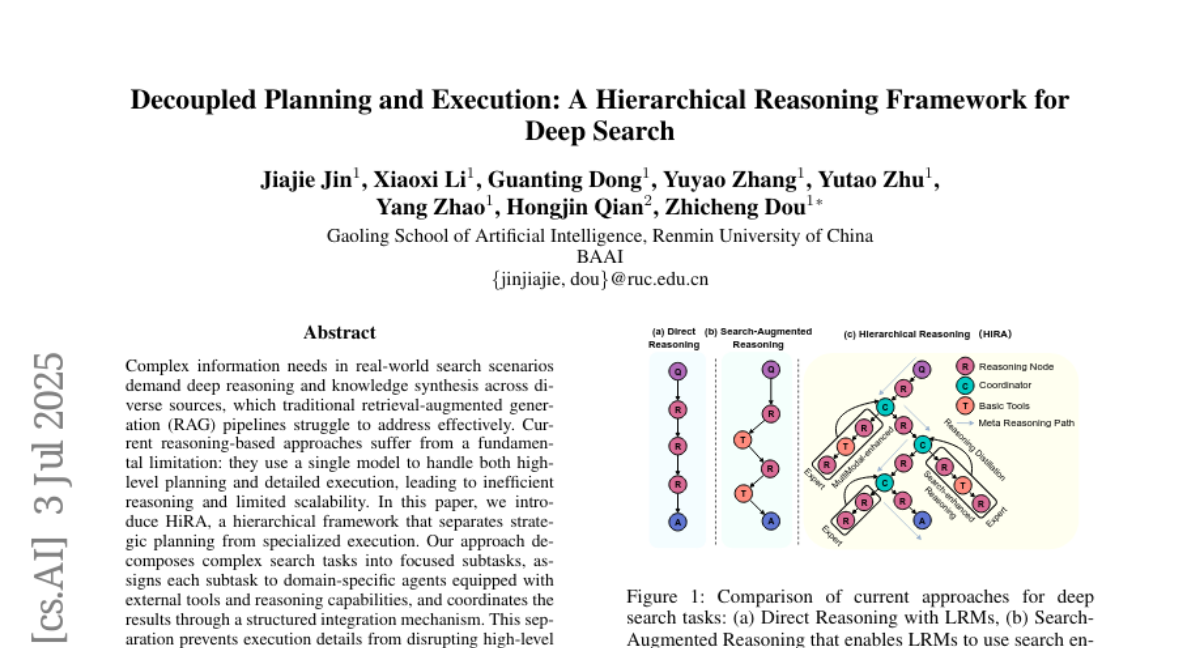

7. Decoupled Planning and Execution: A Hierarchical Reasoning Framework for Deep Search

🔑 Keywords: Hierarchical Framework, Strategic Planning, Specialized Execution, Complex Search Tasks, Cross-Modal Deep Search

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Introduce HiRA, a hierarchical framework designed to improve efficiency and answer quality in deep search tasks by separating strategic planning from specialized execution.

🛠️ Research Methods:

– Decompose complex search tasks into focused subtasks handled by domain-specific agents equipped with external tools and reasoning capabilities.

💬 Research Conclusions:

– HiRA significantly outperforms existing retrieval-augmented generation and agent-based systems, showing improvements in answer quality and efficiency.

👉 Paper link: https://huggingface.co/papers/2507.02652

8. Fast and Simplex: 2-Simplicial Attention in Triton

🔑 Keywords: 2-simplicial Transformer, token efficiency, Triton kernel, scaling laws, reasoning tasks

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to explore improvements in token efficiency for knowledge and reasoning tasks using the 2-simplicial Transformer over standard Transformers.

🛠️ Research Methods:

– Utilizes a 2-simplicial Transformer architecture with efficient Trilinear function implementation through a Triton kernel, generalizing standard dot-product attention.

💬 Research Conclusions:

– The 2-simplicial Transformer demonstrates superior token efficiency and performs better on mathematics, coding, reasoning, and logic tasks compared to standard Transformers, altering the scaling laws for these tasks.

👉 Paper link: https://huggingface.co/papers/2507.02754

9. Bourbaki: Self-Generated and Goal-Conditioned MDPs for Theorem Proving

🔑 Keywords: LLMs, Automated Theorem Proving, sG-MDPs, MCTS, Bourbaki

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To improve the performance of large language models (LLMs) in the context of automated theorem proving (ATP), particularly on complex benchmarks like PutnamBench.

🛠️ Research Methods:

– Utilization of self-generated goal-conditioned MDPs (sG-MDPs) and Monte Carlo Tree Search (MCTS)-like algorithms to generate and pursue subgoals based on the evolving proof state.

💬 Research Conclusions:

– The proposed framework, instantiated in the Bourbaki system, achieved state-of-the-art results by solving 26 problems on PutnamBench with models at the 7B scale.

👉 Paper link: https://huggingface.co/papers/2507.02726

10. Can LLMs Identify Critical Limitations within Scientific Research? A Systematic Evaluation on AI Research Papers

🔑 Keywords: LimitGen, LLMs, peer review, literature retrieval, AI

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper introduces LimitGen, a benchmark designed to evaluate LLMs in identifying limitations in scientific research and to improve their feedback by using literature retrieval.

🛠️ Research Methods:

– A taxonomy of limitation types in scientific research focused on AI is developed to guide the study.

– LimitGen consists of two subsets: a synthetic dataset (LimitGen-Syn) and a human-written dataset (LimitGen-Human).

💬 Research Conclusions:

– The enhanced LLM systems, supported by literature retrieval, are better equipped to generate research paper limitations and provide more substantial peer review feedback.

👉 Paper link: https://huggingface.co/papers/2507.02694

11. Energy-Based Transformers are Scalable Learners and Thinkers

🔑 Keywords: Energy-Based Transformers, System 2 Thinking, Unsupervised Learning, Scaling Rate, Inference

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research explores the potential to generalize System 2 Thinking in AI models using unsupervised learning without additional supervision or training.

🛠️ Research Methods:

– The introduction of Energy-Based Transformers (EBTs), a new class of Energy-Based Models (EBMs) that utilize gradient descent-based energy minimization to make predictions.

💬 Research Conclusions:

– EBTs demonstrated superior scaling during training and enhanced inference performance over existing models, including achieving a 35% higher scaling rate and a 29% performance improvement on language tasks.

👉 Paper link: https://huggingface.co/papers/2507.02092

12. Self-Correction Bench: Revealing and Addressing the Self-Correction Blind Spot in LLMs

🔑 Keywords: Self-Correction, Blind Spot, LLMs, Error Injection, Trustworthy AI

💡 Category: Natural Language Processing

🌟 Research Objective:

– To measure the self-correction blind spot in large language models (LLMs), identifying that training primarily on error-free responses contributes to this issue.

🛠️ Research Methods:

– Introduced a framework called Self-Correction Bench to systematically study the self-correction blind spot through controlled error injection at three complexity levels across 14 models.

💬 Research Conclusions:

– Found a 64.5% blind spot rate on average among models, which can be reduced by 89.3% simply by appending “Wait”, indicating the potential to activate self-correction capabilities. The study emphasizes improving LLM reliability and trustworthiness.

👉 Paper link: https://huggingface.co/papers/2507.02778

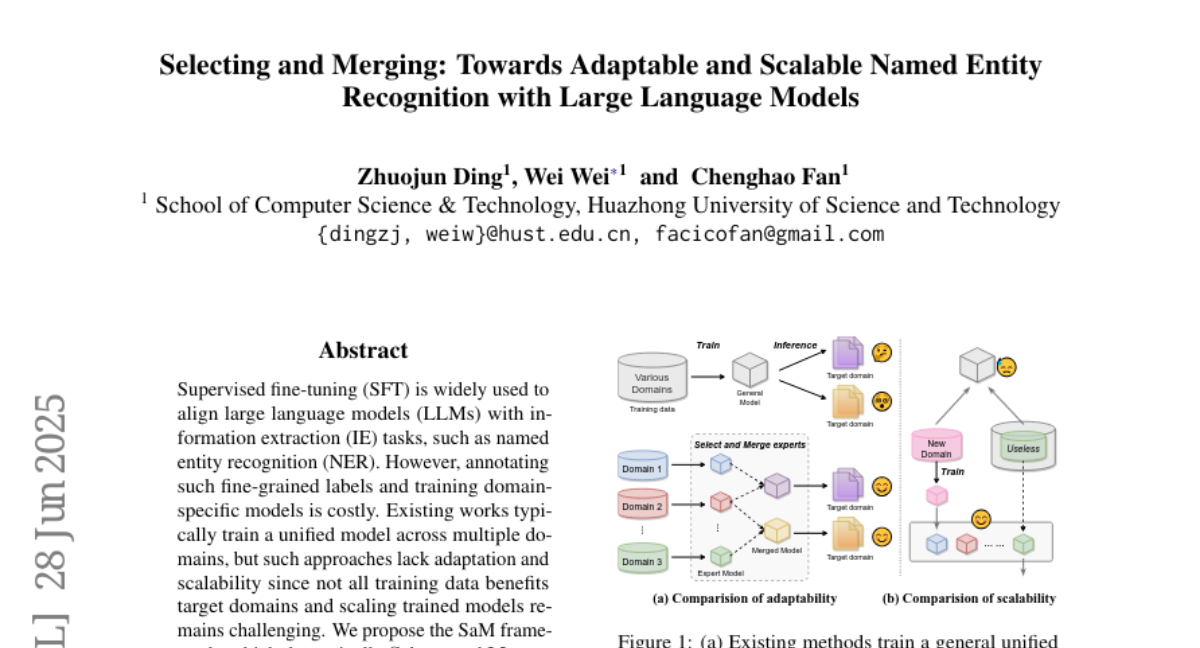

13. Selecting and Merging: Towards Adaptable and Scalable Named Entity Recognition with Large Language Models

🔑 Keywords: SaM framework, domain-specific models, large language models, scalability, information extraction

💡 Category: Natural Language Processing

🌟 Research Objective:

– Propose the SaM framework to dynamically select and merge pre-trained domain-specific models for efficient information extraction tasks.

🛠️ Research Methods:

– Selection of domain-specific experts based on domain similarity and performance on sampled instances, followed by merging to create task-specific models.

💬 Research Conclusions:

– SaM framework improves generalization across various domains without extra training, demonstrating a 10% performance enhancement over unified models. The framework allows convenient scalability through the addition or removal of experts.

👉 Paper link: https://huggingface.co/papers/2506.22813

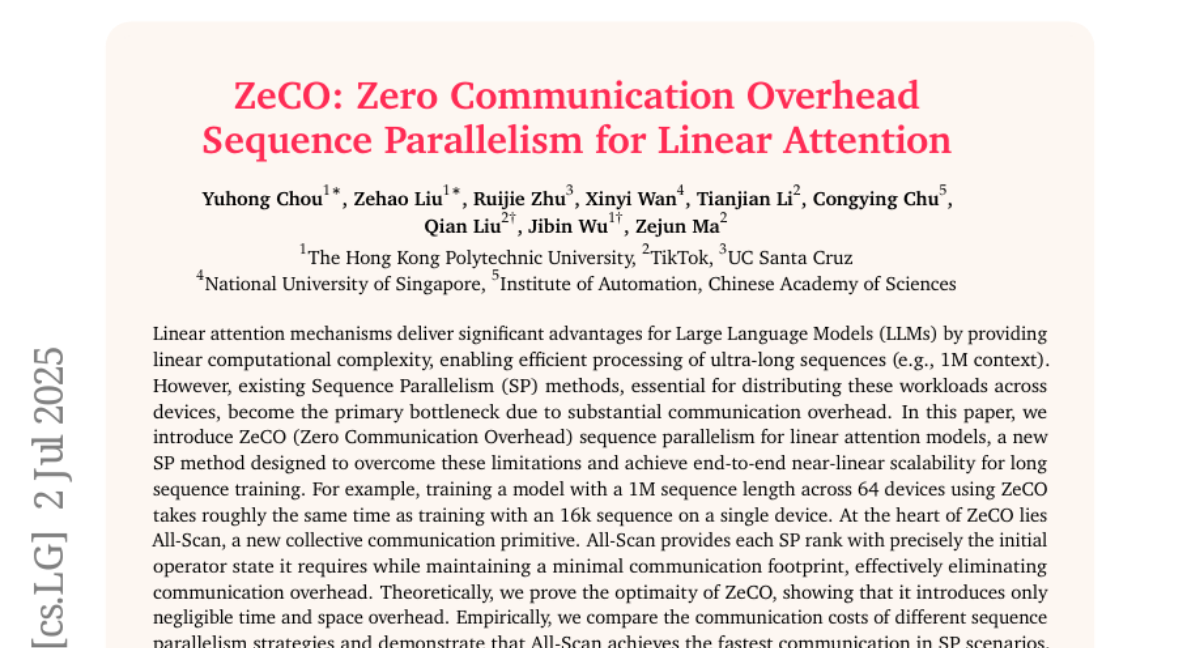

14. ZeCO: Zero Communication Overhead Sequence Parallelism for Linear Attention

🔑 Keywords: ZeCO, Zero Communication Overhead, Linear Attention, Sequence Parallelism, Large Language Models

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce ZeCO, a zero communication overhead method for efficient training of large language models with ultra-long sequences across multiple devices.

🛠️ Research Methods:

– Developed a new sequence parallelism method using All-Scan, a collective communication primitive, to reduce communication overhead.

💬 Research Conclusions:

– ZeCO achieves near-linear scalability and improves speed by 60% on 256 GPUs with an 8M sequence length compared to the state-of-the-art methods, enabling efficient training on previously intractable sequence lengths.

👉 Paper link: https://huggingface.co/papers/2507.01004

15. AsyncFlow: An Asynchronous Streaming RL Framework for Efficient LLM Post-Training

🔑 Keywords: Asynchronous Streaming, Reinforcement Learning (RL), Large Language Models (LLMs), Distributed Data Storage, Unified Data Management

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The objective is to enhance the efficiency of the post-training phase of large language models through an innovative asynchronous streaming RL framework.

🛠️ Research Methods:

– The study introduces AsyncFlow, which includes a distributed data storage and transfer module, enabling fine-grained scheduling and unified data management. The framework is architecturally decoupled, employing service-oriented user interfaces.

💬 Research Conclusions:

– The framework yields a significant average throughput improvement of 1.59 times compared to baseline methods, providing valuable insights for future RL system designs.

👉 Paper link: https://huggingface.co/papers/2507.01663

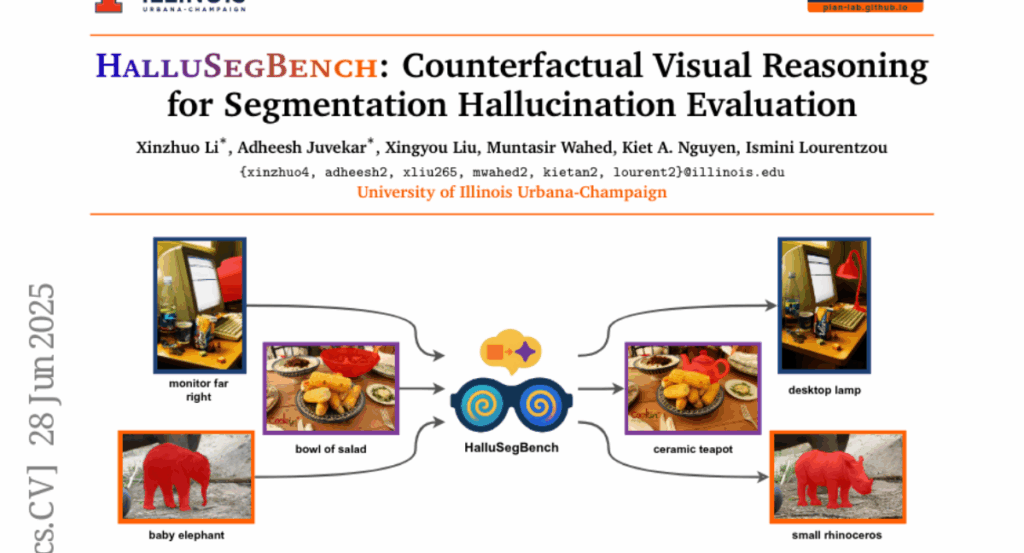

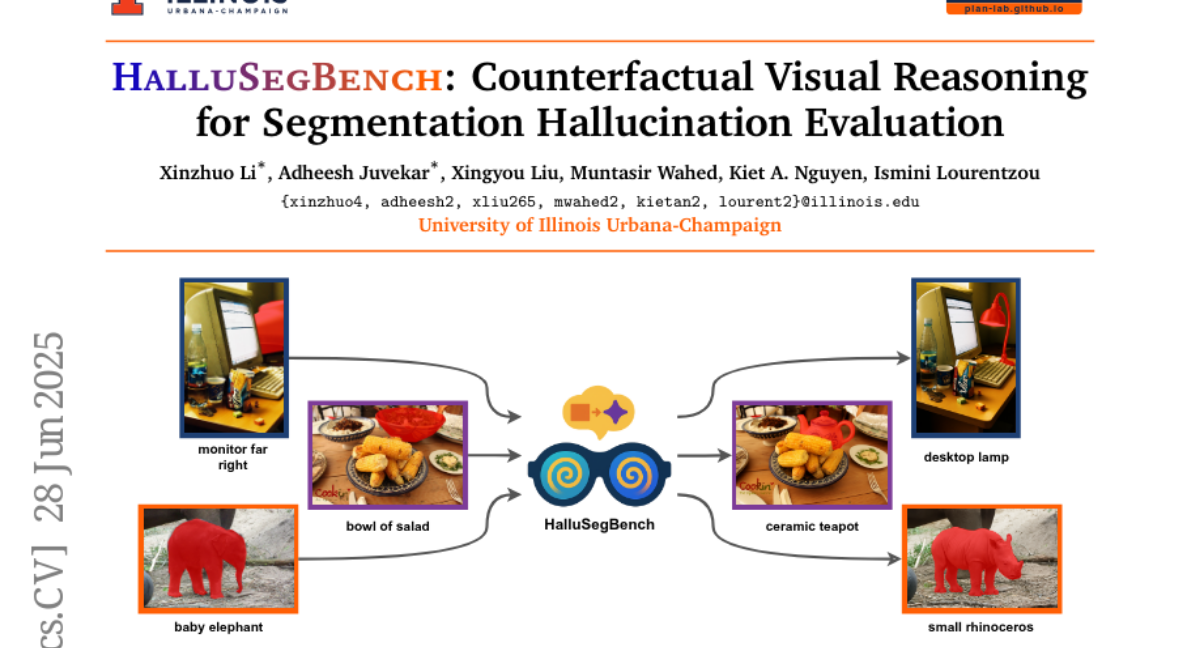

16. HalluSegBench: Counterfactual Visual Reasoning for Segmentation Hallucination Evaluation

🔑 Keywords: HalluSegBench, vision-language segmentation, hallucinations, counterfactual reasoning, segmentation masks

💡 Category: Computer Vision

🌟 Research Objective:

– The objective is to introduce HalluSegBench, a benchmark specifically designed to evaluate hallucinations in vision-language segmentation models through counterfactual visual reasoning.

🛠️ Research Methods:

– HalluSegBench includes a dataset of 1340 counterfactual instance pairs across 281 unique object classes and introduces novel metrics to quantify hallucination sensitivity under visually coherent scene edits.

💬 Research Conclusions:

– The study reveals that vision-driven hallucinations are more prevalent than label-driven ones in state-of-the-art models, indicating a persistent issue in false segmentation and emphasizing the importance of counterfactual reasoning to assess grounding fidelity.

👉 Paper link: https://huggingface.co/papers/2506.21546

17. CRISP-SAM2: SAM2 with Cross-Modal Interaction and Semantic Prompting for Multi-Organ Segmentation

🔑 Keywords: Multi-organ medical segmentation, CRISP-SAM2, Semantic Prompting, Medical Imaging, AI in Healthcare

💡 Category: AI in Healthcare

🌟 Research Objective:

– To address inaccuracies, geometric dependency, and spatial information loss in multi-organ medical segmentation using a novel approach.

🛠️ Research Methods:

– Developed CRISP-SAM2 model utilizing cross-modal interaction and semantic prompting based on SAM2.

– Employed a progressive cross-attention interaction mechanism to integrate visual and textual data for enhanced image analysis.

💬 Research Conclusions:

– CRISP-SAM2 outperformed existing models in comparative experiments across seven datasets.

– Demonstrated superior performance in addressing previously identified limitations, improving detailed understanding and localization in medical imaging.

👉 Paper link: https://huggingface.co/papers/2506.23121

18.