AI Native Daily Paper Digest – 20250714

1. Test-Time Scaling with Reflective Generative Model

🔑 Keywords: MetaStone-S1, Self-supervised Process Reward Model, Reflective Generative Model, Test Time Scaling, Scaling Law

💡 Category: Generative Models

🌟 Research Objective:

– The primary aim is to establish an efficient reasoning model, MetaStone-S1, utilizing fewer parameters while maintaining scalable performance.

🛠️ Research Methods:

– Employs a self-supervised process reward model (SPRM) that integrates the policy model and process reward model (PRM) into a unified interface without extra annotations.

– Provides three reasoning effort modes with different thinking lengths to suit test time scaling.

💬 Research Conclusions:

– MetaStone-S1 achieves comparable performance to OpenAI-o3-mini’s series with significantly fewer parameters (32B).

– The model is open-sourced to promote further research in the community.

👉 Paper link: https://huggingface.co/papers/2507.01951

2. CLiFT: Compressive Light-Field Tokens for Compute-Efficient and Adaptive Neural Rendering

🔑 Keywords: Neural Rendering, Compressed Light-Field Tokens, Multi-View Encoder, Latent-Space K-Means, Compute-Adaptive Renderer

💡 Category: Computer Vision

🌟 Research Objective:

– The paper presents a novel neural rendering approach that utilizes Compressed Light-Field Tokens (CLiFTs) to represent and render scenes efficiently across different computational budgets.

🛠️ Research Methods:

– The method employs a multi-view encoder to tokenize images based on camera poses and uses Latent-Space K-Means to select key rays. A multi-view condenser compresses token information into CLiFTs for rendering.

💬 Research Conclusions:

– The proposed approach, tested on RealEstate10K and DL3DV datasets, demonstrates significant data reduction while maintaining comparable rendering quality, offering trade-offs between data size, quality, and speed.

👉 Paper link: https://huggingface.co/papers/2507.08776

3. NeuralOS: Towards Simulating Operating Systems via Neural Generative Models

🔑 Keywords: NeuralOS, GUI, RNN, user inputs, diffusion-based rendering

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To simulate operating system graphical user interfaces (GUIs) by predicting screen frames in response to user inputs.

🛠️ Research Methods:

– Combines recurrent neural networks (RNNs) with diffusion-based neural rendering, trained on a dataset from Ubuntu XFCE recordings.

💬 Research Conclusions:

– Successfully renders realistic GUI sequences, effectively capturing mouse interactions and predicting state transitions, although detailed keyboard interaction modeling remains challenging.

👉 Paper link: https://huggingface.co/papers/2507.08800

4. Open Vision Reasoner: Transferring Linguistic Cognitive Behavior for Visual Reasoning

🔑 Keywords: large language models, Multimodal LLMs, reinforcement learning, visual reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research aims to enhance visual reasoning in large language models by implementing a two-stage paradigm.

🛠️ Research Methods:

– The study employs cold-start fine-tuning combined with multimodal reinforcement learning, utilizing the Qwen2.5-VL-7B model.

💬 Research Conclusions:

– The Open-Vision-Reasoner model sets a new benchmark in reasoning tasks, outperforming previous models with top scores on tasks like MATH500, MathVision, and MathVerse.

👉 Paper link: https://huggingface.co/papers/2507.05255

5. KV Cache Steering for Inducing Reasoning in Small Language Models

🔑 Keywords: Cache Steering, Language Models, Chain-of-Thought Reasoning, Multi-Step Reasoning, GPT-4o

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to propose and validate a method called cache steering to improve reasoning abilities in small language models via a single intervention in the key-value cache.

🛠️ Research Methods:

– Cache steering is applied to induce chain-of-thought reasoning in models by leveraging GPT-4o-generated reasoning traces, constructing steering vectors without needing fine-tuning or prompt changes.

💬 Research Conclusions:

– Experimental results indicate that cache steering enhances both the reasoning structure and task performance compared to previous techniques, offering advantages in hyperparameter stability, efficiency, and ease of integration.

👉 Paper link: https://huggingface.co/papers/2507.08799

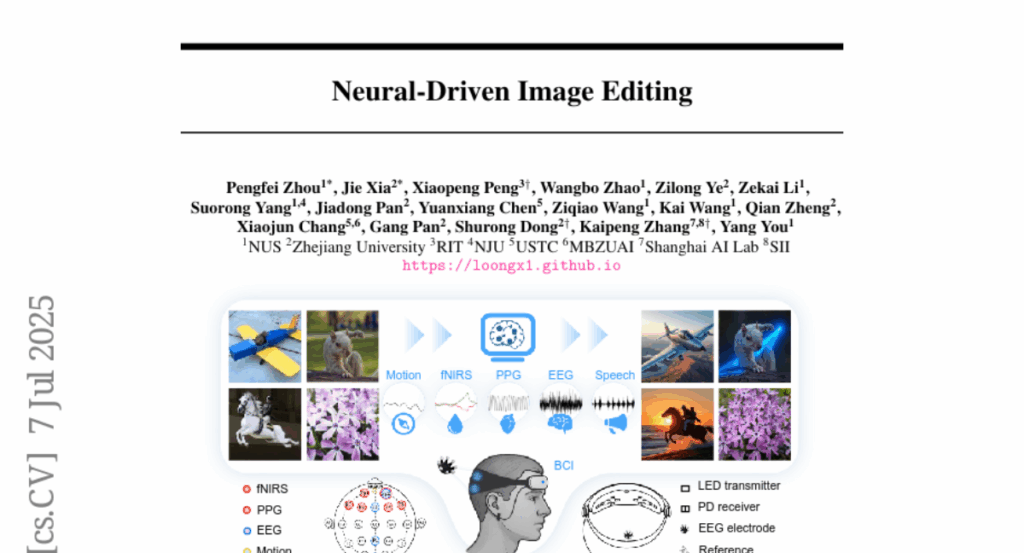

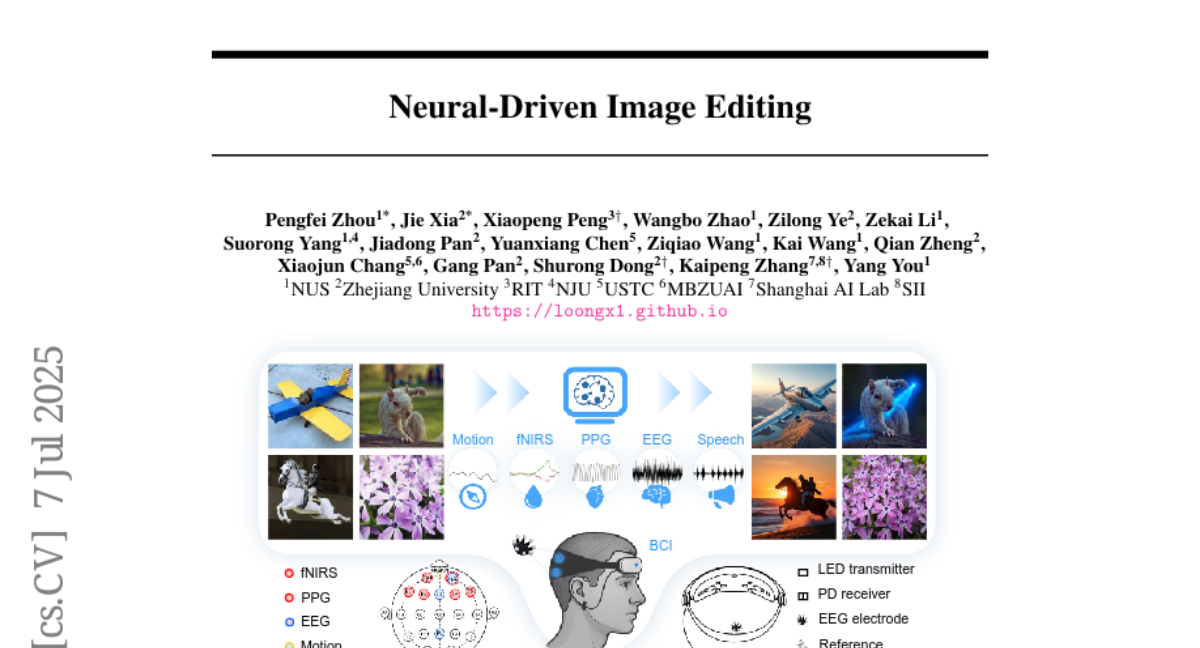

6. Neural-Driven Image Editing

🔑 Keywords: Multimodal neurophysiological signals, Brain-computer interfaces, Diffusion models, Contrastive learning, Generative models

💡 Category: Generative Models

🌟 Research Objective:

– This study introduces LoongX, a hands-free image editing approach leveraging multimodal neurophysiological signals, aimed at making image editing accessible to individuals with limited motor control or language abilities.

🛠️ Research Methods:

– LoongX integrates diffusion models trained on 23,928 image-editing pairs with synchronized EEG, fNIRS, PPG, and head motion signals. It utilizes a cross-scale state space (CS3) module and a dynamic gated fusion (DGF) module to encode and aggregate modality-specific features. Encoders are pre-trained using contrastive learning to align cognitive states with semantic intentions.

💬 Research Conclusions:

– The experiments demonstrate that LoongX’s performance is comparable to text-driven methods and surpasses them when neural signals are combined with speech, highlighting the potential of neural-driven generative models in cognitive-driven creative technologies.

👉 Paper link: https://huggingface.co/papers/2507.05397

7. Gemini 2.5: Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Next Generation Agentic Capabilities

🔑 Keywords: Gemini 2.X, State of the Art (SoTA), Multimodal Understanding, Reasoning Capabilities, Agentic Workflows

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introducing and detailing the capabilities of the Gemini 2.X model family, focusing on Gemini 2.5 Pro and Gemini 2.5 Flash, and their application in complex problem-solving.

🛠️ Research Methods:

– Evaluation of model performance on frontier coding and reasoning benchmarks, emphasizing the integration of multimodal understanding and reasoning capabilities.

💬 Research Conclusions:

– Gemini 2.5 Pro achieves state-of-the-art performance in coding and reasoning, with advanced multimodal understanding and long-context processing. Gemini 2.5 Flash, along with earlier models, provides efficient performance at lower computational costs, establishing a balanced trade-off between capability and cost.

👉 Paper link: https://huggingface.co/papers/2507.06261

8. Lumos-1: On Autoregressive Video Generation from a Unified Model Perspective

🔑 Keywords: Lumos-1, Autoregressive Video Generator, MM-RoPE, AR-DF, Spatiotemporal Correlation

💡 Category: Generative Models

🌟 Research Objective:

– The objective was to develop Lumos-1, an autoregressive video generator that improves spatiotemporal correlation and frame-wise loss imbalance with fewer resources using a modified LLM architecture.

🛠️ Research Methods:

– Utilized a modified large language model architecture with MM-RoPE for enhanced frequency spectrum range and spatiotemporal data modeling.

– Introduced a token dependency strategy and AR-DF for handling intra-frame bidirectionality and inter-frame temporal causality to address frame-wise loss imbalance.

💬 Research Conclusions:

– Lumos-1 achieves competitive performance comparable to existing models but requires significantly fewer computational resources, being pre-trained on just 48 GPUs.

– The proposed methodologies like MM-RoPE and AR-DF ensure high-quality video generation, overcoming common challenges in autoregressive video models.

👉 Paper link: https://huggingface.co/papers/2507.08801

9. One Token to Fool LLM-as-a-Judge

🔑 Keywords: Generative reward models, Large Language Models (LLMs), Reinforcement Learning with Verifiable Rewards (RLVR), Data Augmentation

💡 Category: Generative Models

🌟 Research Objective:

– Investigate the vulnerabilities of generative reward models using Large Language Models (LLMs) in reinforcement learning and propose a solution to enhance their robustness.

🛠️ Research Methods:

– Examination of the vulnerabilities of LLMs in generative reward models across different datasets and prompt formats, and the implementation of a data augmentation strategy to train a more robust model.

💬 Research Conclusions:

– Generative reward models using LLMs are prone to manipulation; however, the introduction of a data augmentation strategy significantly increases the robustness of these models, necessitating the need for improved LLM-based evaluation methods.

👉 Paper link: https://huggingface.co/papers/2507.08794

10. Vision Foundation Models as Effective Visual Tokenizers for Autoregressive Image Generation

🔑 Keywords: Image Tokenizer, Pre-trained Vision Models, Token Efficiency, Autoregressive Generation, Semantic Fidelity

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to improve image reconstruction, generation quality, and token efficiency by proposing a novel image tokenizer built on pre-trained vision foundation models.

🛠️ Research Methods:

– Utilizes a frozen vision foundation model as the encoder and introduces a region-adaptive quantization framework and a semantic reconstruction objective to reduce redundancy and preserve semantic fidelity.

💬 Research Conclusions:

– The proposed VFMTok image tokenizer achieves substantial improvements in image generation quality and efficiency, boosts autoregressive generation, accelerates model convergence, and enables high-fidelity class-conditional synthesis without classifier-free guidance.

👉 Paper link: https://huggingface.co/papers/2507.08441

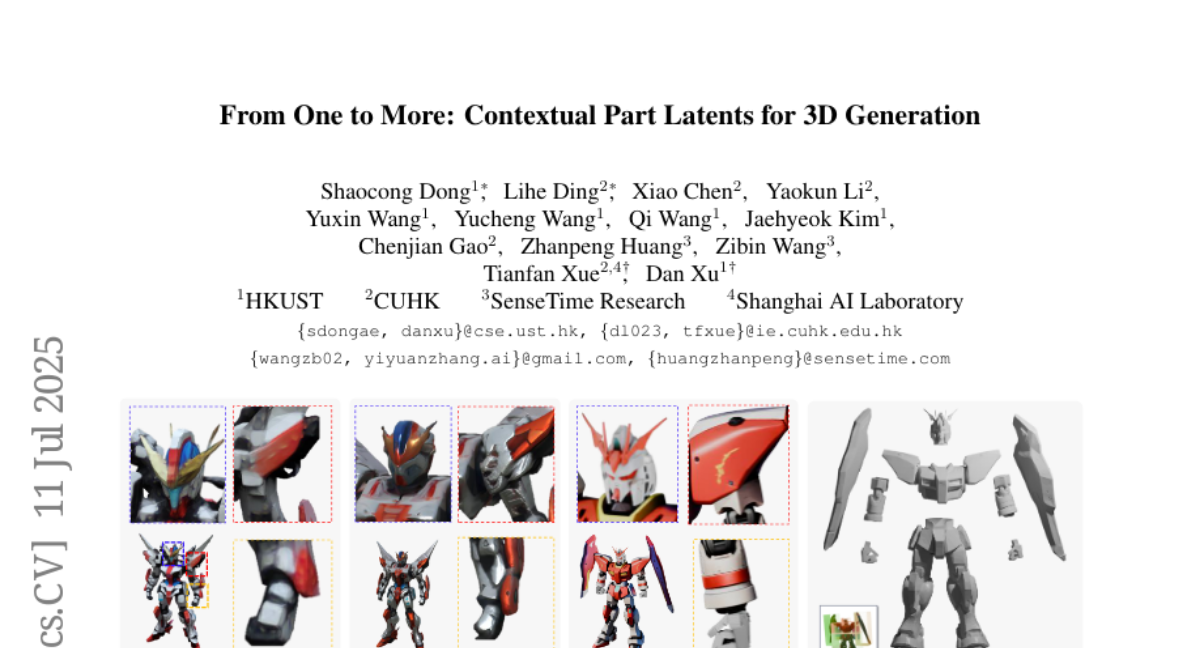

11. From One to More: Contextual Part Latents for 3D Generation

🔑 Keywords: 3D generation, part-aware diffusion framework, contextual parts, geometric coherence, part decomposition

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance 3D generation by decomposing objects into contextual parts to improve handling complexity, relationship modeling, and part-level conditioning.

🛠️ Research Methods:

– The researchers propose CoPart, a part-aware diffusion framework, alongside a mutual guidance strategy for fine-tuning diffusion models. They also construct Partverse, a large-scale dataset for training through automated mesh segmentation and human verification.

💬 Research Conclusions:

– CoPart demonstrates superior capabilities in part-level editing, articulated object generation, and scene composition with unprecedented controllability.

👉 Paper link: https://huggingface.co/papers/2507.08772

12. What Has a Foundation Model Found? Using Inductive Bias to Probe for World Models

🔑 Keywords: Foundation models, sequence prediction, inductive bias, task-specific heuristics, Newtonian mechanics

💡 Category: Foundations of AI

🌟 Research Objective:

– To evaluate if foundation models can truly capture deeper domain structures and generalize to new tasks.

🛠️ Research Methods:

– Developed an inductive bias probe technique to assess the alignment of foundation models’ inductive biases with synthetic datasets derived from world models.

💬 Research Conclusions:

– Foundation models excel in training tasks but struggle to apply learned structures, such as Newtonian mechanics, to new tasks, often relying on task-specific heuristics that do not generalize.

👉 Paper link: https://huggingface.co/papers/2507.06952

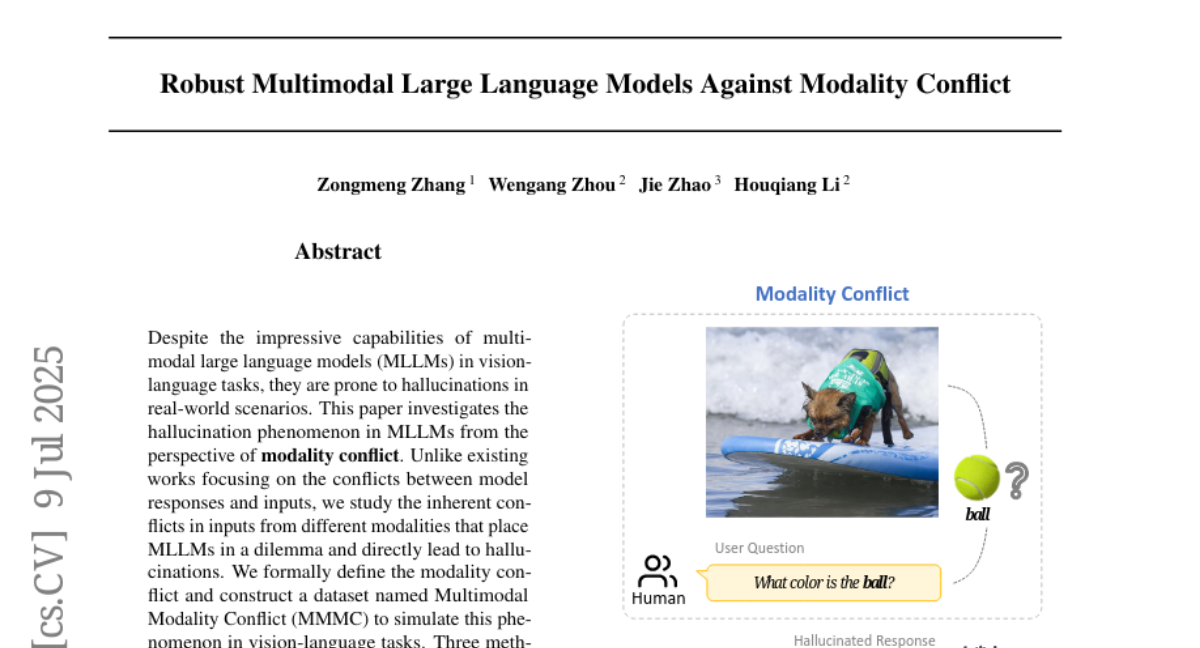

13. Robust Multimodal Large Language Models Against Modality Conflict

🔑 Keywords: Multimodal Large Language Models, Hallucinations, Modality Conflict, Reinforcement Learning, Vision-Language Tasks

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Investigate the hallucination phenomenon in Multimodal Large Language Models (MLLMs) focusing on modality conflict as a cause.

🛠️ Research Methods:

– Constructed a dataset named Multimodal Modality Conflict (MMMC).

– Proposed methods: prompt engineering, supervised fine-tuning, and reinforcement learning to mitigate hallucinations.

💬 Research Conclusions:

– Reinforcement Learning is the most effective strategy for alleviating hallucinations due to modality conflict.

– Supervised fine-tuning provides promising and stable performance.

👉 Paper link: https://huggingface.co/papers/2507.07151

14. BlockFFN: Towards End-Side Acceleration-Friendly Mixture-of-Experts with Chunk-Level Activation Sparsity

🔑 Keywords: Activation Sparsity, Mixture-of-Experts (MoE), Token-Level Sparsity (TLS), Chunk-Level Sparsity (CLS), Speculative Decoding

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study introduces a novel MoE architecture, BlockFFN, to improve efficiency and performance of large language models by addressing issues in routing and sparsity patterns.

🛠️ Research Methods:

– Development of a new routing mechanism using ReLU activation and RMSNorm, alongside designing CLS-aware training objectives to bolster token-level and chunk-level sparsity.

– Implementation of efficient acceleration kernels that incorporate activation sparsity and speculative decoding for enhanced performance on end-side devices.

💬 Research Conclusions:

– BlockFFN demonstrates superior performance over existing MoE baselines, achieving significant token and chunk-level sparsity, with experimental results showing up to 3.67 times speedup on end-side devices compared to dense models.

– The research outcomes are publicly available, fostering further innovation and experimentation in the domain.

👉 Paper link: https://huggingface.co/papers/2507.08771

15. DOTResize: Reducing LLM Width via Discrete Optimal Transport-based Neuron Merging

🔑 Keywords: DOTResize, Model Compression, Transformer models, Neuron-level redundancies, Entropic regularization

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce DOTResize, a novel method to compress Transformermodels by addressing neuron-level redundancies using Discrete Optimal Transport.

🛠️ Research Methods:

– Frame neuron width reduction as a Discrete Optimal Transport problem incorporating entropic regularization and matrix factorization within Transformer architecture.

💬 Research Conclusions:

– Demonstrates superior performance and reduced computational cost compared to pruning techniques across various large language model families.

👉 Paper link: https://huggingface.co/papers/2507.04517

16.