AI Native Daily Paper Digest – 20250717

1. Towards Agentic RAG with Deep Reasoning: A Survey of RAG-Reasoning Systems in LLMs

🔑 Keywords: Reasoning-Enhanced RAG, RAG-Enhanced Reasoning, Synergized RAG-Reasoning, Trustworthy, Human-Centric

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To integrate reasoning and retrieval in Large Language Models to enhance factuality and multi-step inference.

🛠️ Research Methods:

– Analyzing and mapping how advanced reasoning optimizes Retrieval-Augmented Generation (RAG) stages.

– Exploring how retrieved knowledge supports complex inference in RAG-Enhanced Reasoning frameworks.

– Featuring Synergized RAG-Reasoning frameworks for iterative search and reasoning.

💬 Research Conclusions:

– Synergized RAG-Reasoning frameworks achieve state-of-the-art performance across knowledge-intensive benchmarks.

– The survey categorizes methods, datasets, and outlines future research directions for a more effective, multimodally-adaptive, and human-centric RAG-Reasoning approach.

👉 Paper link: https://huggingface.co/papers/2507.09477

2. PhysX: Physical-Grounded 3D Asset Generation

🔑 Keywords: 3D generative models, physical properties, PhysXNet, PhysXGen, generative physical AI

💡 Category: Generative Models

🌟 Research Objective:

– To address the lack of physical properties in 3D generative models by introducing PhysX, an end-to-end paradigm for physical-grounded 3D asset generation.

🛠️ Research Methods:

– Introduction of PhysXNet, a physics-annotated dataset with five foundational dimensions: absolute scale, material, affordance, kinematics, and function description.

– Development of PhysXGen, a feed-forward framework employing a dual-branch architecture to integrate physical knowledge into 3D asset generation.

💬 Research Conclusions:

– PhysX significantly enhances the physical plausibility of generated 3D assets, validated through extensive experiments demonstrating superior performance and generalization.

– The release of all code, data, and models aims to support future research in the field of generative physical AI.

👉 Paper link: https://huggingface.co/papers/2507.12465

3. SWE-Perf: Can Language Models Optimize Code Performance on Real-World Repositories?

🔑 Keywords: SWE-Perf, Large Language Models, code performance optimization, benchmark, GitHub repositories

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce SWE-Perf as the first benchmark specifically designed to evaluate Large Language Models on code performance optimization tasks using real-world repository data.

🛠️ Research Methods:

– Analyze 140 curated instances from performance-improving pull requests on GitHub, including codebases, target functions, performance tests, expert-authored patches, and executable environments.

💬 Research Conclusions:

– Identify a significant capability gap between existing Large Language Models and expert-level code optimization performance, highlighting opportunities for further research in this field.

👉 Paper link: https://huggingface.co/papers/2507.12415

4. MMHU: A Massive-Scale Multimodal Benchmark for Human Behavior Understanding

🔑 Keywords: Human Behavior Analysis, Autonomous Driving, Motion Prediction, Behavior Question Answering

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research introduces MMHU, a comprehensive benchmark for analyzing human behavior in autonomous driving, with the aim of enhancing driving safety by understanding human motions, trajectories, and intentions.

🛠️ Research Methods:

– The method involves collecting diverse data sources, such as Waymo and YouTube, and self-collected data to gather 57k human motion clips and 1.73M frames. A human-in-the-loop annotation pipeline is also developed to create detailed behavior captions.

💬 Research Conclusions:

– A thorough dataset analysis and the establishment of benchmarks for multiple tasks, including motion prediction and motion generation, offering a broad set of evaluation tools for human behavior in autonomous driving.

👉 Paper link: https://huggingface.co/papers/2507.12463

5. MOSPA: Human Motion Generation Driven by Spatial Audio

🔑 Keywords: Spatial Audio, Human Motion, MOSPA, Diffusion-based Framework, SAM Dataset

💡 Category: Generative Models

🌟 Research Objective:

– Introduce MOSPA, a diffusion-based generative framework, to model human motion in response to spatial audio and achieve state-of-the-art performance.

🛠️ Research Methods:

– Develop the SAM dataset containing high-quality spatial audio and motion data.

– Utilize a simple yet effective diffusion-based generative framework for modeling human motion driven by spatial audio.

💬 Research Conclusions:

– MOSPA successfully models the relationship between body motion and spatial audio, showing diverse and realistic human motions.

– The proposed method achieves state-of-the-art performance and will be open-sourced upon acceptance.

👉 Paper link: https://huggingface.co/papers/2507.11949

6. Seq vs Seq: An Open Suite of Paired Encoders and Decoders

🔑 Keywords: Large language model, Encoder-only, Decoder-only, Text generation, Open-source

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce and compare encoder-only and decoder-only models using the SOTA open-data Ettin suite, ranging from 17 million to 1 billion parameters.

🛠️ Research Methods:

– Both encoder-only and decoder-only models are trained on up to 2 trillion tokens using the same recipes to achieve state-of-the-art results.

💬 Research Conclusions:

– Encoder-only models excel in classification and retrieval, while decoder-only models excel in text generation. Adapting models for cross-architecture tasks is less effective than using the original architecture.

– Open-source release of all training artifacts for community use.

👉 Paper link: https://huggingface.co/papers/2507.11412

7. DrafterBench: Benchmarking Large Language Models for Tasks Automation in Civil Engineering

🔑 Keywords: LLM agents, technical drawing revision, benchmark, open-source, function execution

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To propose DrafterBench, an open-source benchmark, for evaluating LLM agents in technical drawing revision, focusing on structured data comprehension and function execution.

🛠️ Research Methods:

– Develop DrafterBench with twelve task types and 46 customized functions/tools, testing in 1920 tasks to assess AI agents’ capabilities in structured data comprehension, instruction following, and critical reasoning.

💬 Research Conclusions:

– DrafterBench offers detailed analysis of task accuracy and error statistics, helping provide deeper insight into agent capabilities and improvement targets for integrating LLMs into engineering applications.

👉 Paper link: https://huggingface.co/papers/2507.11527

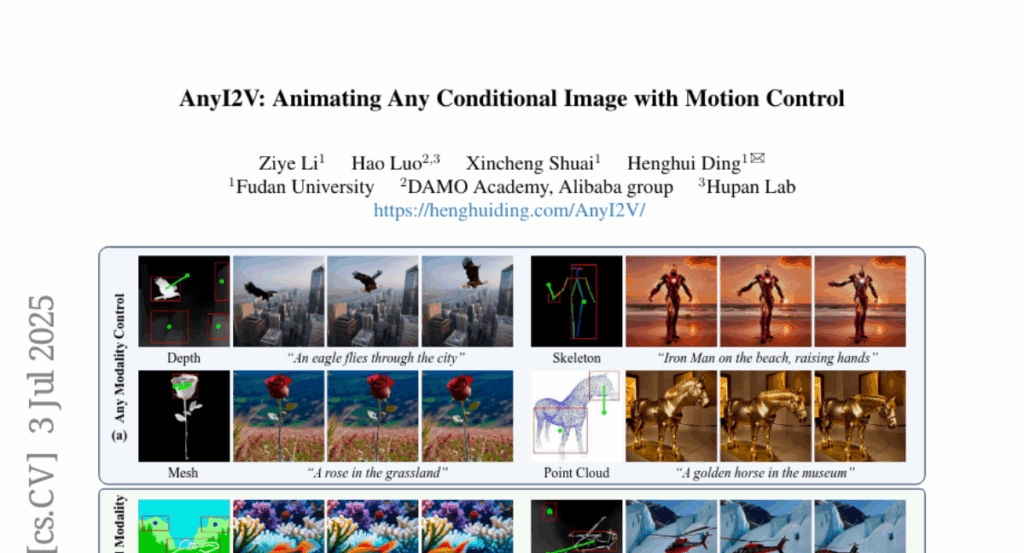

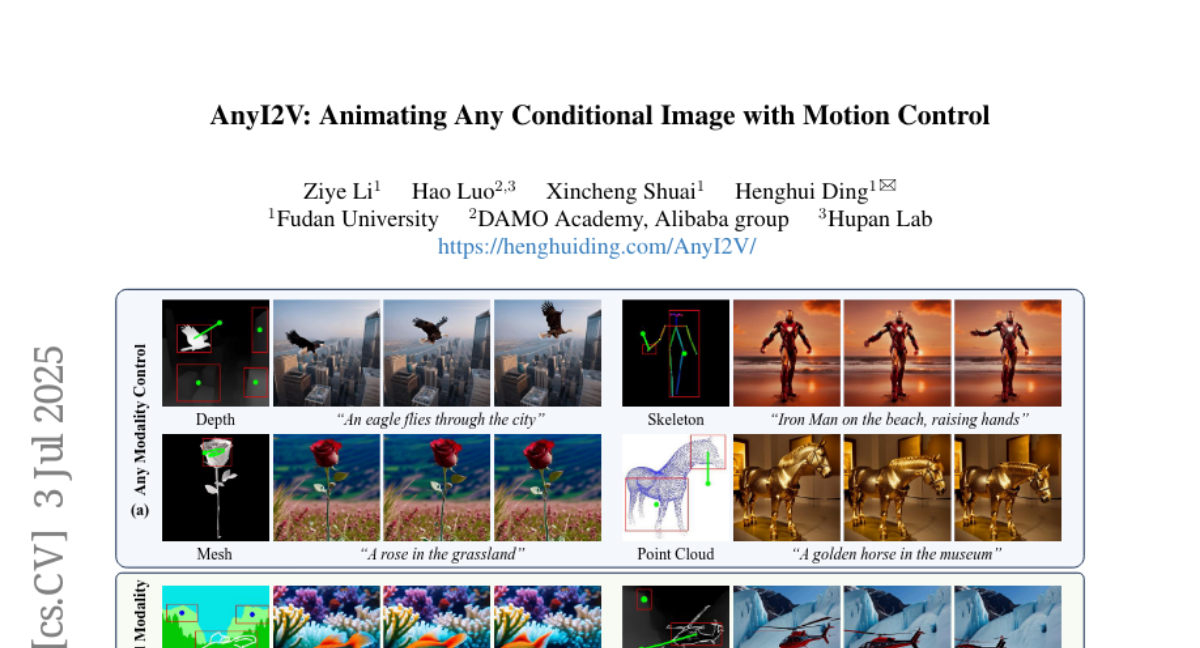

8. AnyI2V: Animating Any Conditional Image with Motion Control

🔑 Keywords: AI Native, motion trajectories, conditional images, video generation, style transfer

💡 Category: Generative Models

🌟 Research Objective:

– The primary goal is to propose a training-free framework, AnyI2V, that animates conditional images with user-defined motion trajectories to enhance video generation versatility and flexibility.

🛠️ Research Methods:

– Introducing AnyI2V, which supports broader modalities and mixed conditional inputs, enabling features like style transfer and video editing without training, and integrating user-defined motion trajectories.

💬 Research Conclusions:

– AnyI2V provides superior performance in spatial- and motion-controlled video generation and opens up new possibilities in flexible and versatile video synthesis, as evidenced by extensive experiments.

👉 Paper link: https://huggingface.co/papers/2507.02857

9. Lizard: An Efficient Linearization Framework for Large Language Models

🔑 Keywords: Subquadratic Architectures, Transformer-based LLMs, Hybrid Attention Mechanism, Gated Linear Attention, Hardware-Aware Training

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to develop “Lizard,” a linearization framework that transforms pre-trained Transformer-based LLMs into subquadratic architectures, allowing for efficient infinite-context generation while addressing memory and computational bottlenecks.

🛠️ Research Methods:

– Lizard utilizes a subquadratic attention mechanism approximating softmax attention, incorporates a gating module inspired by state-of-the-art linear models, and combines gated linear attention with sliding window attention enhanced by meta memory.

💬 Research Conclusions:

– The framework, Lizard, achieves near-lossless recovery of the teacher model’s performance on standard language modeling tasks and outperforms previous methods, notably improving by 18 points on the 5-shot MMLU benchmark and enhancing results on associative recall tasks.

👉 Paper link: https://huggingface.co/papers/2507.09025

10. SpatialTrackerV2: 3D Point Tracking Made Easy

🔑 Keywords: SpatialTrackerV2, 3D point tracking, monocular videos, end-to-end architecture, camera pose estimation

💡 Category: Computer Vision

🌟 Research Objective:

– Develop a feed-forward 3D point tracking method for monocular videos that integrates point tracking, monocular depth, and camera pose estimation into a unified end-to-end architecture.

🛠️ Research Methods:

– Decompose world-space 3D motion into scene geometry, camera ego-motion, and pixel-wise object motion with a fully differentiable and scalable architecture that trains on diverse datasets.

💬 Research Conclusions:

– Achieves a 30% performance improvement over existing 3D tracking methods and matches leading dynamic 3D reconstruction accuracy while operating 50 times faster.

👉 Paper link: https://huggingface.co/papers/2507.12462