AI Native Daily Paper Digest – 20250801

1. Seed-Prover: Deep and Broad Reasoning for Automated Theorem Proving

🔑 Keywords: Seed-Prover, Lean, Reinforcement Learning, Formal Verification, Mathematical Reasoning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The primary aim is to enhance formal theorem proving and automated mathematical reasoning by proposing Seed-Prover, a lemma-style reasoning model using Lean.

🛠️ Research Methods:

– The approach involves iteratively refining proofs using Lean feedback and integrating specialized geometry support through a geometry reasoning engine, Seed-Geometry.

– Three test-time inference strategies are designed to enable deep and broad reasoning.
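In "lemma-style" proving, the prover states and proves auxiliary lemmas that Lean checks independently, then reuses them in the main proof. The toy Lean 4 example below is purely illustrative and is not taken from Seed-Prover's outputs. The theorem names are hypothetical, and it relies only on core Lean's `omega` tactic, with no Mathlib.

```lean
-- Auxiliary lemma: stated and checked by Lean on its own.
theorem double_eq_add (n : Nat) : 2 * n = n + n := by
  omega

-- Main statement: reduced to the lemma by rewriting.
theorem four_mul_eq (n : Nat) : 4 * n = (n + n) + (n + n) := by
  have h : 4 * n = 2 * n + 2 * n := by omega
  rw [h, double_eq_add]
```

If any step fails, Lean reports the exact goal that remains open. This is the kind of feedback that iterative proof refinement can act on.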

💬 Research Conclusions:

– Seed-Prover substantially outperforms the previous state of the art, solving a large portion of past formalized IMO problems, saturating MiniF2F, and achieving notable results on PutnamBench.

– The study highlights the effectiveness of combining formal verification with long chain-of-thought reasoning to overcome the challenges of theorem proving.

👉 Paper link: https://huggingface.co/papers/2507.23726

2. Phi-Ground Tech Report: Advancing Perception in GUI Grounding

🔑 Keywords: GUI grounding, multimodal reasoning, Computer Use Agents, Phi-Ground, state-of-the-art

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aimed to improve the accuracy of GUI grounding in multimodal reasoning models to facilitate the development of Computer Use Agents.

🛠️ Research Methods:

– Conducted an empirical study on the training of grounding models, focusing on the entire process from data collection to model training.

💬 Research Conclusions:

– Developed the Phi-Ground model family, which achieved state-of-the-art performance on multiple benchmarks, significantly enhancing the accuracy of grounding models in agent settings.
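GUI grounding benchmarks commonly score a prediction as correct when the predicted click point falls inside the target element's bounding box. The summary does not state Phi-Ground's exact metrics, so the following is only a minimal sketch of that common convention. The `Box` class and `click_accuracy` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box of a GUI element, in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def click_accuracy(predicted_points, target_boxes):
    """Fraction of predicted (x, y) clicks that land inside the target element's box."""
    hits = sum(box.contains(x, y) for (x, y), box in zip(predicted_points, target_boxes))
    return hits / len(target_boxes)


boxes = [Box(10, 10, 50, 30), Box(100, 200, 180, 240)]
preds = [(20, 15), (90, 210)]  # first click hits, second misses to the left
print(click_accuracy(preds, boxes))  # 0.5
```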

👉 Paper link: https://huggingface.co/papers/2507.23779

3. C3: A Bilingual Benchmark for Spoken Dialogue Models Exploring Challenges in Complex Conversations

🔑 Keywords: Spoken Dialogue Models, ambiguity, context-dependency, benchmark dataset

💡 Category: Natural Language Processing

🌟 Research Objective:

– Evaluate the performance of Spoken Dialogue Models (SDMs) in understanding and emulating human conversations, specifically addressing challenges like ambiguity and context-dependency.

🛠️ Research Methods:

– Presentation of a benchmark dataset comprising 1,079 instances in English and Chinese, along with an LLM-based evaluation method aligned with human judgment.

💬 Research Conclusions:

– The dataset enables a comprehensive exploration of SDMs’ performance, revealing how well they handle practical conversational challenges.

👉 Paper link: https://huggingface.co/papers/2507.22968

4. RecGPT Technical Report

🔑 Keywords: RecGPT, User Intent, Large Language Models, Recommender Systems, Taobao App

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to rethink the design of recommender systems around user intent, integrating large language models to enhance content diversity and user satisfaction.

🛠️ Research Methods:

– RecGPT employs a multi-stage training paradigm with reasoning-enhanced pre-alignment and self-training evolution, supported by a Human-LLM cooperative judge system.

💬 Research Conclusions:

– RecGPT delivers improvements for users, merchants, and the platform by increasing content diversity and user satisfaction, supporting a sustainable recommendation ecosystem.

👉 Paper link: https://huggingface.co/papers/2507.22879

5. villa-X: Enhancing Latent Action Modeling in Vision-Language-Action Models

🔑 Keywords: VLA models, Latent actions, Dexterous hand manipulation, ViLLA framework

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To enhance Vision-Language-Action (VLA) models by incorporating latent actions for improved robot manipulation.

🛠️ Research Methods:

– Introduction of villa-X, a novel Visual-Language-Latent-Action (ViLLA) framework.

💬 Research Conclusions:

– villa-X achieves superior performance in both simulated environments (SIMPLER and LIBERO) and real-world robot setups (gripper and dexterous hand manipulation), suggesting a promising direction for future research.

👉 Paper link: https://huggingface.co/papers/2507.23682

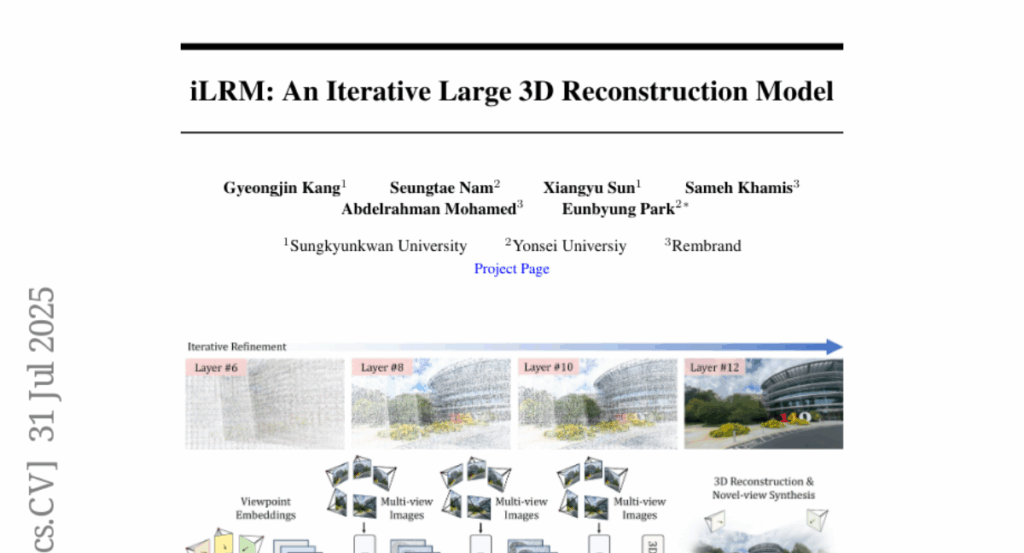

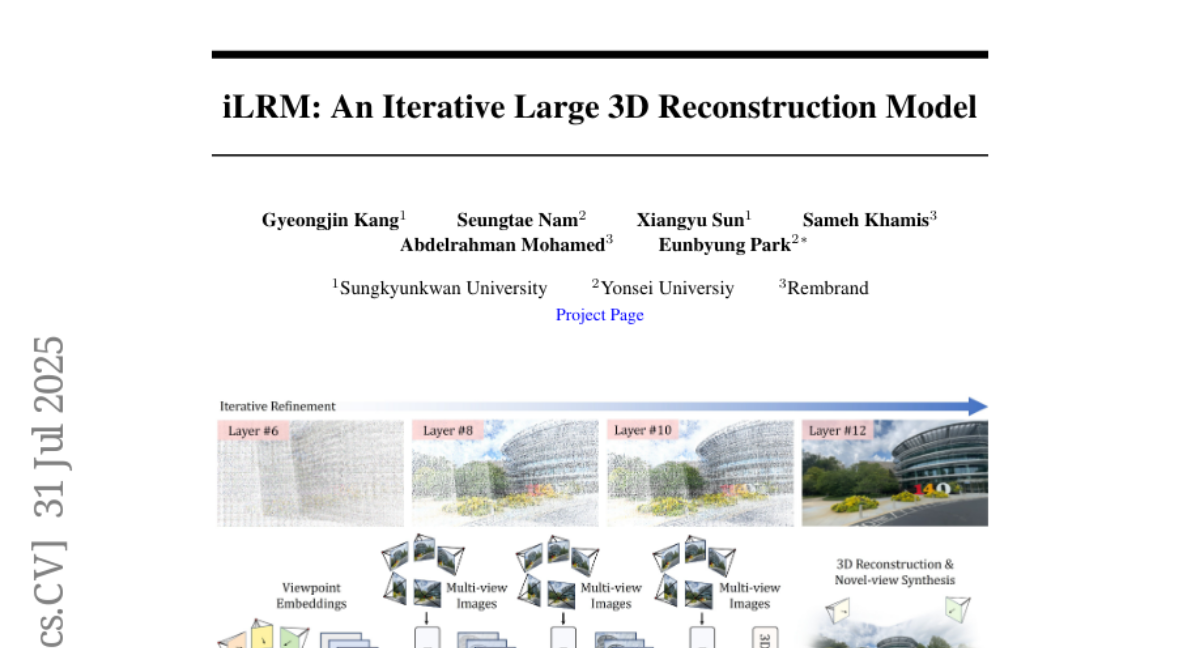

6. iLRM: An Iterative Large 3D Reconstruction Model

🔑 Keywords: 3D Reconstruction, iLRM, High-Resolution Information, Two-Stage Attention, Iterative Refinement

💡 Category: Computer Vision

🌟 Research Objective:

– To develop a scalable and efficient method for 3D reconstruction by introducing the iterative Large 3D Reconstruction Model (iLRM).

🛠️ Research Methods:

– Utilizes a two-stage attention scheme and decouples scene representation from input views to reduce computational costs.

– Employs an iterative refinement mechanism to generate high-fidelity 3D Gaussian representations.

💬 Research Conclusions:

– iLRM delivers enhanced 3D reconstruction quality and speed, demonstrating superior scalability by efficiently handling a larger number of input views with comparable computational costs.

👉 Paper link: https://huggingface.co/papers/2507.23277

7. Persona Vectors: Monitoring and Controlling Character Traits in Language Models

🔑 Keywords: Persona vectors, Large language models, Personality shifts

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to monitor and control personality changes in large language models during training and deployment using persona vectors.

🛠️ Research Methods:

– The study identifies persona vectors in the model’s activation space to track traits like evil, sycophancy, and hallucination propensity. The approach includes an automated method for extracting these vectors based on natural-language descriptions.

💬 Research Conclusions:

– Persona vectors enable monitoring and control of personality shifts during training and deployment. Both intended and unintended personality changes after finetuning correlate with shifts along the corresponding persona vectors. These shifts can be mitigated through post-hoc intervention or avoided with a preventative steering method. Persona vectors can also flag training data likely to cause undesirable personality changes.
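The summary does not spell out the extraction procedure. The following minimal NumPy sketch therefore rests on several assumptions:
- A persona vector is the difference between mean activations on trait-exhibiting and trait-free responses at one layer.
- Monitoring is a projection onto that direction.
- Steering adds or subtracts a scaled copy of the direction.

Synthetic activations stand in for a real model's hidden states, and all function names are hypothetical.

```python
import numpy as np


def persona_vector(acts_with_trait, acts_without_trait):
    """Difference of mean activations; each input has shape (n_samples, d_model)."""
    return acts_with_trait.mean(axis=0) - acts_without_trait.mean(axis=0)


def trait_score(activation, v):
    """Monitoring: projection of one activation onto the unit persona direction."""
    return float(activation @ v / np.linalg.norm(v))


def steer(activation, v, alpha):
    """Steering: shift an activation along the unit persona direction by alpha (negative suppresses)."""
    return activation + alpha * v / np.linalg.norm(v)


# Synthetic stand-in for hidden states: the "trait" lives along the first axis.
rng = np.random.default_rng(0)
d_model = 16
trait_dir = np.zeros(d_model)
trait_dir[0] = 1.0
with_trait = rng.normal(size=(200, d_model)) + 3.0 * trait_dir
without_trait = rng.normal(size=(200, d_model))

v = persona_vector(with_trait, without_trait)
x = with_trait[0]
print("score before steering:", trait_score(x, v))
print("score after steering: ", trait_score(steer(x, v, -2.0), v))
```

Because the direction is normalized inside `steer`, the monitored score drops by exactly `alpha` units. This keeps monitoring and steering on the same scale.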

👉 Paper link: https://huggingface.co/papers/2507.21509

8. Scalable Multi-Task Reinforcement Learning for Generalizable Spatial Intelligence in Visuomotor Agents

🔑 Keywords: Reinforcement Learning, Visuomotor Agents, Zero-shot Generalization, Spatial Reasoning, Cross-view Goal Specification

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper explores Reinforcement Learning’s potential to enhance generalizable spatial reasoning and interaction capabilities in 3D environments, specifically for visuomotor agents.

🛠️ Research Methods:

– It establishes cross-view goal specification as a unified multi-task goal space and proposes automated task synthesis in the Minecraft environment for large-scale multi-task training.

💬 Research Conclusions:

– Reinforcement Learning increases interaction success rates fourfold and enables zero-shot generalization across diverse environments, highlighting its potential in 3D simulated settings.

👉 Paper link: https://huggingface.co/papers/2507.23698

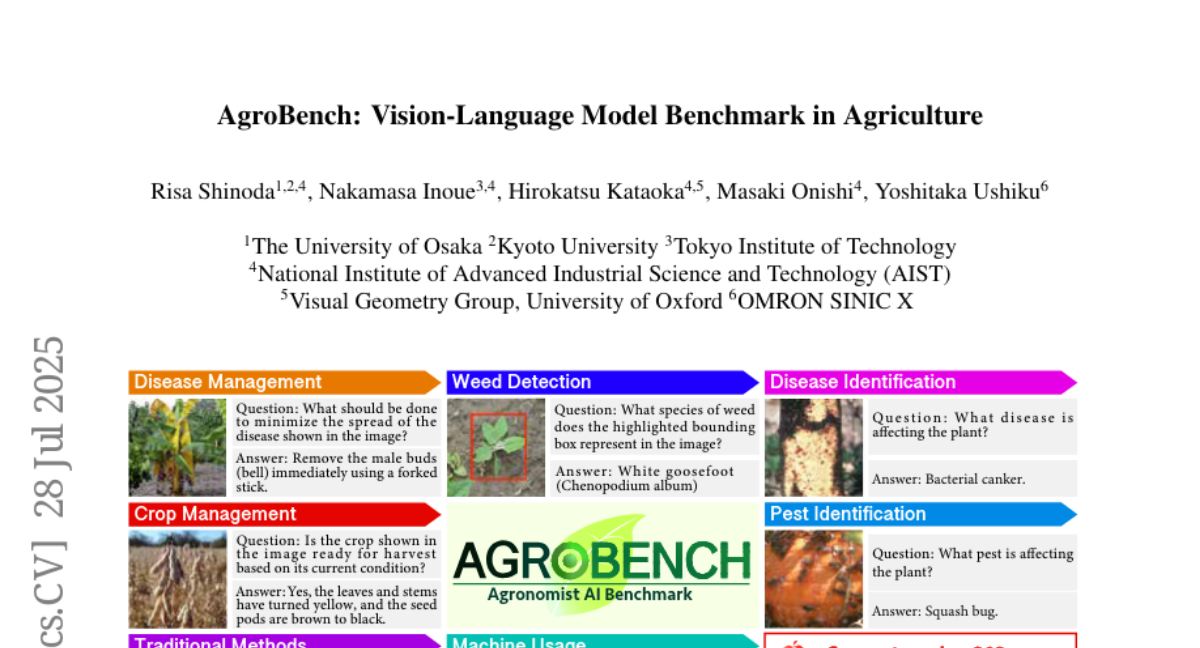

9. AgroBench: Vision-Language Model Benchmark in Agriculture

🔑 Keywords: vision-language models, AgroBench, fine-grained identification, weed identification

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary objective is to evaluate vision-language models (VLMs) across agricultural tasks and highlight areas for improvement, particularly in fine-grained identification like weed identification.

🛠️ Research Methods:

– Introduces AgroBench, a benchmark for evaluating VLMs across seven agricultural topics with expert-annotated categories.

💬 Research Conclusions:

– VLMs have room for improvement in precise identification tasks, with most open-source models performing close to random in weed identification; the study suggests pathways for future VLM development.

👉 Paper link: https://huggingface.co/papers/2507.20519

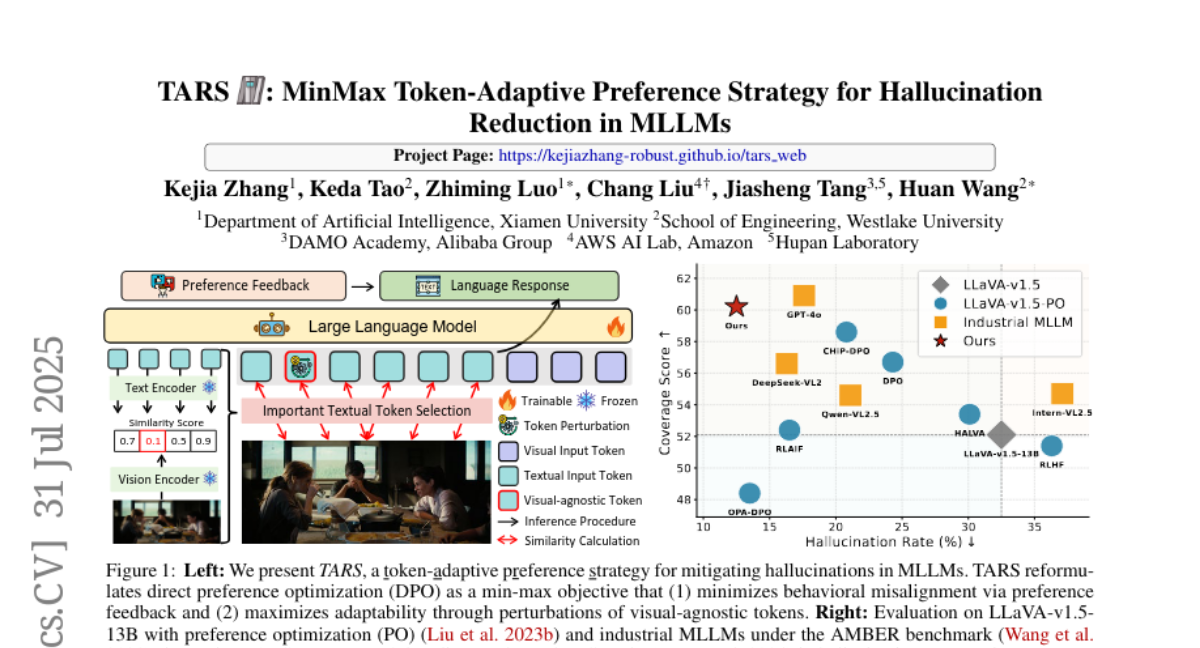

10. TARS: MinMax Token-Adaptive Preference Strategy for Hallucination Reduction in MLLMs

🔑 Keywords: TARS, Multimodal large language models, Hallucinations, Direct preference optimization, Token-adaptive preference strategy

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to improve the reliability of multimodal large language models by reducing hallucinations using a token-adaptive preference strategy called TARS.

🛠️ Research Methods:

– TARS reformulates direct preference optimization into a min-max optimization problem, maximizing token-level distributional shifts under semantic constraints and minimizing expected preference loss to decrease hallucination rates.
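TARS starts from direct preference optimization (DPO). For reference, here is a minimal sketch of the standard DPO loss on one preference pair; this is the baseline TARS reformulates, not TARS itself. It takes summed log-probabilities of the preferred response y_w and the rejected response y_l under the policy π and a frozen reference model π_ref. The loss is −log σ(β[(log π(y_w) − log π_ref(y_w)) − (log π(y_l) − log π_ref(y_l))]). The token-level perturbation and min-max step of TARS are not shown, and the numeric inputs are made up.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one preference pair, given sequence log-probabilities."""
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # -log(sigmoid(margin)) = softplus(-margin), written in a numerically stable form
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Made-up log-probabilities: the policy has shifted mass toward the preferred response.
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))
```

When the policy and reference agree, the loss is log 2. It falls below that as the policy favors the preferred response relative to the reference.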

💬 Research Conclusions:

– TARS reduces the hallucination rate from 26.4% to 13.2% and the cognition value from 2.5 to 0.4, outperforming standard DPO and matching GPT-4o on key metrics.

👉 Paper link: https://huggingface.co/papers/2507.21584

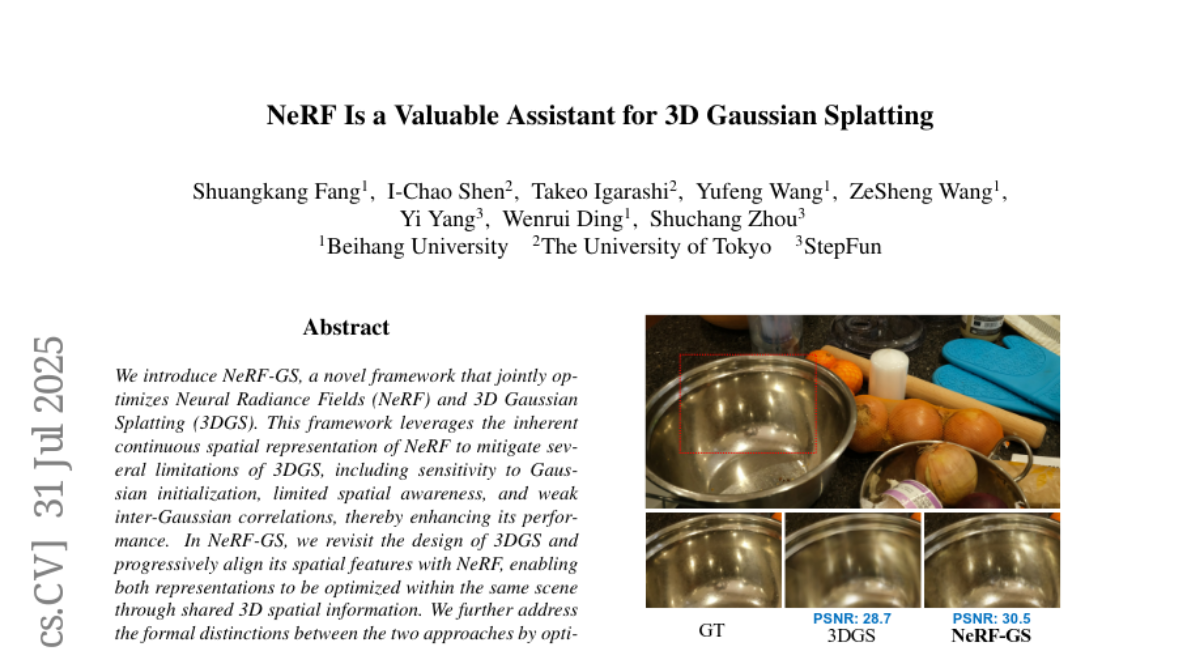

11. NeRF Is a Valuable Assistant for 3D Gaussian Splatting

🔑 Keywords: Neural Radiance Fields, 3D Gaussian Splatting, Spatial Representation, Hybrid Approaches

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce NeRF-GS, a framework that jointly optimizes Neural Radiance Fields and 3D Gaussian Splatting, sharing spatial information between them to improve 3D scene representation and performance.

🛠️ Research Methods:

– NeRF and 3DGS are optimized jointly to align their spatial features, addressing 3DGS limitations such as sensitivity to Gaussian initialization and weak spatial awareness. Residual vectors are also optimized to further enhance performance.

💬 Research Conclusions:

– NeRF-GS surpasses existing methods and achieves state-of-the-art performance, showing that NeRF and 3DGS are complementary and can be combined into efficient hybrid approaches for 3D scene representation.

👉 Paper link: https://huggingface.co/papers/2507.23374

12. Beyond Linear Bottlenecks: Spline-Based Knowledge Distillation for Culturally Diverse Art Style Classification

🔑 Keywords: Kolmogorov-Arnold Networks (KANs), dual-teacher self-supervised frameworks, nonlinear feature correlations, style manifolds

💡 Category: Computer Vision

🌟 Research Objective:

– Improve art style classification by enhancing dual-teacher self-supervised frameworks using Kolmogorov-Arnold Networks to model nonlinear feature correlations and disentangle complex style manifolds.

🛠️ Research Methods:

– Replace conventional MLP projection and prediction heads with Kolmogorov-Arnold Networks in a dual-teacher knowledge distillation framework, retaining complementary guidance from teacher networks.
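In a KAN layer, every input-output edge carries its own learnable univariate function, and each output is the sum of those functions over the inputs. That is what replacing an MLP head with a KAN head means. The NumPy sketch below is a deliberately simplified, forward-pass-only version: it uses degree-1 (piecewise-linear) splines on a fixed uniform grid, and the class name is hypothetical. Practical KANs typically use cubic B-splines plus a residual base activation.

```python
import numpy as np


class PiecewiseLinearKANLayer:
    """y_j = sum_i f_ij(x_i), where each f_ij is a learnable piecewise-linear spline on a shared grid."""

    def __init__(self, in_dim, out_dim, grid_size=8, x_min=-2.0, x_max=2.0, seed=0):
        rng = np.random.default_rng(seed)
        self.grid = np.linspace(x_min, x_max, grid_size)
        # coef[i, j, g]: value of edge function f_ij at grid point g
        self.coef = 0.1 * rng.normal(size=(in_dim, out_dim, grid_size))

    def basis(self, x):
        """Hat-function (degree-1 B-spline) basis: (batch, in_dim) -> (batch, in_dim, grid_size)."""
        h = self.grid[1] - self.grid[0]
        x = np.clip(x, self.grid[0], self.grid[-1])  # inputs outside the grid are clamped
        return np.maximum(0.0, 1.0 - np.abs(x[..., None] - self.grid) / h)

    def __call__(self, x):
        # y[b, j] = sum over inputs i and grid points g of B[b, i, g] * coef[i, j, g]
        return np.einsum("big,ijg->bj", self.basis(x), self.coef)


rng = np.random.default_rng(1)
head = PiecewiseLinearKANLayer(in_dim=4, out_dim=3)
features = rng.normal(size=(5, 4))
print(head(features).shape)  # (5, 3)
```

Each edge function linearly interpolates its coefficients between grid points. Training the coefficients therefore reshapes nonlinear per-feature responses, which a fixed-activation MLP head cannot do edge by edge.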

💬 Research Conclusions:

– The enhanced framework utilizing KANs demonstrates higher Top-1 accuracy on WikiArt and Pandora18k datasets compared to the base dual-teacher architecture, underscoring the importance of KANs in handling complex style representations.

👉 Paper link: https://huggingface.co/papers/2507.23436

13. On the Expressiveness of Softmax Attention: A Recurrent Neural Network Perspective

🔑 Keywords: Softmax attention, Linear attention, Transformer architectures, Quadratic memory requirement, Recurrent neural networks (RNNs)

💡 Category: Foundations of AI

🌟 Research Objective:

– To investigate why softmax attention is more expressive than linear attention by deriving its recurrent form and analyzing its components in RNN terms.

🛠️ Research Methods:

– Derivation of the recurrent form of softmax attention to compare its expressiveness with linear attention.

– Description of softmax attention using the language of recurrent neural networks (RNNs) to dissect its components.
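The recurrent view can be illustrated with a small NumPy sketch. It is a generic illustration, not necessarily the paper's derivation, and it assumes unscaled dot-product scores, toy random inputs, and hypothetical function names.
- Expanding exp(q·k) as a Taylor series gives a feature map φ with φ(q)·φ(k) ≈ exp(q·k).
- Truncating that series yields a linear-attention recurrence with a fixed-size state (S, z), whose first-order case uses the features [1, x].
- Exact causal softmax attention instead needs a state (the key/value cache) that grows with every token.

```python
import math
import numpy as np


def taylor_features(x, order):
    """Feature map with phi(q) @ phi(k) = sum_{n=0}^{order} (q @ k)**n / n!, a truncated exp(q @ k).
    Block n is the flattened n-fold tensor power of x, scaled by 1/sqrt(n!)."""
    feats, power = [np.ones(1)], np.ones(1)
    for n in range(1, order + 1):
        power = np.outer(power, x).ravel()
        feats.append(power / math.sqrt(math.factorial(n)))
    return np.concatenate(feats)


def linear_attention_recurrent(Q, K, V, order):
    """Causal linear attention with a fixed-size state (S, z), updated once per token."""
    D = taylor_features(K[0], order).size
    S = np.zeros((D, V.shape[1]))  # running sum of phi(k_i) v_i^T
    z = np.zeros(D)                # running sum of phi(k_i)
    out = []
    for q, k, v in zip(Q, K, V):
        fk = taylor_features(k, order)
        S += np.outer(fk, v)
        z += fk
        fq = taylor_features(q, order)
        out.append(fq @ S / (fq @ z))
    return np.array(out)


def softmax_attention_recurrent(Q, K, V):
    """Causal softmax attention as a recurrence whose state (the key/value cache) grows every step."""
    keys, values, out = [], [], []
    for q, k, v in zip(Q, K, V):
        keys.append(k)
        values.append(v)
        w = np.exp(np.array(keys) @ q)
        out.append(w @ np.array(values) / w.sum())
    return np.array(out)


rng = np.random.default_rng(0)
T, d = 10, 3
Q, K, V = (0.3 * rng.normal(size=(T, d)) for _ in range(3))
exact = softmax_attention_recurrent(Q, K, V)
errors = {}
for order in (1, 2, 4, 8):
    approx = linear_attention_recurrent(Q, K, V, order)
    errors[order] = float(np.abs(approx - exact).max())
print(errors)  # expected to shrink as the truncation order grows
```

The state size of the truncated recurrence grows as d^order, which hints at why exact softmax attention corresponds to an unbounded (infinite-dimensional) feature state.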

💬 Research Conclusions:

– The study attributes the greater expressiveness of softmax attention, relative to linear attention, to its formulation, and offers insights into the importance of its components and how they interact.

👉 Paper link: https://huggingface.co/papers/2507.23632

14. Flow Equivariant Recurrent Neural Networks

🔑 Keywords: Equivariant neural network, Time-parameterized transformations, Recurrent neural networks, Symmetries, Sequence models

💡 Category: Foundations of AI

🌟 Research Objective:

– Extend equivariant neural network architectures to handle time-parameterized transformations, improving sequence models like RNNs for tasks involving moving stimuli.

🛠️ Research Methods:

– Introduced flow equivariance to standard RNN architectures, focusing on one-parameter Lie subgroups capturing natural transformations over time.

💬 Research Conclusions:

– Flow equivariant models outperform non-equivariant counterparts in training speed, length generalization, and velocity generalization on tasks like next step prediction and sequence classification.

👉 Paper link: https://huggingface.co/papers/2507.14793

15. Enhanced Arabic Text Retrieval with Attentive Relevance Scoring

🔑 Keywords: Dense Passage Retrieval, Attentive Relevance Scoring, semantic relevance, pre-trained Arabic language models, Natural Language Processing

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop an enhanced Dense Passage Retrieval framework specifically designed for the Arabic language to improve retrieval performance and ranking accuracy.

🛠️ Research Methods:

– The introduction of a novel Attentive Relevance Scoring mechanism that utilizes an adaptive scoring function to model semantic relevance more effectively.

– Integration of pre-trained Arabic language models and architectural refinements.
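For orientation, the standard dense-passage-retrieval baseline works as follows. It scores a question-passage pair by the dot product of independently encoded embeddings, and it trains with a softmax over the passages in a batch, so each question's other passages act as negatives. The sketch below shows only that baseline, with toy embeddings standing in for encoder outputs. The paper's Attentive Relevance Scoring replaces the fixed dot product with an adaptive scoring function whose exact form is not given in this summary, so it is not reproduced here. The function names are hypothetical.

```python
import numpy as np


def dot_scores(q_emb, p_emb):
    """Relevance of every question to every passage: (n_q, d) x (n_p, d) -> (n_q, n_p)."""
    return q_emb @ p_emb.T


def in_batch_nll(q_emb, p_emb):
    """DPR-style loss: passage i is the positive for question i; other passages are negatives."""
    s = dot_scores(q_emb, p_emb)
    s = s - s.max(axis=1, keepdims=True)  # stabilize the log-softmax
    log_probs = s - np.log(np.exp(s).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


def rank(q_vec, p_emb, k=3):
    """Indices of the top-k passages for one question."""
    return np.argsort(-(p_emb @ q_vec))[:k]


rng = np.random.default_rng(0)
passages = 3.0 * np.eye(6, 16)                          # toy passage embeddings
questions = passages + 0.01 * rng.normal(size=(6, 16))  # question i is closest to passage i
print(rank(questions[2], passages))
print(in_batch_nll(questions, passages))
```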

💬 Research Conclusions:

– The proposed framework significantly improves ranking accuracy and retrieval performance for Arabic question answering.

– The framework and its code are openly accessible to the public.

👉 Paper link: https://huggingface.co/papers/2507.23404

16. Efficient Machine Unlearning via Influence Approximation

🔑 Keywords: Influence Approximation Unlearning, Machine Unlearning, Incremental Learning, Gradient Optimization

💡 Category: Machine Learning

🌟 Research Objective:

– The objective is to address computational challenges in influence-based unlearning in machine learning models using the Influence Approximation Unlearning (IAU) algorithm.

🛠️ Research Methods:

– The paper establishes a theoretical link between incremental learning and unlearning to efficiently implement machine unlearning by leveraging incremental learning principles and gradient optimization.
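The computational challenge IAU targets comes from classical influence-based unlearning. That approach removes a training point z with a Newton-style update θ ← θ + H⁻¹∇ℓ(z; θ), which requires an inverse Hessian. The sketch below is our own illustration of that classical update, not IAU. It applies the update to L2-regularized least squares, where using the Hessian of the remaining data makes the one-step update exact, and checks it against retraining from scratch. Solving with the Hessian is the step that becomes expensive for large models.

```python
import numpy as np


def fit_ridge(X, y, lam):
    """Minimize 0.5*||X @ theta - y||^2 + 0.5*lam*||theta||^2 in closed form."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)


def unlearn_point(theta, X, y, j, lam):
    """One Newton step on the objective without sample j (exact here because the loss is quadratic)."""
    d = X.shape[1]
    grad_j = (X[j] @ theta - y[j]) * X[j]  # gradient of the removed sample's loss at theta
    H_minus_j = X.T @ X - np.outer(X[j], X[j]) + lam * np.eye(d)
    return theta + np.linalg.solve(H_minus_j, grad_j)


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
y = X @ rng.normal(size=5) + 0.1 * rng.normal(size=50)
lam, j = 1.0, 7
theta_full = fit_ridge(X, y, lam)
theta_unlearned = unlearn_point(theta_full, X, y, j, lam)
theta_retrained = fit_ridge(np.delete(X, j, axis=0), np.delete(y, j), lam)
print(np.max(np.abs(theta_unlearned - theta_retrained)))
```

For non-quadratic losses the same step is only approximate, and forming or inverting the Hessian scales poorly with parameter count. Methods such as IAU aim to avoid that cost.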

💬 Research Conclusions:

– The IAU algorithm provides superior balance in terms of removal guarantee, unlearning efficiency, and model utility, outperforming state-of-the-art methods across various datasets and models.

👉 Paper link: https://huggingface.co/papers/2507.23257

17.