AI Native Daily Paper Digest – 20250910

1. Parallel-R1: Towards Parallel Thinking via Reinforcement Learning

🔑 Keywords: Parallel thinking, Reinforcement learning, Large language models, Progressive curriculum, Cold-start problem

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Enhance the reasoning capabilities of large language models through parallel thinking enabled by a reinforcement learning framework.

🛠️ Research Methods:

– The use of a progressive curriculum to address the cold-start problem in training parallel thinking with reinforcement learning, transitioning from supervised fine-tuning to reinforcement learning for exploration and generalization.

💬 Research Conclusions:

– Parallel-R1 demonstrates significant performance improvements, including a 42.9% improvement over the baseline on AIME25, validating parallel thinking as an effective mid-training exploration scaffold.

👉 Paper link: https://huggingface.co/papers/2509.07980

2. Visual Representation Alignment for Multimodal Large Language Models

🔑 Keywords: VIRAL, MLLMs, vision-centric tasks, VFMs, visual alignment

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce a regularization strategy, VIRAL, to align visual representations of Multimodal Large Language Models (MLLMs) with those of pre-trained Vision Foundation Models (VFMs) to enhance performance on vision-centric tasks.

🛠️ Research Methods:

– Employing VIRAL to ensure the alignment of internal visual representations between MLLMs and VFMs, thereby retaining fine-grained visual details and incorporating additional visual knowledge.

💬 Research Conclusions:

– VIRAL significantly improves the performance of MLLMs in reasoning over complex visual inputs and demonstrates consistent improvements across various tasks in multimodal benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.07979

3. Mini-o3: Scaling Up Reasoning Patterns and Interaction Turns for Visual Search

🔑 Keywords: Mini-o3, Visual Probe Dataset, iterative data collection pipeline, over-turn masking strategy, reinforcement learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce Mini-o3, a system that enhances deep, multi-turn reasoning capabilities in complex visual search tasks.

🛠️ Research Methods:

– Developed a Visual Probe Dataset and an iterative data collection pipeline to support diverse reasoning patterns.

– Introduced an over-turn masking strategy to balance training efficiency and test-time scalability in reinforcement learning.

💬 Research Conclusions:

– Mini-o3 significantly improves performance in visual search tasks, generating trajectories that scale beyond initial training constraints with increased accuracy.

👉 Paper link: https://huggingface.co/papers/2509.07969

4. Reconstruction Alignment Improves Unified Multimodal Models

🔑 Keywords: Reconstruction Alignment, AI-generated summary, Unified multimodal models, Visual understanding, Self-supervised reconstruction loss

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Reconstruction Alignment (RecA), a post-training method to enhance multimodal models.

🛠️ Research Methods:

– Utilize visual embeddings as dense prompts for post-training in Unified multimodal models (UMMs).

– Apply self-supervised reconstruction loss to align visual understanding and generation.

💬 Research Conclusions:

– RecA significantly improves image generation and editing fidelity across various multimodal model architectures with minimal computational resources.

– Outperforms larger models and enhances performance in benchmarks like GenEval, DPGBench, and editing benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.07295

5. UMO: Scaling Multi-Identity Consistency for Image Customization via Matching Reward

🔑 Keywords: Unified Multi-identity Optimization, identity consistency, identity confusion, reinforcement learning, diffusion models

💡 Category: Generative Models

🌟 Research Objective:

– The objective of the research is to introduce UMO, a Unified Multi-identity Optimization framework that enhances identity consistency while reducing identity confusion in multi-reference image customization.

🛠️ Research Methods:

– UMO reformulates multi-identity generation as a global assignment optimization problem using a “multi-to-multi matching” paradigm, achieved through reinforcement learning on diffusion models.

– A scalable customization dataset with both synthesized and real multi-reference images is developed to facilitate UMO’s training.

💬 Research Conclusions:

– UMO significantly boosts identity consistency and diminishes identity confusion in image customization, setting a new state-of-the-art in identity preservation among open-source methods.

👉 Paper link: https://huggingface.co/papers/2509.06818

6. F1: A Vision-Language-Action Model Bridging Understanding and Generation to Actions

🔑 Keywords: Foresight Generation, Mixture-of-Transformer, Vision-Language-Action, Next-Scale Prediction, Modular Reasoning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The paper introduces F1, a pretrained Vision-Language-Action (VLA) framework, aimed at improving task success and generalization in dynamic environments.

🛠️ Research Methods:

– F1 employs a Mixture-of-Transformer architecture with modules for perception, foresight generation, and control, integrating foresight generation into the decision-making pipeline.

💬 Research Conclusions:

– The F1 framework significantly outperforms existing approaches in real-world tasks and simulation benchmarks by achieving higher task success rates and better generalization ability.

👉 Paper link: https://huggingface.co/papers/2509.06951

7. Language Self-Play For Data-Free Training

🔑 Keywords: Language Self-Play, Large Language Models, Reinforcement Learning, Self-Play, Instruction-Following

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to enhance large language models’ performance on instruction-following tasks without additional data using a novel approach called Language Self-Play (LSP).

🛠️ Research Methods:

– The methods leverage a game-theoretic framework of self-play where models improve by competing against themselves.

💬 Research Conclusions:

– The proposed LSP method allows pretrained models to surpass data-driven baselines, enhancing performance on challenging tasks through self-play alone.

👉 Paper link: https://huggingface.co/papers/2509.07414

8. Curia: A Multi-Modal Foundation Model for Radiology

🔑 Keywords: Curia, Foundation models, Cross-modality, Low-data regimes, AI in Healthcare

💡 Category: AI in Healthcare

🌟 Research Objective:

– Introduce Curia as a foundation model to enhance radiological interpretation by demonstrating broad generalization across imaging modalities and low-data settings.

🛠️ Research Methods:

– Curia was trained on a comprehensive corpus of cross-sectional imaging data from a major hospital, encompassing 150,000 exams and totaling 130 TB of data.

💬 Research Conclusions:

– Curia accurately identifies organs, detects conditions such as brain hemorrhages and myocardial infarctions, predicts tumor staging outcomes, and matches or exceeds the performance of radiologists and other foundation models.

👉 Paper link: https://huggingface.co/papers/2509.06830

9. Staying in the Sweet Spot: Responsive Reasoning Evolution via Capability-Adaptive Hint Scaffolding

🔑 Keywords: SEELE, RLVR, problem difficulty, hint length, exploration efficiency

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduce a novel framework, SEELE, to dynamically adjust problem difficulty in RLVR to enhance exploration efficiency and performance in math reasoning tasks.

🛠️ Research Methods:

– Utilizes a supervision-aided RLVR framework that employs adaptive hint lengths, multi-round rollout sampling, and an item response theory model to align problem difficulty with model capability.

💬 Research Conclusions:

– SEELE improves exploration efficiency and outperforms existing methods such as GRPO and SFT by significant margins across various math reasoning benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.06923

10. Emergent Hierarchical Reasoning in LLMs through Reinforcement Learning

🔑 Keywords: Reinforcement Learning, Large Language Models, HICRA, strategic planning, procedural correctness

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance the reasoning abilities of Large Language Models (LLMs) using a two-phase process involving procedural correctness and strategic planning.

🛠️ Research Methods:

– Introduction of the HIerarchy-Aware Credit Assignment (HICRA) algorithm which focuses on high-impact planning tokens.

💬 Research Conclusions:

– HICRA outperforms traditional reinforcement learning algorithms by concentrating optimization efforts on strategic bottlenecks, demonstrating advanced reasoning capabilities in LLMs.

👉 Paper link: https://huggingface.co/papers/2509.03646

11. Causal Attention with Lookahead Keys

🔑 Keywords: CAuSal aTtention, Lookahead Keys, autoregressive property, parallel training, validation perplexity

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce CASTLE, an attention mechanism that updates keys with future context while maintaining autoregressive properties, aiming to improve language modeling performance.

🛠️ Research Methods:

– Developed a mechanism that updates each token’s keys as context unfolds, yet maintains autoregressive properties and derives a mathematical equivalence for efficient parallel training.

💬 Research Conclusions:

– CASTLE outperforms standard causal attention across model scales in language modeling benchmarks by reducing validation perplexity and enhancing performance on various downstream tasks.

👉 Paper link: https://huggingface.co/papers/2509.07301

12. SimpleQA Verified: A Reliable Factuality Benchmark to Measure Parametric Knowledge

🔑 Keywords: Large Language Models, SimpleQA Verified, Fact-checking, F1-score, Parametric Model Factuality

💡 Category: Natural Language Processing

🌟 Research Objective:

– The primary objective is to develop SimpleQA Verified, a benchmark aimed at accurately evaluating the factuality of Large Language Models by addressing previous benchmark limitations.

🛠️ Research Methods:

– The benchmark was created using a multi-stage filtering process, including de-duplication, topic balancing, and source reconciliation, to ensure reliability and challenge.

💬 Research Conclusions:

– SimpleQA Verified provides a more refined and reliable tool for evaluating factuality. It shows improvements in the autorater prompt, with Gemini 2.5 Pro achieving the highest F1-score of 55.6, surpassing models like GPT-5, thereby helping mitigate model hallucinations.

👉 Paper link: https://huggingface.co/papers/2509.07968

13. Directly Aligning the Full Diffusion Trajectory with Fine-Grained Human Preference

🔑 Keywords: Diffusion models, Human preferences, Direct-Align, Semantic Relative Preference Optimization, Aesthetic quality

💡 Category: Generative Models

🌟 Research Objective:

– Enhance the alignment of diffusion models with human preferences by optimizing computational efficiency and minimizing the need for offline reward adaptation.

🛠️ Research Methods:

– Introduce Direct-Align to predefine a noise prior for effective recovery of original images at any time step, avoiding over-optimization.

– Develop Semantic Relative Preference Optimization (SRPO) to allow online reward adjustments with text-conditioned signals, reducing offline fine-tuning dependency.

💬 Research Conclusions:

– By implementing these methods with the FLUX.1.dev model, the optimization process significantly improves human-evaluated realism and aesthetic quality, achieving more than a threefold enhancement.

👉 Paper link: https://huggingface.co/papers/2509.06942

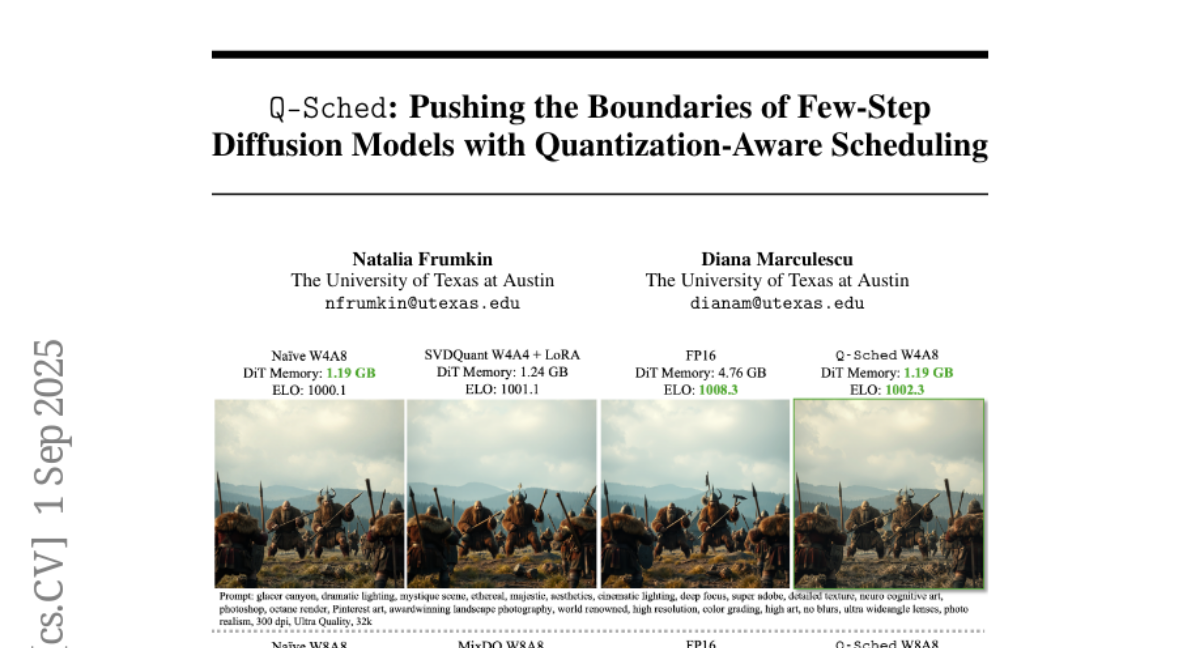

14. Q-Sched: Pushing the Boundaries of Few-Step Diffusion Models with Quantization-Aware Scheduling

🔑 Keywords: Q-Sched, post-training quantization, diffusion models, JAQ loss, FID improvement

💡 Category: Generative Models

🌟 Research Objective:

– The research introduces Q-Sched, a post-training quantization method to reduce model size by 4x while maintaining full-precision accuracy and improving image quality metrics.

🛠️ Research Methods:

– Q-Sched modifies the diffusion model scheduler rather than model weights, using JAQ loss to optimize quantization-aware pre-conditioning coefficients and avoiding full-precision inference during calibration.

💬 Research Conclusions:

– Q-Sched achieves significant improvements in image quality with a 15.5% and 16.6% FID improvement over FP16 models and is validated through a large-scale user study, proving effective on FLUX.1 and SDXL-Turbo.

👉 Paper link: https://huggingface.co/papers/2509.01624

15. Benchmarking Information Retrieval Models on Complex Retrieval Tasks

🔑 Keywords: retrieval models, complex retrieval tasks, LLM-based query expansion, state-of-the-art models

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to assess the performance of state-of-the-art retrieval models on a diverse and realistic set of complex retrieval tasks.

🛠️ Research Methods:

– Construction of a benchmark with complex retrieval tasks and evaluation of current retrieval models against these tasks.

– Exploration of the effects of LLM-based query expansion and rewriting on retrieval quality.

💬 Research Conclusions:

– Even state-of-the-art models struggle with high-quality retrieval in complex tasks.

– LLM-based query expansion does not consistently improve performance, and can even decrease the retrieval quality for the strongest models.

👉 Paper link: https://huggingface.co/papers/2509.07253

16. ΔL Normalization: Rethink Loss Aggregation in RLVR

🔑 Keywords: Delta L Normalization, Reinforcement Learning, Verifiable Rewards, Gradient Variance, Policy Loss

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To propose Delta L Normalization as a method to reduce gradient variance and provide an unbiased policy loss estimate in Reinforcement Learning with Verifiable Rewards (RLVR).

🛠️ Research Methods:

– Analyzed the effect of varying response lengths on policy loss both theoretically and empirically.

– Reformulated the problem as finding a minimum-variance unbiased estimator.

💬 Research Conclusions:

– Delta L Normalization offers unbiased estimates and minimizes gradient variance, achieving superior performance across different model sizes, response lengths, and tasks.

– Proven effectiveness is supported by extensive experiments, with code available on GitHub.

👉 Paper link: https://huggingface.co/papers/2509.07558

17. From Noise to Narrative: Tracing the Origins of Hallucinations in Transformers

🔑 Keywords: Transformer Models, Hallucinations, Sparse Autoencoders, Input Space, Semantic Concepts

💡 Category: Generative Models

🌟 Research Objective:

– To investigate how and when hallucinations arise in pre-trained Transformer models under conditions of controlled uncertainty.

🛠️ Research Methods:

– Utilized sparse autoencoders to capture concept representations and conducted systematic experiments testing semantic activation as input information became unstructured.

💬 Research Conclusions:

– Revealed that Transformer models tend to activate input-insensitive semantic features under uncertainty, leading to hallucinations, which can be predicted from internal activations. This insight has implications for AI alignment with human values, safety, and managing hallucination risks.

👉 Paper link: https://huggingface.co/papers/2509.06938

18.