AI Native Daily Paper Digest – 20251001

1. MCPMark: A Benchmark for Stress-Testing Realistic and Comprehensive MCP Use

🔑 Keywords: MCPMark, LLMs, MCP, AI Agents, Benchmarking

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The research aims to develop MCPMark, a comprehensive benchmark for evaluating MCP use in real-world workflows, focusing on diverse tasks that require enriched interactions with the environment.

🛠️ Research Methods:

– The study utilizes 127 high-quality tasks collaboratively designed by domain experts and AI Agents, employing a minimal agent framework and a tool-calling loop for evaluation.

💬 Research Conclusions:

– MCPMark reveals that current LLMs perform poorly on complex, real-world tasks, with top models like gpt-5-medium achieving only 52.56% pass@1. The extensive tool calls and execution turns indicate the stress-testing nature of MCPMark compared to existing benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.24002

2. The Dragon Hatchling: The Missing Link between the Transformer and Models of the Brain

🔑 Keywords: Biologically Inspired, Large Language Model, Hebbian Learning, Interpretability, Transformer-like Performance

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Dragon Hatchling (BDH), a biologically inspired Large Language Model designed to maintain interpretability while achieving Transformer-like performance.

🛠️ Research Methods:

– Utilizes a scale-free network architecture with Hebbian learning and spiking neurons to emulate brain-like generalization.

– Implements a GPU-friendly, attention-based state sequence learning architecture.

💬 Research Conclusions:

– BDH matches GPT2’s performance on language and translation tasks with the same parameters and training data.

– Demonstrates inherent interpretability and monosemanticity, offering insights into synaptic plasticity and brain model representation.

👉 Paper link: https://huggingface.co/papers/2509.26507

3. Vision-Zero: Scalable VLM Self-Improvement via Strategic Gamified Self-Play

🔑 Keywords: Vision-Zero, vision-language models, reinforcement learning, Strategic Self-Play Framework, Iterative Self-Play Policy Optimization

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Propose Vision-Zero, a framework for enhancing vision-language models through self-improvement using competitive visual games, without human annotation.

🛠️ Research Methods:

– Strategic Self-Play Framework trains models in “Who Is the Spy”-style games, utilizing arbitrary image gameplay to enhance reasoning abilities and generalization.

– Iterative Self-Play Policy Optimization (Iterative-SPO) combines self-play with reinforcement learning, avoiding performance plateau and achieving sustained improvements.

💬 Research Conclusions:

– Vision-Zero achieves state-of-the-art performance in reasoning, chart question answering, and vision-centric understanding tasks using label-free data.

👉 Paper link: https://huggingface.co/papers/2509.25541

4. Winning the Pruning Gamble: A Unified Approach to Joint Sample and Token Pruning for Efficient Supervised Fine-Tuning

🔑 Keywords: Quadrant-based Tuning, Large Language Models, Data Pruning, Error-Uncertainty Plane, Dynamic Pruning

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce a unified framework, Quadrant-based Tuning (Q-Tuning), to optimize both sample and token pruning for efficient supervised fine-tuning of large language models.

🛠️ Research Methods:

– Developed the Error-Uncertainty (EU) Plane to assess utility across samples and tokens.

– Implemented a two-stage strategy with sample-level triage and asymmetric token-pruning, preserving rich informative examples and trimming less necessary tokens.

💬 Research Conclusions:

– Q-Tuning significantly enhances data utilization, achieving a +38% improvement over full-data baselines using only 12.5% of the training data.

– Sets new performance benchmarks across diverse tests, providing a scalable, budget-friendly solution for training large language models under data constraints.

👉 Paper link: https://huggingface.co/papers/2509.23873

5. TruthRL: Incentivizing Truthful LLMs via Reinforcement Learning

🔑 Keywords: Large Language Models, Reinforcement Learning, Hallucination, Truthfulness, Abstention

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The primary objective is to enhance the truthfulness of large language models (LLMs) by balancing accuracy and abstention to reduce hallucinations.

🛠️ Research Methods:

– The implementation of TruthRL using a ternary reward system in reinforcement learning to distinguish between correct answers, hallucinations, and abstentions.

💬 Research Conclusions:

– TruthRL significantly reduces hallucinations by 28.9% and improves truthfulness by 21.1% across various benchmarks, demonstrating superior performance over vanilla RL methods.

👉 Paper link: https://huggingface.co/papers/2509.25760

6. Learning to See Before Seeing: Demystifying LLM Visual Priors from Language Pre-training

🔑 Keywords: Large Language Models, Visual Priors, Pre-training, Multimodal Data, Visual Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To explore how Large Language Models (LLMs) develop and utilize visual priors for vision tasks without direct visual data during language pre-training.

🛠️ Research Methods:

– Conducted over 100 controlled experiments using 500,000 GPU-hours, involving LLM pre-training to visual alignment and supervised multimodal fine-tuning across various model scales and data categories.

💬 Research Conclusions:

– Identified that LLMs develop latent visual reasoning and perception abilities during pre-training, transferable to visual tasks, and proposed a data-centric recipe for pre-training vision-aware LLMs.

👉 Paper link: https://huggingface.co/papers/2509.26625

7. OceanGym: A Benchmark Environment for Underwater Embodied Agents

🔑 Keywords: OceanGym, Multi-modal Large Language Models, underwater embodied agents, perception, adaptability

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop OceanGym, a comprehensive benchmark for evaluating underwater embodied agents in challenging ocean environments, facilitating advancements in AI for perception, planning, and adaptability.

🛠️ Research Methods:

– Utilization of Multi-modal Large Language Models (MLLMs) to integrate perception, memory, and sequential decision-making in a unified agent framework for complex underwater tasks.

💬 Research Conclusions:

– Experiments show significant gaps between current MLLM-driven agents and human experts, emphasizing the challenges of deploying AI in underwater environments. OceanGym provides a high-fidelity platform for developing and testing robust embodied AI solutions.

👉 Paper link: https://huggingface.co/papers/2509.26536

8. More Thought, Less Accuracy? On the Dual Nature of Reasoning in Vision-Language Models

🔑 Keywords: Vision-Language Models, Multimodal Reasoning, Visual Forgetting, VAPO-Thinker-7B, Vision-Anchored Policy Optimization

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Enhance multimodal reasoning by anchoring the process to visual information to improve performance on visual tasks without compromising logical inference.

🛠️ Research Methods:

– Implementation of Vision-Anchored Policy Optimization as a mechanism to guide reasoning toward visually grounded trajectories, countering visual forgetting.

💬 Research Conclusions:

– VAPO-Thinker-7B significantly boosts reliance on visual information, achieving state-of-the-art results across various benchmarks.

– While multimodal reasoning improves logical inference, it can impair perceptual grounding, which VAPO addresses by reducing recognition failures.

👉 Paper link: https://huggingface.co/papers/2509.25848

9. DC-VideoGen: Efficient Video Generation with Deep Compression Video Autoencoder

🔑 Keywords: DC-VideoGen, Deep Compression, video diffusion model, inference latency

💡 Category: Generative Models

🌟 Research Objective:

– To introduce an acceleration framework, DC-VideoGen, that enhances video generation efficiency for pre-trained diffusion models by utilizing a deep compression latent space.

🛠️ Research Methods:

– The framework employs a Deep Compression Video Autoencoder with chunk-causal temporal design and AE-Adapt-V strategy, enabling efficient adaptation with lightweight fine-tuning.

💬 Research Conclusions:

– The adaptation of the Wan-2.1-14B model using DC-VideoGen significantly reduces inference latency by up to 14.8x without quality loss and allows high-resolution video generation on a single GPU.

👉 Paper link: https://huggingface.co/papers/2509.25182

10. Thinking-Free Policy Initialization Makes Distilled Reasoning Models More Effective and Efficient Reasoners

🔑 Keywords: TFPI, RLVR, AI-generated summary, RL convergence, token-efficient reasoning models

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The research aims to enhance performance and reduce token usage in Reinforcement Learning with Verifiable Reward (RLVR) by introducing Thinking-Free Policy Initialization (TFPI).

🛠️ Research Methods:

– The TFPI framework discards thinking content during training through a ThinkFree operation, which aids in bridging long Chain-of-Thought distillation and standard RLVR.

💬 Research Conclusions:

– The study demonstrates that TFPI accelerates RL convergence, improves accuracy, and achieves more token-efficient reasoning models without needing specialized rewards or complex training designs.

👉 Paper link: https://huggingface.co/papers/2509.26226

11. Who’s Your Judge? On the Detectability of LLM-Generated Judgments

🔑 Keywords: J-Detector, Large Language Model, judgment detection, biases, LLM-enhanced features

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose and formalize the task of detecting LLM-generated judgments, focusing on addressing biases and vulnerabilities in sensitive scenarios.

🛠️ Research Methods:

– Development of J-Detector, a neural detector using linguistic and LLM-enhanced features, to identify judgments without relying on textual feedback.

💬 Research Conclusions:

– J-Detector demonstrates effectiveness and interpretability in detecting LLM-generated judgments, enabling the quantification of biases in various datasets.

– The study validates the practical utility of judgment detection in real-world scenarios.

👉 Paper link: https://huggingface.co/papers/2509.25154

12. Thinking Sparks!: Emergent Attention Heads in Reasoning Models During Post Training

🔑 Keywords: post-training techniques, supervised fine-tuning, reinforcement learning, attention heads, structured reasoning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to elucidate how post-training techniques, like supervised fine-tuning and reinforcement learning, contribute to the emergence and evolution of functionally specialized attention heads in large reasoning models.

🛠️ Research Methods:

– Utilization of circuit analysis to study the effects of different training regimes on the development of attention heads. Comparative analysis across different model families and training strategies, including distillation, SFT, and group relative policy optimization.

💬 Research Conclusions:

– The research reveals that specialized attention heads evolve with varied training regimes, impacting the model’s reasoning capabilities. The study identifies a tension between complex reasoning and elementary computations, suggesting that effective training policies need to balance sophisticated reasoning with reliable execution to avoid performance trade-offs like over-thinking failure modes.

👉 Paper link: https://huggingface.co/papers/2509.25758

13. dParallel: Learnable Parallel Decoding for dLLMs

🔑 Keywords: dParallel, Diffusion large language models, Parallel decoding, Certainty-forcing distillation

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to enhance the parallel decoding capability of Diffusion Large Language Models (dLLMs) by introducing a method called dParallel, reducing decoding steps without compromising performance.

🛠️ Research Methods:

– A novel training strategy named certainty-forcing distillation is deployed, aimed at distilling the model to achieve high certainty on masked tokens rapidly and in parallel.

💬 Research Conclusions:

– dParallel effectively reduces decoding steps dramatically (from 256 to 30 on GSM8K and from 256 to 24 on MBPP), achieving significant speedups (8.5x and 10.5x respectively) while maintaining model performance.

👉 Paper link: https://huggingface.co/papers/2509.26488

14. VitaBench: Benchmarking LLM Agents with Versatile Interactive Tasks in Real-world Applications

🔑 Keywords: LLM-based agents, VitaBench, real-world settings, interactive tasks, AI Systems and Tools

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduction of VitaBench, a benchmark designed to evaluate LLM-based agents on complex, real-world interactive tasks.

🛠️ Research Methods:

– Utilization of 66 tools to create 100 cross-scenario tasks and 300 single-scenario tasks that test agents’ capabilities across temporal and spatial reasoning, utilization of complex tools, and proactive task management.

💬 Research Conclusions:

– Comprehensive evaluation reveals limited success rates, with even advanced models achieving only a 30% success rate on cross-scenario tasks, underscoring the challenge’s significance in pushing the boundaries of AI agent development.

👉 Paper link: https://huggingface.co/papers/2509.26490

15. Learning Human-Perceived Fakeness in AI-Generated Videos via Multimodal LLMs

🔑 Keywords: DeeptraceReward, AI-generated videos, deepfake traces, multimodal language models, reward models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces DeeptraceReward, a benchmark dataset designed to annotate human-perceived deepfake traces in AI-generated videos, to train and evaluate multimodal language models for video generation detection.

🛠️ Research Methods:

– DeeptraceReward comprises 4.3K detailed annotations across 3.3K high-quality videos, providing natural-language explanations, bounding-box regions, and temporal labels for perceived traces. The research develops reward models mimicking human judgments in these contexts.

💬 Research Conclusions:

– The 7B reward model trained on DeeptraceReward surpasses GPT-5 by 34.7% in identifying, grounding, and explaining fake clues. The analysis shows that fine-grained deepfake trace detection presents more challenges than binary classification, with a notable difficulty progression from natural language explanations to spatial and temporal labeling.

👉 Paper link: https://huggingface.co/papers/2509.22646

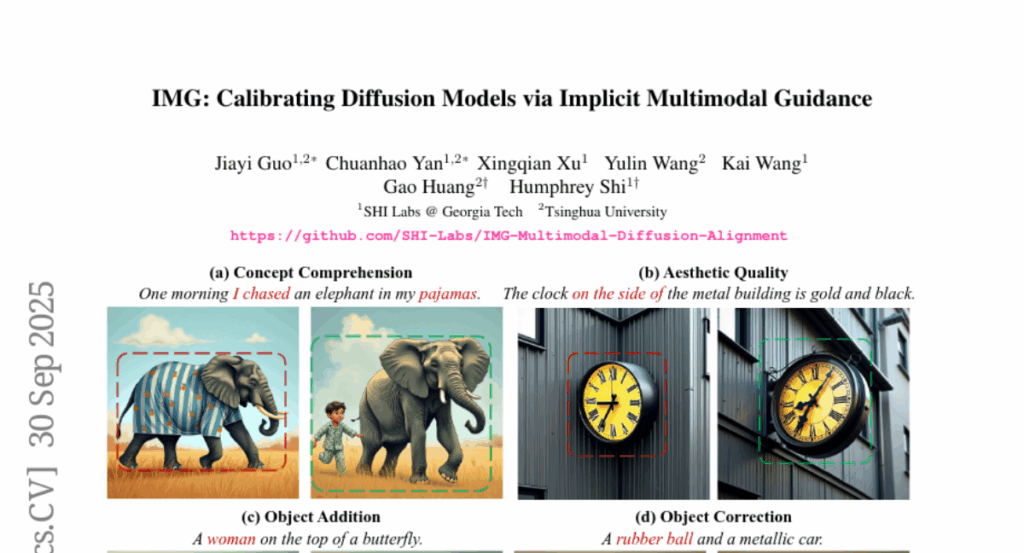

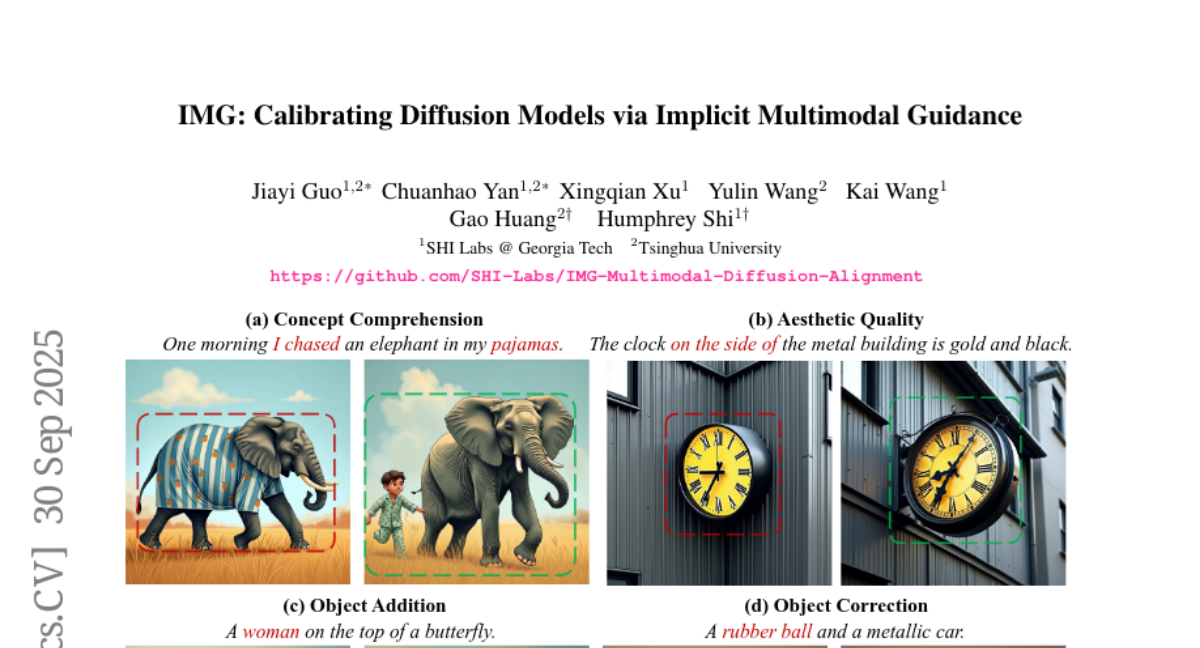

16. IMG: Calibrating Diffusion Models via Implicit Multimodal Guidance

🔑 Keywords: Implicit Multimodal Guidance, multimodal alignment, diffusion-generated images, multimodal large language model, Implicit Aligner

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance multimodal alignment between diffusion-generated images and input prompts without using additional data or editing processes.

🛠️ Research Methods:

– The study introduces Implicit Multimodal Guidance (IMG), utilizing a multimodal large language model to detect misalignments and an Implicit Aligner to adjust diffusion conditioning features.

💬 Research Conclusions:

– IMG demonstrates superior performance over existing alignment methods and serves as a flexible adapter to enhance other finetuning-based alignment methods.

👉 Paper link: https://huggingface.co/papers/2509.26231

17. Efficient Audio-Visual Speech Separation with Discrete Lip Semantics and Multi-Scale Global-Local Attention

🔑 Keywords: AVSS, dual-path lightweight video encoder, AI-generated summary, global-local attention blocks

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Dolphin, an efficient Audio-Visual Speech Separation (AVSS) method that reduces computational cost while maintaining high separation quality.

🛠️ Research Methods:

– Utilizes DP-LipCoder, a dual-path lightweight video encoder, to transform lip-motion into discrete audio-aligned semantic tokens.

– Incorporates a lightweight encoder-decoder separator with global-local attention blocks to capture multi-scale dependencies efficiently.

💬 Research Conclusions:

– Dolphin outperforms the state-of-the-art in separation quality and efficiency, achieving over 50% reduction in parameters, more than 2.4x reduction in MACs, and over 6x faster GPU inference speed.

– Provides a practical and deployable AVSS solution suitable for real-world applications with publicly available resources.

👉 Paper link: https://huggingface.co/papers/2509.23610

18. MotionRAG: Motion Retrieval-Augmented Image-to-Video Generation

🔑 Keywords: MotionRAG, diffusion models, Context-Aware Motion Adaptation, retrieval-augmented framework, zero-shot generalization

💡 Category: Generative Models

🌟 Research Objective:

– To enhance video generation by improving motion realism through the integration of motion priors from reference videos using a retrieval-augmented framework.

🛠️ Research Methods:

– Developed a retrieval-based pipeline using a video encoder and specialized resamplers to extract high-level motion features.

– Utilized an in-context learning approach via a causal transformer architecture for motion adaptation.

– Implemented an attention-based motion injection adapter for integrating motion features into pretrained video diffusion models.

💬 Research Conclusions:

– The framework achieves significant improvements in motion realism with negligible computational overhead and facilitates zero-shot generalization to new domains by updating the retrieval database without further retraining.

👉 Paper link: https://huggingface.co/papers/2509.26391

19. DeepScientist: Advancing Frontier-Pushing Scientific Findings Progressively

🔑 Keywords: DeepScientist, Bayesian Optimization, AI Tasks, Findings Memory, Scientific Discovery

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce DeepScientist, an AI system that autonomously conducts scientific discovery using Bayesian Optimization to surpass human state-of-the-art methods.

🛠️ Research Methods:

– Utilizes a hierarchical evaluation process comprising “hypothesize, verify, and analyze” alongside Findings Memory to explore and exploit hypotheses over extensive GPU hours.

💬 Research Conclusions:

– Generated approximately 5,000 scientific ideas with 1100 validated, outperforming human state-of-the-art methods on three AI tasks by significant margins.

– Open-source commitment for further research enablement.

👉 Paper link: https://huggingface.co/papers/2509.26603

20. DA^2: Depth Anything in Any Direction

🔑 Keywords: Panoramic depth estimation, zero-shot generalization, data curation engine, SphereViT, spherical geometric consistency

💡 Category: Computer Vision

🌟 Research Objective:

– To develop DA², a zero-shot generalizable and end-to-end panoramic depth estimator addressing challenges like data scarcity and spherical distortions.

🛠️ Research Methods:

– Utilized a data curation engine to generate high-quality panoramic depth data from perspective and created a large dataset of panoramic RGB-depth pairs.

– Implemented SphereViT to handle spherical distortions and enforce spherical geometric consistency in panoramic image features.

💬 Research Conclusions:

– DA² achieves state-of-the-art performance with a significant 38% improvement on AbsRel over the strongest zero-shot baseline.

– Demonstrated superior zero-shot generalization and efficiency compared to previous methods. The code and curated data will be publicly available.

👉 Paper link: https://huggingface.co/papers/2509.26618

21. Mem-α: Learning Memory Construction via Reinforcement Learning

🔑 Keywords: Mem-alpha, reinforcement learning, memory management, generalization, large language models

💡 Category: Natural Language Processing

🌟 Research Objective:

– The primary objective is to enhance memory management in large language models through Mem-alpha, a reinforcement learning framework, to improve performance and generalization in understanding long-term information.

🛠️ Research Methods:

– The framework trains agents using a specialized dataset featuring diverse multi-turn interaction patterns and evaluation questions. Agents learn memory management by processing sequential information to extract, store, and update content effectively, using a reward signal based on question-answering accuracy.

💬 Research Conclusions:

– Mem-alpha demonstrates significant improvements over existing memory-augmented agents. It generalizes remarkably well, achieving effective performance on sequences over 13 times the training length.

👉 Paper link: https://huggingface.co/papers/2509.25911

22. Muon Outperforms Adam in Tail-End Associative Memory Learning

🔑 Keywords: Muon optimizer, LLMs, associative memory, heavy-tailed data, balanced learning

💡 Category: Machine Learning

🌟 Research Objective:

– To investigate and explain the underlying success mechanisms of the Muon optimizer when training Large Language Models (LLMs), particularly its interaction with associative memory parameters.

🛠️ Research Methods:

– Examined the impact of Muon on transformer components by ablating the components it optimizes, focusing on Value and Output (VO) attention weights and Feed-Forward Networks (FFNs).

– Conducted theoretical analyses using a one-layer associative memory model under class-imbalanced data to compare the performance of Muon and Adam optimizers.

💬 Research Conclusions:

– Muon optimizer achieves more isotropic singular spectra and effectively optimizes tail classes in heavy-tailed data distributions, resulting in more balanced and effective learning compared to the Adam optimizer.

– The update rule of Muon aligns with the outer-product structure of linear associative memories, which promotes consistent balanced learning across classes.

👉 Paper link: https://huggingface.co/papers/2509.26030

23. OffTopicEval: When Large Language Models Enter the Wrong Chat, Almost Always!

🔑 Keywords: Operational Safety, OffTopicEval, Prompt-based Steering, Large Language Model (LLM), System-prompt Grounding

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to address operational safety in Large Language Models (LLMs) and proposes OffTopicEval as a benchmark for this purpose.

🛠️ Research Methods:

– Evaluation of operational safety across six model families using OffTopicEval, alongside the introduction of prompt-based steering methods like query grounding and system-prompt grounding to improve out-of-distribution refusal.

💬 Research Conclusions:

– Despite variations in performance, all evaluated LLMs show high operational unsafety. However, prompt-based steering methods demonstrate potential in significantly enhancing the operational safety of these models.

👉 Paper link: https://huggingface.co/papers/2509.26495

24. Humanline: Online Alignment as Perceptual Loss

🔑 Keywords: Online alignment, Prospect theory, Perceptual bias, Humanline variants

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to bridge the performance gap between online and offline alignment methods by introducing perceptual biases into offline training.

🛠️ Research Methods:

– The research utilizes prospect theory to propose a human-centric explanation for online alignment methods’ superiority and suggests integrating perceptual distortions of probability into objectives.

💬 Research Conclusions:

– Introducing perceptual and human-centric biases into training objectives, even with offline off-policy data, can match the performance of online alignment methods on various tasks.

👉 Paper link: https://huggingface.co/papers/2509.24207

25. InfoAgent: Advancing Autonomous Information-Seeking Agents

🔑 Keywords: InfoAgent, deep research agent, data synthesis pipeline, self-hosted search infrastructure, reinforcement learning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The primary aim is to develop InfoAgent, a deep research agent that leverages a custom data synthesis pipeline and self-hosted search infrastructure to outperform existing agents in tool use and reasoning.

🛠️ Research Methods:

– The construction of entity trees, sub-tree sampling with entity fuzzification to increase query difficulty and a two-stage post-training process using cold-start supervised finetuning followed by reinforcement learning to enhance tool use and reasoning.

💬 Research Conclusions:

– InfoAgent demonstrates superior performance achieving higher accuracy rates on benchmarks such as BrowseComp, BrowseComp-ZH, and Xbench-DS when compared to previous open-source agents like WebSailor-72B and DeepDive-32B.

👉 Paper link: https://huggingface.co/papers/2509.25189

26. Voice Evaluation of Reasoning Ability: Diagnosing the Modality-Induced Performance Gap

🔑 Keywords: Reasoning Ability, Voice-Interactive Systems, Text-Versus-Voice Comparison, Real-Time Interaction, Low-Latency Plateau

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– VERA aims to benchmark the reasoning abilities of voice-interactive systems under real-time conversational constraints and compare their performance with text models.

🛠️ Research Methods:

– The study evaluates 2,931 voice-native episodes derived from text benchmarks across five tracks (Math, Web, Science, Long-Context, Factual) to preserve reasoning difficulty, conducting direct comparisons of voice and text models.

💬 Research Conclusions:

– The research reveals significant performance disparities, with voice systems underperforming compared to text models. It demonstrates a low-latency plateau for fast voice systems and highlights the limitations of common mitigation strategies, emphasizing the need for improved architectural designs to decouple reasoning from narration for real-time voice assistant development.

👉 Paper link: https://huggingface.co/papers/2509.26542

27. A Cartography of Open Collaboration in Open Source AI: Mapping Practices, Motivations, and Governance in 14 Open Large Language Model Projects

🔑 Keywords: open large language models, democratizing AI, open collaboration, organizational models, community engagement

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore the collaboration methods in the development and reuse lifecycle of open large language models (LLMs) and identify diverse motivations and organizational models among developers.

🛠️ Research Methods:

– Conducted an exploratory analysis through semi-structured interviews with developers from 14 open LLM projects across various sectors such as grassroots projects, research institutes, startups, and Big Tech companies worldwide.

💬 Research Conclusions:

– Collaboration in open LLM projects extends beyond the models themselves, involving datasets, benchmarks, open-source frameworks, leaderboards, knowledge sharing, and compute partnerships.

– Developers have diverse motivations including democratizing AI access, promoting open science, building regional ecosystems, and expanding language representation.

– Open LLM projects showcase five distinct organizational models, varying in control centralization and community engagement strategies.

👉 Paper link: https://huggingface.co/papers/2509.25397

28. Attention as a Compass: Efficient Exploration for Process-Supervised RL in Reasoning Models

🔑 Keywords: PSRL, AttnRL, Reinforcement Learning, Large Language Models, attention scores

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To introduce a novel PSRL framework (AttnRL) that enhances exploration efficiency in reasoning models by leveraging high attention positions and adaptive sampling strategies.

🛠️ Research Methods:

– Development of a framework that branches from positions with high attention scores and employs an adaptive sampling strategy based on problem difficulty and historical batch size.

– Implementation of a one-step off-policy training pipeline to improve sampling efficiency.

💬 Research Conclusions:

– The AttnRL framework consistently outperforms existing methods in mathematical reasoning benchmarks in terms of performance and sampling/training efficiency.

👉 Paper link: https://huggingface.co/papers/2509.26628