AI Native Daily Paper Digest – 20251023

1. Every Attention Matters: An Efficient Hybrid Architecture for Long-Context Reasoning

🔑 Keywords: Ring-linear model series, hybrid architecture, linear attention, softmax attention, reinforcement learning

💡 Category: Machine Learning

🌟 Research Objective:

– The objective is to introduce the Ring-linear model series, focusing on improving training efficiency and reducing inference costs through a hybrid architecture that combines linear and softmax attention mechanisms.

🛠️ Research Methods:

– Utilized a hybrid architecture integrating linear and softmax attention to decrease I/O and computational overhead.

– Explored different attention mechanism ratios for optimal model structure.

– Leveraged a self-developed FP8 operator library for better training efficiency.

💬 Research Conclusions:

– The Ring-linear models demonstrate significant cost reductions in inference, performing at 1/10 the cost compared to traditional models.

– Enhanced training efficiency and maintained SOTA performance in long-term optimization for complex reasoning tasks.

👉 Paper link: https://huggingface.co/papers/2510.19338

2. BAPO: Stabilizing Off-Policy Reinforcement Learning for LLMs via Balanced Policy Optimization with Adaptive Clipping

🔑 Keywords: BAlanced Policy Optimization, Adaptive Clipping, Reinforcement Learning, policy entropy, large language models

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To address the challenges in off-policy reinforcement learning by improving sample efficiency, stability, and performance in large language models through dynamic adjustment of clipping bounds.

🛠️ Research Methods:

– Theoretical and empirical analysis identifies key imbalances in optimizations and introduces the Entropy-Clip Rule.

– Proposal and implementation of BAlanced Policy Optimization with Adaptive Clipping (BAPO) to dynamically adjust clipping bounds.

💬 Research Conclusions:

– BAPO enhances fast, stable, and data-efficient training across diverse off-policy scenarios.

– BAPO models surpassed open-source and proprietary counterparts in benchmarks, demonstrating state-of-the-art performance.

👉 Paper link: https://huggingface.co/papers/2510.18927

3. Language Models are Injective and Hence Invertible

🔑 Keywords: Transformer language models, injective, transparency, continuous representations, SipIt

💡 Category: Natural Language Processing

🌟 Research Objective:

– To establish the injectivity of transformer language models from initial training, allowing exact reconstruction of input from hidden activations.

🛠️ Research Methods:

– Mathematical proof and billions of empirical collision tests on state-of-the-art language models to verify injectivity.

– Introduction of SipIt algorithm for efficient reconstruction of input text from hidden activations.

💬 Research Conclusions:

– Transformer language models are proven to be injective and lossless, with implications for improving transparency, interpretability, and safe deployment.

👉 Paper link: https://huggingface.co/papers/2510.15511

4. LoongRL:Reinforcement Learning for Advanced Reasoning over Long Contexts

🔑 Keywords: LoongRL, Reinforcement Learning, Long-Context Reasoning, multi-hop QA, KeyChain

💡 Category: Reinforcement Learning

🌟 Research Objective:

– LoongRL aims to improve long-context reasoning in large language models by transforming short multi-hop QA into high-difficulty tasks, enhancing accuracy and generalization.

🛠️ Research Methods:

– The study introduces KeyChain, a synthesis approach that converts short multi-hop QA into complex long-context tasks. This method involves inserting UUID chains to create distractions, requiring the model to use a plan-retrieve-reason-recheck pattern to identify and answer the true question.

💬 Research Conclusions:

– The application of LoongRL significantly enhances long-context multi-hop QA accuracy, achieving substantial gains on model tests and rivaling much larger models. It maintains the model’s short-context reasoning abilities while successfully passing all stress tests.

👉 Paper link: https://huggingface.co/papers/2510.19363

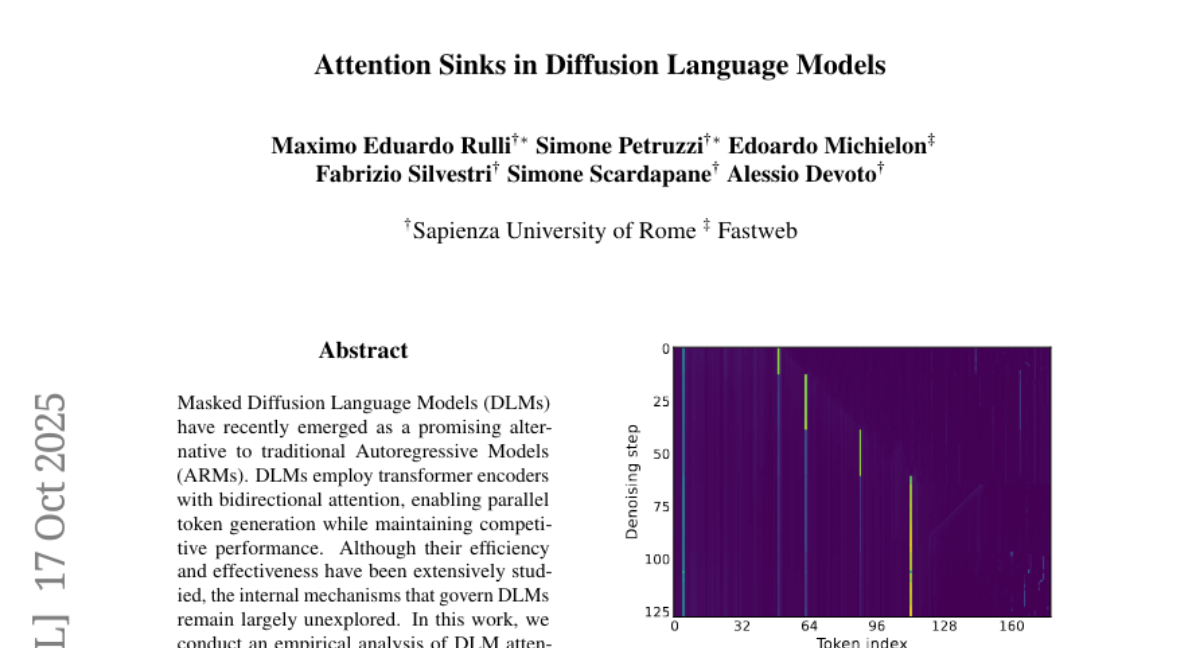

5. Attention Sinks in Diffusion Language Models

🔑 Keywords: Masked Diffusion Language Models, Autoregressive Models, attention sinking, robustness, bidirectional attention

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to empirically analyze the attention patterns in Masked Diffusion Language Models and compare them to Autoregressive Models, focusing specifically on the phenomenon of attention sinking.

🛠️ Research Methods:

– Conducted an empirical analysis of attention patterns within Masked Diffusion Language Models, noting their dynamic sink positions and robustness against removal.

💬 Research Conclusions:

– Masked Diffusion Language Models exhibit attention sinks with distinct characteristics that differ from Autoregressive Models, such as dynamic sink positions and greater robustness, as masking sinks only slightly degrades performance.

👉 Paper link: https://huggingface.co/papers/2510.15731

6. GigaBrain-0: A World Model-Powered Vision-Language-Action Model

🔑 Keywords: GigaBrain-0, VLA foundation model, world model-generated data, cross-task generalization, policy robustness

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce GigaBrain-0, a VLA foundation model to improve real-world performance on complex manipulation tasks by leveraging world model-generated data.

🛠️ Research Methods:

– Utilize various types of data generation (e.g., video, real2real, human, view, sim2real transfers).

– Incorporate RGBD input modeling and embodied Chain-of-Thought (CoT) supervision to enhance spatial reasoning and policy robustness.

💬 Research Conclusions:

– GigaBrain-0 significantly surpasses traditional models in terms of generalization and performance across different appearances, placements, and viewpoints.

– Introduction of GigaBrain-0-Small, a lightweight variant, ensures efficiency on devices like NVIDIA Jetson AGX Orin.

👉 Paper link: https://huggingface.co/papers/2510.19430

7. Unified Reinforcement and Imitation Learning for Vision-Language Models

🔑 Keywords: Unified Reinforcement and Imitation Learning, Vision-Language Models (VLMs), Lightweight Models, Reinforcement Learning, Text Generation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Unified Reinforcement and Imitation Learning (RIL) to create efficient and lightweight vision-language models.

🛠️ Research Methods:

– Combines reinforcement learning with adversarial imitation learning using an LLM-based discriminator to guide smaller student models by mimicking larger teacher models.

💬 Research Conclusions:

– The proposed RIL algorithm enables student models to achieve performance gains, significantly narrowing and occasionally surpassing the performance gap with leading state-of-the-art open- and closed-source VLMs.

👉 Paper link: https://huggingface.co/papers/2510.19307

8. VideoAgentTrek: Computer Use Pretraining from Unlabeled Videos

🔑 Keywords: VideoAgentTrek, Video2Action, GUI interaction data, AI-generated summary, supervised fine-tuning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Develop VideoAgentTrek to automatically extract and utilize GUI interaction data from web-scale videos for training computer-use agents without manual annotation.

🛠️ Research Methods:

– Utilized a pipeline combining Video2Action, with components including a video grounding model and an action-content recognizer, applied to 39,000 YouTube tutorial videos.

💬 Research Conclusions:

– The pipeline improved task success rates from 9.3% to 15.8% and step accuracy from 64.1% to 69.3%, demonstrating that internet videos can effectively provide high-quality supervision for training computer-use agents.

👉 Paper link: https://huggingface.co/papers/2510.19488

9. DaMo: Data Mixing Optimizer in Fine-tuning Multimodal LLMs for Mobile Phone Agents

🔑 Keywords: AI-generated summary, DaMo, Multimodal Large Language Models, Mobile Phone Agents, PhoneAgentBench

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop DaMo, an optimizer for data mixtures aimed at enhancing the performance of Multimodal Large Language Models in mobile phone tasks.

🛠️ Research Methods:

– Employed a trainable network to predict optimal data mixtures by forecasting task performance.

– Introduced PhoneAgentBench, a benchmark for evaluating MLLMs on multimodal mobile phone tasks.

💬 Research Conclusions:

– DaMo achieved a 3.38% improvement in performance on PhoneAgentBench compared to other methods.

– Demonstrated a superior 2.57% average score improvement across multiple established benchmarks.

– Proved scalability and effectiveness in optimizing different model architectures with a 12.47% improvement on the BFCL-v3 task.

👉 Paper link: https://huggingface.co/papers/2510.19336

10. Pico-Banana-400K: A Large-Scale Dataset for Text-Guided Image Editing

🔑 Keywords: Pico-Banana-400K, instruction-based image editing, multimodal models, text-guided image editing, Nano-Banana

💡 Category: Computer Vision

🌟 Research Objective:

– Introduction of Pico-Banana-400K, a comprehensive dataset for instruction-based image editing, designed to overcome current limitations in available datasets.

🛠️ Research Methods:

– Constructed using Nano-Banana to generate diverse edit pairs from real images, maintaining content integrity and instruction faithfulness through MLLM-based quality scoring.

💬 Research Conclusions:

– Pico-Banana-400K offers a robust foundation for training and benchmarking advancements in text-guided image editing models with its large-scale, high-quality, and diverse content.

👉 Paper link: https://huggingface.co/papers/2510.19808

11. olmOCR 2: Unit Test Rewards for Document OCR

🔑 Keywords: olmOCR 2, Reinforcement Learning, State-of-the-art, OCR, Verifiable Rewards

💡 Category: Computer Vision

🌟 Research Objective:

– Develop and enhance an OCR system, olmOCR 2, for converting digitized print documents into naturally ordered plain text using an advanced vision language model.

🛠️ Research Methods:

– Utilized a specialized 7B vision language model trained with reinforcement learning with verifiable rewards, incorporating synthetic documents and extensive unit tests.

💬 Research Conclusions:

– Achieved state-of-the-art performance in OCR tasks, particularly enhancing math formula conversion, table parsing, and handling multi-column layouts with the olmOCR-Bench benchmark.

👉 Paper link: https://huggingface.co/papers/2510.19817

12. Directional Reasoning Injection for Fine-Tuning MLLMs

🔑 Keywords: Multimodal Large Language Models, Reasoning Ability, Supervised Fine-Tuning, Model Merging, Gradient Space

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the reasoning ability of multimodal large language models (MLLMs) while reducing computational costs.

🛠️ Research Methods:

– Utilization of a method called Directional Reasoning Injection for Fine-Tuning (DRIFT) to transfer reasoning knowledge in the gradient space.

💬 Research Conclusions:

– DRIFT consistently improves reasoning performance over naive merging and supervised fine-tuning, achieving efficiency comparable to training-heavy methods at significantly lower costs.

👉 Paper link: https://huggingface.co/papers/2510.15050

13. FinSight: Towards Real-World Financial Deep Research

🔑 Keywords: FinSight, Multi-agent framework, CAVM architecture, Iterative Vision-Enhanced Mechanism, Chain-of-Analysis

💡 Category: AI in Finance

🌟 Research Objective:

– Introduce FinSight, a novel multi-agent framework designed to generate high-quality multimodal financial reports.

🛠️ Research Methods:

– Utilizes the CAVM architecture for flexible data collection and report generation.

– Employs an Iterative Vision-Enhanced Mechanism for refining visual outputs.

– Features a two-stage Writing Framework to ensure coherent and citation-aware reporting.

💬 Research Conclusions:

– FinSight demonstrates superior factual accuracy, analytical depth, and presentation quality, outperforming existing systems.

👉 Paper link: https://huggingface.co/papers/2510.16844

14. Decomposed Attention Fusion in MLLMs for Training-Free Video Reasoning Segmentation

🔑 Keywords: Decomposed Attention Fusion, Multimodal large language models, Video Object Segmentation, Attention Maps, Video QA Task

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Enhance video object segmentation by refining attention maps in a training-free manner using DecAF.

🛠️ Research Methods:

– Developed Decomposed Attention Fusion (DecAF) with contrastive object-background fusion and complementary video-frame fusion mechanisms.

– Utilized attention-guided SAM2 prompting for fine-grained mask extraction.

💬 Research Conclusions:

– DecAF effectively suppresses irrelevant activations, enhances object-focused cues, and outperforms existing training-free methods.

– Achieves performance on par with training-based methods in video object segmentation benchmarks.

👉 Paper link: https://huggingface.co/papers/2510.19592

15. OmniNWM: Omniscient Driving Navigation World Models

🔑 Keywords: OmniNWM, panoramic navigation world model, 3D occupancy, rule-based dense rewards, autonomous driving

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce OmniNWM, a unified world model that enhances autonomous driving through comprehensive state, action, and reward dimensions.

🛠️ Research Methods:

– Utilizes Plucker ray-maps for precise control and a forcing strategy for high-quality video generation.

– Leverages 3D occupancy to define dense rewards without relying on external models.

💬 Research Conclusions:

– OmniNWM demonstrates state-of-the-art performance in video generation, control accuracy, and stability, offering a reliable closed-loop evaluation framework.

👉 Paper link: https://huggingface.co/papers/2510.18313

16. Are they lovers or friends? Evaluating LLMs’ Social Reasoning in English and Korean Dialogues

🔑 Keywords: large language models, social reasoning, interpersonal relationships, dialogue dataset, socially-aware language models

💡 Category: Human-AI Interaction

🌟 Research Objective:

– This study introduces SCRIPTS, a dialogue dataset in English and Korean, to evaluate the social reasoning capabilities of large language models in inferring interpersonal relationships.

🛠️ Research Methods:

– Analysis involves evaluating nine models on their ability to determine probabilistic relational labels in a dataset annotated by native Korean and English speakers.

💬 Research Conclusions:

– Current language models show significant shortcomings in social reasoning, with performance disparities between languages and challenges in recognizing interpersonal nuances. The research highlights the necessity for developing socially-aware language models.

👉 Paper link: https://huggingface.co/papers/2510.19028

17. KORE: Enhancing Knowledge Injection for Large Multimodal Models via Knowledge-Oriented Augmentations and Constraints

🔑 Keywords: KORE, knowledge adaptation, knowledge retention, catastrophic forgetting

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The main objective of the research is to introduce KORE, a method designed to inject new knowledge into large multimodal models while preserving existing knowledge.

🛠️ Research Methods:

– KORE employs knowledge-oriented augmentations and covariance matrix constraints. It structures knowledge items for more effective learning and stores previous knowledge in the covariance matrix of linear layer activations, minimizing interference during fine-tuning.

💬 Research Conclusions:

– KORE significantly improves new knowledge injection performance and effectively mitigates catastrophic forgetting, as demonstrated by extensive experiments on various large multimodal models.

👉 Paper link: https://huggingface.co/papers/2510.19316

18. ColorAgent: Building A Robust, Personalized, and Interactive OS Agent

🔑 Keywords: AI Agent, Reinforcement Learning, Multi-Agent Framework, Personalized Interaction, Android

💡 Category: Human-AI Interaction

🌟 Research Objective:

– The objective is to develop ColorAgent, an OS agent, that engages in long-horizon, personalized, and proactive user interactions.

🛠️ Research Methods:

– Utilizes step-wise reinforcement learning and a multi-agent framework to enhance the model’s capabilities for robust and personalized user engagement.

💬 Research Conclusions:

– ColorAgent achieved high success rates on AndroidWorld and AndroidLab benchmarks, setting a new state of the art while acknowledging current evaluation limitations.

👉 Paper link: https://huggingface.co/papers/2510.19386

19. From Charts to Code: A Hierarchical Benchmark for Multimodal Models

🔑 Keywords: Chart2Code, Multimodal Models, Code Generation, Hierarchical Benchmark

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Chart2Code, a hierarchical benchmark designed to evaluate the chart understanding and code generation capabilities of Large Multimodal Models (LMMs), with increasing complexity across three levels.

🛠️ Research Methods:

– The benchmark contains 2,023 tasks and evaluates 25 SoTA LMMs using multi-level evaluation metrics to assess code correctness and the visual fidelity of rendered charts.

💬 Research Conclusions:

– The benchmark highlights the challenges in the field, as demonstrated by GPT-5’s performance, driving advances in multimodal reasoning and the development of more robust general-purpose LMMs.

👉 Paper link: https://huggingface.co/papers/2510.17932

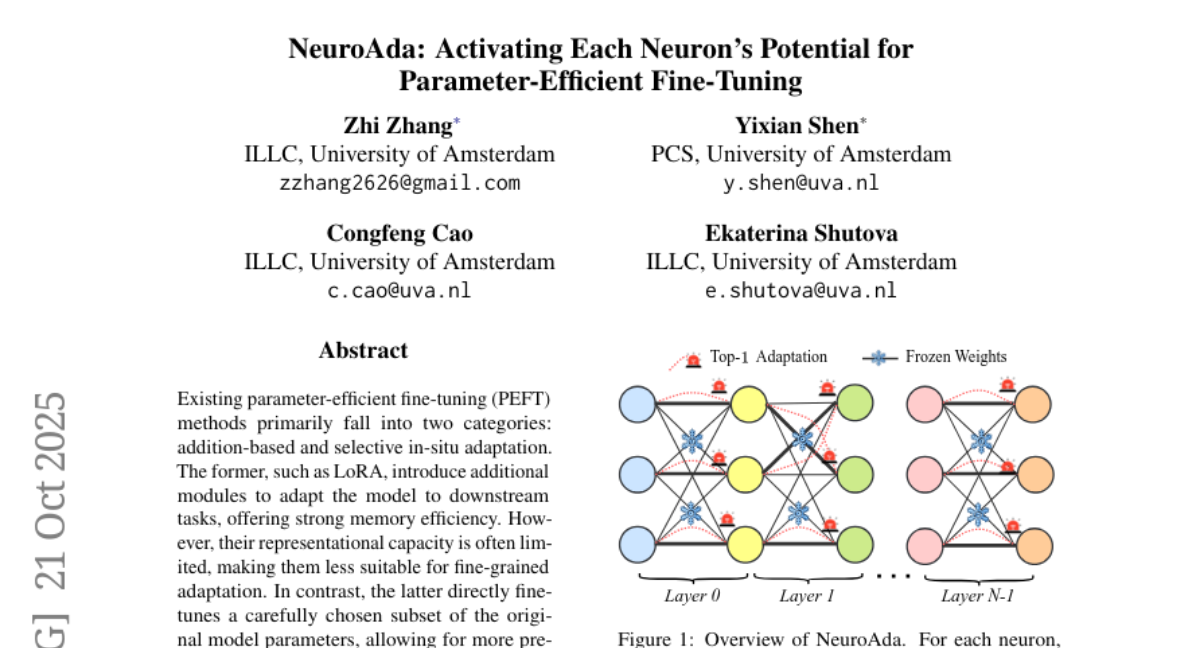

20. NeuroAda: Activating Each Neuron’s Potential for Parameter-Efficient Fine-Tuning

🔑 Keywords: NeuroAda, PEFT, bypass connections, parameter-efficient fine-tuning, memory efficiency

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop NeuroAda, a parameter-efficient fine-tuning method that allows fine-grained model adaptation while maintaining high memory efficiency.

🛠️ Research Methods:

– Combines selective adaptation with the introduction of bypass connections, updating only these connections during fine-tuning.

💬 Research Conclusions:

– NeuroAda achieves state-of-the-art performance in both natural language generation and understanding tasks, achieving significant memory savings with as little as ≤0.02% trainable parameters.

👉 Paper link: https://huggingface.co/papers/2510.18940

21. ProfBench: Multi-Domain Rubrics requiring Professional Knowledge to Answer and Judge

🔑 Keywords: ProfBench, Large Language Models (LLMs), Human-Expert Evaluation, Performance Disparities, Extended Thinking

💡 Category: Natural Language Processing

🌟 Research Objective:

– To evaluate large language models (LLMs) in professional domains through human-expert evaluation criteria.

🛠️ Research Methods:

– Development of ProfBench, which includes over 7000 response-criterion pairs evaluated by human-experts in diverse professional fields.

– Utilization of robust and cost-effective LLM-Judges to assess ProfBench rubrics while addressing self-enhancement bias.

💬 Research Conclusions:

– ProfBench presents significant challenges to state-of-the-art LLMs, with top models achieving only 65.9% performance.

– Notable performance disparities exist between proprietary and open-weight models.

– Insights highlight the role of extended thinking in tackling complex professional-domain tasks.

👉 Paper link: https://huggingface.co/papers/2510.18941

22. Steering Autoregressive Music Generation with Recursive Feature Machines

🔑 Keywords: Recursive Feature Machines, AI-generated music, control, activation space, MusicGen

💡 Category: Generative Models

🌟 Research Objective:

– The study introduces MusicRFM to provide real-time, fine-grained control over AI-generated music by steering internal activations of pre-trained models, enhancing musical note accuracy with minimal impact on prompt fidelity.

🛠️ Research Methods:

– MusicRFM employs Recursive Feature Machines to analyze internal gradients and identify concept directions in the activation space, corresponding to musical attributes. Lightweight RFM probes are trained to discover these directions, which are then injected back into the model during inference.

💬 Research Conclusions:

– The method effectively balances control and generation quality, improving target musical note accuracy from 0.23 to 0.82, while maintaining prompt adherence close to the baseline. The research also releases code to encourage further exploration in the music domain.

👉 Paper link: https://huggingface.co/papers/2510.19127

23. TheMCPCompany: Creating General-purpose Agents with Task-specific Tools

🔑 Keywords: AI-generated summary, REST APIs, Tool-calling agents, Model Context Protocol, Large Language Models

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Evaluate tool-calling agents using REST APIs for real-world service interactions, focusing on performance in simple versus complex enterprise environments.

🛠️ Research Methods:

– Developed TheMCPCompany benchmark with over 18,000 tools via REST APIs. Utilized manually annotated ground-truth tools to test potential improvements in efficiency and cost using tool-calling agents.

💬 Research Conclusions:

– Advanced reasoning models excel in simpler environments but face significant challenges in complex enterprise settings. Improving reasoning and retrieval models is necessary for effectively navigating and solving complex tasks using tens of thousands of tools.

👉 Paper link: https://huggingface.co/papers/2510.19286

24. RIR-Mega: a large-scale simulated room impulse response dataset for machine learning and room acoustics modeling

🔑 Keywords: RIR-Mega, Room impulse responses, RT60, Hugging Face Datasets, Random Forest

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To present RIR-Mega, a large dataset of simulated room impulse responses designed to aid in dereverberation, robust speech recognition, source localization, and room acoustics estimation.

🛠️ Research Methods:

– Utilizes a compact and machine-friendly metadata schema, a Hugging Face Datasets loader, scripts for metadata checks and checksums, and a small Random Forest model on lightweight time and spectral features to predict RT60 from waveforms.

💬 Research Conclusions:

– The dataset shows a mean absolute error near 0.013 s and a root mean square error near 0.022 s, supporting reproducible studies with public access to the dataset and code.

👉 Paper link: https://huggingface.co/papers/2510.18917

25. MINED: Probing and Updating with Multimodal Time-Sensitive Knowledge for Large Multimodal Models

🔑 Keywords: Large Multimodal Models, Temporal Awareness, Knowledge Editing

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To propose MINED, a benchmark that evaluates temporal awareness in Large Multimodal Models (LMMs) by assessing six key dimensions through 11 tasks.

🛠️ Research Methods:

– Construction of MINED using Wikipedia by two professional annotators, focusing on time-sensitive knowledge samples spanning six knowledge types.

💬 Research Conclusions:

– Gemini-2.5-Pro excels in temporal awareness with the highest CEM score, while most open-source LMMs fall short. LMMs have strong performance in organization knowledge but struggle with sports knowledge.

– Knowledge editing methods show potential in updating LMMs’ time-sensitive knowledge effectively in single editing scenarios.

👉 Paper link: https://huggingface.co/papers/2510.19457

26. Learning from the Best, Differently: A Diversity-Driven Rethinking on Data Selection

🔑 Keywords: Orthogonal Diversity-Aware Selection, Large Language Models, Principal Component Analysis, Diversity, Quality

💡 Category: Natural Language Processing

🌟 Research Objective:

– Enhance large language model performance through a novel data selection algorithm that balances quality and diversity.

🛠️ Research Methods:

– The Orthogonal Diversity-Aware Selection (ODiS) algorithm uses orthogonal decomposition of evaluation dimensions via Principal Component Analysis to ensure diverse data selection. A Roberta-based scorer evaluates and selects top-scored data within orthogonal dimensions.

💬 Research Conclusions:

– Models trained with ODiS-selected data outperform baselines on benchmarks, showcasing the necessity of orthogonal, diversity-aware data selection for improved model performance.

👉 Paper link: https://huggingface.co/papers/2510.18909

27. AlphaOPT: Formulating Optimization Programs with Self-Improving LLM Experience Library

🔑 Keywords: Optimization modeling, AlphaOPT, LLM, Feedback Learning, AI Systems and Tools

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The research aims to improve optimization modeling across industries using AlphaOPT, a self-improving library that allows a Language Learning Model (LLM) to learn from limited demonstrations and solver feedback without retraining.

🛠️ Research Methods:

– AlphaOPT operates through a continual two-phase cycle: Library Learning which reflects on failed attempts for better insights, and Library Evolution which refines applicability conditions to enhance task transferability.

💬 Research Conclusions:

– AlphaOPT efficiently learns from limited demonstrations and continually expands without costly retraining. It makes knowledge explicit and interpretable, showing a steady improvement with data, and outperforms baselines on the out-of-distribution OptiBench dataset.

👉 Paper link: https://huggingface.co/papers/2510.18428