AI Native Daily Paper Digest – 20251029

1. InteractComp: Evaluating Search Agents With Ambiguous Queries

🔑 Keywords: Interactive Mechanisms, Query Ambiguity, Search Agents, Benchmark, Interaction Capabilities

💡 Category: Human-AI Interaction

🌟 Research Objective:

– The paper aims to introduce InteractComp, a benchmark designed to evaluate how well search agents can recognize and resolve query ambiguity through interaction.

🛠️ Research Methods:

– A target-distractor methodology was employed, constructing 210 expert-curated questions across 9 domains to test agents’ ability to handle genuine ambiguity via interaction.

💬 Research Conclusions:

– Evaluation of 17 models revealed poor performance, with the best model achieving only 13.73% accuracy. It was found that interaction capabilities have stagnated over 15 months despite improvements in search performance.

👉 Paper link: https://huggingface.co/papers/2510.24668

2. Tongyi DeepResearch Technical Report

🔑 Keywords: Large Language Model, Agentic Capabilities, End-to-End Training Framework, Scalable Reasoning, AI-generated Summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Tongyi DeepResearch, an agentic large language model designed for long-horizon, deep information-seeking research tasks.

🛠️ Research Methods:

– Developed using an end-to-end training framework combining agentic mid-training and post-training with an automated data synthesis pipeline.

💬 Research Conclusions:

– Achieves state-of-the-art performance on multiple agentic deep research benchmarks and is open-sourced to support the community.

👉 Paper link: https://huggingface.co/papers/2510.24701

3. AgentFold: Long-Horizon Web Agents with Proactive Context Management

🔑 Keywords: LLM-based web agents, context management, AgentFold, cognitive workspace, deep consolidations

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce AgentFold, a novel proactive context management paradigm for improving performance of LLM-based web agents on long-horizon tasks.

🛠️ Research Methods:

– Utilize dynamic context folding inspired by human cognitive processes for context management, enabling granular condensations and deep consolidations.

💬 Research Conclusions:

– AgentFold-30B-A3B achieves superior performance on benchmarks, surpassing larger open-source models and proprietary agents.

👉 Paper link: https://huggingface.co/papers/2510.24699

4. RoboOmni: Proactive Robot Manipulation in Omni-modal Context

🔑 Keywords: Multimodal Large Language Models, Vision-Language-Action, intention recognition, Perceiver-Thinker-Talker-Executor, RoboOmni

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop the RoboOmni framework using an end-to-end omni-modal LLM to improve robotic manipulation by inferring user intentions from spoken dialogue, environmental sounds, and visual cues.

🛠️ Research Methods:

– Introduction of cross-modal contextual instructions for intent derivation.

– Development of RoboOmni, which unifies intention recognition, interaction confirmation, and action execution through spatiotemporal fusion of auditory and visual signals.

– Creation of the OmniAction dataset comprising 140k episodes to enhance training for proactive intention recognition.

💬 Research Conclusions:

– RoboOmni surpasses current text- and ASR-based baselines in success rate, inference speed, and provides effective proactive assistance in both simulation and real-world settings.

👉 Paper link: https://huggingface.co/papers/2510.23763

5. Game-TARS: Pretrained Foundation Models for Scalable Generalist Multimodal Game Agents

🔑 Keywords: generalist agents, unified action space, multimodal data, large-scale pre-training

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to develop Game-TARS, a generalist game agent with a unified action space that excels across various domains and benchmarks through large-scale pre-training.

🛠️ Research Methods:

– Game-TARS is trained with human-aligned native keyboard-mouse inputs, using a scalable action space and techniques like a decaying continual loss and Sparse-Thinking strategy to enhance reasoning while controlling inference cost.

💬 Research Conclusions:

– Game-TARS demonstrates superior performance in open-world Minecraft tasks, nearly matches the generality of humans in unseen web 3d games, and surpasses state-of-the-art models in FPS benchmarks. It highlights the potential of scalable action representations and large-scale pre-training in developing broad computer-use abilities.

👉 Paper link: https://huggingface.co/papers/2510.23691

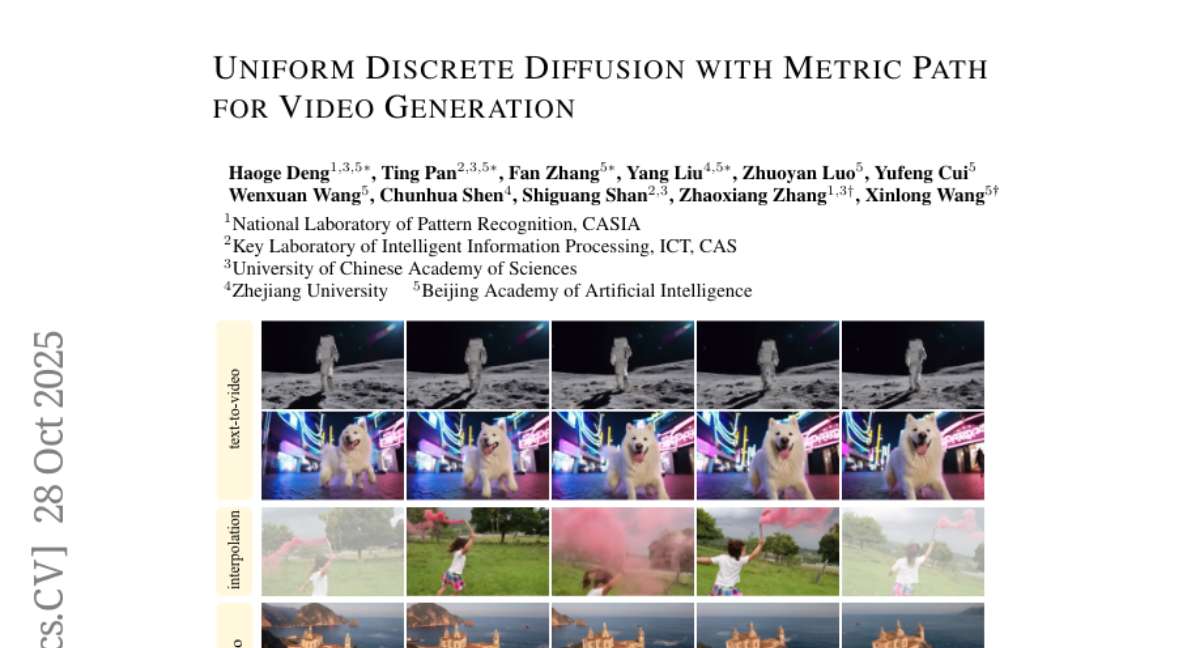

6. Uniform Discrete Diffusion with Metric Path for Video Generation

🔑 Keywords: URSA, discrete generative model, Linearized Metric Path, Resolution-dependent Timestep Shifting, video generation

💡 Category: Generative Models

🌟 Research Objective:

– The main aim is to bridge the gap between discrete and continuous approaches in video generation, enhancing scalability to high-resolution and long-duration synthesis with fewer inference steps.

🛠️ Research Methods:

– Utilization of the URSA framework, which incorporates Linearized Metric Path and Resolution-dependent Timestep Shifting. An asynchronous temporal fine-tuning strategy further extends its capabilities to tasks like interpolation and image-to-video generation.

💬 Research Conclusions:

– URSA consistently outperforms existing discrete models, achieving performances comparable to state-of-the-art continuous diffusion methods in both video and image generation tasks. Code and models are made publicly available for further exploration.

👉 Paper link: https://huggingface.co/papers/2510.24717

7. OSWorld-MCP: Benchmarking MCP Tool Invocation In Computer-Use Agents

🔑 Keywords: OSWorld-MCP, multimodal agents, tool invocation, GUI operation, decision-making

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce OSWorld-MCP, the first comprehensive benchmark for evaluating computer-use agents’ capabilities in tool invocation, GUI operation, and decision-making in real-world scenarios.

🛠️ Research Methods:

– Developed a novel automated code-generation pipeline and combined its tools with curated selections from existing tools. Conducted extensive evaluations with rigorous manual validation resulting in 158 high-quality tools for assessment.

💬 Research Conclusions:

– OSWorld-MCP shows that integrating tool invocation significantly improves task success rates and sets a new standard for multimodal agents’ performance in complex environments, although tool invocation rates indicate there is still room for improvement.

👉 Paper link: https://huggingface.co/papers/2510.24563

8. Repurposing Synthetic Data for Fine-grained Search Agent Supervision

🔑 Keywords: Entity-aware Group Relative Policy Optimization, E-GRPO, Reinforcement Learning, Question-Answering, Entity Match Rate

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance search agents by integrating entity information into the reward function, thereby improving accuracy and efficiency in knowledge-intensive tasks.

🛠️ Research Methods:

– Introduction of a novel framework called Entity-aware Group Relative Policy Optimization (E-GRPO), which formulates a dense entity-aware reward function and assigns partial rewards to incorrect samples based on their entity match rate.

💬 Research Conclusions:

– E-GRPO consistently outperforms the GRPO baseline, achieving superior accuracy and more efficient reasoning policies that require fewer tool calls, demonstrating a more effective approach to training search agents.

👉 Paper link: https://huggingface.co/papers/2510.24694

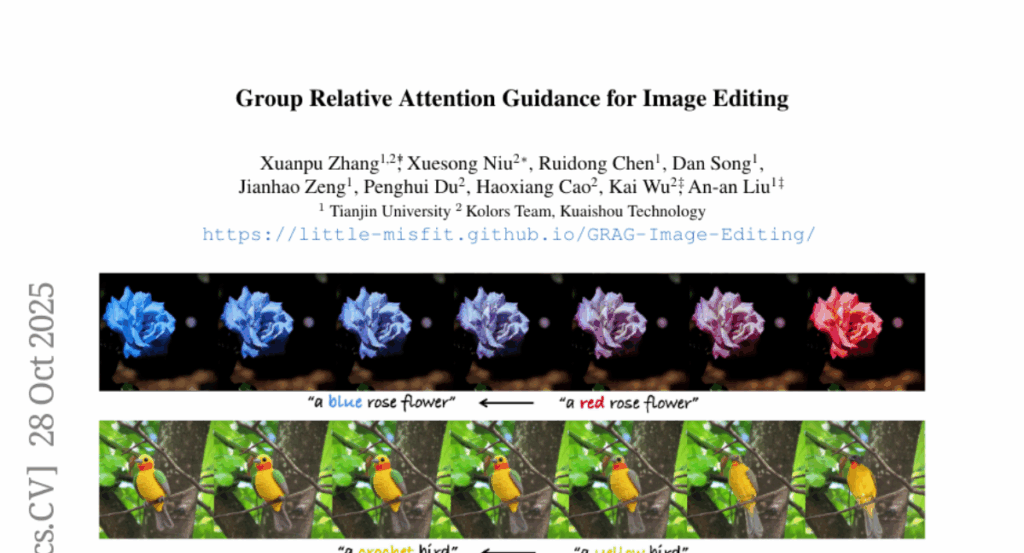

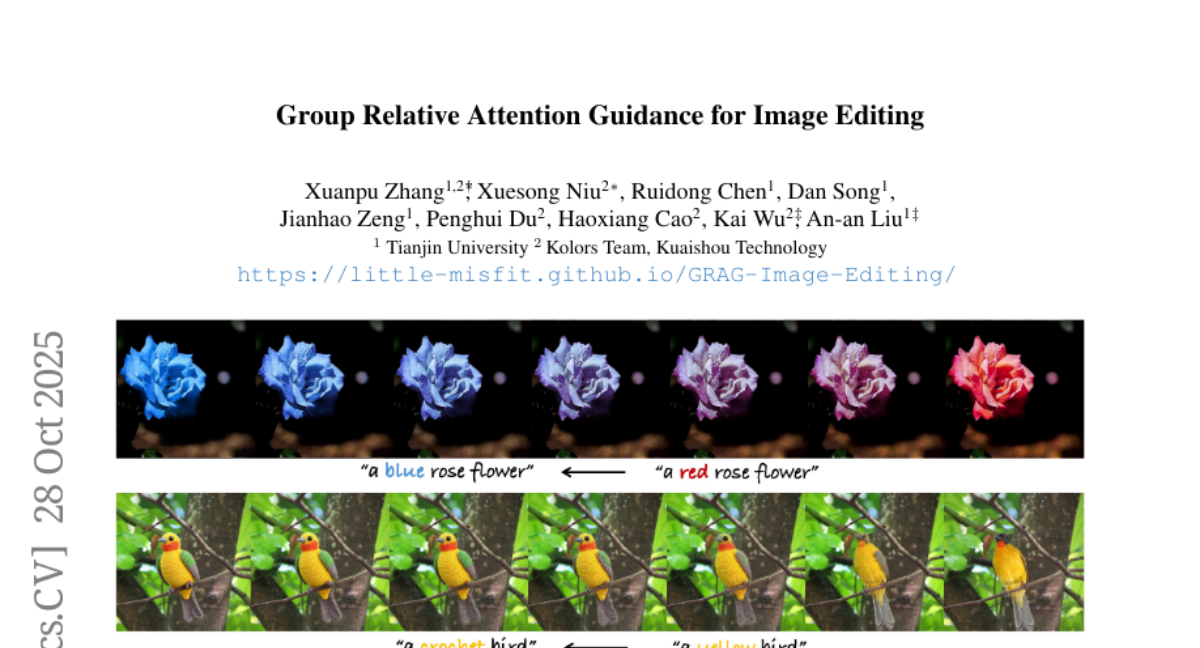

9. Group Relative Attention Guidance for Image Editing

🔑 Keywords: Group Relative Attention Guidance, Diffusion-in-Transformer, MM-Attention, bias vector, Classifier-Free Guidance

💡 Category: Generative Models

🌟 Research Objective:

– Enhance image editing quality by introducing Group Relative Attention Guidance in Diffusion-in-Transformer models for fine-grained control over editing intensity.

🛠️ Research Methods:

– Investigation of MM-Attention mechanism to understand token and bias vector relationships, leading to the modulation of token deltas for improved editing control.

💬 Research Conclusions:

– GRAG provides smoother and more precise editing intensity control compared to existing methods, requires minimal integration effort, and improves overall image editing quality.

👉 Paper link: https://huggingface.co/papers/2510.24657

10. AgentFrontier: Expanding the Capability Frontier of LLM Agents with ZPD-Guided Data Synthesis

🔑 Keywords: ZPD-guided, large language model, data synthesis, state-of-the-art performance, complex reasoning tasks

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to enhance the capabilities of large language models by training them on tasks just beyond their current abilities, using a ZPD-guided data synthesis approach.

🛠️ Research Methods:

– Introduced the AgentFrontier Engine, an automated pipeline to synthesize high-quality, multidisciplinary data within the LLM’s Zone of Proximal Development.

– Utilized both continued pre-training with knowledge-intensive data and targeted post-training on complex reasoning tasks.

💬 Research Conclusions:

– The approach results in the AgentFrontier-30B-A3B model achieving state-of-the-art results on demanding benchmarks, demonstrating the scalability and effectiveness of ZPD-guided data synthesis in building advanced LLM agents.

👉 Paper link: https://huggingface.co/papers/2510.24695

11. WebLeaper: Empowering Efficiency and Efficacy in WebAgent via Enabling Info-Rich Seeking

🔑 Keywords: WebLeaper, Large Language Model, information seeking, tree-structured reasoning, search efficiency

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to improve information seeking efficiency and effectiveness by constructing high-coverage tasks and generating efficient solution trajectories.

🛠️ Research Methods:

– The research introduces the WebLeaper framework, utilizing tree-structured reasoning and curated Wikipedia tables with three task synthesis variants: Basic, Union, and Reverse-Union.

💬 Research Conclusions:

– The results from experiments on five information seeking benchmarks demonstrate consistent improvements in both effectiveness and efficiency over strong baselines.

👉 Paper link: https://huggingface.co/papers/2510.24697

12. ParallelMuse: Agentic Parallel Thinking for Deep Information Seeking

🔑 Keywords: ParallelMuse, deep information-seeking agents, parallel thinking, reasoning trajectories

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance problem-solving capabilities by improving efficiency in path reuse and reasoning compression in deep information-seeking agents.

🛠️ Research Methods:

– A two-stage paradigm: Functionality-Specified Partial Rollout and Compressed Reasoning Aggregation, aimed at improving exploration efficiency and synthesizing coherent answers.

💬 Research Conclusions:

– The proposed methodology demonstrates up to 62% performance improvement with a 10-30% reduction in exploratory token consumption.

👉 Paper link: https://huggingface.co/papers/2510.24698

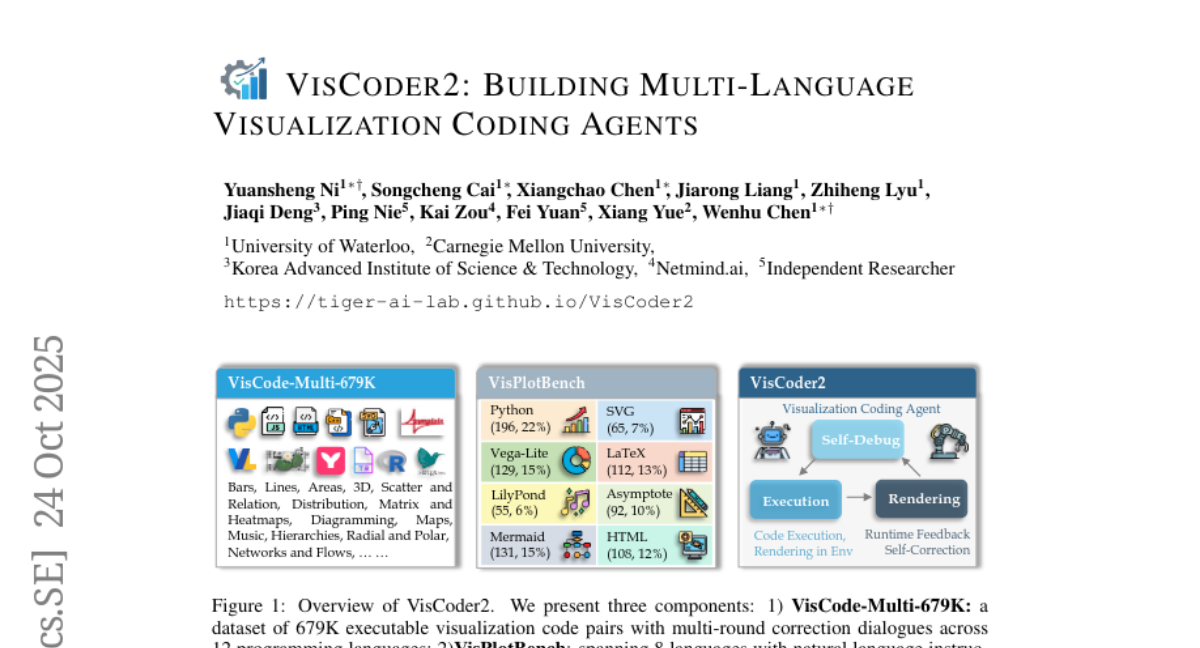

13. VisCoder2: Building Multi-Language Visualization Coding Agents

🔑 Keywords: VisCoder2, VisCode-Multi-679K, VisPlotBench, Large language models, Visualization code

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Address limitations in current coding agents by introducing robust datasets and models for visualization code generation and debugging.

🛠️ Research Methods:

– Developed VisCode-Multi-679K, a large-scale dataset for multi-language visualization.

– Created VisPlotBench, a benchmark for evaluating iterative self-debugging in visualization tasks.

💬 Research Conclusions:

– The VisCoder2 model family significantly surpasses open-source models and comes close to proprietary ones while improving execution pass rates, especially in complex languages.

👉 Paper link: https://huggingface.co/papers/2510.23642

14. Latent Sketchpad: Sketching Visual Thoughts to Elicit Multimodal Reasoning in MLLMs

🔑 Keywords: Latent Sketchpad, Multimodal Large Language Models, internal visual scratchpad, generative visual thought, human-computer interaction

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the reasoning and visual thinking capabilities of Multimodal Large Language Models by integrating an internal visual scratchpad.

🛠️ Research Methods:

– Introduction of Latent Sketchpad featuring Context-Aware Vision Head and Sketch Decoder to enable generative visual thought and interpretation.

– Evaluation conducted on a new dataset, MazePlanning, across various MLLMs including Gemma3 and Qwen2.5-VL.

💬 Research Conclusions:

– The Latent Sketchpad framework demonstrates enhanced reasoning performance and generalizes effectively across different MLLMs, opening possibilities for enriched human-computer interaction and broader applications.

👉 Paper link: https://huggingface.co/papers/2510.24514

15. STAR-Bench: Probing Deep Spatio-Temporal Reasoning as Audio 4D Intelligence

🔑 Keywords: Audio 4D intelligence, STAR-Bench, sound dynamics, Foundational Acoustic Perception, Holistic Spatio-Temporal Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– This study presents STAR-Bench as a benchmark designed to evaluate Audio 4D intelligence, focusing on sound dynamics in both time and 3D space, aiming to uncover gaps in fine-grained perceptual reasoning of current models.

🛠️ Research Methods:

– The research implements STAR-Bench, constituting a Foundational Acoustic Perception setting and a Holistic Spatio-Temporal Reasoning setting, combining procedure-synthesized and physics-simulated audio with human annotation processes.

💬 Research Conclusions:

– STAR-Bench uncovers significant gaps in model capabilities, especially when compared to human performance, and it highlights the limitations in both closed-source and open-source models in terms of perception, knowledge, and reasoning.

👉 Paper link: https://huggingface.co/papers/2510.24693

16. Routing Matters in MoE: Scaling Diffusion Transformers with Explicit Routing Guidance

🔑 Keywords: ProMoE, Mixture-of-Experts, conditional routing, prototypical routing, AI-generated summary

💡 Category: Computer Vision

🌟 Research Objective:

– To enhance expert specialization in Diffusion Transformers through ProMoE, achieving state-of-the-art performance on ImageNet.

🛠️ Research Methods:

– Introduced a two-step router in the MoE framework with conditional and prototypical routing to partition image tokens and refine their assignments based on semantic content.

💬 Research Conclusions:

– ProMoE surpasses state-of-the-art methods in ImageNet benchmarks, with routing contrastive loss enhancing intra-expert coherence and inter-expert diversity, showing the importance of semantic guidance in vision MoE.

👉 Paper link: https://huggingface.co/papers/2510.24711

17. Critique-RL: Training Language Models for Critiquing through Two-Stage Reinforcement Learning

🔑 Keywords: Critique-RL, reinforcement learning, critiquing language models, discriminability, helpfulness

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper introduces Critique-RL, an online reinforcement learning approach aimed at enhancing critiquing language models without strong supervision by utilizing a two-stage optimization strategy.

🛠️ Research Methods:

– The methodology involves a two-player paradigm where the actor generates responses and the critic provides feedback. It emphasizes a two-stage optimization: Stage I focuses on improving the critic’s discriminability with direct, rule-based rewards, while Stage II targets the critic’s helpfulness through indirect rewards based on actor refinement.

💬 Research Conclusions:

– The experimentation with Critique-RL reveals substantial performance improvements, such as a 9.02% gain in in-domain tasks and a 5.70% gain in out-of-domain tasks for Qwen2.5-7B, underscoring its potential effectiveness.

👉 Paper link: https://huggingface.co/papers/2510.24320

18. Agent Data Protocol: Unifying Datasets for Diverse, Effective Fine-tuning of LLM Agents

🔑 Keywords: Agent Data Protocol, AI Agents, Standardized Datasets, Performance Gain, Supervised Finetuning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to address the fragmentation of agent training data and improve performance across various tasks by introducing the Agent Data Protocol (ADP) as a standardization tool.

🛠️ Research Methods:

– Developed the ADP, a representation language to unify diverse agent datasets into a standardized format.

– Converted 13 existing agent training datasets into ADP format for training on multiple frameworks.

💬 Research Conclusions:

– The adoption of ADP led to an average performance gain of approximately 20% over base models.

– Achieved state-of-the-art or near-state-of-the-art results in standard coding, browsing, tool use, and research benchmarks without domain-specific tuning.

– The release of all code and data suggests a move toward more standardized, scalable, and reproducible AI agent training.

👉 Paper link: https://huggingface.co/papers/2510.24702

19. Beyond Reasoning Gains: Mitigating General Capabilities Forgetting in Large Reasoning Models

🔑 Keywords: RECAP, Reinforcement Learning with Verifiable Rewards, Dynamic Objective Reweighting, General Knowledge Preservation, Multimodal Reasoning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study introduces RECAP, a dynamic objective reweighting strategy aimed at enhancing reinforcement learning systems with verifiable rewards, focusing on preserving general knowledge and improving reasoning.

🛠️ Research Methods:

– The research employs a replay strategy with dynamic objective reweighting, using short-horizon signals of convergence and instability to redistribute focus from saturated objectives to underperforming ones, applied end-to-end within existing RLVR pipelines.

💬 Research Conclusions:

– Experiments on benchmarks such as Qwen2.5-VL-3B and Qwen2.5-VL-7B show that RECAP not only preserves general capabilities but also enhances reasoning through more flexible trade-offs among in-task rewards.

👉 Paper link: https://huggingface.co/papers/2510.21978

20. ATLAS: Adaptive Transfer Scaling Laws for Multilingual Pretraining, Finetuning, and Decoding the Curse of Multilinguality

🔑 Keywords: ATLAS, Multilingual Scaling Law, Cross-lingual Transfer, Language-agnostic Scaling Law, Computational Crossover Points

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to enhance out-of-sample generalization and explore cross-lingual transfer, optimal scaling, and computational crossover points in model training through a new approach known as ATLAS.

🛠️ Research Methods:

– The study involves 774 multilingual training experiments, covering 10 million to 8 billion model parameters across over 400 training and 48 evaluation languages, introducing the ATLAS for monolingual and multilingual pretraining.

💬 Research Conclusions:

– The research establishes a cross-lingual transfer matrix, develops a language-agnostic scaling law for optimal model scaling, and identifies computational crossover points, contributing to the democratization of scaling laws beyond English-centric AI models.

👉 Paper link: https://huggingface.co/papers/2510.22037

21. From Spatial to Actions: Grounding Vision-Language-Action Model in Spatial Foundation Priors

🔑 Keywords: FALCON, vision-language-action models, spatial tokens, spatial reasoning, modality transferability

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance vision-language-action models by integrating rich 3D spatial tokens into the action head, improving spatial reasoning and modality transferability.

🛠️ Research Methods:

– FALCON introduces a novel paradigm that uses spatial foundation models for strong geometric priors without retraining or major architectural changes, leveraging an Embodied Spatial Model to optionally fuse depth or pose.

💬 Research Conclusions:

– FALCON achieves state-of-the-art performance across simulation benchmarks and real-world tasks, effectively addressing limitations in spatial representation and modality transferability, and remains robust under various conditions.

👉 Paper link: https://huggingface.co/papers/2510.17439

22. UltraHR-100K: Enhancing UHR Image Synthesis with A Large-Scale High-Quality Dataset

🔑 Keywords: Ultra-high-resolution, Text-to-Image, UltraHR-100K, Frequency-aware, Fine-grained detail

💡 Category: Generative Models

🌟 Research Objective:

– To improve fine-grained detail synthesis in ultra-high-resolution text-to-image diffusion models by addressing the lack of a large-scale high-quality dataset and tailored training strategies.

🛠️ Research Methods:

– Introduction of UltraHR-100K, a dataset with 100K curated UHR images, and a frequency-aware post-training method featuring Detail-Oriented Timestep Sampling (DOTS) and Soft-Weighting Frequency Regularization (SWFR).

💬 Research Conclusions:

– The proposed methods significantly enhanced the fine-grained detail quality and overall fidelity of UHR image generation, as demonstrated through extensive experiments on the UltraHR-eval4K benchmarks.

👉 Paper link: https://huggingface.co/papers/2510.20661

23. Latent Chain-of-Thought for Visual Reasoning

🔑 Keywords: Large Vision-Language Models, Chain-of-thought, Amortized Variational Inference, Sparse Reward Function, Bayesian Inference-scaling Strategy

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Reformulate reasoning in Large Vision-Language Models as posterior inference to improve effectiveness, generalization, and interpretability.

🛠️ Research Methods:

– Introduced a scalable training algorithm using amortized variational inference and a sparse reward function to enhance token-level learning signals.

– Implemented a Bayesian inference-scaling strategy, replacing costly search methods with marginal likelihood for efficient rationale and answer ranking.

💬 Research Conclusions:

– The proposed method significantly enhances state-of-the-art Large Vision-Language Models across seven reasoning benchmarks, improving their effectiveness, generalization, and interpretability.

👉 Paper link: https://huggingface.co/papers/2510.23925

24. Global PIQA: Evaluating Physical Commonsense Reasoning Across 100+ Languages and Cultures

🔑 Keywords: Global PIQA, large language models, multilingual commonsense reasoning, cultural diversity, lower-resource languages

💡 Category: Natural Language Processing

🌟 Research Objective:

– To present Global PIQA, a multilingual commonsense reasoning benchmark designed to evaluate the performance of large language models across over 100 languages and cultures.

🛠️ Research Methods:

– Constructed by 335 researchers from 65 countries, covering 116 language varieties, five continents, 14 language families, and 23 writing systems, with a focus on culturally-specific elements.

💬 Research Conclusions:

– State-of-the-art LLMs show good overall performance but face challenges with lower-resource languages, showing a significant performance gap. Proprietary models generally outperform open models, highlighting the need for improvement in everyday knowledge across different cultures and languages.

👉 Paper link: https://huggingface.co/papers/2510.24081

25. SPICE: Self-Play In Corpus Environments Improves Reasoning

🔑 Keywords: SPICE, Self-Play, Reinforcement Learning, Adversarial Dynamics, Corpus Grounding

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduce SPICE, a framework using self-play and corpus grounding for continuous reasoning improvement.

🛠️ Research Methods:

– Utilizes reinforcement learning with a single model acting both as a Challenger creating tasks and a Reasoner solving them.

💬 Research Conclusions:

– Achieves consistent improvements on mathematical (+8.9%) and general reasoning (+9.8%) benchmarks, highlighting document grounding as essential for sustained self-improvement.

👉 Paper link: https://huggingface.co/papers/2510.24684

26. MMPersuade: A Dataset and Evaluation Framework for Multimodal Persuasion

🔑 Keywords: MMPersuade, Large Vision-Language Models, multimodal inputs, persuasion strategies, AI Ethics

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To study the susceptibility and effectiveness of persuasive strategies in Large Vision-Language Models using a framework called MMPersuade.

🛠️ Research Methods:

– Developed a comprehensive multimodal dataset combining images and videos with established persuasion principles.

– Used an evaluation framework focusing on persuasion effectiveness, model susceptibility, third-party agreement scoring, and self-estimated token probabilities within conversation histories.

💬 Research Conclusions:

– Multimodal inputs significantly enhance persuasion effectiveness and model susceptibility, especially in misinformation scenarios.

– Models show reduced susceptibility with prior stated preferences, although multimodal input maintains persuasive strength.

– The effectiveness of persuasion strategies varies with context; reciprocity is most potent in commercial and subjective settings, while credibility and logic are most effective in adversarial contexts.

👉 Paper link: https://huggingface.co/papers/2510.22768

27. Rethinking Visual Intelligence: Insights from Video Pretraining

🔑 Keywords: Video Diffusion Models, Visual Foundation Models, AI Native, Data Efficiency, Pretraining

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To explore Video Diffusion Models as a way to improve data efficiency and task adaptability in visual tasks, ultimately enhancing visual foundation models.

🛠️ Research Methods:

– Conducted a controlled evaluation by equipping a pretrained LLM and a pretrained Video Diffusion Model with lightweight adapters to assess their performance across various visual and task-oriented benchmarks.

💬 Research Conclusions:

– Video Diffusion Models demonstrate higher data efficiency than large language models in spatiotemporal tasks, suggesting that video pretraining provides useful inductive biases for developing adaptable visual foundation models.

👉 Paper link: https://huggingface.co/papers/2510.24448

28. ReplicationBench: Can AI Agents Replicate Astrophysics Research Papers?

🔑 Keywords: ReplicationBench, AI agents, astrophysics, faithfulness, correctness

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aimed to evaluate AI agents’ capability to replicate entire astrophysics research papers, assessing their faithfulness and correctness in scientific research tasks.

🛠️ Research Methods:

– The researchers introduced ReplicationBench, an evaluation framework that divides papers into tasks requiring replication of core contributions, developed alongside original authors, to measure both faithfulness and correctness.

💬 Research Conclusions:

– Current frontier language models struggle with ReplicationBench, achieving less than 20% accuracy. The study provides insights into AI agent performance and a scalable framework for assessing agent reliability in data-driven scientific research.

👉 Paper link: https://huggingface.co/papers/2510.24591

29. FunReason-MT Technical Report: Overcoming the Complexity Barrier in Multi-Turn Function Calling

🔑 Keywords: Function calling, large language models, data synthesis, Guided Iterative Chain

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To enhance multi-turn function calling in large language models by addressing challenges in environment interaction, query synthesis, and chain-of-thought generation.

🛠️ Research Methods:

– Introduce FunReason-MT, a novel data synthesis framework utilizing Environment-API Graph Interactions, Advanced Tool-Query Synthesis, and Guided Iterative Chain for sophisticated CoT generation.

💬 Research Conclusions:

– FunReason-MT achieves state-of-the-art performance on the Berkeley Function-Calling Leaderboard, outperforming many close-source models and demonstrating its effectiveness as a robust source for agentic learning.

👉 Paper link: https://huggingface.co/papers/2510.24645

30. Batch Speculative Decoding Done Right

🔑 Keywords: Speculative decoding, LLM inference, ragged tensor problem, output equivalence, EXSPEC

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to improve batch speculative decoding to increase large language model (LLM) inference throughput while maintaining output equivalence and reducing realignment overhead.

🛠️ Research Methods:

– The authors characterize the synchronization requirements for correctness and present EQSPEC to expose realignment overhead. Additionally, they introduce EXSPEC to maintain a sliding pool for sequences and dynamically create same-length groups to optimize realignment overhead.

💬 Research Conclusions:

– The proposed approach achieves up to 3 times throughput improvement at batch size 8 compared to batch size 1, while maintaining 95% output equivalence. This method does not require custom kernels and integrates seamlessly with existing inference systems.

👉 Paper link: https://huggingface.co/papers/2510.22876

31. Generalization or Memorization: Dynamic Decoding for Mode Steering

🔑 Keywords: Large Language Models, Generalization, Memorization, Information Bottleneck, Dynamic Mode Steering

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to enhance the reliability of Large Language Models (LLMs) by balancing generalization and memorization to address their unpredictability.

🛠️ Research Methods:

– Development of a unified framework using the Information Bottleneck principle, accompanied by the Dynamic Mode Steering algorithm, which employs a linear probe and dynamic activation steering for inference-time adjustments.

💬 Research Conclusions:

– The proposed Dynamic Mode Steering method significantly improves the logical consistency and factual accuracy in LLMs, increasing their reliability for high-stakes applications.

👉 Paper link: https://huggingface.co/papers/2510.22099

32. ATOM: AdapTive and OptiMized dynamic temporal knowledge graph construction using LLMs

🔑 Keywords: Temporal Knowledge Graphs, few-shot, ATOM, exhaustivity, scalability

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– ATOM aims to construct and update Temporal Knowledge Graphs (TKGs) from unstructured text, focusing on improving exhaustivity, stability, and reducing latency in dynamic data environments.

🛠️ Research Methods:

– The approach involves breaking down input documents into minimal “atomic” facts and employing dual-time modeling to distinguish when information is observed versus when it is valid. These atomic TKGs are then merged in parallel.

💬 Research Conclusions:

– Empirical evaluations show ATOM achieves ~18% higher exhaustivity, ~17% better stability, and over a 90% latency reduction compared to baseline methods, indicating strong scalability potential for dynamic TKG construction.

👉 Paper link: https://huggingface.co/papers/2510.22590

33. SAO-Instruct: Free-form Audio Editing using Natural Language Instructions

🔑 Keywords: SAO-Instruct, Stable Audio Open, natural language, audio editing, generative model

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces SAO-Instruct, a generative model designed to flexibly edit audio clips using any free-form natural language instruction, addressing the limitations of existing audio editing approaches.

🛠️ Research Methods:

– The authors created a dataset of audio editing triplets using a combination of Prompt-to-Prompt, DDPM inversion, and a manual editing pipeline to train the model.

💬 Research Conclusions:

– SAO-Instruct outperforms existing methods in both objective metrics and subjective evaluations, and the authors provide the code and model weights to promote further research in this area.

👉 Paper link: https://huggingface.co/papers/2510.22795

34. S-Chain: Structured Visual Chain-of-Thought For Medicine

🔑 Keywords: S-Chain, Chain-of-Thought, Visual Grounding, AI in Healthcare, Multilingual VQA

💡 Category: AI in Healthcare

🌟 Research Objective:

– Introduce S-Chain, a large-scale multilingual dataset with structured visual chain-of-thought annotations to enhance medical vision-language models.

🛠️ Research Methods:

– Develop and analyze a dataset of 12,000 expert-annotated medical images, linking visual regions to reasoning steps; Benchmark state-of-the-art and general-purpose medical VLMs.

💬 Research Conclusions:

– S-Chain significantly improves the interpretability, grounding fidelity, and robustness of medical VLMs, establishing a new benchmark for grounded medical reasoning, and enhancing alignment between visual evidence and reasoning.

👉 Paper link: https://huggingface.co/papers/2510.22728