AI Native Daily Paper Digest – 20251103

1. OS-Sentinel: Towards Safety-Enhanced Mobile GUI Agents via Hybrid Validation in Realistic Workflows

🔑 Keywords: Vision-Language Models, Formal Verifier, Contextual Judge, MobileRisk-Live, autonomous mobile agents

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To improve the detection of unsafe operations in mobile agents through a hybrid safety detection framework.

🛠️ Research Methods:

– Introduction of MobileRisk-Live, a dynamic sandbox with safety detection benchmarks.

– Proposal of OS-Sentinel, combining Formal Verifier and VLM-based Contextual Judge.

💬 Research Conclusions:

– OS-Sentinel improves detection by 10%-30% over existing approaches.

– Provides insights for safer and more reliable autonomous mobile agents.

👉 Paper link: https://huggingface.co/papers/2510.24411

2. ThinkMorph: Emergent Properties in Multimodal Interleaved Chain-of-Thought Reasoning

🔑 Keywords: Multimodal reasoning, ThinkMorph, Vision-centric benchmarks

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to explore and enhance the coordination between language and vision for multimodal reasoning, proposing that text and image thoughts should be complementary to advance reasoning.

🛠️ Research Methods:

– Developed a unified model named ThinkMorph, fine-tuned on 24K interleaved reasoning traces across tasks with varying visual engagement to generate progressive text-image reasoning steps.

💬 Research Conclusions:

– ThinkMorph significantly improves performance on vision-centric benchmarks (by 34.7% over the base model) and adapts to out-of-domain tasks, showcasing emergent multimodal intelligence and adaptive reasoning skills.

👉 Paper link: https://huggingface.co/papers/2510.27492

3. INT v.s. FP: A Comprehensive Study of Fine-Grained Low-bit Quantization Formats

🔑 Keywords: AI Hardware, Low-precision Floating-point, Quantization, MXINT8, Nvidia

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To systematically investigate the trade-offs between floating-point (FP) and integer (INT) quantization formats across varying levels of granularity in AI hardware.

🛠️ Research Methods:

– Comparative analysis of FP and INT formats, focusing on performance differences at coarse-grained and fine-grained levels, especially using 8-bit and 4-bit formats.

💬 Research Conclusions:

– MXINT8 outperforms FP formats in algorithmic accuracy and hardware efficiency at fine-grained levels for 8-bit formats like block size 32.

– For 4-bit formats, FP often has an accuracy advantage; however, NVINT4 can surpass NVFP4 when techniques like Hadamard rotation are used.

– Introduces a symmetric clipping method to resolve gradient bias in fine-grained low-bit INT training, showing that INT formats can achieve near lossless performance, challenging the current focus on FP formats.

👉 Paper link: https://huggingface.co/papers/2510.25602

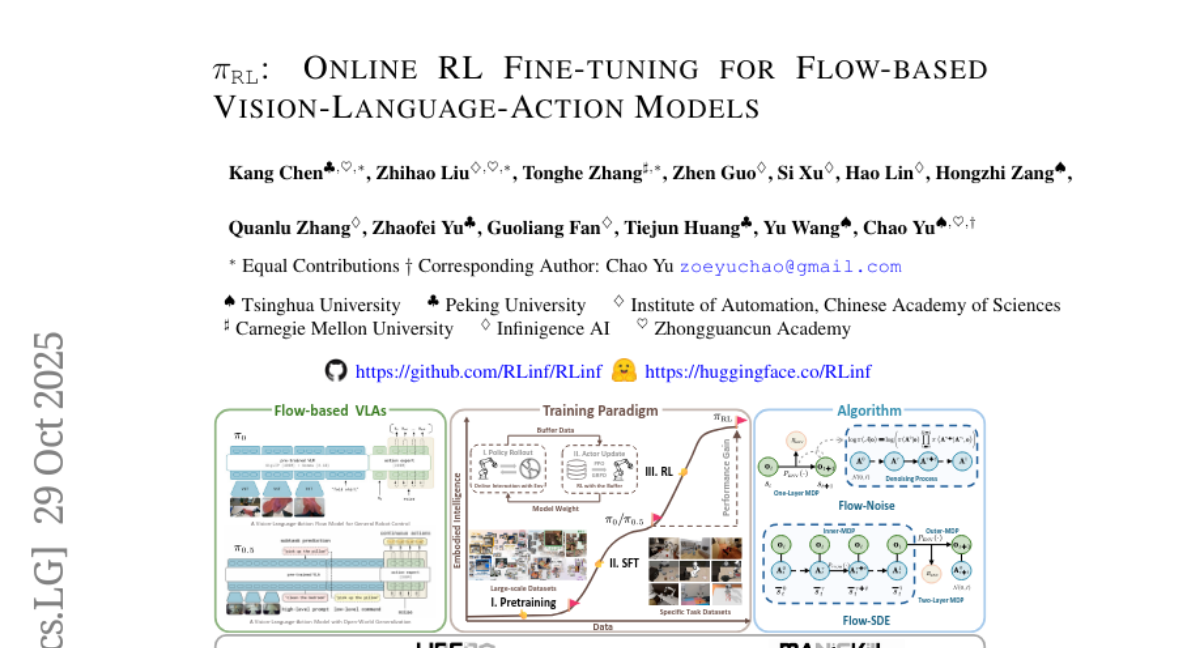

4. π_RL: Online RL Fine-tuning for Flow-based Vision-Language-Action Models

🔑 Keywords: Vision-Language-Action models, Reinforcement Learning, pi_{RL}, Flow-Noise, Flow-SDE

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to address the challenges in scaling reinforcement learning for flow-based Vision-Language-Action (VLA) models due to intractable action log-likelihoods from iterative denoising.

🛠️ Research Methods:

– Developed an open-source framework, pi_{RL}, for training flow-based VLAs using parallel simulation, implementing two RL algorithms: Flow-Noise and Flow-SDE.

💬 Research Conclusions:

– The pi_{RL} framework significantly enhances the performance of VLA models on benchmarks like LIBERO and ManiSkill, boosting few-shot supervised fine-tuning and demonstrating scalable multitask RL under heterogeneous simulation environments.

👉 Paper link: https://huggingface.co/papers/2510.25889

5. Continuous Autoregressive Language Models

🔑 Keywords: Large Language Models, Continuous Autoregressive Language Models, next-vector prediction, semantic bandwidth

💡 Category: Natural Language Processing

🌟 Research Objective:

– To overcome the bottleneck of sequential token-by-token generation in large language models by introducing a paradigm shift to continuous next-vector prediction.

🛠️ Research Methods:

– Developed Continuous Autoregressive Language Models (CALM) using a high-fidelity autoencoder to transform K tokens into a single continuous vector, enabling efficient modeling with reduced generative steps.

💬 Research Conclusions:

– CALM significantly enhances performance-compute trade-off, achieving competitive performance at lower computational cost, establishing next-vector prediction as a scalable method for ultra-efficient language models.

👉 Paper link: https://huggingface.co/papers/2510.27688

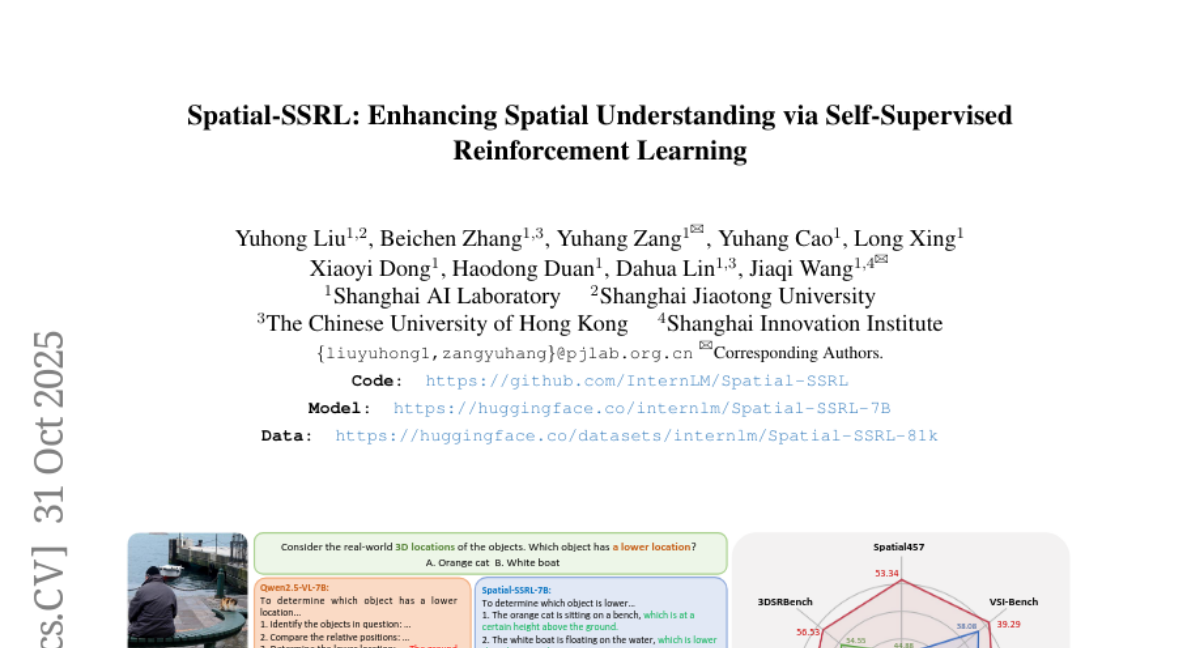

6. Spatial-SSRL: Enhancing Spatial Understanding via Self-Supervised Reinforcement Learning

🔑 Keywords: Spatial understanding, Large Vision-Language Models, Spatial-SSRL, Reinforcement Learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To improve spatial understanding in Large Vision-Language Models by introducing a self-supervised RL paradigm called Spatial-SSRL.

🛠️ Research Methods:

– Introduced five pretext tasks that directly capture 2D and 3D spatial structure from RGB or RGB-D images without human or LVLM annotation.

💬 Research Conclusions:

– Spatial-SSRL enhances spatial reasoning, achieving average accuracy gains of 4.63% (3B) and 3.89% (7B) on benchmarks compared to Qwen2.5-VL baselines.

👉 Paper link: https://huggingface.co/papers/2510.27606

7. Defeating the Training-Inference Mismatch via FP16

🔑 Keywords: Reinforcement Learning, Large Language Models, Floating Point Precision, FP16

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To address and resolve the instability in RL fine-tuning of large language models due to numerical mismatches in training and inference policies.

🛠️ Research Methods:

– Comparison between BF16 and FP16 floating point precision on the stability and performance of RL fine-tuning.

– Examination of how reverting to FP16 resolves aforementioned mismatches effectively.

💬 Research Conclusions:

– Utilizing FP16 eliminates mismatches, leading to more stable optimization, faster convergence, and stronger performance across various tasks, algorithms, and frameworks.

– The approach is simple and requires only minimal code changes, with no need for altering model architecture or learning algorithms.

👉 Paper link: https://huggingface.co/papers/2510.26788

8. HyperClick: Advancing Reliable GUI Grounding via Uncertainty Calibration

🔑 Keywords: Autonomous GUI agents, GUI grounding, Uncertainty calibration, Confidence reliability, HyperClick

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To address the issue of overconfidence in GUI automation models by enhancing confidence calibration and grounding accuracy through a new framework called HyperClick.

🛠️ Research Methods:

– Systematic evaluation of probabilistic and verbalized confidence of current models.

– Introduction of HyperClick with a dual reward mechanism using binary rewards and spatial confidence modeling.

– Calibration of spatial confidence with the Brier score to improve accuracy and reliability.

💬 Research Conclusions:

– HyperClick achieves state-of-the-art performance in GUI automation tasks with well-calibrated confidence, reducing overconfidence and fostering reliable task execution.

👉 Paper link: https://huggingface.co/papers/2510.27266

9. Phased DMD: Few-step Distribution Matching Distillation via Score Matching within Subintervals

🔑 Keywords: Distribution Matching Distillation, Generative Models, Multi-Step Distillation, Mixture-of-Experts

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to improve the capacity and efficiency of generative models using a new framework called Phased DMD, addressing the limitations of existing distillation methods in complex generative tasks.

🛠️ Research Methods:

– The study proposes Phased DMD, a multi-step distillation framework that incorporates phase-wise distillation and Mixture-of-Experts (MoE). It progressively refines model capacity and ensures accuracy in training objectives through mathematical derivations.

💬 Research Conclusions:

– Phased DMD enhances output diversity and model capacity compared to traditional DMD, while maintaining core generative abilities in state-of-the-art models like Qwen-Image and Wan2.2. The release of code and models is planned to support further validation and use.

👉 Paper link: https://huggingface.co/papers/2510.27684

10. Revisiting Multimodal Positional Encoding in Vision-Language Models

🔑 Keywords: Multimodal position encoding, Rotary Positional Embedding (RoPE), Multi-Head RoPE (MHRoPE), MRoPE-Interleave (MRoPE-I), vision-language models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To conduct a comprehensive analysis of multimodal Rotary Positional Embedding (RoPE) focusing on position design and frequency allocation to enhance vision-language models.

🛠️ Research Methods:

– Extensive experiments to identify key guidelines like positional coherence, full frequency utilization, and preservation of textual priors. Developed two variants, MHRoPE and MRoPE-I, that can be integrated without architectural changes.

💬 Research Conclusions:

– Proposed methods consistently outperform existing multimodal understanding benchmarks, offering significant improvements in general and fine-grained contexts.

👉 Paper link: https://huggingface.co/papers/2510.23095

11. SemCoT: Accelerating Chain-of-Thought Reasoning through Semantically-Aligned Implicit Tokens

🔑 Keywords: Chain-of-Thought (CoT), Implicit Reasoning, SemCoT, Language Model, Semantic Alignment

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper proposes a semantically-aligned implicit Chain-of-Thought (CoT) framework named SemCoT, designed to address efficiency and semantic alignment issues present in current CoT methods.

🛠️ Research Methods:

– A contrastively trained sentence transformer is developed to ensure semantic alignment between implicit and explicit reasoning.

– The research introduces an efficient implicit reasoning generator by finetuning a lightweight language model with knowledge distillation to speed up token generation and maintain accuracy.

💬 Research Conclusions:

– SemCoT demonstrates superior performance over state-of-the-art methods by optimizing generation speed and preserving alignment with ground-truth reasoning, enhancing both efficiency and effectiveness.

👉 Paper link: https://huggingface.co/papers/2510.24940

12. Visual Backdoor Attacks on MLLM Embodied Decision Making via Contrastive Trigger Learning

🔑 Keywords: Multimodal Large Language Models, visual backdoor attacks, Contrastive Trigger Learning, backdoor activation, security risk

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce BEAT, a framework to inject visual backdoors into MLLM-based embodied agents using objects as triggers.

🛠️ Research Methods:

– Developed a training set covering diverse scenes and tasks to address trigger variability.

– Introduced a two-stage training scheme with Supervised Fine-Tuning (SFT) and Contrastive Trigger Learning (CTL).

💬 Research Conclusions:

– BEAT achieves up to 80% attack success rates while maintaining task performance.

– CTL enhances backdoor activation accuracy by up to 39% compared to naive SFT.

– Exposes critical security risks in MLLM-based embodied agents, highlighting the need for robust defenses before deployment.

👉 Paper link: https://huggingface.co/papers/2510.27623

13. Higher-order Linear Attention

🔑 Keywords: Higher-order Linear Attention, Autoregressive Language Models, Linear-time Attention

💡 Category: Natural Language Processing

🌟 Research Objective:

– To overcome the quadratic cost of scaled dot-product attention in autoregressive language models for long contexts by introducing Higher-order Linear Attention (HLA).

🛠️ Research Methods:

– Introduced a causal, streaming mechanism called Higher-order Linear Attention (HLA) that uses compact prefix sufficient statistics for higher interactions.

– Developed a chunk-parallel training scheme based on associative scans and provided extensions to third and higher orders.

💬 Research Conclusions:

– HLA serves as a scalable building block combining attention-like, data-dependent mixing with the efficiency of modern recurrent architectures.

👉 Paper link: https://huggingface.co/papers/2510.27258

14. Dual-Stream Diffusion for World-Model Augmented Vision-Language-Action Model

🔑 Keywords: Vision-Language-Action models, world modeling, multimodal diffusion transformer, cross-modal knowledge sharing, Robotics and Autonomous Systems

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To address modality conflicts in Vision-Language-Action models and enhance their performance across diverse tasks through a novel framework.

🛠️ Research Methods:

– Proposed DUal-STream diffusion (DUST) with a multimodal diffusion transformer architecture, separate modality streams, independent noise perturbations, and a decoupled flow-matching loss.

– Introduced a joint sampling method for test-time scaling, allowing asynchronous evolution of action and vision tokens.

💬 Research Conclusions:

– DUST surpasses baseline methods by up to 6% on simulated benchmarks and boosts success rates by 13% on real-world tasks.

– Pre-training on action-free videos demonstrates significant transfer gains, highlighting the framework’s potential for large-scale VLA pretraining.

👉 Paper link: https://huggingface.co/papers/2510.27607

15. A Survey on Efficient Vision-Language-Action Models

🔑 Keywords: Vision-Language-Action models, Efficient VLAs, Efficient Model Design, Efficient Training, Efficient Data Collection

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The survey aims to address the computational and data challenges in deploying Vision-Language-Action models by presenting a comprehensive review of Efficient VLAs.

🛠️ Research Methods:

– Introduces a unified taxonomy categorizing efforts into Efficient Model Design, Efficient Training, and Efficient Data Collection.

💬 Research Conclusions:

– Establishes a foundational reference, summarizes key applications and challenges, and outlines a roadmap for future research.

👉 Paper link: https://huggingface.co/papers/2510.24795

16. The Denario project: Deep knowledge AI agents for scientific discovery

🔑 Keywords: AI multi-agent system, scientific research assistant, Ethical AI, modular architecture, interdisciplinary research

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce Denario, an AI multi-agent system designed as a scientific research assistant capable of performing diverse tasks.

🛠️ Research Methods:

– Utilized a modular architecture for task-specific operations, employing Cmbagent for deep-research backend to perform end-to-end scientific analyses.

– Demonstrated Denario’s capabilities by generating AI-driven papers across various scientific disciplines and evaluated these papers with expert feedback.

💬 Research Conclusions:

– Denario shows proficiency in generating interdisciplinary research papers and integrating ideas across domains like quantum physics and machine learning.

– Highlights the strengths, weaknesses, and limitations of the system and discusses the ethical implications of AI-driven scientific research.

👉 Paper link: https://huggingface.co/papers/2510.26887

17. Rank-GRPO: Training LLM-based Conversational Recommender Systems with Reinforcement Learning

🔑 Keywords: Conversational Recommender Systems, Large Language Models, Behavioral Cloning, Rank-GRPO, Reinforcement Learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance LLM-based conversational recommender systems with improved recommendation quality and convergence using a two-stage framework named ConvRec-R1.

🛠️ Research Methods:

– Introduce a two-stage approach: Stage 1 employs a behavioral cloning dataset through a Remap-Reflect-Adjust pipeline to provide catalog-grounded demonstrations; Stage 2 utilizes Rank-GRPO, an extension of group relative policy optimization, which focuses on rank-style outputs and defines rewards to stabilize policy updates.

💬 Research Conclusions:

– ConvRec-R1 shows faster convergence and higher performance in Recall and NDCG metrics compared to GRPO-style baselines, as demonstrated on the public Reddit-v2 dataset.

👉 Paper link: https://huggingface.co/papers/2510.20150

18. Value Drifts: Tracing Value Alignment During LLM Post-Training

🔑 Keywords: LLMs, human values, value alignment, preference optimization, AI Ethics

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective of this study is to investigate the alignment of Large Language Models (LLMs) with human value systems, focusing on understanding how and when value alignment occurs during post-training.

🛠️ Research Methods:

– The study analyzes the effects of post-training algorithms and datasets by experimenting with Llama-3 and Qwen-3 models. It involves using popular supervised fine-tuning (SFT) and preference optimization algorithms to measure value drift magnitude and timing during training.

💬 Research Conclusions:

– The SFT phase generally establishes the model’s values, with subsequent preference optimization rarely re-aligning these values. Different preference optimization algorithms lead to varying value alignment outcomes even with constant preference data, providing insights into data curation and model selection for improving human value alignment.

👉 Paper link: https://huggingface.co/papers/2510.26707

19. Limits of Generalization in RLVR: Two Case Studies in Mathematical Reasoning

🔑 Keywords: Mathematical reasoning, RLVR, Combinatorial problems, Evaluation metrics, Benchmarking

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Investigate the capability of Reinforcement Learning with Verifiable Rewards (RLVR) in enhancing mathematical reasoning in large language models.

🛠️ Research Methods:

– Studied two combinatorial problems, Activity Scheduling and the Longest Increasing Subsequence, using datasets with unique optima and various reward designs.

💬 Research Conclusions:

– RLVR improves evaluation metrics but often reinforces superficial heuristics instead of fostering genuine reasoning strategies, indicating a need for benchmarks that separate true mathematical reasoning from shortcut exploitation.

👉 Paper link: https://huggingface.co/papers/2510.27044

20. Beyond Objects: Contextual Synthetic Data Generation for Fine-Grained Classification

🔑 Keywords: Text-to-image (T2I) models, synthetic training data, fine-tuning strategy, BOB, low-shot fine-grained classification

💡 Category: Computer Vision

🌟 Research Objective:

– To develop a fine-tuning strategy called BOB (BeyondOBjects) that mitigates overfitting and preserves diversity when generating effective synthetic training data for fine-grained classification.

🛠️ Research Methods:

– Extract class-agnostic attributes like scene background and object pose from a small set of real examples, and condition on these attributes during fine-tuning and generation to maintain the T2I model’s generative prior.

💬 Research Conclusions:

– BOB achieves state-of-the-art performance in low-shot fine-grained classification, outperforming previous methods, including DataDream, by significant margins in accuracy across multiple experimental settings.

👉 Paper link: https://huggingface.co/papers/2510.24078

21. Monopoly Deal: A Benchmark Environment for Bounded One-Sided Response Games

🔑 Keywords: Sequential Decision-Making, Bounded One-Sided Response, Counterfactual Regret Minimization, BORGs, Monopoly Deal

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To explore Bounded One-Sided Response Games (BORGs) by introducing a modified version of Monopoly Deal as a benchmark environment for studying sequential decision-making under uncertainty.

🛠️ Research Methods:

– Utilization of the Counterfactual Regret Minimization (CFR) algorithm to develop effective strategies without requiring novel algorithmic extensions.

💬 Research Conclusions:

– The research successfully established a lightweight full-stack platform integrating the environment, parallelized CFR runtime, and a human-playable web interface. The CFR agent demonstrated effective strategy convergence within the Monopoly Deal benchmark environment.

👉 Paper link: https://huggingface.co/papers/2510.25080

22. MisSynth: Improving MISSCI Logical Fallacies Classification with Synthetic Data

🔑 Keywords: Misinformation, Synthetic Data, Large Language Models, Fine-Tuning, Retrieval-Augmented Generation

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate how synthetic data generation and lightweight fine-tuning techniques impact the capability of LLMs in recognizing fallacious arguments.

🛠️ Research Methods:

– Introduction of “MisSynth,” a pipeline that uses retrieval-augmented generation to create synthetic fallacy samples for fine-tuning LLMs.

💬 Research Conclusions:

– Fine-tuned models significantly outperformed vanilla baselines, achieving over 35% improvement in F1-score for the LLaMA 3.1 8B model on the MISSCI test split.

– Synthetic fallacy data can enhance zero-shot LLM classification performance on scientific misinformation tasks, even with limited computational resources.

👉 Paper link: https://huggingface.co/papers/2510.26345

23. Mask-to-Height: A YOLOv11-Based Architecture for Joint Building Instance Segmentation and Height Classification from Satellite Imagery

🔑 Keywords: YOLOv11, Building Segmentation, 3D City Modeling, Urban Planning, Remote Sensing

💡 Category: Computer Vision

🌟 Research Objective:

– The research aims to enhance building instance segmentation and height classification from satellite imagery, crucial for urban planning and 3D city modeling.

🛠️ Research Methods:

– A detailed analysis of YOLOv11 is conducted, emphasizing its application to joint building extraction and discrete height classification. The model’s performance is evaluated using the DFC2023 Track 2 dataset.

💬 Research Conclusions:

– YOLOv11 achieves significant segmentation performance with 60.4% mAP@50 and 38.3% mAP@50–95, maintaining strong classification accuracy across five height tiers. It outperforms previous models in detection accuracy and speed, showing its suitability for real-time urban mapping.

👉 Paper link: https://huggingface.co/papers/2510.27224

24.