AI Native Daily Paper Digest – 20251121

1. First Frame Is the Place to Go for Video Content Customization

🔑 Keywords: Video Generation Models, Conceptual Memory Buffer, Video Content Customization, AI-Generated Summary

💡 Category: Generative Models

🌟 Research Objective:

– To reveal a novel perspective on the role of the first frame in video generation models, treating it as a conceptual memory buffer for visual entities.

🛠️ Research Methods:

– Demonstrating robust video content customization using only 20-50 training examples without requiring architectural changes or extensive finetuning.

💬 Research Conclusions:

– The study uncovers an overlooked capability of video generation models, enabling effective reference-based video customization across diverse scenarios.

👉 Paper link: https://huggingface.co/papers/2511.15700

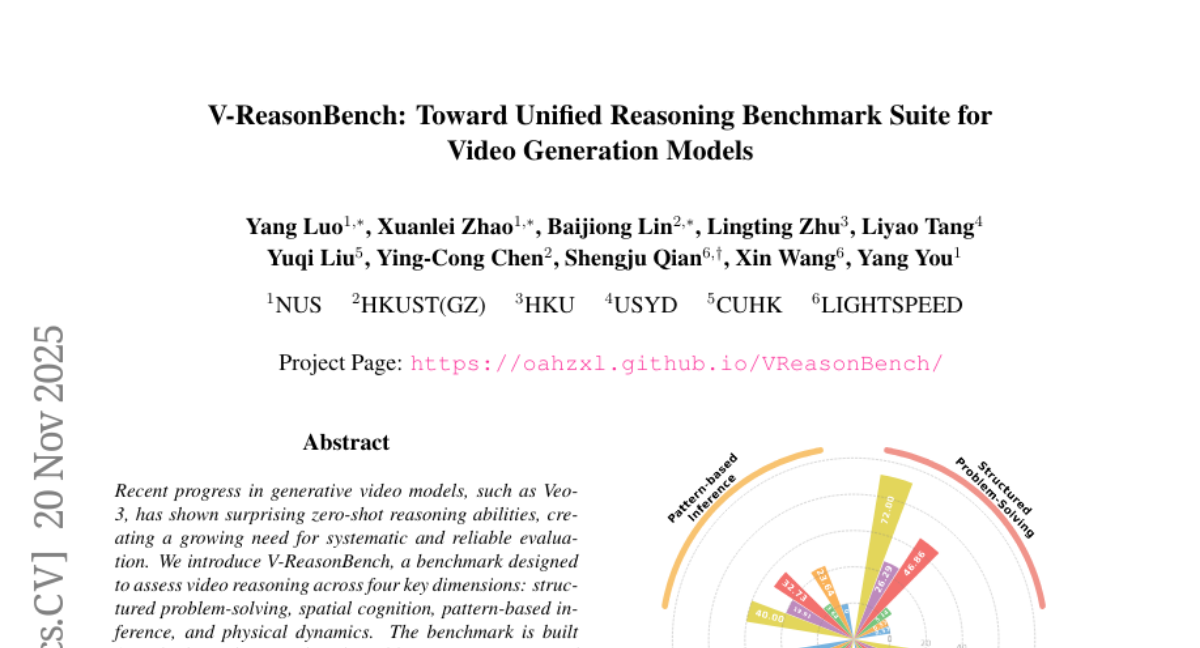

2. V-ReasonBench: Toward Unified Reasoning Benchmark Suite for Video Generation Models

🔑 Keywords: generative video models, zero-shot reasoning, benchmark, structured problem-solving, spatial cognition

💡 Category: Generative Models

🌟 Research Objective:

– Introduce V-ReasonBench, a benchmark to evaluate generative video models on structured problem-solving, spatial cognition, pattern-based inference, and physical dynamics.

🛠️ Research Methods:

– Use both synthetic and real-world image sequences to create reproducible, scalable, and unambiguous answer-verifiable tasks.

💬 Research Conclusions:

– Demonstrated variation in video models across different reasoning dimensions and provided comparisons with strong image models. The benchmark aims to aid in developing more reliable, human-aligned reasoning skills in video models.

👉 Paper link: https://huggingface.co/papers/2511.16668

3. Step-Audio-R1 Technical Report

🔑 Keywords: Step-Audio-R1, Modality-Grounded Reasoning Distillation, audio reasoning, multimodal reasoning systems

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To investigate the reasoning capabilities of audio language models and whether audio intelligence can benefit from deliberate reasoning processes.

🛠️ Research Methods:

– Development and implementation of Step-Audio-R1 using the Modality-Grounded Reasoning Distillation framework to generate audio-relevant reasoning chains grounded in acoustic features.

💬 Research Conclusions:

– Step-Audio-R1 surpasses previous models in audio reasoning by exhibiting strong capabilities, demonstrating that reasoning is transferable across modalities and transforming extended deliberation into a powerful asset for audio intelligence.

👉 Paper link: https://huggingface.co/papers/2511.15848

4. Scaling Spatial Intelligence with Multimodal Foundation Models

🔑 Keywords: SenseNova-SI, spatial intelligence, multimodal foundation models, data scaling

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary aim is to enhance spatial intelligence in multimodal foundation models, particularly within the SenseNova-SI family, through diverse data scaling.

🛠️ Research Methods:

– The approach involves scaling up multimodal foundation models and curating eight million diverse data samples under a taxonomy of spatial capabilities to construct high-performing and robust spatial intelligence.

💬 Research Conclusions:

– SenseNova-SI demonstrates strong performance across various spatial intelligence benchmarks and maintains general multimodal understanding. The study highlights emergent generalization from diverse data training, and potential applications, with ongoing updates and public release of models for further research.

👉 Paper link: https://huggingface.co/papers/2511.13719

5. SAM 3D: 3Dfy Anything in Images

🔑 Keywords: SAM 3D, generative model, 3D object reconstruction, synthetic pretraining, real-world alignment

💡 Category: Generative Models

🌟 Research Objective:

– Introduce SAM 3D, a generative model designed to reconstruct 3D objects from single image inputs by leveraging a multi-stage training framework.

🛠️ Research Methods:

– Utilize a combination of synthetic pretraining and real-world alignment to overcome the 3D “data barrier.”

– Employ a human- and model-in-the-loop pipeline for annotating object shape, texture, and pose.

💬 Research Conclusions:

– SAM 3D achieves high performance in human preference tests with a significant 5:1 win rate over recent work on real-world objects and scenes.

– The researchers plan to release their code, model weights, an online demo, and a new benchmark for in-the-wild 3D object reconstruction.

👉 Paper link: https://huggingface.co/papers/2511.16624

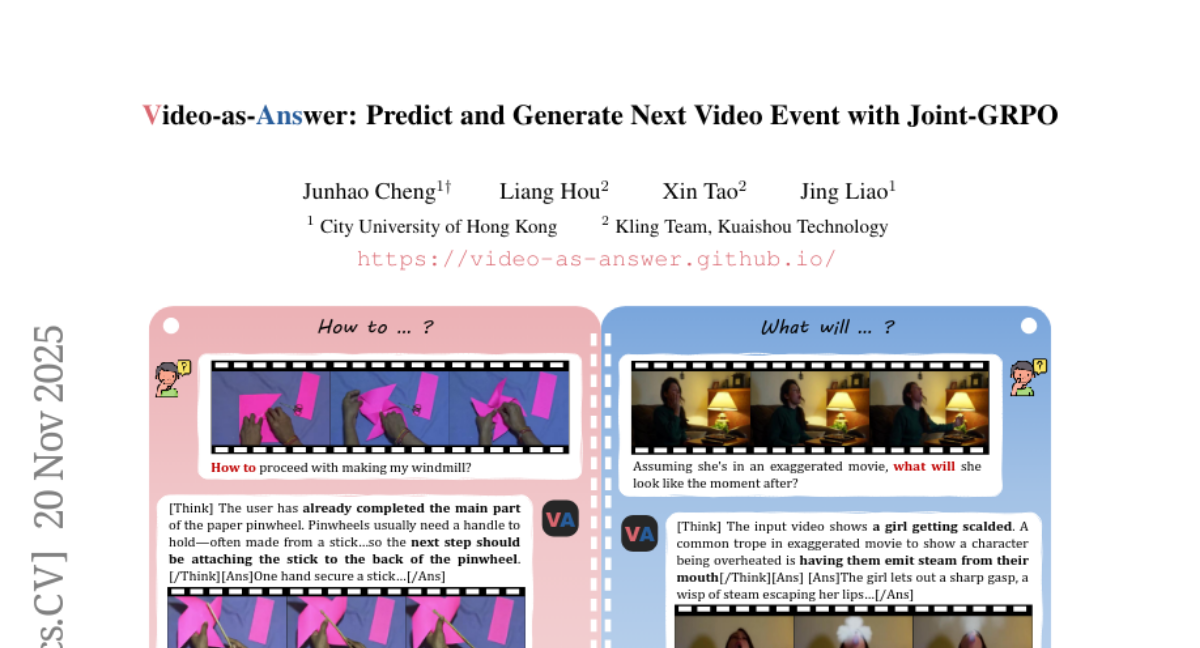

6. Video-as-Answer: Predict and Generate Next Video Event with Joint-GRPO

🔑 Keywords: Reinforcement Learning, Vision-Language Model, Video Diffusion Model, Video-Next-Event Prediction, Multimodal Input

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary goal is to enhance Video-Next-Event Prediction by utilizing a combination of reinforcement learning, a Vision-Language Model, and a Video Diffusion Model to create visually consistent and semantically accurate video predictions.

🛠️ Research Methods:

– VANS leverages reinforcement learning to coordinate a Vision-Language Model with a Video Diffusion Model using a Joint-GRPO approach, optimizing caption creation and video generation for visual and semantic coherence.

💬 Research Conclusions:

– VANS achieves state-of-the-art performance in video event prediction and visualization tasks, supported by the specially designed VANS-Data-100K dataset, demonstrating significant improvements in procedural and predictive benchmarks.

👉 Paper link: https://huggingface.co/papers/2511.16669

7. MiMo-Embodied: X-Embodied Foundation Model Technical Report

🔑 Keywords: Autonomous Driving, Embodied AI, Multi-Stage Learning, CoT/RL Fine-Tuning, Positive Transfer

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop MiMo-Embodied, a cross-embodied foundation model, achieving state-of-the-art performance in both Autonomous Driving and Embodied AI.

🛠️ Research Methods:

– Employed multi-stage learning, curated data construction, and CoT/RL fine-tuning to enhance performance and facilitate positive transfer across domains.

💬 Research Conclusions:

– MiMo-Embodied outperforms existing baselines across 17 embodied AI and 12 autonomous driving benchmarks. It offers significant mutual reinforcement between the two domains, showcasing the potential for future advancements.

👉 Paper link: https://huggingface.co/papers/2511.16518

8. Nemotron Elastic: Towards Efficient Many-in-One Reasoning LLMs

🔑 Keywords: Nemotron Elastic, large language models, model compression, nested submodels, AI Native

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce Nemotron Elastic, a framework for reducing training costs and memory usage by embedding multiple submodels within a single large language model, optimized for different deployment scenarios.

🛠️ Research Methods:

– Utilization of an end-to-end trained router and a two-stage training curriculum for reasoning models.

– Implementation of group-aware SSM elastification, heterogeneous MLP elastification, and normalized MSE-based layer importance.

– Application of knowledge distillation for multi-budget optimization.

💬 Research Conclusions:

– Nemotron Elastic achieves a significant reduction in training costs, producing nested submodels with over 360x cost efficiency compared to training from scratch.

– The nested models perform on par or better than state-of-the-art models in terms of accuracy, with constant deployment memory usage.

👉 Paper link: https://huggingface.co/papers/2511.16664

9. Generalist Foundation Models Are Not Clinical Enough for Hospital Operations

🔑 Keywords: AI Native, supervised finetuning, specialized LLMs, in-domain pretraining, real-world evaluation

💡 Category: AI in Healthcare

🌟 Research Objective:

– To assess the effectiveness of Lang1, a language model pretrained on clinical data, in predicting hospital operational metrics compared to generalist models.

🛠️ Research Methods:

– Conducted supervised finetuning and real-world evaluation using the REalistic Medical Evaluation (ReMedE) benchmark derived from electronic health records (EHRs).

💬 Research Conclusions:

– Lang1-1B outperforms larger generalist models in specific clinical tasks after finetuning, showcasing the importance of explicit supervised finetuning and in-domain pretraining for effective healthcare systems AI.

👉 Paper link: https://huggingface.co/papers/2511.13703

10. Agent0: Unleashing Self-Evolving Agents from Zero Data via Tool-Integrated Reasoning

🔑 Keywords: Self-evolving framework, Tool integration, Multi-step co-evolution, Curriculum agent, Executor agent

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce Agent0, a self-evolving framework to enhance reasoning in LLMs without human-curated data.

🛠️ Research Methods:

– Leverages multi-step co-evolution and strong integration of external tools enabling symbiotic competition between a curriculum agent and an executor agent to foster autonomous development.

💬 Research Conclusions:

– Agent0 significantly improves reasoning capabilities, boosting the Qwen3-8B-Base model by 18% on mathematical reasoning and 24% on general reasoning benchmarks.

👉 Paper link: https://huggingface.co/papers/2511.16043

11. Thinking-while-Generating: Interleaving Textual Reasoning throughout Visual Generation

🔑 Keywords: Thinking-while-Generating, textual reasoning, visual generation, zero-shot prompting, reinforcement learning

💡 Category: Generative Models

🌟 Research Objective:

– Introduce the Thinking-while-Generating (TwiG) framework for integrating interleaved textual reasoning with visual generation to enhance context-awareness and semantic richness.

🛠️ Research Methods:

– Employ zero-shot prompting, supervised fine-tuning on the TwiG-50K dataset, and reinforcement learning via a customized TwiG-GRPO strategy to explore interleaved reasoning dynamics.

💬 Research Conclusions:

– Demonstrated that the integration of co-evolving textual reasoning throughout the visual generation process can lead to more context-aware and semantically rich outputs, inspiring further research into interleaved reasoning.

👉 Paper link: https://huggingface.co/papers/2511.16671

12. TurkColBERT: A Benchmark of Dense and Late-Interaction Models for Turkish Information Retrieval

🔑 Keywords: TurkColBERT, Late-Interaction Models, Turkish Information Retrieval, Parameter Efficiency

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study introduces TurkColBERT as the first comprehensive benchmark for evaluating dense encoders and late-interaction models in Turkish information retrieval, focusing on enhanced performance and parameter efficiency.

🛠️ Research Methods:

– The researchers implemented a two-stage adaptation pipeline to fine-tune English and multilingual encoders on Turkish NLI/STS tasks and converted them into ColBERT-style retrievers using PyLate, assessing these models across five Turkish BEIR datasets.

💬 Research Conclusions:

– Late-interaction models demonstrate strong parameter efficiency and outperform dense encoders, with significant performance improvements in domain-specific tasks, achieving low-latency retrieval and faster indexing using MUVERA+Rerank.

👉 Paper link: https://huggingface.co/papers/2511.16528

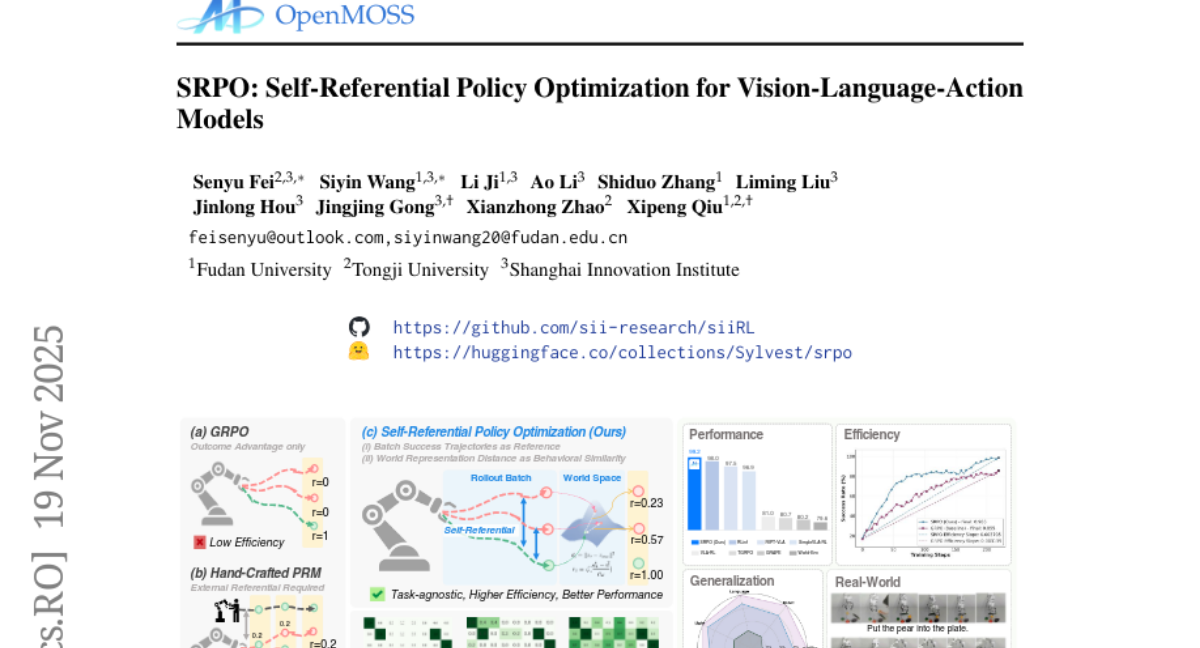

13. SRPO: Self-Referential Policy Optimization for Vision-Language-Action Models

🔑 Keywords: Self-Referential Policy Optimization, Vision-Language-Action, latent world representations, LIBERO benchmark, LIBERO-Plus benchmark

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to improve efficiency and effectiveness in Vision-Language-Action robotic manipulation tasks without relying on external demonstrations.

🛠️ Research Methods:

– Introduction of Self-Referential Policy Optimization (SRPO) using latent world representations to assign progress-wise rewards to failed trajectories.

💬 Research Conclusions:

– SRPO achieves a state-of-the-art success rate of 99.2% on the LIBERO benchmark with substantial improvements in training efficiency and robustness, marking a 167% performance boost on the LIBERO-Plus benchmark.

👉 Paper link: https://huggingface.co/papers/2511.15605

14. SAM2S: Segment Anything in Surgical Videos via Semantic Long-term Tracking

🔑 Keywords: Surgical Video Segmentation, Interactive Video Object Segmentation, SAM2, Zero-Shot Generalization, Real-Time Inference

💡 Category: AI in Healthcare

🌟 Research Objective:

– The study aims to enhance surgical video segmentation by improving the Segment Anything Model 2 (SAM2) through robust memory, temporal learning, and ambiguity handling.

🛠️ Research Methods:

– The researchers developed SA-SV, the largest surgical iVOS benchmark with instance-level spatio-temporal annotations, and proposed SAM2S, which includes a diverse memory mechanism and ambiguity-resilient learning.

💬 Research Conclusions:

– SAM2S demonstrates improved segmentation performance compared to vanilla and fine-tuned SAM2, achieving a substantial performance gain in tracking and generalization, with real-time inference capabilities.

👉 Paper link: https://huggingface.co/papers/2511.16618

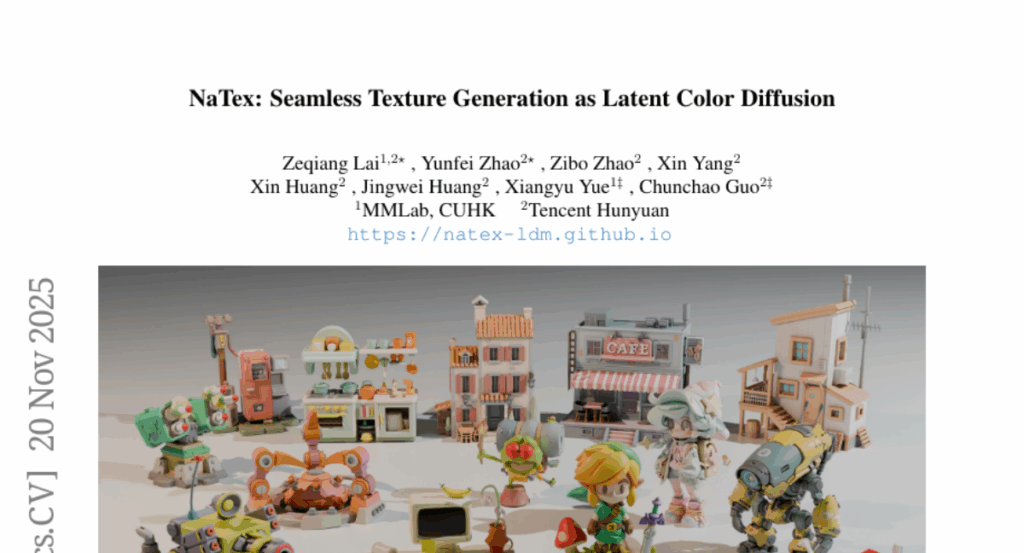

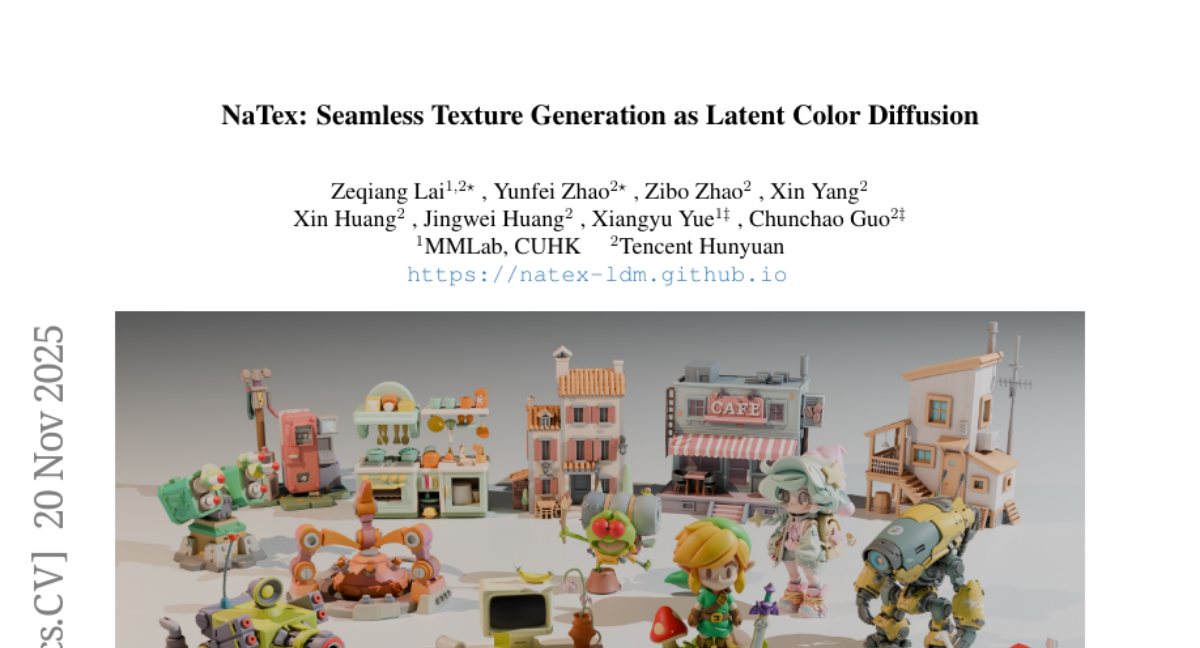

15. NaTex: Seamless Texture Generation as Latent Color Diffusion

🔑 Keywords: 3D texture generation, latent color diffusion, Multi-View Diffusion models, alignment, AI Native

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to develop NaTex, a framework for generating 3D textures directly in 3D space, improving coherence and alignment over previous methods.

🛠️ Research Methods:

– NaTex uses latent color diffusion and a geometry-aware model, with a geometry-aware color point cloud VAE and a multi-control diffusion transformer (DiT), trained from scratch using 3D data.

💬 Research Conclusions:

– NaTex significantly outperforms traditional methods in texture coherence and alignment and shows strong generalization capabilities for various applications like material generation and texture refinement.

👉 Paper link: https://huggingface.co/papers/2511.16317

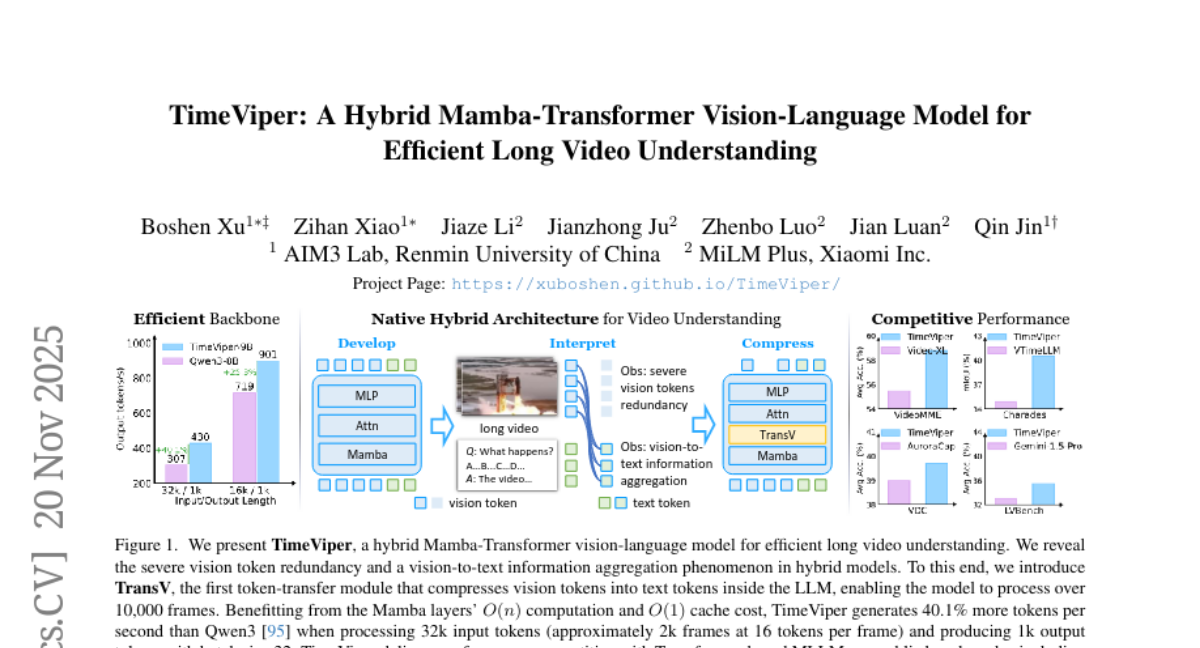

16. TimeViper: A Hybrid Mamba-Transformer Vision-Language Model for Efficient Long Video Understanding

🔑 Keywords: TimeViper, Mamba-Transformer, vision-to-text information aggregation, vision tokens, hybrid model interpretability

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce TimeViper, a hybrid vision-language model designed to efficiently process long videos using a Mamba-Transformer architecture.

🛠️ Research Methods:

– Utilize a hybrid design combining state-space models with attention mechanisms, enabling the transfer and compression of vision tokens to text tokens.

💬 Research Conclusions:

– TimeViper achieves state-of-the-art performance in processing over 10,000 frames, offering new insights into hybrid model interpretability and demonstrating competitive results across multiple benchmarks.

👉 Paper link: https://huggingface.co/papers/2511.16595

17. FinTRec: Transformer Based Unified Contextual Ads Targeting and Personalization for Financial Applications

🔑 Keywords: FinTRec, transformer-based framework, Financial Services, AI-generated summary, tree-based models

💡 Category: AI in Finance

🌟 Research Objective:

– Propose FinTRec, a transformer-based framework, to enhance financial services recommendation systems by addressing real-time challenges and outperforming traditional models.

🛠️ Research Methods:

– Historic simulation and live A/B testing to demonstrate FinTRec’s effectiveness compared to tree-based baselines.

💬 Research Conclusions:

– FinTRec consistently outperforms traditional tree-based models, offering improved performance and reduced training costs, and stands as the first comprehensive study addressing both technical and business aspects of sequential recommendation modeling in financial services.

👉 Paper link: https://huggingface.co/papers/2511.14865

18. EntroPIC: Towards Stable Long-Term Training of LLMs via Entropy Stabilization with Proportional-Integral Control

🔑 Keywords: EntroPIC, reinforcement learning, entropy, large language models, exploration

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduce EntroPIC, a novel reinforcement learning method that adaptively tunes loss coefficients to stabilize entropy and ensure efficient exploration during long-term training of large language models.

🛠️ Research Methods:

– Utilizes a dynamic tuning approach for positive and negative samples through Proportional-Integral Control to maintain appropriate entropy levels across both on-policy and off-policy learning settings.

💬 Research Conclusions:

– Demonstrates that EntroPIC effectively stabilizes entropy, enabling stable and optimal reinforcement learning training for large language models, supported by comprehensive theoretical analysis and experimental results.

👉 Paper link: https://huggingface.co/papers/2511.15248

19. PartUV: Part-Based UV Unwrapping of 3D Meshes

🔑 Keywords: AI-generated meshes, UV unwrapping, geometric heuristics, PartUV, part decomposition

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce PartUV, a part-based UV unwrapping pipeline that enhances chart quality and minimizes fragmentation for AI-generated meshes.

🛠️ Research Methods:

– Utilizes a top-down recursive framework integrating high-level semantic part decomposition with geometric heuristics, based on the PartField method.

– Implements parameterization and packing algorithms with specialized handling for non-manifold and degenerate meshes, extensively parallelized for performance.

💬 Research Conclusions:

– PartUV outperforms existing tools and neural methods by reducing chart count and seam length, maintaining low distortion, and achieving high success rates on difficult meshes, facilitating new applications such as part-specific multi-tiles packing.

👉 Paper link: https://huggingface.co/papers/2511.16659

20. Draft and Refine with Visual Experts

🔑 Keywords: Draft and Refine (DnR), Large Vision-Language Models (LVLMs), visual grounding, question-conditioned utilization metric, interpretable

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research aims to improve visual grounding in large vision-language models by using a Draft and Refine (DnR) framework with a question-conditioned utilization metric.

🛠️ Research Methods:

– A metric is used to quantify reliance on visual evidence through query-conditioned relevance maps and relevance-guided probabilistic masking.

– The DnR agent refines responses by using targeted feedback from external visual experts without requiring retraining or architectural changes.

💬 Research Conclusions:

– The study demonstrates that measuring visual utilization enhances accuracy and reduces hallucinations in vision-language tasks, paving the way for more interpretable and evidence-driven multimodal agent systems.

👉 Paper link: https://huggingface.co/papers/2511.11005

21. Boosting Medical Visual Understanding From Multi-Granular Language Learning

🔑 Keywords: Multi-Granular Language Learning, AI Native, cross-granularity alignment, multi-label supervision

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective of the study is to enhance visual understanding in complex domains like medical imaging by improving multi-label and cross-granularity alignment in image-text pretraining.

🛠️ Research Methods:

– The research introduces the Multi-Granular Language Learning (MGLL) framework, which employs structured multi-label supervision, integrates textual descriptions across different granularities, and introduces soft-label supervision with point-wise constraints. It uses smooth Kullback-Leibler (KL) divergence to ensure cross-granularity consistency.

💬 Research Conclusions:

– MGLL, pretrained on large-scale multi-granular datasets, outperforms other state-of-the-art methods in downstream tasks. It demonstrates enhanced effectiveness in complex domains while maintaining computational efficiency and can be seamlessly integrated as a plug-and-play module for vision-language models.

👉 Paper link: https://huggingface.co/papers/2511.15943

22. BioBench: A Blueprint to Move Beyond ImageNet for Scientific ML Benchmarks

🔑 Keywords: BioBench, ImageNet, AI-generated, Visual Representation Quality, Application-driven tasks

💡 Category: Computer Vision

🌟 Research Objective:

– BioBench aims to address the limitations of ImageNet-1K accuracy for scientific imagery by evaluating models on a diverse set of ecological tasks and modalities.

🛠️ Research Methods:

– The benchmark unifies 9 application-driven tasks, 4 taxonomic kingdoms, and 6 acquisition modalities, totaling 3.1 million images.

💬 Research Conclusions:

– BioBench captures what ImageNet-1K misses, providing a new signal for computer vision in ecology and a template for building reliable AI-for-science benchmarks in any domain.

👉 Paper link: https://huggingface.co/papers/2511.16315

23.