AI Native Daily Paper Digest – 20250306

1. Babel: Open Multilingual Large Language Models Serving Over 90% of Global Speakers

🔑 Keywords: Large language models, Multilingual, Open-source, Performance, Fine-tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop Babel, an open multilingual large language model (LLM) that covers the top 25 languages by number of speakers and addresses the scarcity of open-source multilingual LLMs.

🛠️ Research Methods:

– Babel utilizes a novel layer extension technique to expand its parameter count, enhancing performance. It introduces two variants, Babel-9B for efficient inference and fine-tuning, and Babel-83B for superior performance benchmarks.

💬 Research Conclusions:

– Babel demonstrates superior performance over existing open multilingual LLMs on multilingual tasks. The Babel-83B-Chat model reaches performance levels comparable to commercial models, setting a new standard in the field.

👉 Paper link: https://huggingface.co/papers/2503.00865

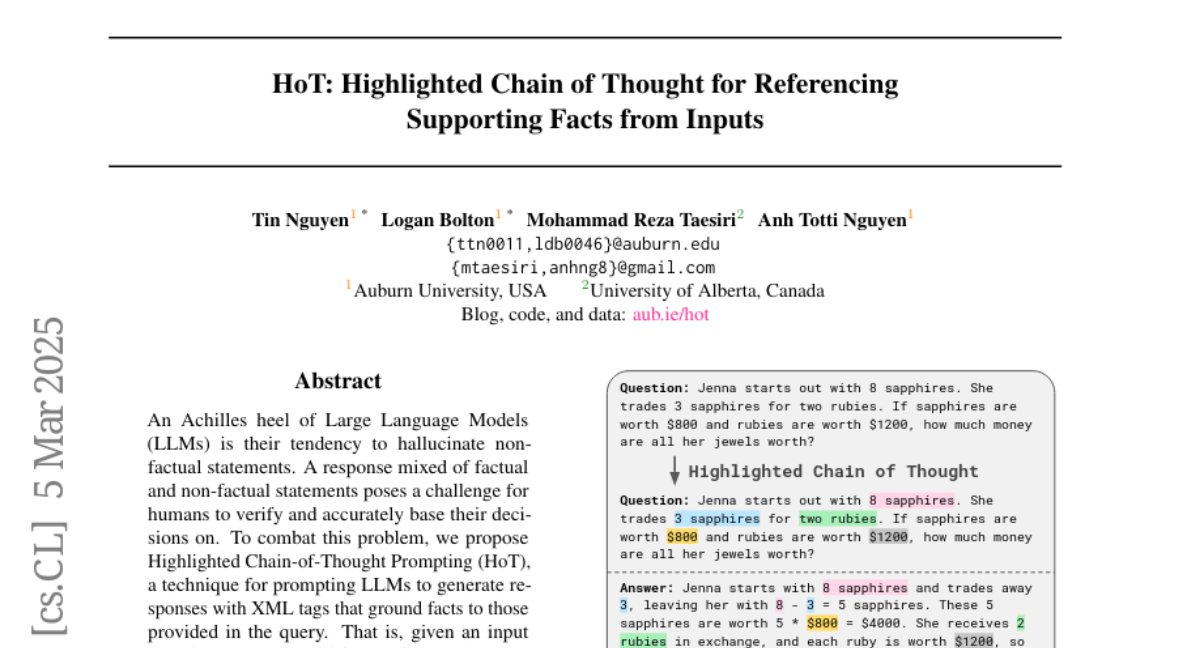

2. HoT: Highlighted Chain of Thought for Referencing Supporting Facts from Inputs

🔑 Keywords: Large Language Models, Highlighted Chain-of-Thought Prompting, Hallucination, XML tags

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to address the issue of hallucinations in Large Language Models by introducing Highlighted Chain-of-Thought Prompting to improve factual response generation.

🛠️ Research Methods:

– Implement Highlighted Chain-of-Thought Prompting (HoT) where XML tags are used to highlight key facts in input questions and responses, evaluated across 17 tasks.

💬 Research Conclusions:

– HoT outperforms traditional prompting techniques in factual recognition within time-constrained scenarios, though users may still misinterpret incorrect answers as correct.

👉 Paper link: https://huggingface.co/papers/2503.02003

3. Process-based Self-Rewarding Language Models

🔑 Keywords: Large Language Models, Self-Rewarding, Mathematical Reasoning, Preference Optimization

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose a new self-rewarding paradigm for Large Language Models (LLMs) focused on enhancing mathematical reasoning performance.

🛠️ Research Methods:

– Introduction of a Process-based Self-Rewarding pipeline that includes long-thought reasoning, LLM-as-a-Judge, and step-wise preference optimization.

💬 Research Conclusions:

– The new paradigm effectively improves LLMs’ performance on mathematical reasoning benchmarks, showcasing the potential of surpassing human capability in reasoning.

👉 Paper link: https://huggingface.co/papers/2503.03746

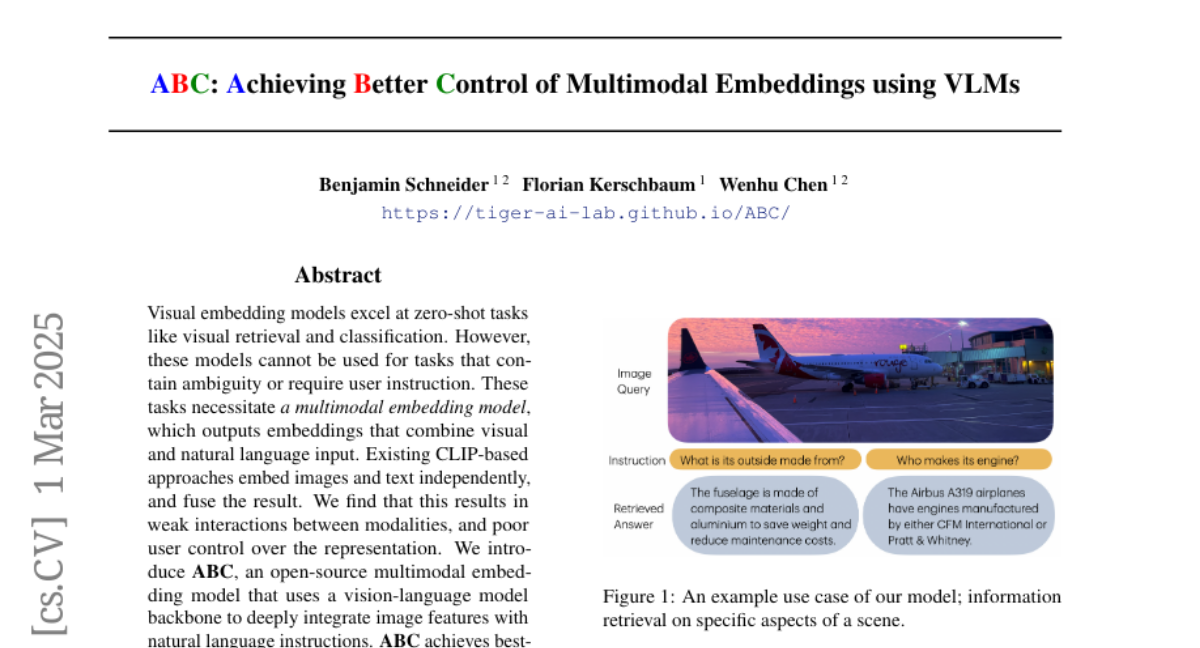

4. ABC: Achieving Better Control of Multimodal Embeddings using VLMs

🔑 Keywords: Multimodal embedding model, Vision-language integration, CLIP-based approach, Natural language control

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce ABC, an open-source multimodal embedding model designed to integrate image features with natural language instructions for improved task performance.

🛠️ Research Methods:

– Utilize a vision-language model backbone that deeply integrates image and text embeddings to enhance user control and manage ambiguous retrieval tasks.

💬 Research Conclusions:

– ABC demonstrates superior performance in tasks involving image-to-text retrieval and classification, while providing flexible natural language control, advancing the field of multimodal embeddings.

👉 Paper link: https://huggingface.co/papers/2503.00329

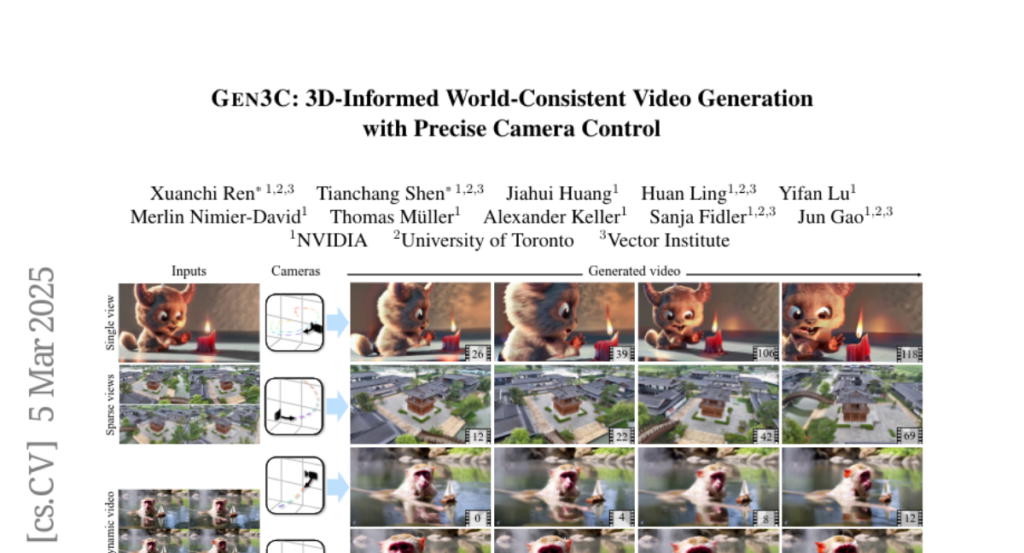

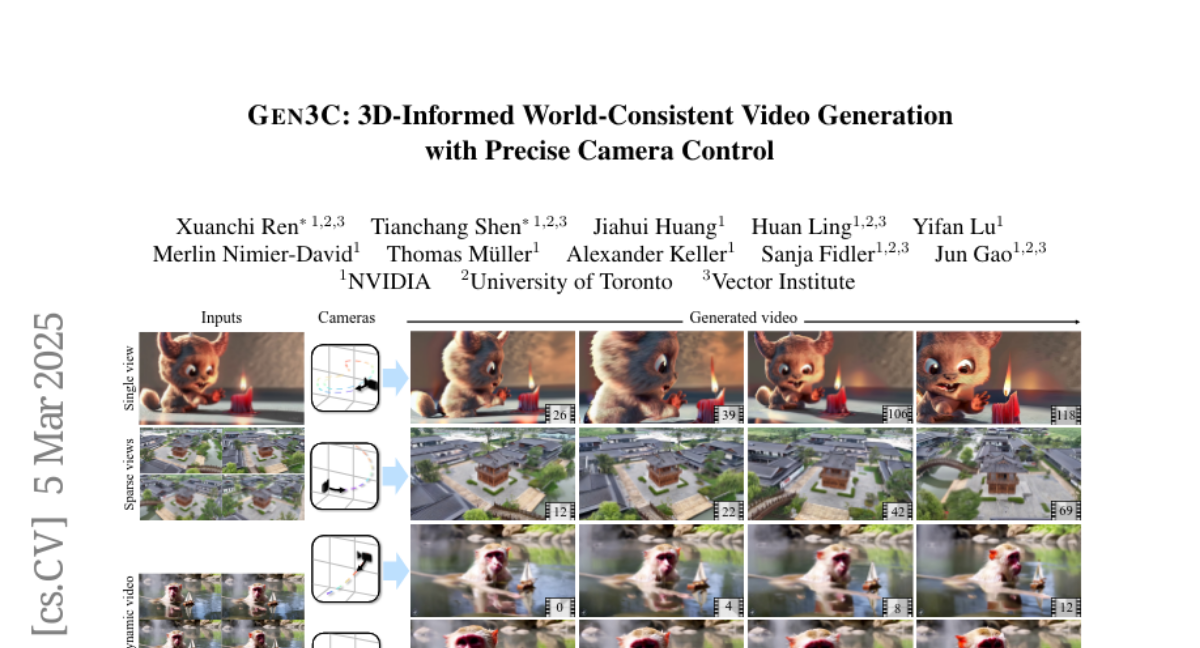

5. GEN3C: 3D-Informed World-Consistent Video Generation with Precise Camera Control

🔑 Keywords: GEN3C, Camera Control, 3D Consistency, Video Model, Sparse-view Novel View Synthesis

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces GEN3C, a generative video model aimed at achieving precise camera control and maintaining temporal 3D consistency in video generation.

🛠️ Research Methods:

– GEN3C utilizes a 3D cache comprising point clouds derived from pixel-wise depth predictions of seed images or prior frames, conditioning the generation of new frames on 2D renderings of this 3D cache with user-defined camera trajectories.

💬 Research Conclusions:

– Compared to previous models, GEN3C ensures more accurate camera control and demonstrates state-of-the-art performance in sparse-view novel view synthesis, excelling in complex scenarios such as driving scenes and monocular dynamic videos.

👉 Paper link: https://huggingface.co/papers/2503.03751

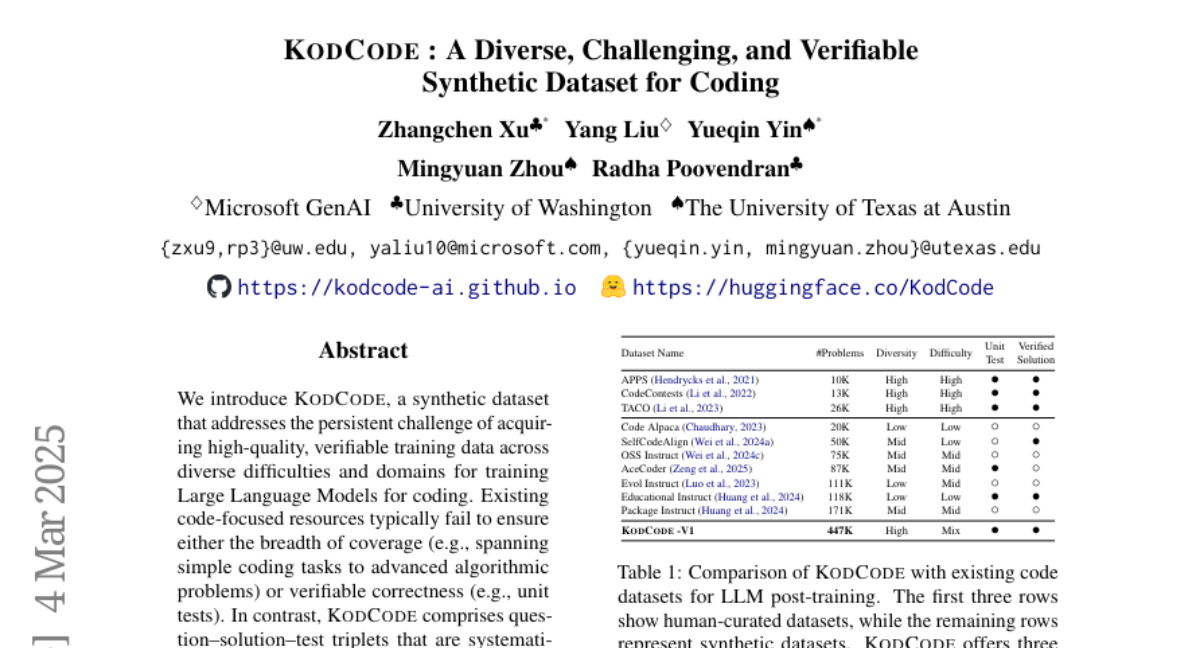

6. KodCode: A Diverse, Challenging, and Verifiable Synthetic Dataset for Coding

🔑 Keywords: KodCode, Large Language Models, coding benchmarks, self-verification, RL tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The development of KodCode, a synthetic dataset designed to provide high-quality, verifiable training data across diverse coding difficulties for Large Language Models.

🛠️ Research Methods:

– Creation of question-solution-test triplets that are validated through a self-verification procedure, with data synthesis involving question rewriting and a test-based reject sampling procedure using DeepSeek R1.

💬 Research Conclusions:

– KodCode-tuned models demonstrate state-of-the-art performance in coding benchmarks, surpassing models like Qwen2.5-Coder-32B-Instruct and DeepSeek-R1-Distill-Llama-70B.

👉 Paper link: https://huggingface.co/papers/2503.02951

7. Enhancing Abnormality Grounding for Vision Language Models with Knowledge Descriptions

🔑 Keywords: Visual Language Models, medical abnormality detection, localization, decomposed medical knowledge

💡 Category: AI in Healthcare

🌟 Research Objective:

– The study seeks to enhance the performance of Visual Language Models (VLMs) in detecting and localizing abnormalities in medical images by utilizing decomposed medical knowledge.

🛠️ Research Methods:

– The approach involves breaking down medical concepts into fundamental attributes and common visual patterns to improve alignment between textual descriptions and visual features.

💬 Research Conclusions:

– The proposed method, evaluated on the 0.23B Florence-2 base model, achieves comparable abnormality grounding performance to larger models using significantly less training data and demonstrates strong generalization capabilities with both known and unseen abnormalities.

👉 Paper link: https://huggingface.co/papers/2503.03278

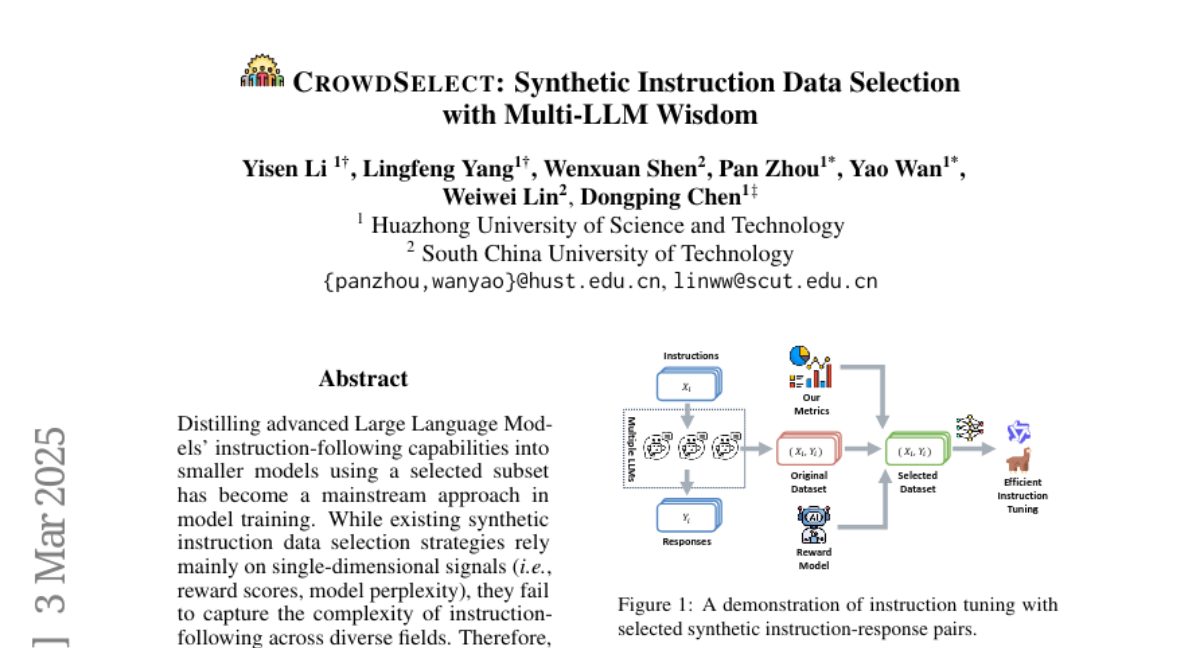

8. CrowdSelect: Synthetic Instruction Data Selection with Multi-LLM Wisdom

🔑 Keywords: Large Language Models, instruction-following, Multi-LLM, CrowdSelect

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate diverse signals for capturing comprehensive characteristics of instruction-following capabilities in models and propose new foundational metrics.

🛠️ Research Methods:

– Utilization of Multi-LLM wisdom through diverse LLM responses and reward model assessment; introduction of CrowdSelect with a clustering-based approach.

💬 Research Conclusions:

– Foundation metrics improve performance across base models; CrowdSelect achieves state-of-the-art results, enhancing performance in model fine-tuning.

👉 Paper link: https://huggingface.co/papers/2503.01836

9. Fine-Tuning Small Language Models for Domain-Specific AI: An Edge AI Perspective

🔑 Keywords: Edge AI, Shakti Small Language Models, Responsible AI, Quantization Techniques, On-device Intelligence

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to introduce Shakti Small Language Models (SLMs) designed to overcome challenges like computational demands, energy consumption, and data privacy risks when deploying large-scale language models on edge devices.

🛠️ Research Methods:

– The approach includes using efficient architectures, quantization techniques, and Responsible AI principles to enable on-device intelligence.

💬 Research Conclusions:

– Findings show that Shakti SLMs, when carefully engineered and fine-tuned, can effectively perform tasks in general and specialized domains, often exceeding expectations in real-world edge-AI scenarios.

👉 Paper link: https://huggingface.co/papers/2503.01933

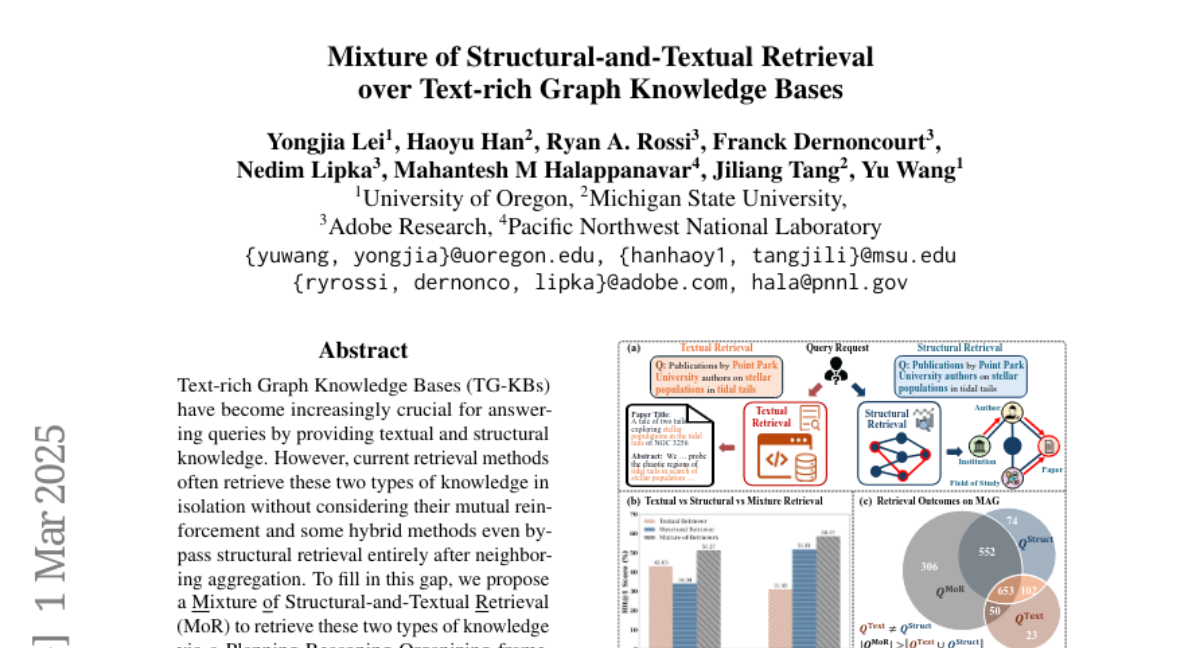

10. Mixture of Structural-and-Textual Retrieval over Text-rich Graph Knowledge Bases

🔑 Keywords: Text-rich Graph Knowledge Bases, Retrieval Methods, Mixture of Structural-and-Textual Retrieval, Planning-Reasoning-Organizing framework

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Address the gap in current retrieval methods by introducing a Mixture of Structural-and-Textual Retrieval approach to harmonize textual and structural knowledge retrieval.

🛠️ Research Methods:

– Implement a Planning-Reasoning-Organizing framework, where textual planning graphs are generated in the Planning stage, followed by structural traversal and textual matching in the Reasoning stage, and reranking of candidates based on structural trajectory in the Organizing stage.

💬 Research Conclusions:

– MoR demonstrates superior performance in harmonizing structural and textual retrieval, highlighting the uneven performance across different query logics and the advantages of integrating structural trajectories for candidate reranking.

👉 Paper link: https://huggingface.co/papers/2502.20317

11. QE4PE: Word-level Quality Estimation for Human Post-Editing

🔑 Keywords: Word-level Quality Estimation, Machine Translation, Human Post-Editing, Neural MT Model, Error Detection

💡 Category: Natural Language Processing

🌟 Research Objective:

– Investigate the impact of word-level quality estimation on post-editing of machine translations by professionals.

🛠️ Research Methods:

– Conducted a study with 42 professional post-editors, comparing four error-span highlight modalities to evaluate post-editing effort and productivity.

💬 Research Conclusions:

– Domain, language, and editors’ speed significantly influence the effectiveness of highlights, revealing discrepancies between accuracy and usability in professional workflows.

👉 Paper link: https://huggingface.co/papers/2503.03044

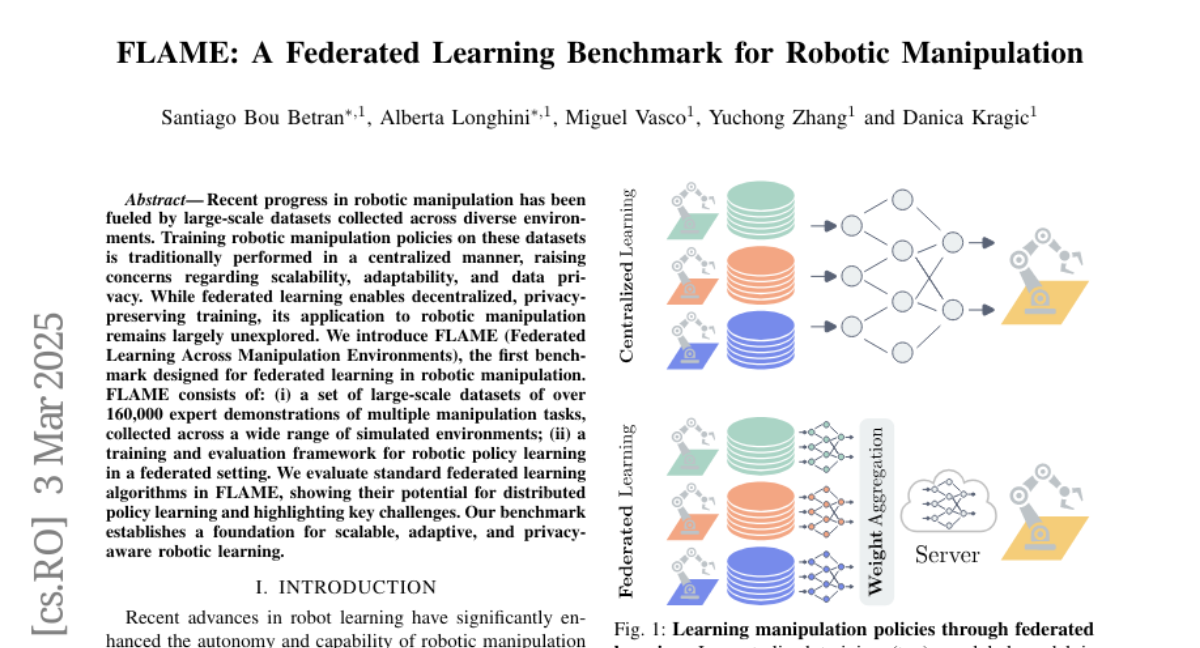

12. FLAME: A Federated Learning Benchmark for Robotic Manipulation

🔑 Keywords: Federated Learning, Robotic Manipulation, Privacy-Preserving

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce FLAME, a benchmark for federated learning in robotic manipulation aimed at addressing scalability, adaptability, and privacy concerns.

🛠️ Research Methods:

– Development of FLAME, which includes large datasets with over 160,000 expert demonstrations and a framework for policy learning in federated settings.

💬 Research Conclusions:

– Standard federated learning algorithms show potential for distributed policy learning, highlighting challenges and establishing a foundation for future research in scalable and privacy-aware robotic learning.

👉 Paper link: https://huggingface.co/papers/2503.01729

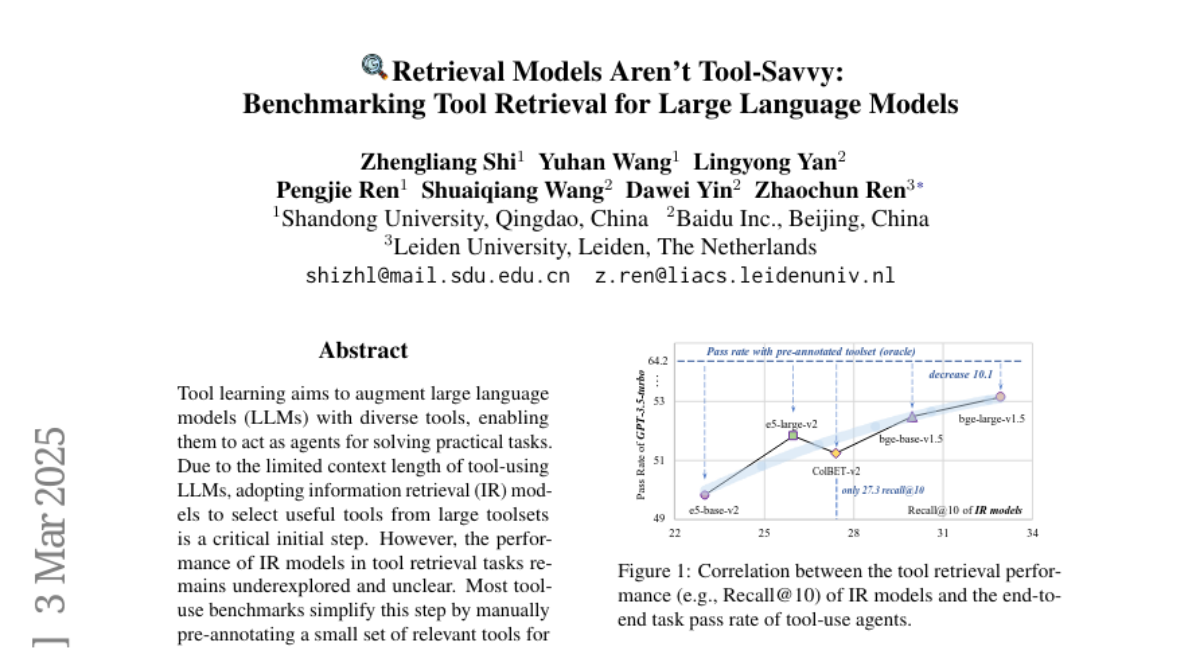

13. Retrieval Models Aren’t Tool-Savvy: Benchmarking Tool Retrieval for Large Language Models

🔑 Keywords: Tool Learning, Large Language Models, Information Retrieval, Tool Retrieval, ToolRet

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to enhance large language models (LLMs) with diverse tools by establishing robust tool retrieval mechanisms.

🛠️ Research Methods:

– Proposes ToolRet, a heterogeneous tool retrieval benchmark comprising 7.6k diverse retrieval tasks and a corpus of 43k tools, to evaluate the performance of various IR models.

💬 Research Conclusions:

– Traditional IR models perform poorly on the ToolRet benchmark, leading to decreased task success rates of tool-using LLMs.

– A large-scale training dataset with over 200k instances significantly improves IR models’ tool retrieval capabilities.

👉 Paper link: https://huggingface.co/papers/2503.01763

14. Exploring Rewriting Approaches for Different Conversational Tasks

🔑 Keywords: Conversational Assistants, Query Rewriting, Generative Tasks, Fusion Approach, Multimodal Generative Task

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective was to systematically investigate two different approaches—rewriting and fusion—on diverse generative tasks within conversational assistants.

🛠️ Research Methods:

– Two approaches, rewriting and fusion, were examined on text-to-text generation and multimodal generative tasks, where input is text and output includes visualizations or data tables.

💬 Research Conclusions:

– The effectiveness of query rewriting or fusion heavily relies on the specific use case. Query rewriting is optimal for conversational question-answering, while query fusion shows better performance in data analysis tasks involving visualization and data table generation, regardless of conversation length.

👉 Paper link: https://huggingface.co/papers/2502.18860

15. Remasking Discrete Diffusion Models with Inference-Time Scaling

🔑 Keywords: Remasking Diffusion Model, Discrete Diffusion, Inference-time Compute Scaling, Natural Language Outputs, Molecule Design

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to enhance the capabilities of masked discrete diffusion models by introducing the Remasking Diffusion Model (ReMDM) sampler to address the limitation of lack of iterative refinement.

🛠️ Research Methods:

– ReMDM is a method applied to pretrained masked diffusion models through a custom remasking backward process to enable computing scaling during inference.

💬 Research Conclusions:

– ReMDM improves the quality of natural language outputs to compete with autoregressive models and enhances sample quality in image and molecule design domains by facilitating diffusion guidance and controllability.

👉 Paper link: https://huggingface.co/papers/2503.00307

16. Benchmarking Large Language Models for Multi-Language Software Vulnerability Detection

🔑 Keywords: Generative AI, Large Language Models (LLMs), Software Vulnerability Detection (SVD), Prompt Engineering, Software Security

💡 Category: Generative Models

🌟 Research Objective:

– To examine the capabilities of large language models in software vulnerability detection and bridge the knowledge gap regarding their effectiveness across different programming languages.

🛠️ Research Methods:

– A comprehensive empirical study using a dataset of vulnerable functions in Python, Java, and JavaScript, evaluating five open-source LLMs with approaches like prompt engineering, instruction tuning, and sequence classification fine-tuning.

– Comparison with five fine-tuned small language models and two open-source static application security testing tools.

– Exploration of data and model perspectives, such as retraining on balanced datasets and using ensemble learning methods.

💬 Research Conclusions:

– Despite advancements, software vulnerability detection remains challenging for large language models.

– The study provides insights into harnessing generative AI to enhance software security and suggests directions for improving LLM performance in this domain.

👉 Paper link: https://huggingface.co/papers/2503.01449

17. Interact, Instruct to Improve: A LLM-Driven Parallel Actor-Reasoner Framework for Enhancing Autonomous Vehicle Interactions

🔑 Keywords: Autonomous Vehicles, Human-Machine Interface, Large Language Models, Real-time Decision-making, Safety

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The primary objective is to enhance the interaction capabilities of Autonomous Vehicles (AVs) with Human-driven Vehicles (HVs) by developing a framework for explicit bidirectional communication.

🛠️ Research Methods:

– Introduction of a parallel Actor-Reasoner framework that incorporates a memory database called the Actor to facilitate interactions between language model-driven Reasoner and simulated HVs.

– Implementation of a memory partition module and a two-layer memory retrieval module to improve handling of heterogeneous HVs.

💬 Research Conclusions:

– The Actor-Reasoner framework significantly enhances safety and efficiency in AV-HV interactions and is effective across multiple scenarios, as demonstrated by ablation studies.

– The combination of eHMI information and action solutions confirms the framework’s effectiveness.

👉 Paper link: https://huggingface.co/papers/2503.00502

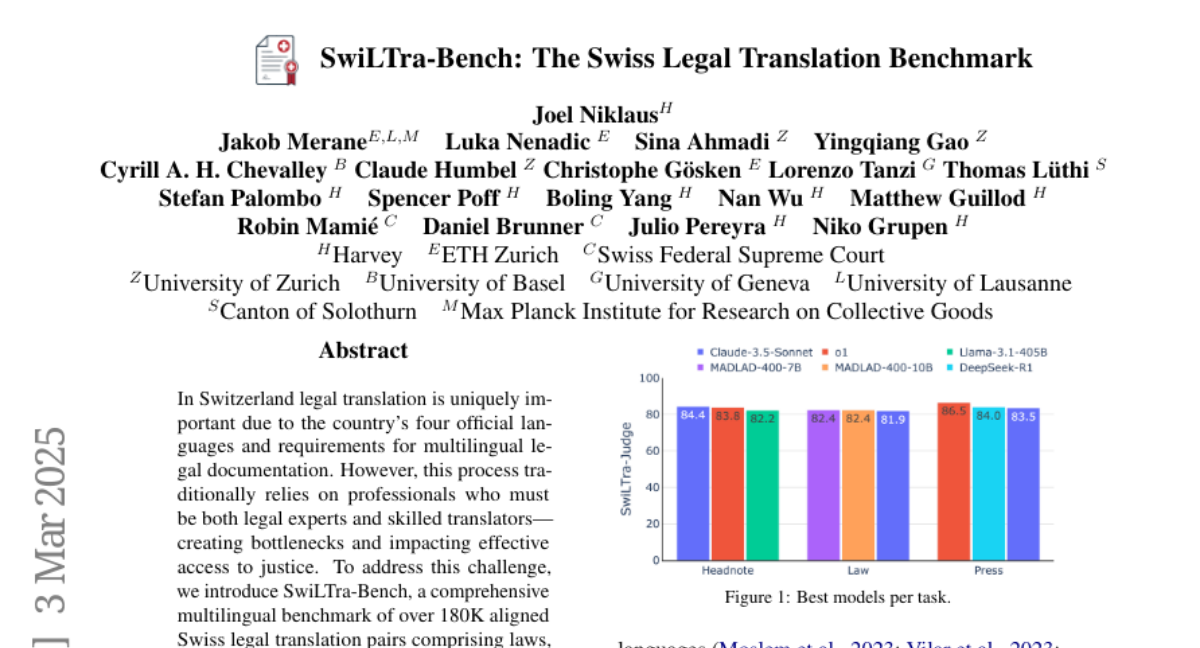

18. SwiLTra-Bench: The Swiss Legal Translation Benchmark

🔑 Keywords: Multilingual legal documentation, LLM-based translation systems, SwiLTra-Bench, SwiLTra-Judge

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to address bottlenecks in legal translation in Switzerland by introducing SwiLTra-Bench, a benchmark designed to evaluate multilingual LLM-based translation systems for improved legal documentation translation.

🛠️ Research Methods:

– The researchers conducted a systematic evaluation of over 180K aligned Swiss legal translation pairs and compared the performance of frontier models and specialized translation systems in translating legal documents.

💬 Research Conclusions:

– It was found that frontier models showed superior performance across document types, particularly when compared to specialized systems that excelled in translating laws but struggled with headnotes. Additionally, the fine-tuning of open SLMs improved translation quality but still fell short of zero-shot prompted models like Claude-3.5-Sonnet. SwiLTra-Judge was introduced to better align machine evaluations with human expert assessments.

👉 Paper link: https://huggingface.co/papers/2503.01372

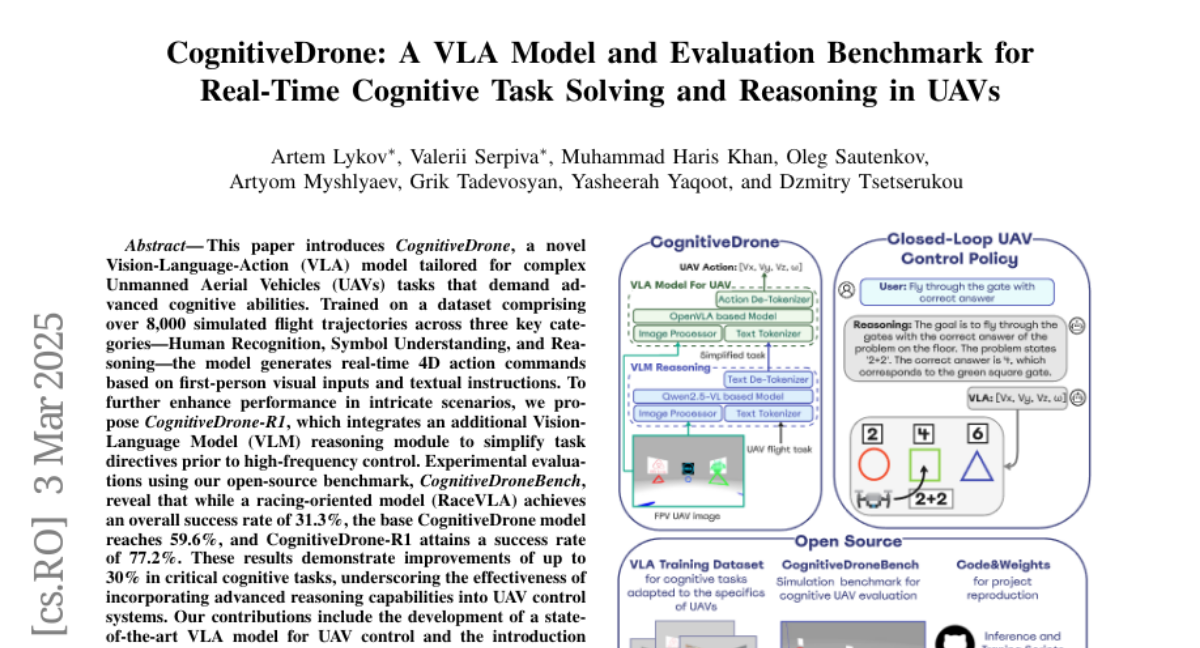

19. CognitiveDrone: A VLA Model and Evaluation Benchmark for Real-Time Cognitive Task Solving and Reasoning in UAVs

🔑 Keywords: CognitiveDrone, Vision-Language-Action, UAVs, Cognitive Tasks, Advanced Reasoning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop a novel VLA model, CognitiveDrone, tailored for complex UAV tasks requiring advanced cognitive abilities.

🛠️ Research Methods:

– Trained on over 8,000 simulated flight trajectories in categories like Human Recognition, Symbol Understanding, and Reasoning. Introduced CognitiveDrone-R1 with an enhanced reasoning module.

💬 Research Conclusions:

– CognitiveDrone-R1 achieved a 77.2% success rate, demonstrating up to a 30% improvement in cognitive tasks. Introduced a benchmark, CognitiveDroneBench, for assessing cognitive tasks in drones.

👉 Paper link: https://huggingface.co/papers/2503.01378

20. Reliable and Efficient Multi-Agent Coordination via Graph Neural Network Variational Autoencoders

🔑 Keywords: Multi-agent coordination, Graph Neural Network Variational Autoencoders, centralized optimization, mixed-integer linear program

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The study aims to enhance multi-agent coordination in shared spaces for multi-robot navigation, particularly in high-density traffic areas where local coordination may fail.

🛠️ Research Methods:

– Utilizes Graph Neural Network Variational Autoencoders (GNN-VAE) to address the coordination problem as a graph problem and gathers ground truth data using a Mixed-Integer Linear Program (MILP) solver.

💬 Research Conclusions:

– The proposed GNN-VAE framework efficiently generates solutions that respect coordination constraints, achieving high-quality outcomes even for large-scale problems, significantly outperforming traditional centralized optimization methods.

👉 Paper link: https://huggingface.co/papers/2503.02954

21. Diverse Controllable Diffusion Policy with Signal Temporal Logic

🔑 Keywords: Autonomous Systems, Signal Temporal Logic, Diffusion Models, Rule-compliant Behaviors

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The study aims to generate controllable, diverse, and rule-aware behaviors for autonomous systems, addressing limitations in existing driving simulators.

🛠️ Research Methods:

– The research utilizes Signal Temporal Logic (STL) and Diffusion Models to synthesize diverse synthetic data, employing trajectory optimization and learning a rectified diffusion policy.

💬 Research Conclusions:

– The approach achieves the most diverse and rule-compliant trajectories compared to other methods, significantly improving runtime efficiency and performance in closed-loop testing scenarios. The study offers tools and datasets for further research.

👉 Paper link: https://huggingface.co/papers/2503.02924