AI Native Daily Paper Digest – 20250328

1. Video-R1: Reinforcing Video Reasoning in MLLMs

🔑 Keywords: Video Reasoning, T-GRPO Algorithm, Multi-Modal Large Language Models, Temporal Modeling, Video-R1

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to explore the R1 paradigm for eliciting video reasoning within multimodal large language models (MLLMs).

🛠️ Research Methods:

– Introduced the T-GRPO algorithm to incorporate temporal information in video reasoning.

– Developed two datasets, Video-R1-COT-165k and Video-R1-260k, for leveraging both image and video data.

💬 Research Conclusions:

– Video-R1 demonstrates significant improvement in video reasoning benchmarks and surpasses the commercial proprietary model GPT-4o in video spatial reasoning accuracy.

👉 Paper link: https://huggingface.co/papers/2503.21776

2. UI-R1: Enhancing Action Prediction of GUI Agents by Reinforcement Learning

🔑 Keywords: reinforcement learning, rule-based rewards, MLLMs, GUI action prediction, Group Relative Policy Optimization

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to explore the enhancement of reasoning capabilities in multimodal large language models (MLLMs) specifically for graphic user interface (GUI) action prediction tasks using rule-based reinforcement learning.

🛠️ Research Methods:

– A dataset of 136 challenging tasks is curated, and a unified rule-based action reward is introduced to optimize MLLMs using policy-based algorithms like Group Relative Policy Optimization (GRPO).

💬 Research Conclusions:

– The proposed model, UI-R1-3B, shows significant improvement in both in-domain and out-of-domain tasks, demonstrating the effectiveness of rule-based reinforcement learning in advancing GUI understanding and control.

👉 Paper link: https://huggingface.co/papers/2503.21620

3. Challenging the Boundaries of Reasoning: An Olympiad-Level Math Benchmark for Large Language Models

🔑 Keywords: Large reasoning models, mathematical reasoning, evaluation frameworks, OlymMATH, bilingual assessment

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Introduce OlymMATH, a novel Olympiad-level mathematical benchmark to evaluate the complex reasoning capabilities of large language models (LLMs).

🛠️ Research Methods:

– OlymMATH includes 200 problems in English and Chinese, organized into two difficulty tiers, with empirical testing on models like DeepSeek-R1 and OpenAI’s o3-mini.

💬 Research Conclusions:

– OlymMATH presents a significant challenge for state-of-the-art models, highlighting their limited accuracy on hard problems and offering a bilingual assessment to address existing evaluation gaps.

👉 Paper link: https://huggingface.co/papers/2503.21380

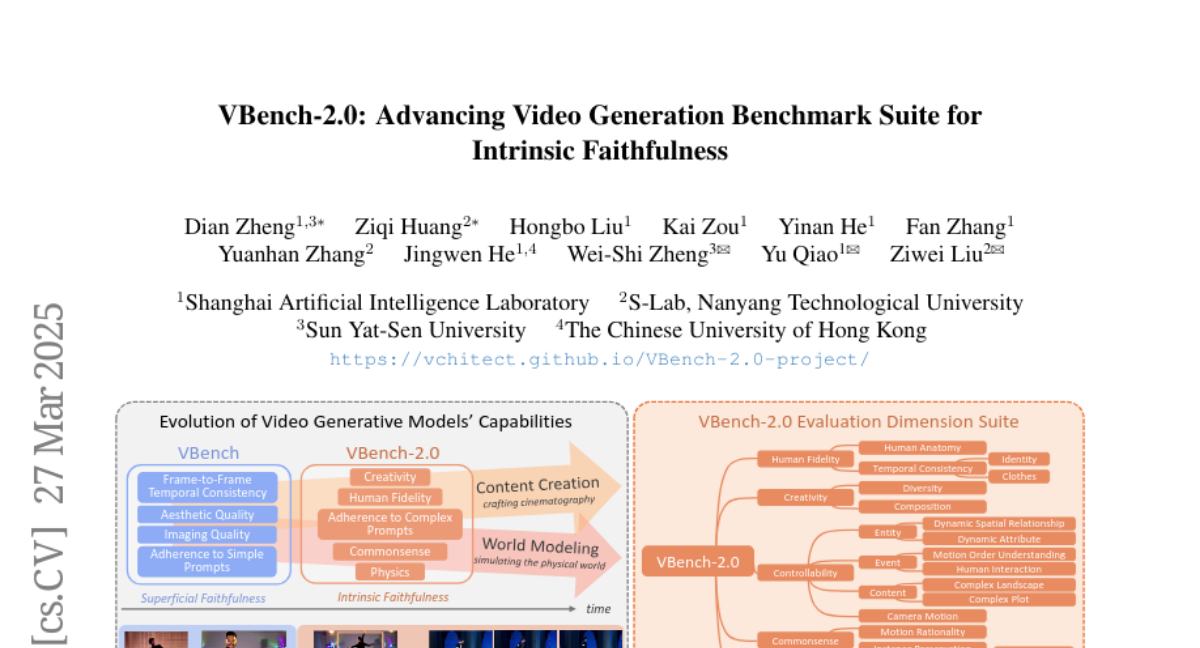

4. VBench-2.0: Advancing Video Generation Benchmark Suite for Intrinsic Faithfulness

🔑 Keywords: Video Generation, VBench-2.0, Intrinsic Faithfulness, AI-assisted Filmmaking, Simulated World Modeling

💡 Category: Generative Models

🌟 Research Objective:

– To advance the state of video generative models by enhancing their intrinsic faithfulness, ensuring adherence to real-world principles like physical laws and commonsense reasoning.

🛠️ Research Methods:

– Development of VBench-2.0, a new benchmark that evaluates models across five dimensions (Human Fidelity, Controllability, Creativity, Physics, and Commonsense) using state-of-the-art VLMs and LLMs as well as specialized methods like anomaly detection.

💬 Research Conclusions:

– VBench-2.0 aims to set a new standard for video generative models by pushing beyond superficial faithfulness towards true intrinsic faithfulness, with potential applications in AI-assisted filmmaking and simulated world modeling.

👉 Paper link: https://huggingface.co/papers/2503.21755

5. Large Language Model Agent: A Survey on Methodology, Applications and Challenges

🔑 Keywords: Large Language Models, Goal-driven Behaviors, Dynamic Adaptation, Methodology-centered Taxonomy, Artificial General Intelligence

💡 Category: Foundations of AI

🌟 Research Objective:

– To systematically deconstruct Large Language Model (LLM) agents and understand their role as a pathway toward Artificial General Intelligence through a methodology-centered taxonomy.

🛠️ Research Methods:

– The study uses a taxonomy-based analysis to link architectural foundations, collaboration mechanisms, and evolutionary pathways of LLM agents.

💬 Research Conclusions:

– The survey provides a unified architectural perspective, examines construction, collaboration, and evolution of agents, and offers a structured taxonomy to aid researchers. It also addresses evaluation methodologies, tool applications, and practical challenges in diverse domains.

👉 Paper link: https://huggingface.co/papers/2503.21460

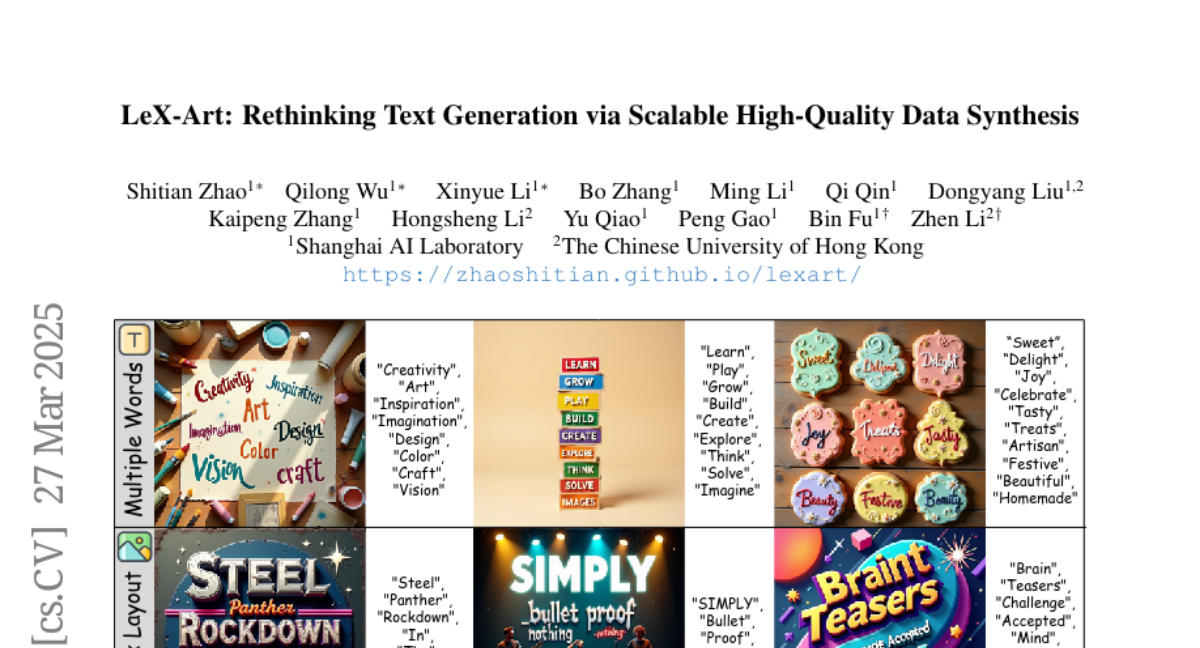

6. LeX-Art: Rethinking Text Generation via Scalable High-Quality Data Synthesis

🔑 Keywords: Text-Image Synthesis, LeX-Art, LeX-Enhancer, State-of-the-art, Visual Text Generation

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces LeX-Art, aiming to bridge the gap between prompt expressiveness and text rendering fidelity in text-image synthesis.

🛠️ Research Methods:

– Utilizes a data-centric paradigm to create a high-quality data synthesis pipeline, constructs the LeX-10K dataset, develops LeX-Enhancer for prompt enrichment, and trains two text-to-image models, LeX-FLUX and LeX-Lumina.

– Introduces LeX-Bench for evaluating visual text generation fidelity, aesthetics, and alignment, along with PNED for robust text accuracy evaluation.

💬 Research Conclusions:

– LeX-Lumina achieves a significant 79.81% PNED gain on CreateBench, with LeX-FLUX outperforming existing models in color, positional, and font accuracy.

– All resources including code, models, datasets, and a demo are publicly available.

👉 Paper link: https://huggingface.co/papers/2503.21749

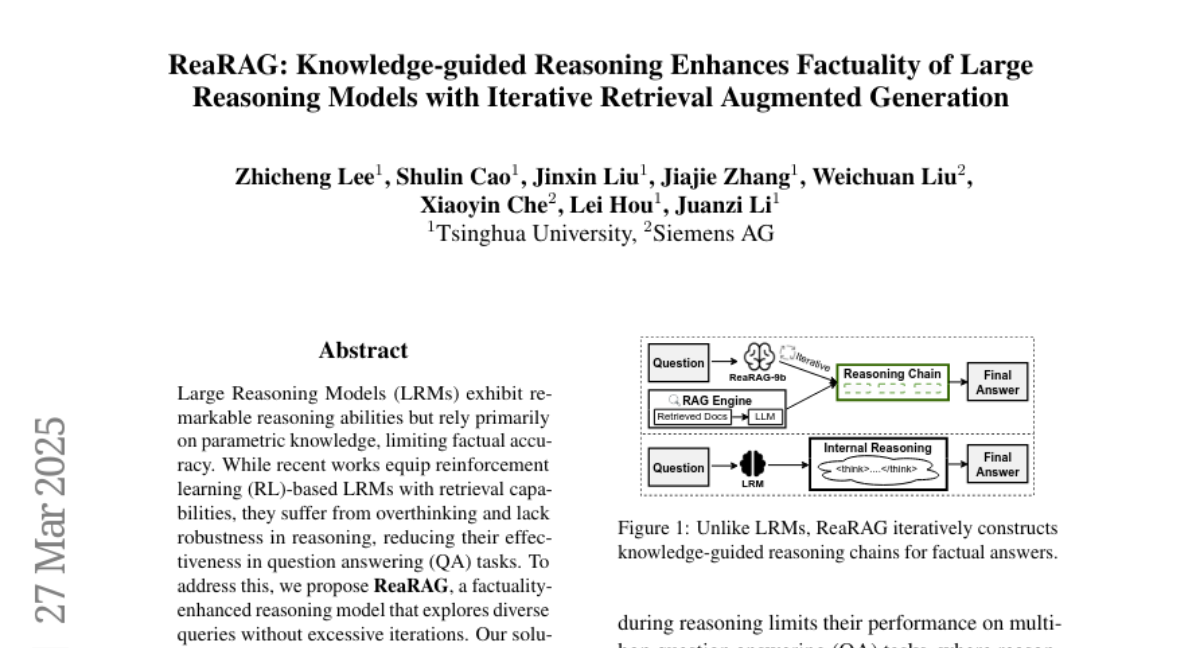

7. ReaRAG: Knowledge-guided Reasoning Enhances Factuality of Large Reasoning Models with Iterative Retrieval Augmented Generation

🔑 Keywords: Large Reasoning Models, reinforcement learning, ReaRAG, multi-hop QA, Retrieval-Augmented Generation

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance the factual accuracy and robustness of Large Reasoning Models (LRMs) in question answering tasks.

🛠️ Research Methods:

– Implemented ReaRAG, a model integrating retrieval capabilities with a novel data construction framework, focusing on limiting reasoning chain length and deliberate thinking.

💬 Research Conclusions:

– ReaRAG demonstrates superior performance over existing baselines in multi-hop QA by effectively integrating robust reasoning, improving the model’s factuality and reflective ability.

👉 Paper link: https://huggingface.co/papers/2503.21729

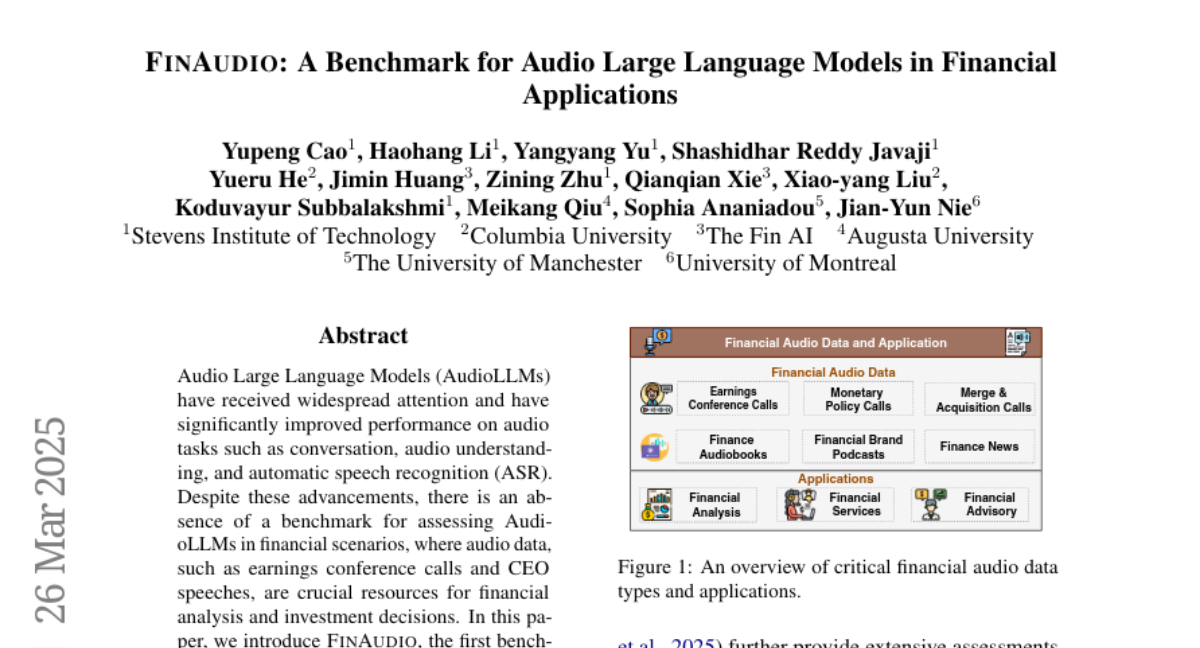

8. FinAudio: A Benchmark for Audio Large Language Models in Financial Applications

🔑 Keywords: AudioLLMs, Benchmark, Financial Scenarios, Automatic Speech Recognition, Financial Analysis

💡 Category: AI in Finance

🌟 Research Objective:

– This study introduces FinAudio, the first benchmark designed to evaluate the capacity of AudioLLMs specifically in the financial domain.

🛠️ Research Methods:

– The research defines three tasks based on financial scenarios: ASR for short and long financial audio, and summarization of long financial audio. It curates short and long audio datasets and develops a novel dataset for financial audio summarization.

💬 Research Conclusions:

– The evaluation of seven prevalent AudioLLMs on FinAudio reveals limitations in dealing with financial scenarios and provides insights for advancements in the field. All datasets and codes used are to be released for further research.

👉 Paper link: https://huggingface.co/papers/2503.20990

9. ResearchBench: Benchmarking LLMs in Scientific Discovery via Inspiration-Based Task Decomposition

🔑 Keywords: Large Language Models, Scientific Discovery, Automated Framework, Hypotheses, Inspiration Retrieval

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce a large-scale benchmark for evaluating LLMs’ capabilities in scientific discovery.

🛠️ Research Methods:

– Development of an automated framework to extract critical components from scientific papers, validated across 12 disciplines.

💬 Research Conclusions:

– LLMs perform well in retrieving novel knowledge associations, suggesting their potential as “research hypothesis mines” for generating innovative hypotheses with minimal human intervention.

👉 Paper link: https://huggingface.co/papers/2503.21248

10. Embodied-Reasoner: Synergizing Visual Search, Reasoning, and Action for Embodied Interactive Tasks

🔑 Keywords: Embodied Reasoner, spatial understanding, temporal reasoning, imitation learning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To explore the effectiveness of deep thinking models in embodied domains requiring continuous environmental interaction.

🛠️ Research Methods:

– Development of a three-stage training pipeline incorporating imitation learning, self-exploration via rejection sampling, and self-correction through reflection tuning.

💬 Research Conclusions:

– The Embodied Reasoner model significantly outperforms existing visual reasoning models and demonstrates fewer logical inconsistencies in complex tasks.

👉 Paper link: https://huggingface.co/papers/2503.21696

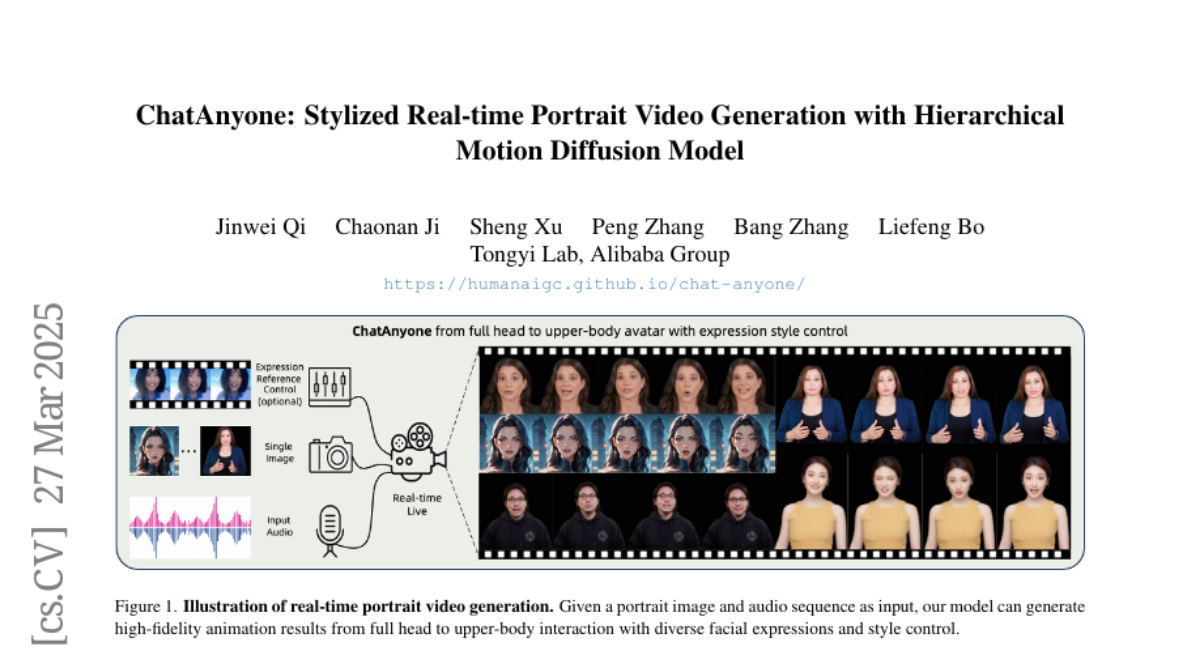

11. ChatAnyone: Stylized Real-time Portrait Video Generation with Hierarchical Motion Diffusion Model

🔑 Keywords: Real-time interactive video-chat, Stylized real-time portrait video generation, Hierarchical motion diffusion models, Synchronization between head and body movements, Face refinement

💡 Category: Generative Models

🌟 Research Objective:

– Introduce a novel framework for stylized real-time portrait video generation enhancing beyond head-only to upper-body interaction in video chats.

🛠️ Research Methods:

– Utilizes hierarchical motion diffusion models to generate diverse facial expressions synchronized with head and body movements and incorporates explicit hand control signals for upper-body dynamics.

💬 Research Conclusions:

– Demonstrates the capability to produce expressive and natural upper-body portrait videos in real-time, with resolutions up to 512×768 at 30fps on a 4090 GPU.

👉 Paper link: https://huggingface.co/papers/2503.21144

12. Lumina-Image 2.0: A Unified and Efficient Image Generative Framework

🔑 Keywords: Text-to-Image, Lumina-Image 2.0, Unified Architecture, Cross-Modal Interactions, Efficiency

💡 Category: Generative Models

🌟 Research Objective:

– Introduction of Lumina-Image 2.0, aiming to enhance text-to-image generation by improving previous models like Lumina-Next through unification and efficiency principles.

🛠️ Research Methods:

– Utilization of a unified architecture for text and image tokens, a specialized captioning system called Unified Captioner (UniCap), implementation of multi-stage progressive training, and inference acceleration techniques.

💬 Research Conclusions:

– Lumina-Image 2.0 demonstrates significant improvements over existing models, achieving substantial efficiency and scalability with strong performance on benchmarks while utilizing only 2.6B parameters. Training details and models are publicly available.

👉 Paper link: https://huggingface.co/papers/2503.21758

13. Optimal Stepsize for Diffusion Sampling

🔑 Keywords: Diffusion models, generation quality, computational intensive sampling, dynamic programming framework, Optimal Stepsize Distillation

💡 Category: Generative Models

🌟 Research Objective:

– To design an optimal stepsize schedule for improving computational efficiency in diffusion models by using a dynamic programming framework.

🛠️ Research Methods:

– Implementation of a dynamic programming framework called Optimal Stepsize Distillation to extract optimal schedules by distilling knowledge from reference trajectories.

💬 Research Conclusions:

– Achieved a 10x acceleration in text-to-image generation while preserving 99.4% performance on the GenEval benchmark, demonstrating robustness across different architectures and systems.

👉 Paper link: https://huggingface.co/papers/2503.21774

14. Exploring the Evolution of Physics Cognition in Video Generation: A Survey

🔑 Keywords: video generation, diffusion models, physical cognition, cognitive science, interpretable

💡 Category: Generative Models

🌟 Research Objective:

– To provide a comprehensive summary of architecture designs and applications in video generation with physical cognition, from a cognitive science perspective.

🛠️ Research Methods:

– The paper organizes and discusses the evolutionary process of physical cognition in video generation, proposing a three-tier taxonomy: basic schema perception, passive cognition of physical knowledge, and active cognition for world simulation.

💬 Research Conclusions:

– The survey emphasizes the key challenges and potential future pathways for research, aiming to develop interpretable, controllable, and physically consistent video generation paradigms, moving beyond “visual mimicry” to “human-like physical comprehension”.

👉 Paper link: https://huggingface.co/papers/2503.21765

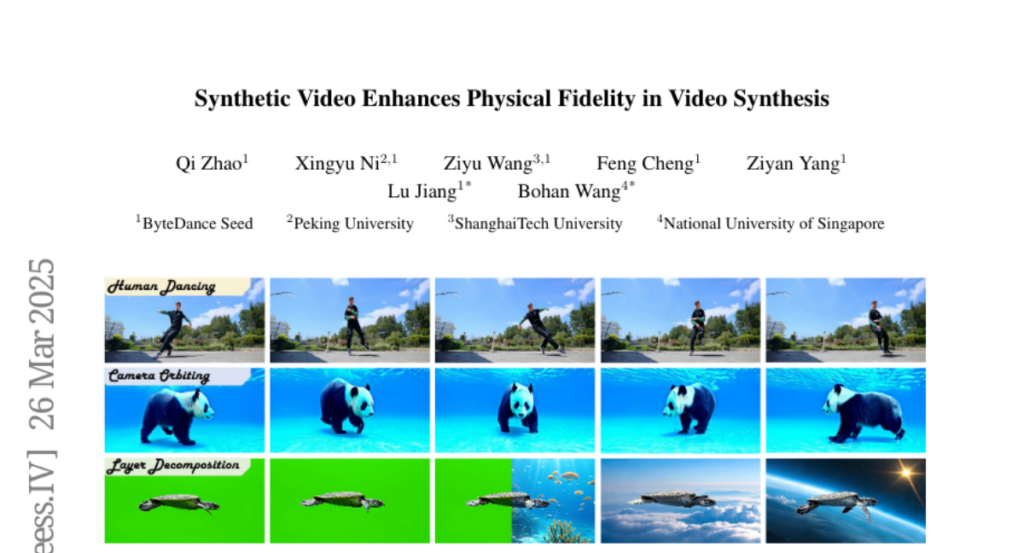

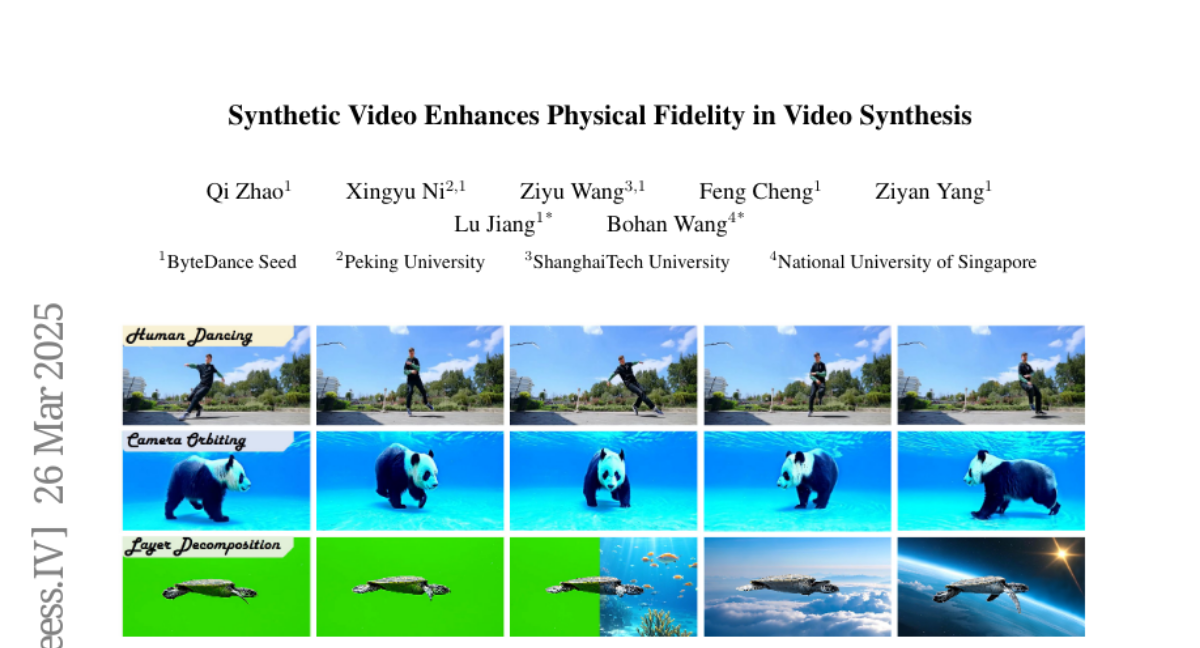

15. Synthetic Video Enhances Physical Fidelity in Video Synthesis

🔑 Keywords: physical fidelity, synthetic videos, computer graphics pipelines, video generation models, unwanted artifacts

💡 Category: Generative Models

🌟 Research Objective:

– The study investigates the enhancement of physical fidelity in video generation models by utilizing synthetic videos from computer graphics pipelines.

🛠️ Research Methods:

– The research involves curating and integrating synthetic data, and introducing a method to transfer physical realism to models to reduce unwanted artifacts.

💬 Research Conclusions:

– The experiments demonstrate that synthetic video can enhance physical fidelity in video synthesis, although the model still lacks deep understanding of physics.

👉 Paper link: https://huggingface.co/papers/2503.20822

16. ZJUKLAB at SemEval-2025 Task 4: Unlearning via Model Merging

🔑 Keywords: Unlearning, Large Language Models, Model Merging, TIES-Merging, ROUGE-based metrics

💡 Category: Natural Language Processing

🌟 Research Objective:

– To selectively erase sensitive knowledge from large language models while avoiding over-forgetting and under-forgetting issues.

🛠️ Research Methods:

– Utilized an unlearning system leveraging Model Merging, specifically TIES-Merging, to combine two specialized models into a balanced unlearned model.

💬 Research Conclusions:

– Achieved competitive results, ranking second among 26 teams with specific scores, and highlighted that current evaluation metrics are insufficient, necessitating a rethinking of unlearning objectives and evaluation methodologies.

👉 Paper link: https://huggingface.co/papers/2503.21088

17. Feature4X: Bridging Any Monocular Video to 4D Agentic AI with Versatile Gaussian Feature Fields

🔑 Keywords: Feature4X, 4D feature field, spatiotemporally aware, agentic AI applications, Gaussian Splatting

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce Feature4X, a framework to extend 2D vision foundation models into the 4D realm using monocular video inputs.

🛠️ Research Methods:

– Utilization of a dynamic optimization strategy to unify model capabilities into a single representation.

– Employing Gaussian Splatting to distill features from video foundation models into an explicit 4D feature field.

💬 Research Conclusions:

– Demonstrated success in open-vocabulary segmentation, language-guided editing, and free-form visual question answering, enhancing immersive 4D scene interactions.

👉 Paper link: https://huggingface.co/papers/2503.20776

18. LLPut: Investigating Large Language Models for Bug Report-Based Input Generation

🔑 Keywords: Failure-inducing inputs, Natural Language Processing, generative LLMs, bug diagnosis

💡 Category: Generative Models

🌟 Research Objective:

– To evaluate how effectively generative Large Language Models (LLMs) can extract failure-inducing inputs from bug reports.

🛠️ Research Methods:

– Empirical evaluation using three open-source generative LLMs (LLaMA, Qwen, Qwen-Coder) on a dataset of 206 bug reports to assess their accuracy and effectiveness.

💬 Research Conclusions:

– The study provides insights into the capabilities and limitations of generative LLMs in automated bug diagnosis.

👉 Paper link: https://huggingface.co/papers/2503.20578

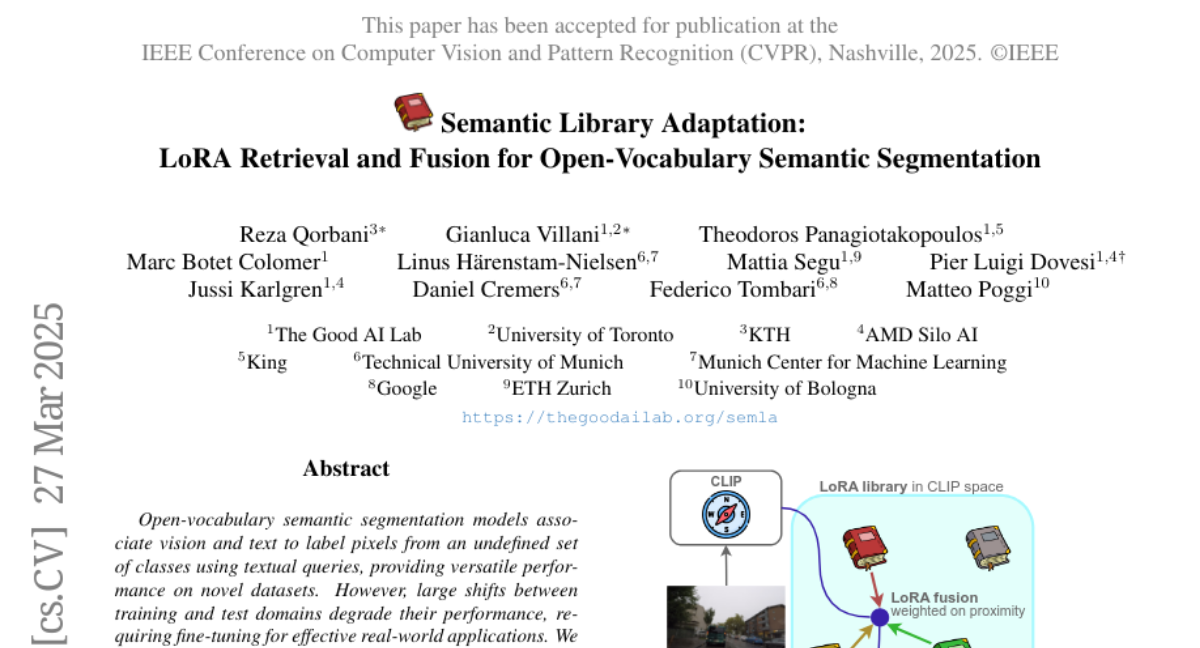

19. Semantic Library Adaptation: LoRA Retrieval and Fusion for Open-Vocabulary Semantic Segmentation

🔑 Keywords: Open-vocabulary semantic segmentation, Textual queries, Domain adaptation, CLIP embeddings, Data privacy

💡 Category: Computer Vision

🌟 Research Objective:

– To introduce Semantic Library Adaptation (SemLA), a framework for training-free, test-time domain adaptation that enhances performance in open-vocabulary semantic segmentation.

🛠️ Research Methods:

– Utilizes a library of LoRA-based adapters indexed with CLIP embeddings to dynamically merge relevant adapters, constructing an ad-hoc model tailored to each specific input without additional training.

💬 Research Conclusions:

– SemLA shows superior adaptability and performance in domain adaptation for open-vocabulary semantic segmentation, efficiently scaling, enhancing explainability, and protecting data privacy.

👉 Paper link: https://huggingface.co/papers/2503.21780

20. Unified Multimodal Discrete Diffusion

🔑 Keywords: Multimodal Generative Models, Discrete Diffusion Models, Autoregressive Approaches, AI Native

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To explore discrete diffusion models as a unified generative framework in the joint text and image domain.

🛠️ Research Methods:

– Comparison of the newly proposed Unified Multimodal Discrete Diffusion (UniDisc) model against multimodal autoregressive models through scaling analysis.

💬 Research Conclusions:

– UniDisc demonstrates superior performance and efficiency in inference-time compute, enhanced controllability, editability, and flexible balancing between inference time and generation quality compared to multimodal autoregressive models.

👉 Paper link: https://huggingface.co/papers/2503.20853

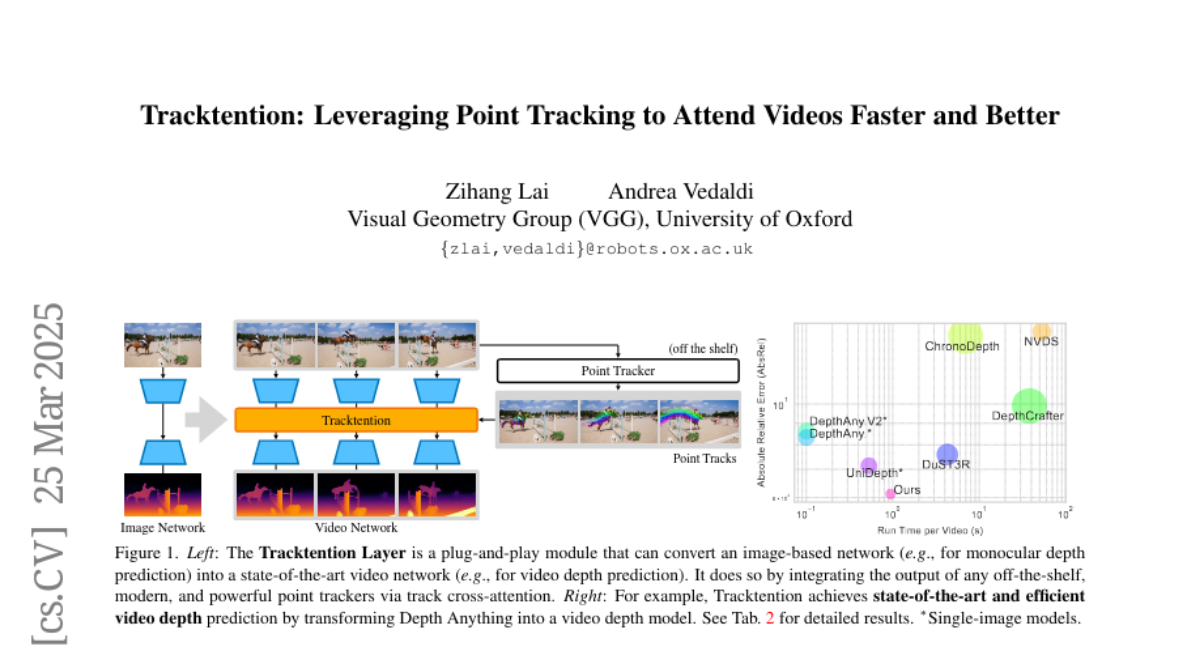

21. Tracktention: Leveraging Point Tracking to Attend Videos Faster and Better

🔑 Keywords: Temporal Consistency, Tracktention Layer, Video Depth Prediction, Video Colorization, Vision Transformers

💡 Category: Computer Vision

🌟 Research Objective:

– To enhance Temporal Consistency in video prediction by addressing the challenges of significant object motion and long-range temporal dependencies.

🛠️ Research Methods:

– The introduction of the Tracktention Layer, a new architectural component that uses point tracks to improve temporal alignment and manage complex object motions.

💬 Research Conclusions:

– Models with the Tracktention Layer achieve superior temporal consistency and can outperform video-native models in tasks such as video depth prediction and video colorization.

👉 Paper link: https://huggingface.co/papers/2503.19904

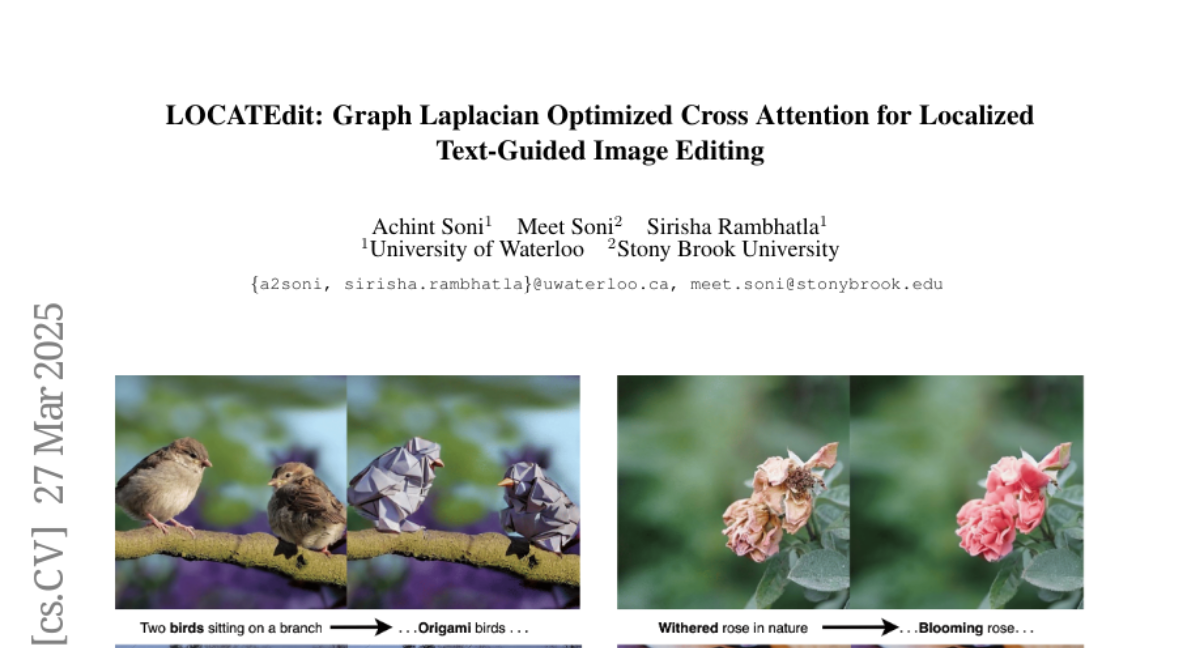

22. LOCATEdit: Graph Laplacian Optimized Cross Attention for Localized Text-Guided Image Editing

🔑 Keywords: Text-guided Image Editing, Cross-attention Maps, Diffusion Models, Self-attention, State-of-the-art Performance

💡 Category: Computer Vision

🌟 Research Objective:

– To enhance the precision and spatial consistency of text-guided image editing while maintaining the image’s structural integrity.

🛠️ Research Methods:

– Development of LOCATEdit, a tool that enhances cross-attention maps using a graph-based approach with self-attention-derived patch relationships.

💬 Research Conclusions:

– LOCATEdit significantly outperforms existing methods in maintaining coherence and reducing artifacts, achieving state-of-the-art performance on various editing tasks as demonstrated on PIE-Bench.

👉 Paper link: https://huggingface.co/papers/2503.21541

23.