AI Native Daily Paper Digest – 20250421

1. Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model?

🔑 Keywords: Reinforcement Learning, Verifiable Rewards, reasoning capabilities, base models, distillation

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Critically examine the assumption that Reinforcement Learning with Verifiable Rewards (RLVR) fundamentally improves the reasoning capabilities of Large Language Models (LLMs).

🛠️ Research Methods:

– Utilize the pass@k metric with large k values to analyze the reasoning capability of models across various model families and benchmarks.

💬 Research Conclusions:

– RL-trained models outperform base models at smaller k values, but base models achieve similar or higher pass@k scores at larger k values.

– RLVR tends to narrow the model’s reasoning capability by biasing output distribution towards known paths.

– Distillation can introduce genuinely new knowledge, contrasting RLVR’s limitations.

– The study calls for a reevaluation of RL training’s role in advancing reasoning abilities in LLMs.

👉 Paper link: https://huggingface.co/papers/2504.13837

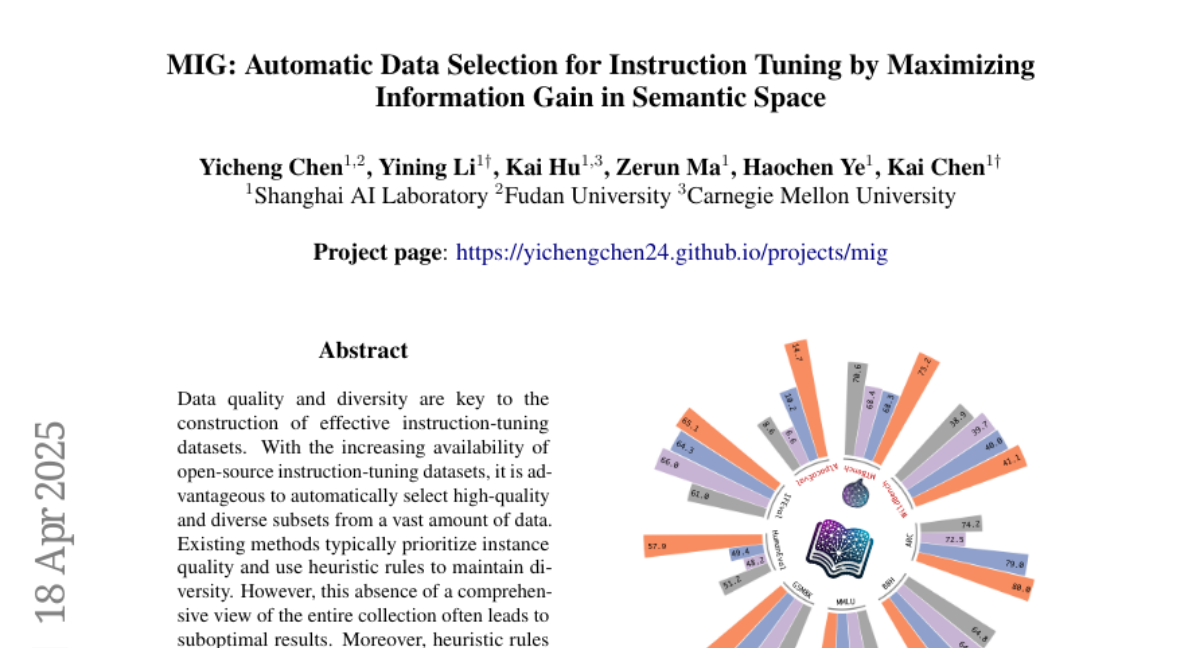

2. MIG: Automatic Data Selection for Instruction Tuning by Maximizing Information Gain in Semantic Space

🔑 Keywords: Data Quality, Diversity, Semantic Space, Information Gain, Instruction-Tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose a unified method for quantifying the information content of instruction-tuning datasets, enhancing data quality and diversity.

🛠️ Research Methods:

– The study models the semantic space using a label graph and introduces an efficient sampling method to maximize Information Gain (MIG) for selecting high-quality data subsets.

💬 Research Conclusions:

– The proposed MIG method consistently outperforms state-of-the-art approaches, achieving significant performance improvements in model fine-tuning with a reduced dataset.

👉 Paper link: https://huggingface.co/papers/2504.13835

3. NodeRAG: Structuring Graph-based RAG with Heterogeneous Nodes

🔑 Keywords: Retrieval-augmented generation, Graph-based RAG, NodeRAG, Heterogeneous graph structures, Question-answering performance

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to enhance retrieval-augmented generation (RAG) by proposing a graph-centric framework called NodeRAG, focusing on the integration of heterogeneous graph structures to improve factually consistent responses in specific domains.

🛠️ Research Methods:

– The framework introduces heterogeneous graph structures to ensure seamless and holistic integration of graph-based methodologies into the RAG workflow, aligning closely with the capabilities of large language models for a cohesive end-to-end process.

💬 Research Conclusions:

– Through extensive experimentation, NodeRAG demonstrates superior performance over methods like GraphRAG and LightRAG in terms of indexing time, query time, storage efficiency, and question-answering on multi-hop benchmarks. The full framework and results can be explored on their GitHub repository.

👉 Paper link: https://huggingface.co/papers/2504.11544

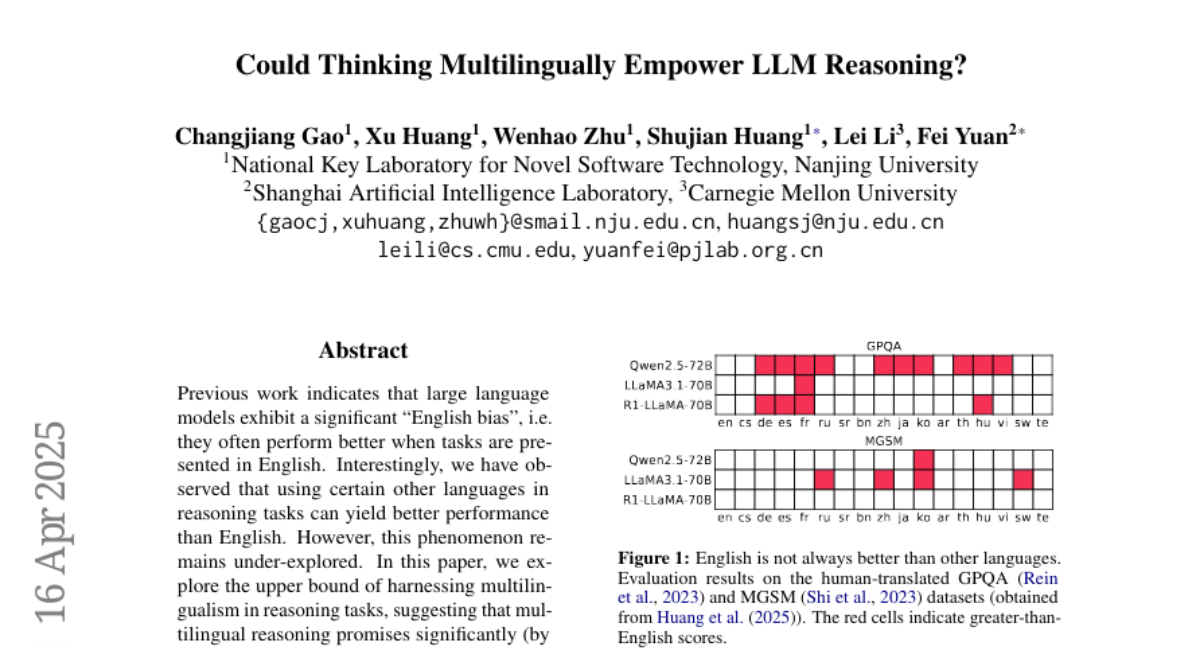

4. Could Thinking Multilingually Empower LLM Reasoning?

🔑 Keywords: Large Language Models, English Bias, Multilingual Reasoning, AI Ethics and Fairness

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore the potential of multilingual reasoning in overcoming the English bias of large language models and achieving better performance on reasoning tasks than English-only approaches.

🛠️ Research Methods:

– Investigation of the performance ceiling of multilingual reasoning tasks, analyzing variations in translation quality and language selection, and evaluating current answer selection methods.

💬 Research Conclusions:

– Multilingual reasoning tasks offer significantly higher and more robust performance upper bounds compared to English-only tasks. However, common answer selection methods are insufficient in reaching this potential, indicating an area for further research and improvement.

👉 Paper link: https://huggingface.co/papers/2504.11833

5. It’s All Connected: A Journey Through Test-Time Memorization, Attentional Bias, Retention, and Online Optimization

🔑 Keywords: attentional bias, associative memory modules, retention regularization, language modeling, Transformers

💡 Category: Natural Language Processing

🌟 Research Objective:

– To design efficient architectural backbones for neural architectures inspired by human attentional bias.

🛠️ Research Methods:

– Reconceptualizing neural architectures like Transformers and Titans as associative memory modules.

– Introduction of alternative attentional bias configurations and a framework called Miras with choices for architectural design.

💬 Research Conclusions:

– Proposed novel sequence models Moneta, Yaad, and Memora surpass existing linear RNNs in tasks such as language modeling and outperform traditional models like Transformers in specific scenarios.

👉 Paper link: https://huggingface.co/papers/2504.13173

6. CLASH: Evaluating Language Models on Judging High-Stakes Dilemmas from Multiple Perspectives

🔑 Keywords: Large Language Models, CLASH, Decision Ambivalence, Psychological Discomfort, Value Shifts

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop a dataset, CLASH, for evaluating Large Language Models (LLMs) in high-stakes dilemmas involving conflicting values.

🛠️ Research Methods:

– Introduction of CLASH dataset with 345 dilemmas and 3,795 perspectives, benchmarked 10 frontier models to study decision-making processes.

💬 Research Conclusions:

– LLMs show less than 50% accuracy in ambivalent decision scenarios; perform better in clear-cut situations.

– Models predict psychological discomfort accurately but struggle with value shifts.

– A significant connection between value preferences and steerability is observed, with third-party perspectives enhancing steerability.

👉 Paper link: https://huggingface.co/papers/2504.10823

7. AerialMegaDepth: Learning Aerial-Ground Reconstruction and View Synthesis

🔑 Keywords: geometric reconstruction, extreme viewpoint variation, co-registered, pseudo-synthetic renderings, domain gap

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to improve geometric reconstruction of images from mixed ground and aerial views by addressing the extreme viewpoint variation issue.

🛠️ Research Methods:

– Developed a scalable framework that combines pseudo-synthetic renderings from 3D city-wide meshes with real, crowd-sourced images to create a hybrid dataset for fine-tuning algorithms.

💬 Research Conclusions:

– Fine-tuning state-of-the-art algorithms with the new dataset significantly improves accuracy in handling large viewpoint changes, raising localization accuracy from under 5% to nearly 56%.

– The dataset also enhances performance on tasks like novel-view synthesis in challenging scenarios, showcasing the practical value of the approach in real-world applications.

👉 Paper link: https://huggingface.co/papers/2504.13157

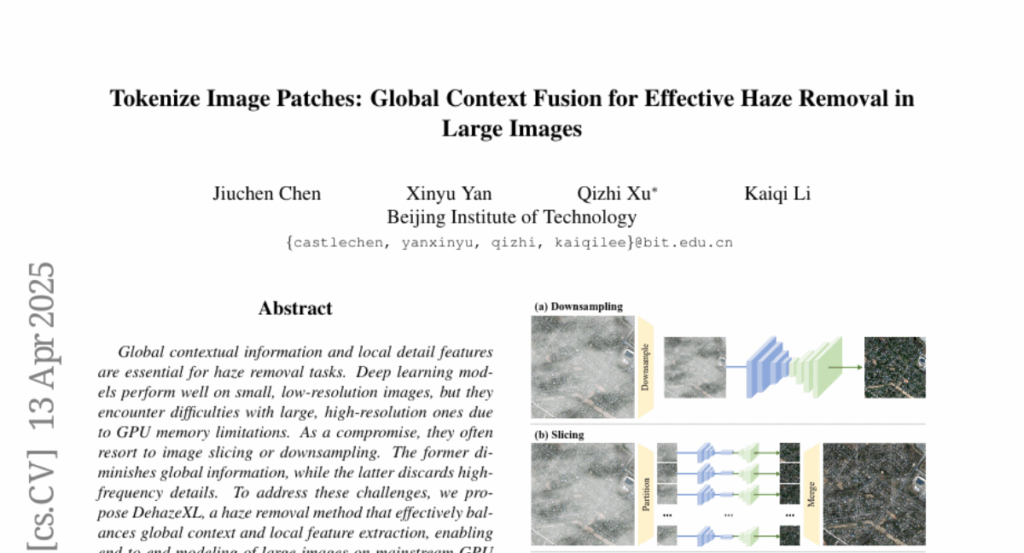

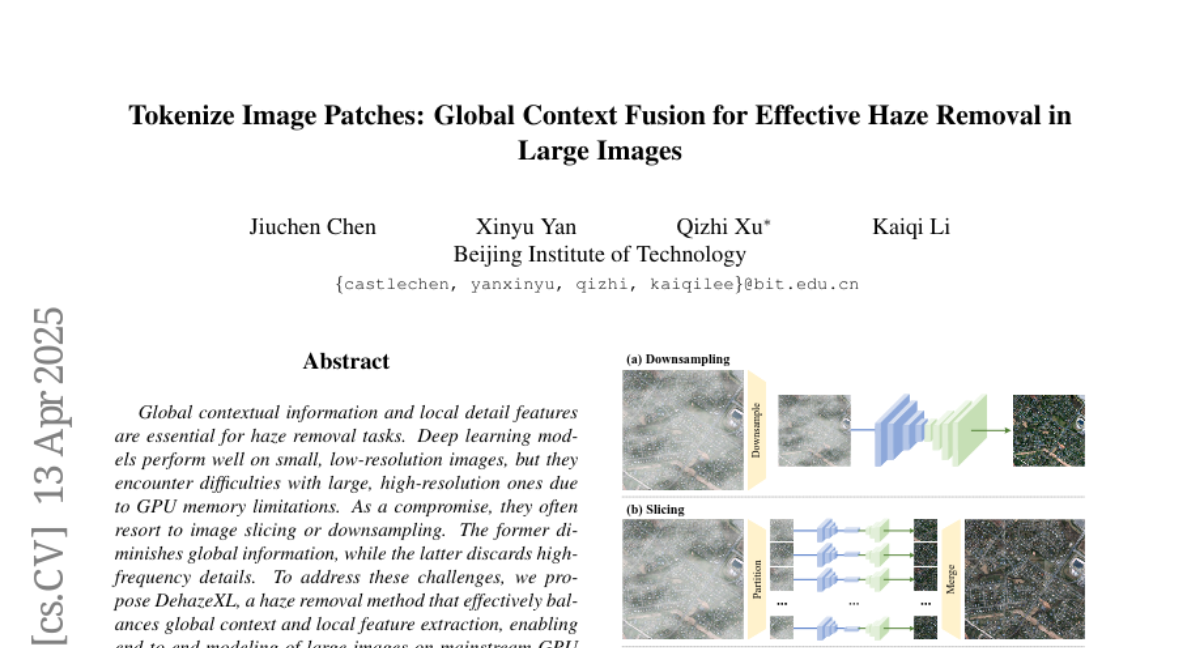

8. Tokenize Image Patches: Global Context Fusion for Effective Haze Removal in Large Images

🔑 Keywords: DehazeXL, haze removal, global context, local feature extraction, ultra-high-resolution dataset

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to improve haze removal for large, high-resolution images while balancing global context and local feature extraction.

🛠️ Research Methods:

– DehazeXL is proposed as a haze removal method enabling end-to-end modeling of large images on mainstream GPUs.

– A visual attribution method is designed to evaluate global context utilization, and an ultra-high-resolution haze removal dataset (8KDehaze) is developed for model training and testing.

💬 Research Conclusions:

– DehazeXL demonstrates state-of-the-art performance, handling images up to 10240×10240 pixels with only 21 GB of GPU memory.

– The model outperforms all evaluated methods, proving the effectiveness of the proposed approach and dataset.

👉 Paper link: https://huggingface.co/papers/2504.09621

9. HiScene: Creating Hierarchical 3D Scenes with Isometric View Generation

🔑 Keywords: Scene-level 3D generation, Hierarchical framework, 2D image generation, 3D object generation, Interactive applications

💡 Category: Generative Models

🌟 Research Objective:

– To develop HiScene, a novel hierarchical framework that bridges the gap between 2D and 3D generation, aiming to create high-fidelity and aesthetically pleasing 3D scenes with compositional identities.

🛠️ Research Methods:

– Using a hierarchical approach to treat scenes as objects and utilizing a video-diffusion-based amodal completion technique for handling occlusions and maintaining spatial coherence through shape prior injection.

💬 Research Conclusions:

– The proposed method of HiScene produces more natural and physically plausible object arrangements suitable for interactive applications, aligning well with user inputs and maintaining compositional structure.

👉 Paper link: https://huggingface.co/papers/2504.13072

10. Thought Manipulation: External Thought Can Be Efficient for Large Reasoning Models

🔑 Keywords: Large Reasoning Models, ThoughtMani, CoT generator, safety alignment

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Address the “overthinking” issue in Large Reasoning Models (LRMs) by developing a method to reduce redundant reasoning steps and computational cost.

🛠️ Research Methods:

– Introduce ThoughtMani, a pipeline leveraging CoTs from smaller models to streamline LRMs’ reasoning process and empirically validate its efficiency on datasets like LiveBench/Code.

💬 Research Conclusions:

– ThoughtMani achieves a reduction in output token count by 30% while maintaining performance, and enhances safety alignment by an average of 10%, making it an effective tool for constructing efficient LRMs.

👉 Paper link: https://huggingface.co/papers/2504.13626

11. Generative AI Act II: Test Time Scaling Drives Cognition Engineering

🔑 Keywords: Large Language Models, generative AI, cognition engineering, test-time scaling, prompt engineering

💡 Category: Foundations of AI

🌟 Research Objective:

– To clarify the conceptual foundations of cognition engineering and highlight its critical development phase in AI’s “Act II”.

🛠️ Research Methods:

– Comprehensive tutorials and optimized implementations are provided to democratize access to cognition engineering and engage AI practitioners.

💬 Research Conclusions:

– The emergence of “Act II” allows models to transform from knowledge-retrieval systems to thought-construction engines, enhancing AI through language-based thoughts and mind-level connections.

👉 Paper link: https://huggingface.co/papers/2504.13828

12. Self-alignment of Large Video Language Models with Refined Regularized Preference Optimization

🔑 Keywords: LVLMs, self-alignment, spatio-temporal understanding, RRPO

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to address limitations in Large Video Language Models (LVLMs), specifically in fine-grained temporal understanding and error-prone video question-answering tasks.

🛠️ Research Methods:

– Introduction of a self-alignment framework with a training set of preferred and non-preferred response pairs.

– Implementation of Refined Regularized Preference Optimization (RRPO) using sub-sequence-level refined rewards and token-wise KL regularization.

💬 Research Conclusions:

– RRPO results in more precise alignment and stable training than Direct Preference Optimization.

– The effectiveness of the proposed approach is validated across various video tasks, including video hallucination and temporal reasoning.

👉 Paper link: https://huggingface.co/papers/2504.12083

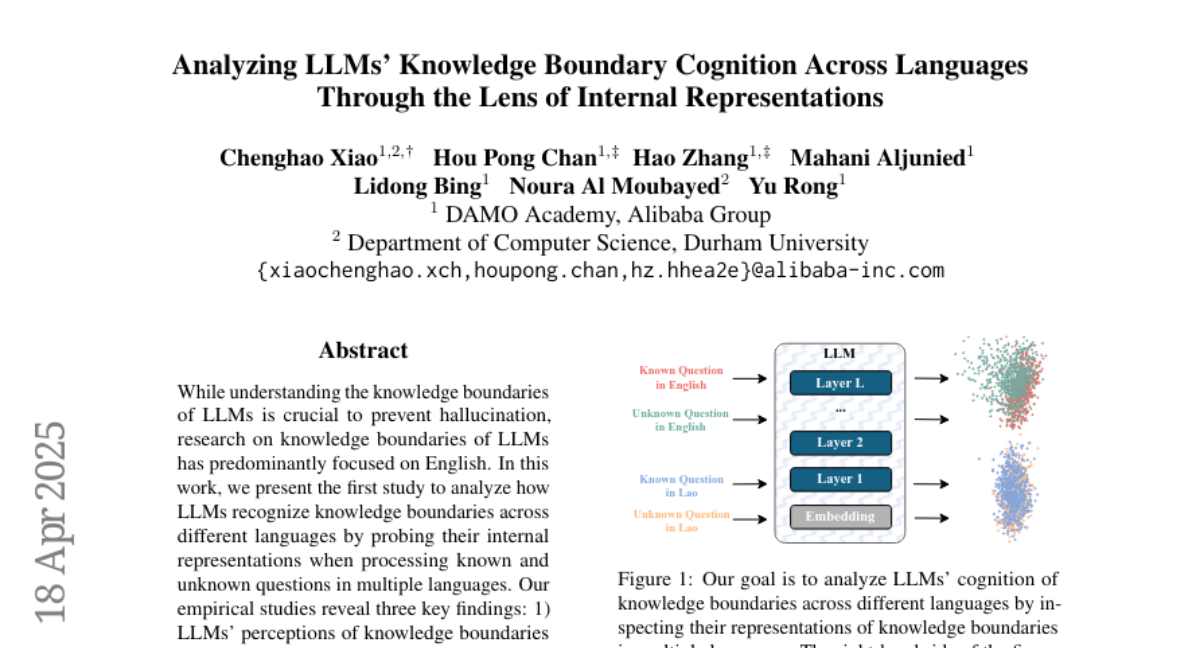

13. Analyzing LLMs’ Knowledge Boundary Cognition Across Languages Through the Lens of Internal Representations

🔑 Keywords: Knowledge Boundaries, LLMs, Training-Free Alignment, Multilingual Evaluation

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to analyze how large language models (LLMs) recognize knowledge boundaries across different languages by examining their internal representations.

🛠️ Research Methods:

– The researchers conducted empirical studies by probing the internal representations of LLMs while processing known and unknown questions across multiple languages. Additionally, they developed a multilingual evaluation suite for cross-lingual knowledge boundary analysis.

💬 Research Conclusions:

– Three primary findings were revealed:

1) LLMs’ perceptions of knowledge boundaries are encoded in the middle to middle-upper layers across languages.

2) A linear structure is observed in language differences, facilitating a training-free alignment method to transfer knowledge boundary perception across languages.

3) Fine-tuning on bilingual question pair translation improves LLMs’ ability to recognize knowledge boundaries across languages.

👉 Paper link: https://huggingface.co/papers/2504.13816

14. Cost-of-Pass: An Economic Framework for Evaluating Language Models

🔑 Keywords: AI systems, economic value, cost-of-pass, lightweight models, reasoning models

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to evaluate the tradeoff between economic value and inference costs of AI systems using a framework grounded in production theory.

🛠️ Research Methods:

– Introduces the concept of “cost-of-pass” and “frontier cost-of-pass” to assess AI models by combining accuracy and inference cost.

💬 Research Conclusions:

– Innovations in lightweight, large, and reasoning models are crucial for cost-efficiency in respective tasks. Complementary model-level innovations drive significant economic progress in AI system deployment.

👉 Paper link: https://huggingface.co/papers/2504.13359

15. Filter2Noise: Interpretable Self-Supervised Single-Image Denoising for Low-Dose CT with Attention-Guided Bilateral Filtering

🔑 Keywords: Low-Dose CT, Self-Supervised, Denoising, Attention-Guided Bilateral Filter

💡 Category: AI in Healthcare

🌟 Research Objective:

– Propose an interpretable self-supervised single-image denoising framework, Filter2Noise (F2N), for low-dose CT scans.

🛠️ Research Methods:

– Introduced an Attention-Guided Bilateral Filter, using a novel downsampling shuffle strategy with a new self-supervised loss function.

💬 Research Conclusions:

– F2N outperforms current methods by improving PSNR by 4.59 dB, enhancing transparency, user control, and parametric efficiency.

👉 Paper link: https://huggingface.co/papers/2504.13519

16. Revisiting Uncertainty Quantification Evaluation in Language Models: Spurious Interactions with Response Length Bias Results

🔑 Keywords: Uncertainty Quantification, Language Models, AUROC, Length Biases, LLM-as-a-judge

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study focuses on improving the safety and reliability of Language Models through Uncertainty Quantification.

🛠️ Research Methods:

– Evaluation of seven correctness functions across various datasets, models, and UQ methods, highlighting biases in current performance metrics.

💬 Research Conclusions:

– The analysis identifies LLM-as-a-judge approaches as among the least biased by length, presenting them as a possible solution to mitigate these biases.

👉 Paper link: https://huggingface.co/papers/2504.13677

17.