AI Native Daily Paper Digest – 20250430

1. Reinforcement Learning for Reasoning in Large Language Models with One Training Example

🔑 Keywords: Reinforcement Learning, Large Language Models, Mathematical Reasoning, 1-shot RLVR, Entropy Loss

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To demonstrate the effectiveness of 1-shot Reinforcement Learning with Verifiable Reward (RLVR) in enhancing the math reasoning capabilities of Large Language Models (LLMs).

🛠️ Research Methods:

– Applied 1-shot RLVR to the Qwen2.5-Math-1.5B base model, using a single example to significantly improve performance on MATH500 and six common mathematical reasoning benchmarks.

– Examined additional models, RL algorithms, and the role of entropy loss in promoting exploration.

💬 Research Conclusions:

– Achieved substantial performance improvements across various models and benchmarks using 1-shot RLVR.

– Identified interesting phenomena such as cross-domain generalization and post-saturation generalization, highlighting the critical role of policy gradient loss and exploration.

– Observed that applying entropy loss alone can significantly boost performance, suggesting new avenues for enhancing RLVR data efficiency.

👉 Paper link: https://huggingface.co/papers/2504.20571

2. UniversalRAG: Retrieval-Augmented Generation over Multiple Corpora with Diverse Modalities and Granularities

🔑 Keywords: Retrieval-Augmented Generation, UniversalRAG, external knowledge, modality-aware routing mechanism, fine-tuned retrieval

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper introduces a new RAG framework, UniversalRAG, designed to retrieve and integrate knowledge from heterogeneous sources across diverse modalities and granularities.

🛠️ Research Methods:

– UniversalRAG employs a modality-aware routing mechanism to dynamically select the most appropriate modality-specific corpus for targeted retrieval. It also organizes each modality into multiple granularity levels for fine-tuned retrieval suited to the query’s complexity.

💬 Research Conclusions:

– The proposed UniversalRAG framework demonstrates superior performance over modality-specific and unified baselines on 8 benchmarks spanning multiple modalities.

👉 Paper link: https://huggingface.co/papers/2504.20734

3. ReasonIR: Training Retrievers for Reasoning Tasks

🔑 Keywords: ReasonIR-8B, retriever, synthetic data generation pipeline, nDCG@10, LLM reranker

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce ReasonIR-8B, the first retriever trained for general reasoning tasks.

🛠️ Research Methods:

– Utilized a synthetic data generation pipeline to create challenging and relevant queries paired with hard negatives.

– Combined synthetic data with public datasets for training.

💬 Research Conclusions:

– Achieved state-of-the-art performance on BRIGHT benchmark with notable gains in nDCG@10 scores.

– Outperformed other retrievers and search engines in RAG tasks, improving MMLU and GPQA performance significantly.

– Demonstrated enhanced test-time compute efficiency and scalability with LLM reranker. Open-sourced code, data, and model for future LLMs.

👉 Paper link: https://huggingface.co/papers/2504.20595

4. The Leaderboard Illusion

🔑 Keywords: AI Systems, Benchmark Distortion, Chatbot Arena, Performance Bias, Data Access Asymmetry

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to identify and address systematic issues causing biased performance scores in AI benchmarks, specifically in the Chatbot Arena leaderboard.

🛠️ Research Methods:

– The researchers analyzed the practices around private testing and selective disclosure, and evaluated data access disparities among proprietary and open-weight models.

💬 Research Conclusions:

– The study concludes that current practices lead to biased performance outcomes and data asymmetries, benefiting specific providers disproportionately. Recommendations for fairer and transparent evaluation frameworks are proposed.

👉 Paper link: https://huggingface.co/papers/2504.20879

5. Toward Evaluative Thinking: Meta Policy Optimization with Evolving Reward Models

🔑 Keywords: Meta Policy Optimization, reward hacking, prompt engineering, meta-learning approach, adaptive reward signal

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Address the limitations of reward hacking and reliance on extensive prompt engineering in Large Language Models using a novel framework.

🛠️ Research Methods:

– Introduce and utilize Meta Policy Optimization with a meta-reward model that refines rewards dynamically to maintain high alignment during training.

💬 Research Conclusions:

– Achieves comparable or superior performance to models with hand-crafted rewards and maintains effectiveness across various tasks without needing specialized reward designs.

👉 Paper link: https://huggingface.co/papers/2504.20157

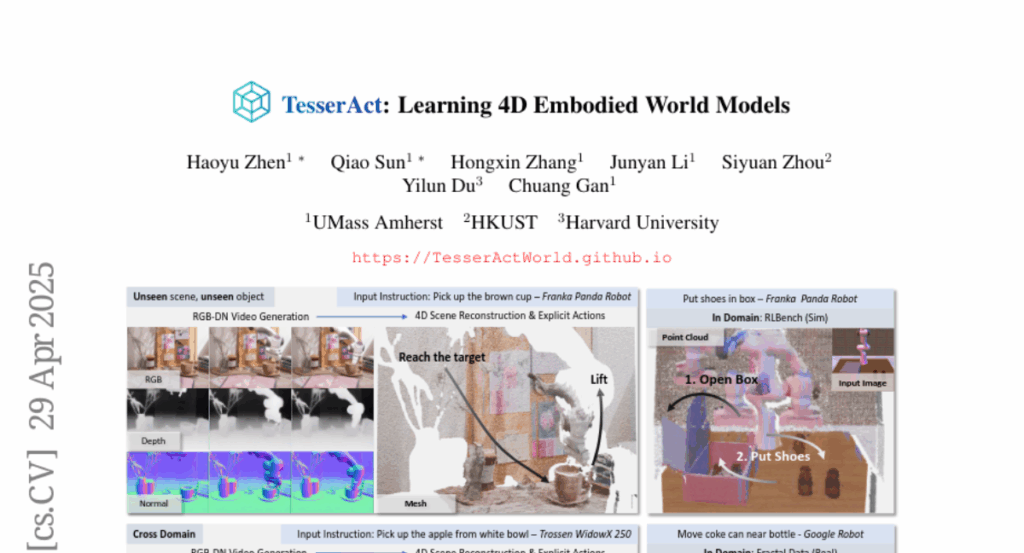

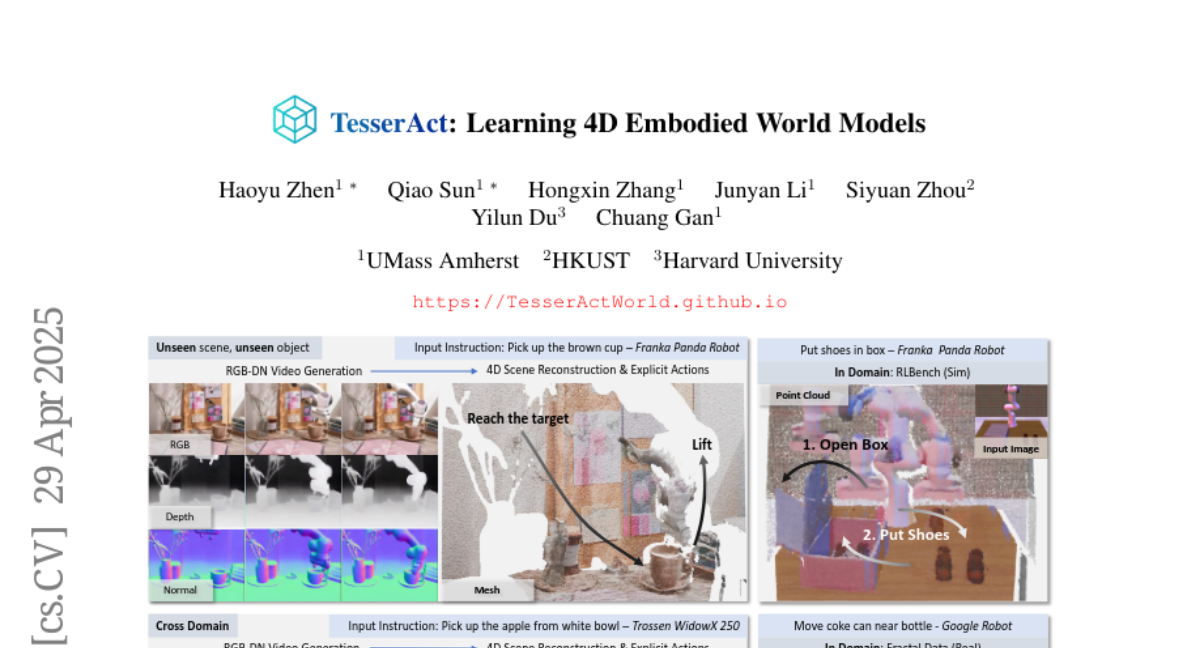

6. TesserAct: Learning 4D Embodied World Models

🔑 Keywords: 4D world model, Embodied Agent, Novel View Synthesis, Temporal Consistency, Video Generation Model

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop a 4D embodied world model capable of predicting the dynamic evolution of 3D scenes in response to an embodied agent’s actions.

🛠️ Research Methods:

– Extend existing robotic manipulation datasets with depth and normal data using off-the-shelf models and fine-tune a video generation model to predict RGB-DN for each frame.

💬 Research Conclusions:

– The proposed model ensures both temporal and spatial coherence in 4D scene predictions and enhances policy learning significantly compared to prior video-based models.

👉 Paper link: https://huggingface.co/papers/2504.20995

7. Certified Mitigation of Worst-Case LLM Copyright Infringement

🔑 Keywords: copyright takedown, BloomScrub, inference-time approach, Bloom filters, adaptive abstention

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to address concerns about unintentional copyright infringement by large language models (LLMs) during deployment by developing methods to prevent models from generating content similar to copyrighted materials.

🛠️ Research Methods:

– The study introduces BloomScrub, an inference-time approach that interleaves quote detection with rewriting techniques, utilizing Bloom filters for efficient and scalable copyright screening.

💬 Research Conclusions:

– BloomScrub effectively reduces infringement risks while preserving model utility and allows for adaptive enforcement levels through abstention, demonstrating that lightweight inference-time methods can be remarkably effective for copyright prevention.

👉 Paper link: https://huggingface.co/papers/2504.16046

8. ISDrama: Immersive Spatial Drama Generation through Multimodal Prompting

🔑 Keywords: Multimodal Inputs, Binaural Speech, Dramatic Prosody, AR, VR

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary aim is to generate multimodal immersive spatial drama with continuous multi-speaker binaural speech and dramatic prosody using multimodal prompts.

🛠️ Research Methods:

– MRSDrama dataset is created with binaural drama audios, scripts, videos, poses, and textual prompts, and ISDrama model with Multimodal Pose Encoder and Immersive Drama Transformer is proposed.

– Introduces classifiers-free guidance strategy for coherent drama generation.

💬 Research Conclusions:

– ISDrama model significantly outperforms baseline models in both objective and subjective metrics in generating high-quality immersive spatial drama.

👉 Paper link: https://huggingface.co/papers/2504.20630

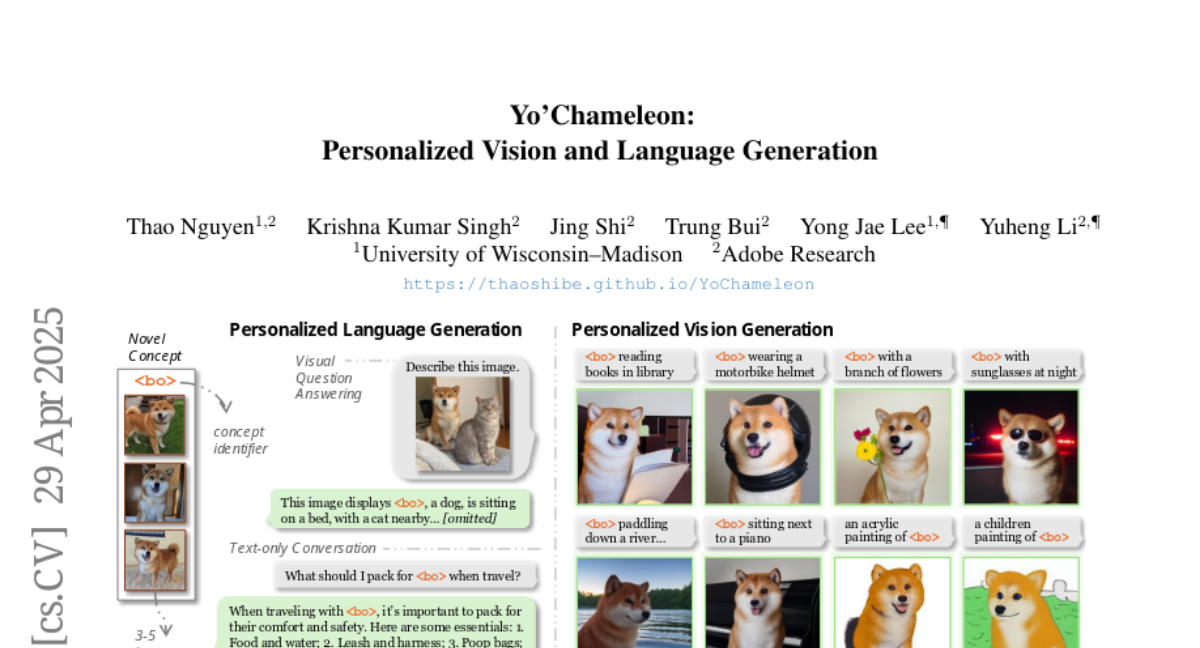

9. YoChameleon: Personalized Vision and Language Generation

🔑 Keywords: Large Multimodal Models, personalization, image generation, soft-prompt tuning, self-prompting optimization mechanism

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Yo’Chameleon for personalizing large multimodal models to incorporate specific user concepts, enhancing their image generation capabilities.

🛠️ Research Methods:

– Utilizes soft-prompt tuning to embed subject-specific information and self-prompting optimization mechanism to balance performance across modalities.

💬 Research Conclusions:

– Yo’Chameleon successfully personalizes multimodal models, allowing them to answer questions and recreate detailed images of specific subjects in new contexts with improved quality.

👉 Paper link: https://huggingface.co/papers/2504.20998

10. RAGEN: Understanding Self-Evolution in LLM Agents via Multi-Turn Reinforcement Learning

🔑 Keywords: StarPO, RAGEN, Reinforcement Learning, Echo Trap, reasoning-aware reward signals

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Explore multi-turn agent Reinforcement Learning (RL) training, often challenged by long-horizon decision making and stochastic feedback.

🛠️ Research Methods:

– Introduce StarPO (State-Thinking-Actions-Reward Policy Optimization) as a framework and RAGEN as a modular system for training and evaluating Large Language Model (LLM) agents.

💬 Research Conclusions:

– Identified an Echo Trap in agent RL where reward variance cliffs and gradient spikes affect performance, addressed by using a stabilized variant, StarPO-S.

– Found that RL rollouts benefit from diverse initial states, medium interaction granularity, and frequent sampling.

– Concluded that without reasoning-aware reward signals, agent reasoning does not easily emerge, leading to shallow strategies or hallucinated thoughts.

👉 Paper link: https://huggingface.co/papers/2504.20073

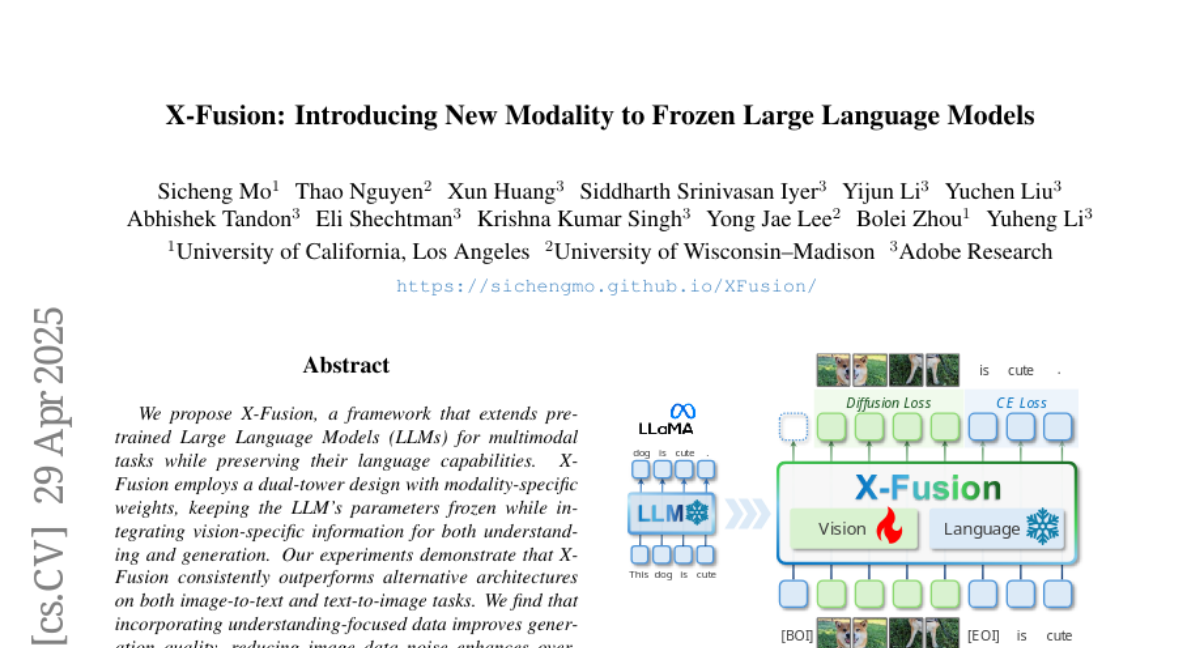

11. X-Fusion: Introducing New Modality to Frozen Large Language Models

🔑 Keywords: X-Fusion, Large Language Models, multimodal tasks, dual-tower design, feature alignment

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To extend pretrained LLMs for multimodal tasks while preserving language capabilities.

🛠️ Research Methods:

– Employed a dual-tower design with modality-specific weights, keeping LLM parameters frozen and integrating vision-specific information.

💬 Research Conclusions:

– X-Fusion outperforms alternative architectures in image-to-text and text-to-image tasks, with findings highlighting the importance of understanding-focused data and feature alignment.

👉 Paper link: https://huggingface.co/papers/2504.20996

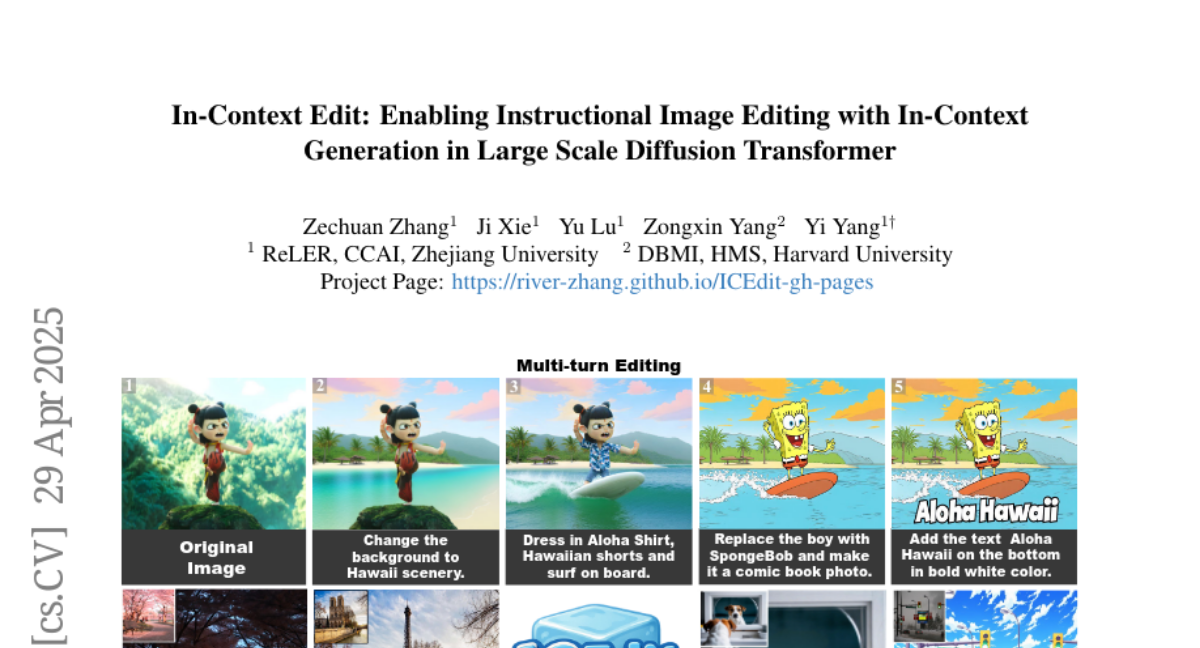

12. In-Context Edit: Enabling Instructional Image Editing with In-Context Generation in Large Scale Diffusion Transformer

🔑 Keywords: Instruction-based image editing, precision-efficiency tradeoff, Diffusion Transformer (DiT), LoRA-MoE hybrid, vision-language models (VLMs)

💡 Category: Generative Models

🌟 Research Objective:

– To overcome the precision-efficiency tradeoff in instruction-based image editing using an advanced method that requires minimal resources.

🛠️ Research Methods:

– Developed an in-context editing framework for zero-shot instruction compliance.

– Introduced a LoRA-MoE hybrid strategy for flexible and efficient tuning without extensive retraining.

– Applied an early filter inference-time scaling method using VLMs to enhance edit quality.

💬 Research Conclusions:

– The proposed method surpasses state-of-the-art methods with only 0.5% of the training data and 1% of trainable parameters compared to conventional techniques, establishing a paradigm for high-precision, efficient instruction-guided editing.

👉 Paper link: https://huggingface.co/papers/2504.20690

13. Learning Explainable Dense Reward Shapes via Bayesian Optimization

🔑 Keywords: RLHF, scalar rewards, reward shaping, SHAP, LIME

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To optimize token-level credit assignment in reinforcement learning from human feedback (RLHF) for large language model alignment.

🛠️ Research Methods:

– Proposed a reward shaping function using explainability methods (SHAP and LIME) to estimate per-token rewards.

– Utilized a bilevel optimization framework with Bayesian Optimization and policy training to handle noise in token reward estimates.

💬 Research Conclusions:

– Achieving better token-level reward attribution improves performance on downstream tasks and accelerates finding an optimal policy during training.

– Feature additive attribution functions maintain the optimal policy as the original reward.

👉 Paper link: https://huggingface.co/papers/2504.16272

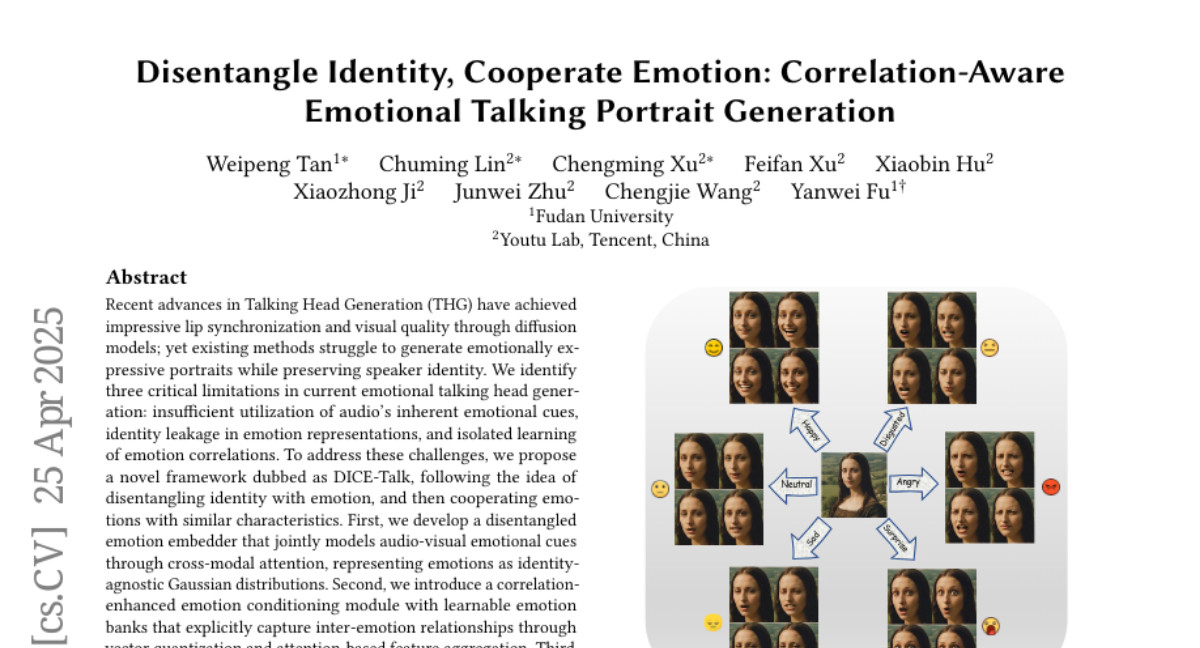

14. Disentangle Identity, Cooperate Emotion: Correlation-Aware Emotional Talking Portrait Generation

🔑 Keywords: Talking Head Generation, Emotion, Identity, Diffusion Models, Correlation

💡 Category: Generative Models

🌟 Research Objective:

– Address limitations in emotional Talking Head Generation by preserving speaker identity and utilizing audio’s emotional cues effectively.

🛠️ Research Methods:

– Proposed a DICE-Talk framework that disentangles identity from emotion, uses a cross-modal attention-based embedder, and introduces a correlation-enhanced emotion conditioning module.

💬 Research Conclusions:

– DICE-Talk significantly improves emotion accuracy and maintains lip-sync performance, producing identity-preserving, emotionally rich portraits.

👉 Paper link: https://huggingface.co/papers/2504.18087

15. LawFlow : Collecting and Simulating Lawyers’ Thought Processes

🔑 Keywords: Legal AI, LawFlow, LLM, AI Native, Legal Workflows

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the limitations of existing AI models in understanding end-to-end legal decision-making by introducing LawFlow, a dataset capturing real-world legal workflows.

🛠️ Research Methods:

– Compared human and LLM-generated workflows using the LawFlow dataset to analyze differences in structure, reasoning flexibility, and execution.

💬 Research Conclusions:

– Human workflows showed greater adaptability and modularity, while LLM workflows were more sequential. Legal professionals prefer AI in supportive roles rather than for complex task execution, offering opportunities to develop reasoning-aware legal AI systems.

👉 Paper link: https://huggingface.co/papers/2504.18942

16. TreeHop: Generate and Filter Next Query Embeddings Efficiently for Multi-hop Question Answering

🔑 Keywords: Retrieval-augmented generation, multi-hop question answering, TreeHop, embedding-level framework, query latency

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the limitations of existing retrieval-augmented generation systems in multi-hop question answering by eliminating the need for iterative LLM-based query rewriting.

🛠️ Research Methods:

– Introduction of TreeHop, an embedding-level framework that fuses semantic information from queries and documents to dynamically update query embeddings without LLMs, streamlining to a “Retrieve-Embed-Retrieve” loop.

💬 Research Conclusions:

– TreeHop significantly reduces computational overhead and query latency by up to 99% compared to concurrent approaches while maintaining competitive performance across open-domain MHQA datasets with a fraction of model parameters.

👉 Paper link: https://huggingface.co/papers/2504.20114

17. A Review of 3D Object Detection with Vision-Language Models

🔑 Keywords: 3D Object Detection, Vision-Language Models, VLMs, Zero-Shot Generalization, Open-Vocabulary Detection

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper provides the first systematic analysis of 3D object detection using Vision-Language Models (VLMs).

🛠️ Research Methods:

– Over 100 research papers were examined to compare traditional approaches with modern frameworks, using key architectures and pretraining strategies to align textual and 3D features.

💬 Research Conclusions:

– Identifies current challenges, such as the limited availability of 3D-language datasets and high computational demands, while proposing future research directions to advance this field.

👉 Paper link: https://huggingface.co/papers/2504.18738

18. CaRL: Learning Scalable Planning Policies with Simple Rewards

🔑 Keywords: Reinforcement Learning, Privileged Planning, Autonomous Driving, PPO, Distributed Data Parallelism

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Investigate reinforcement learning for privileged planning in autonomous driving to overcome limitations of rule-based approaches.

🛠️ Research Methods:

– Proposed a new reward design focusing on a single intuitive reward term: route completion, with infractions penalized by episode termination or reducing route completion multiplicatively.

💬 Research Conclusions:

– The model scales well with higher mini-batch sizes, achieving high performance on CARLA and nuPlan benchmarks, outperforming other RL methods with complex rewards, and it operates significantly faster.

👉 Paper link: https://huggingface.co/papers/2504.17838

19. Chain-of-Defensive-Thought: Structured Reasoning Elicits Robustness in Large Language Models against Reference Corruption

🔑 Keywords: Chain-of-thought prompting, Large language models, Reasoning abilities, Robustness, Chain-of-defensive-thought

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to enhance the robustness of large language models in tasks that are not inherently focused on reasoning by leveraging their improved reasoning abilities.

🛠️ Research Methods:

– The research employs a method called chain-of-defensive-thought, which includes providing structured and defensive reasoning examples to improve model robustness against reference corruption.

💬 Research Conclusions:

– Significant improvements in robustness were observed, notably using GPT-4o in the Natural Questions task, where accuracy was maintained at 50% against prompt injection attacks, compared to a drastic drop using standard prompting.

👉 Paper link: https://huggingface.co/papers/2504.20769