AI Native Daily Paper Digest – 20250501

1. Sadeed: Advancing Arabic Diacritization Through Small Language Model

🔑 Keywords: Arabic text diacritization, morphological richness, fine-tuned, benchmarking, SadeedDiac-25

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce Sadeed, a novel approach to Arabic text diacritization using a fine-tuned language model.

🛠️ Research Methods:

– Sadeed is based on a decoder-only language model adapted from Kuwain 1.5B Hennara, fine-tuned on high-quality diacritized datasets.

💬 Research Conclusions:

– Sadeed achieves competitive results with modest resources, outperforms traditional models, and highlights limitations of current benchmarking practices. A new benchmark, SadeedDiac-25, is also introduced to enable comprehensive evaluation.

👉 Paper link: https://huggingface.co/papers/2504.21635

2. WebThinker: Empowering Large Reasoning Models with Deep Research Capability

🔑 Keywords: Large reasoning models, WebThinker, Autonomous Think-Search-and-Draft, RL-based training strategy

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The paper proposes WebThinker, a deep research agent that enhances the reasoning capabilities of Large reasoning models by enabling them to autonomously search the web and draft research reports.

🛠️ Research Methods:

– Integration of a Deep Web Explorer module that allows for dynamic information extraction from the web, and an RL-based training strategy using iterative online Direct Preference Optimization for improved research tool utilization.

💬 Research Conclusions:

– WebThinker significantly outperforms existing methods and strong proprietary systems on complex reasoning benchmarks and scientific report generation tasks, improving LRM reliability and applicability.

👉 Paper link: https://huggingface.co/papers/2504.21776

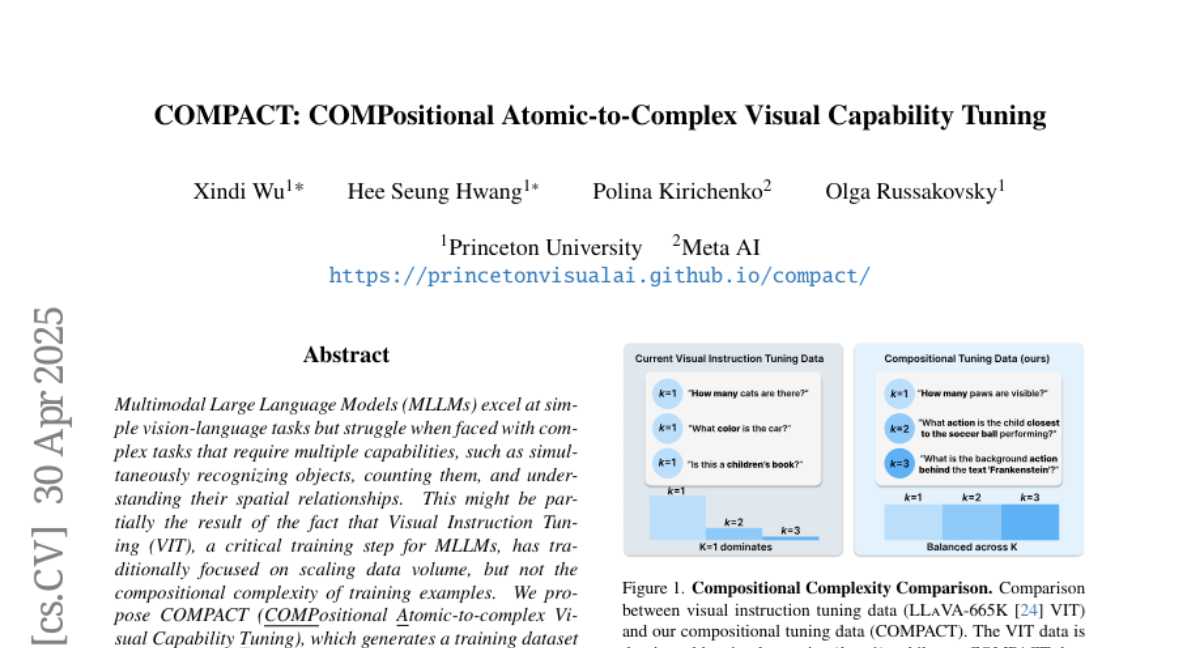

3. COMPACT: COMPositional Atomic-to-Complex Visual Capability Tuning

🔑 Keywords: Multimodal Large Language Models, Visual Instruction Tuning, COMPACT, compositional complexity, atomic capabilities

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary aim is to enhance the performance of Multimodal Large Language Models (MLLMs) in complex vision-language tasks by focusing on the compositional complexity of training examples.

🛠️ Research Methods:

– The proposed method is COMPACT, which generates a training dataset that explicitly controls the compositional complexity, enabling MLLMs to learn complex abilities from combinations of atomic capabilities efficiently.

💬 Research Conclusions:

– COMPACT matches the performance of LLaVA-665k Visual Instruction Tuning (VIT) using less than 10% of its data budget and significantly outperforms it on tasks requiring multiple atomic capabilities, illustrating an improvement of 83.3% on MMStar and 94.0% on MM-Vet.

👉 Paper link: https://huggingface.co/papers/2504.21850

4. Phi-4-Mini-Reasoning: Exploring the Limits of Small Reasoning Language Models in Math

🔑 Keywords: Chain-of-Thought, Large Language Models, Small Language Models, Reinforcement Learning, distillation

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance reasoning capabilities in Small Language Models by employing a systematic training approach using distillation from LLM-generated synthetic data.

🛠️ Research Methods:

– A four-step training recipe: large-scale mid-training on diverse distilled long-CoT data, supervised fine-tuning on high-quality long-CoT data, Rollout DPO using a curated preference dataset, and Reinforcement Learning with Verifiable Reward.

💬 Research Conclusions:

– The Phi-4-Mini-Reasoning model demonstrates superior math reasoning abilities compared to larger models, proving that high-quality training recipes can substantially boost reasoning capabilities in resource-constrained small models.

👉 Paper link: https://huggingface.co/papers/2504.21233

5. Beyond the Last Answer: Your Reasoning Trace Uncovers More than You Think

🔑 Keywords: Large Language Models, step-by-step reasoning, subthoughts, correctness, model’s confidence

💡 Category: Natural Language Processing

🌟 Research Objective:

– Challenge the reliance on final answers in Large Language Models and investigate if alternative reasoning paths can yield different results.

🛠️ Research Methods:

– Analyze intermediate reasoning steps termed subthoughts, segment reasoning trace using linguistic cues, and prompt models to generate continuations from these subthoughts.

💬 Research Conclusions:

– Aggregating potential answers from subthoughts by selecting the most frequent one improves accuracy, with experiments showing up to 13% and 10% accuracy gains on challenging mathematical datasets.

👉 Paper link: https://huggingface.co/papers/2504.20708

6. Softpick: No Attention Sink, No Massive Activations with Rectified Softmax

🔑 Keywords: softpick, transformer attention mechanisms, performance parity, quantization, sparsity optimization

💡 Category: Machine Learning

🌟 Research Objective:

– The research introduces “softpick” as a rectified alternative to softmax in transformer attention mechanisms to eliminate attention sink and massive activations.

🛠️ Research Methods:

– Experiments were conducted with 340M parameter models to compare softpick and softmax performance on standard benchmarks.

💬 Research Conclusions:

– Softpick maintains performance parity with softmax while achieving a 0% sink rate, lower kurtosis, and increased sparsity. Softpick shows potential advantages in quantization, low-precision training, and interpretability.

👉 Paper link: https://huggingface.co/papers/2504.20966

7. Phi-4-reasoning Technical Report

🔑 Keywords: Phi-4-reasoning, supervised fine-tuning, reasoning chains, reinforcement learning, general-purpose benchmarks

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Introduce and evaluate Phi-4-reasoning, a model designed for complex reasoning tasks.

🛠️ Research Methods:

– Utilization of supervised fine-tuning and reinforcement learning to train Phi-4-reasoning on diverse and high-complexity prompts.

💬 Research Conclusions:

– Phi-4-reasoning and its enhanced variants outperform larger models in reasoning tasks and show notable improvements transferable to general-purpose benchmarks, highlighting the effectiveness of careful data curation and reinforcement learning in reasoning models.

👉 Paper link: https://huggingface.co/papers/2504.21318

8. Taming the Titans: A Survey of Efficient LLM Inference Serving

🔑 Keywords: Large Language Models, Generative AI, attention mechanism, model placement, GPU cluster deployment

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to survey recent advancements to address the challenges of memory overhead and computational demands in Large Language Models (LLM) inference services.

🛠️ Research Methods:

– The study covers instance-level methods like model placement and request scheduling, and cluster-level techniques such as GPU cluster deployment and multi-instance load balancing.

💬 Research Conclusions:

– The paper highlights niche areas and proposes potential research directions for advancing LLM inference serving.

👉 Paper link: https://huggingface.co/papers/2504.19720

9. Llama-3.1-FoundationAI-SecurityLLM-Base-8B Technical Report

🔑 Keywords: LLMs, Cybersecurity, Llama 3.1, Cybersecurity Corpus, AI-driven Tools

💡 Category: Natural Language Processing

🌟 Research Objective:

– Develop a cybersecurity-focused large language model named Foundation-Sec-8B to address the adoption limitations of LLMs in the cybersecurity domain.

🛠️ Research Methods:

– Utilized the Llama 3.1 architecture with continued pretraining on a specialized cybersecurity corpus to enhance model performance on cybersecurity-specific tasks.

💬 Research Conclusions:

– Foundation-Sec-8B performs comparably to models like Llama 3.1-70B and GPT-4o-mini on certain cybersecurity-specific benchmarks.

– The release of this model aims to promote the adoption of AI-driven tools in cybersecurity applications.

👉 Paper link: https://huggingface.co/papers/2504.21039

10. RoboVerse: Towards a Unified Platform, Dataset and Benchmark for Scalable and Generalizable Robot Learning

🔑 Keywords: Robotics, Simulation Platform, Synthetic Dataset, Unified Benchmarks, Imitation Learning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The objective is to address challenges in robotics data scaling and evaluation benchmarks using a comprehensive framework called RoboVerse.

🛠️ Research Methods:

– RoboVerse comprises a simulation platform, a synthetic dataset with high-fidelity physics, and unified benchmarks for imitation and reinforcement learning, using MetaSim to ensure interoperability.

💬 Research Conclusions:

– Experiments show that RoboVerse improves performance in imitation learning, reinforcement learning, world model learning, and sim-to-real transfer, establishing it as a reliable solution for advancing robot learning.

👉 Paper link: https://huggingface.co/papers/2504.18904

11. Generative AI for Character Animation: A Comprehensive Survey of Techniques, Applications, and Future Directions

🔑 Keywords: Generative AI, Animation, Face Animation, Character Animation, Diffusion Models

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to provide a comprehensive review of generative AI applications in character animation, including facial animation and gesture modeling.

🛠️ Research Methods:

– The research surveys recent advancements in various areas such as face animation, expression rendering, and motion synthesis, and provides an integrative view by examining state-of-the-art techniques and emerging trends.

💬 Research Conclusions:

– It offers insights into key research, practical applications, and challenges, providing a roadmap for future research in AI-driven character animation. This survey serves as a resource for newcomers and existing researchers looking to deepen their understanding of generative AI in animation.

👉 Paper link: https://huggingface.co/papers/2504.19056

12. ReVision: High-Quality, Low-Cost Video Generation with Explicit 3D Physics Modeling for Complex Motion and Interaction

🔑 Keywords: video generation, 3D physical knowledge, video diffusion model, motion-consistent videos, motion fidelity

💡 Category: Generative Models

🌟 Research Objective:

– To enhance video generation with complex motions and interactions using a new framework, ReVision, that incorporates 3D physical knowledge.

🛠️ Research Methods:

– Utilization of a video diffusion model to generate initial coarse videos, extracting 2D/3D features for a 3D object-centric representation, and employing a parameterized physical prior model to refine the motion sequence for greater accuracy and realism.

💬 Research Conclusions:

– ReVision significantly improves motion fidelity and coherence in video generation, outperforming a larger state-of-the-art model with only 1.5B parameters by integrating 3D physical knowledge, making it highly effective for generating realistic and controllable complex motions.

👉 Paper link: https://huggingface.co/papers/2504.21855

13. Selecting Optimal Candidate Profiles in Adversarial Environments Using Conjoint Analysis and Machine Learning

🔑 Keywords: Conjoint analysis, Optimal candidate profiles, Adversarial dynamics, Political analysis

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To identify optimal candidate profiles in political conjoint experiments by incorporating adversarial dynamics.

🛠️ Research Methods:

– Development of an optimal stochastic intervention to address the challenge of identifying the best profile due to numerous feature combinations.

– Application of the derived methodology to a conjoint experiment on US presidential vote choice.

💬 Research Conclusions:

– Adversarial dynamics yield outcomes more aligned with historical electoral results, offering unique insights compared to non-adversarial approaches.

👉 Paper link: https://huggingface.co/papers/2504.19043

14. UniBiomed: A Universal Foundation Model for Grounded Biomedical Image Interpretation

🔑 Keywords: Multi-modal interpretation, UniBiomed, Multi-modal Large Language Model, Segment Anything Model, Biomedical AI

💡 Category: AI in Healthcare

🌟 Research Objective:

– Introduce UniBiomed, the first universal foundation model for grounded biomedical image interpretation, unifying clinical text generation and object segmentation.

🛠️ Research Methods:

– Integration of Multi-modal Large Language Model (MLLM) and Segment Anything Model (SAM), coupled with a large-scale dataset of over 27 million triplets of images, annotations, and text descriptions across ten imaging modalities.

💬 Research Conclusions:

– UniBiomed achieves state-of-the-art performance in various biomedical tasks, providing automated, end-to-end grounded interpretation. It represents a novel paradigm shift in clinical workflows, significantly enhancing diagnostic efficiency and accuracy.

👉 Paper link: https://huggingface.co/papers/2504.21336