AI Native Daily Paper Digest – 20250514

1. MiniMax-Speech: Intrinsic Zero-Shot Text-to-Speech with a Learnable Speaker Encoder

🔑 Keywords: autoregressive Transformer, Text-to-Speech (TTS), learnable speaker encoder, zero-shot, Flow-VAE

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to develop MiniMax-Speech, a TTS model that achieves high-quality, expressive speech synthesis with advanced voice cloning capabilities.

🛠️ Research Methods:

– The model uses an autoregressive Transformer with a learnable speaker encoder that extracts timbre features from reference audio without requiring its transcription. A Flow-VAE is proposed to improve audio quality.

💬 Research Conclusions:

– MiniMax-Speech achieves state-of-the-art results on voice cloning metrics and excels on the TTS Arena leaderboard. It supports 32 languages, and its robust speaker encoder enables a range of downstream applications.

👉 Paper link: https://huggingface.co/papers/2505.07916

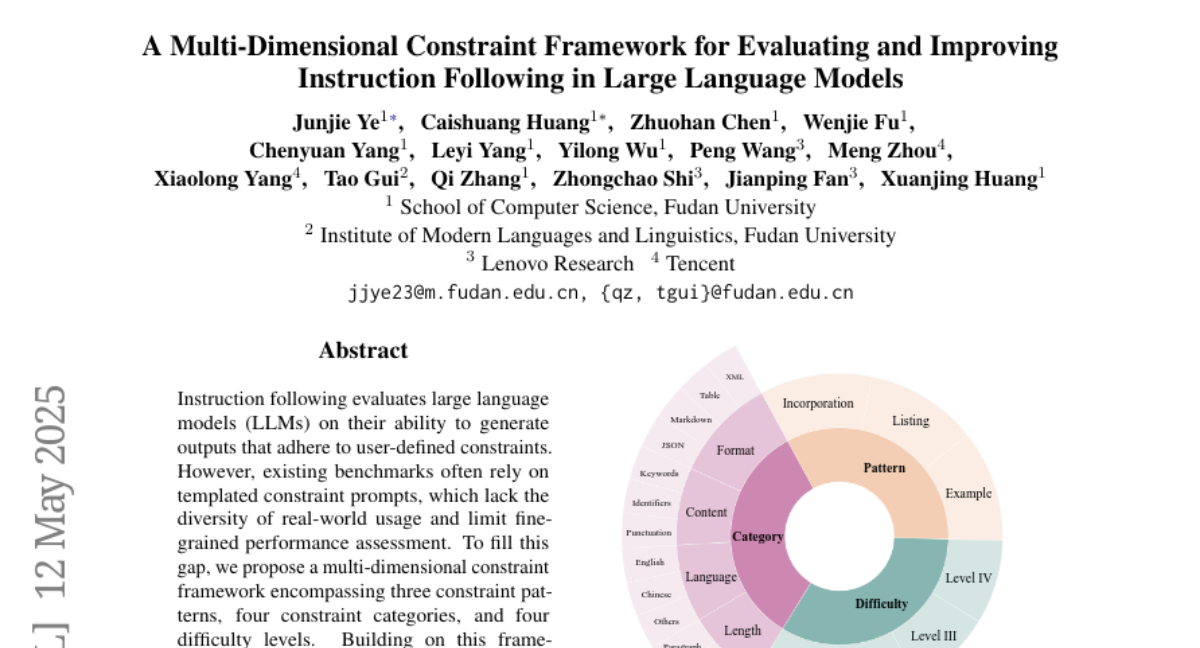

2. A Multi-Dimensional Constraint Framework for Evaluating and Improving Instruction Following in Large Language Models

🔑 Keywords: Instruction Following, Constraint Framework, Large Language Models, Reinforcement Learning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the limitations of existing benchmarks for LLMs in instruction following by introducing a multi-dimensional constraint framework that offers diverse and fine-grained performance assessment.

🛠️ Research Methods:

– Develops an automated instruction-generation pipeline comprising constraint expansion, conflict detection, and instruction rewriting, and evaluates 19 LLMs across seven model families.

💬 Research Conclusions:

– The proposed approach yields significant improvements in instruction following and reveals substantial variation in LLM performance across constraint levels. Analysis attributes the gains primarily to changes in attention-module parameters, which improve constraint recognition and adherence.

👉 Paper link: https://huggingface.co/papers/2505.07591

3. Fast Text-to-Audio Generation with Adversarial Post-Training

🔑 Keywords: ARC post-training, diffusion/flow models, contrastive discriminator objective, prompt adherence, Stable Audio Open

💡 Category: Generative Models

🌟 Research Objective:

– To improve the inference speed of text-to-audio systems using Adversarial Relativistic-Contrastive (ARC) post-training without relying on distillation.

🛠️ Research Methods:

– Introduced the first adversarial acceleration algorithm for diffusion/flow models, combining a relativistic adversarial formulation with a novel contrastive discriminator objective.

💬 Research Conclusions:

– Achieved significant speedups while generating high-quality stereo audio, yielding what the authors report as the fastest text-to-audio model on both high-performance and mobile edge devices.

👉 Paper link: https://huggingface.co/papers/2505.08175
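
To make the term "relativistic adversarial formulation" concrete, here is a minimal sketch of the generic relativistic pairing loss from the GAN literature. It is not the paper's exact ARC objective: the contrastive discriminator term is omitted, and the function names and scalar score inputs are assumptions for illustration.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def relativistic_losses(real_scores, fake_scores):
    """Return (discriminator_loss, generator_loss) over paired scores.

    Each loss depends only on the difference between the score of a real
    sample and the score of its paired generated sample, not on either
    score alone. This is the generic relativistic pairing form (assumed).
    """
    pairs = list(zip(real_scores, fake_scores))
    # Discriminator wants real to score higher than fake.
    d_loss = sum(softplus(-(r - f)) for r, f in pairs) / len(pairs)
    # Generator wants fake to score higher than real.
    g_loss = sum(softplus(-(f - r)) for r, f in pairs) / len(pairs)
    return d_loss, g_loss


d_loss, g_loss = relativistic_losses([2.0, 1.5], [-1.0, 0.5])
print(f"discriminator loss: {d_loss:.4f}, generator loss: {g_loss:.4f}")
```

When the discriminator scores real and generated samples equally, both losses equal log 2, which is the point where neither side has an advantage.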

4. Bring Reason to Vision: Understanding Perception and Reasoning through Model Merging

🔑 Keywords: Vision-Language Models (VLMs), Large Language Models (LLMs), model merging, multimodal integration

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To explore the combination of visual perception and reasoning in Vision-Language Models (VLMs) through model merging techniques.

🛠️ Research Methods:

– Utilizing model merging to integrate the reasoning capabilities of Large Language Models (LLMs) into VLMs without requiring additional training.

– Conducting extensive experiments to assess the impact of model merging on perception and reasoning mechanisms within VLMs.

💬 Research Conclusions:

– Model merging successfully transfers reasoning abilities from LLMs to VLMs.

– Perception is primarily encoded in early layers, while reasoning emerges in the middle-to-late layers of models.

– After merging, all layers contribute to reasoning, enhancing the potential of multimodal integration and interpretation.

👉 Paper link: https://huggingface.co/papers/2505.05464
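
As a rough illustration of training-free model merging, the sketch below linearly interpolates parameters that two models share. The digest does not state which merging scheme the paper uses, so linear interpolation, the `alpha` weight, and the parameter names are all assumptions; weights are plain lists of floats to keep the example self-contained.

```python
def merge_weights(vlm_weights, llm_weights, alpha=0.5):
    """Interpolate shared parameters: (1 - alpha) * VLM + alpha * LLM.

    Parameters that exist only in the VLM (for example a vision projector)
    or whose shapes differ are copied from the VLM unchanged.
    """
    merged = {}
    for name, vlm_param in vlm_weights.items():
        llm_param = llm_weights.get(name)
        if llm_param is None or len(llm_param) != len(vlm_param):
            merged[name] = list(vlm_param)  # keep VLM-only parameters as-is
            continue
        merged[name] = [
            (1 - alpha) * v + alpha * l for v, l in zip(vlm_param, llm_param)
        ]
    return merged


# Hypothetical toy weights, not taken from any real checkpoint.
vlm = {"layer.0": [1.0, 3.0], "vision.proj": [5.0]}
llm = {"layer.0": [3.0, 5.0]}
print(merge_weights(vlm, llm, alpha=0.5))
```

No gradient step is involved: the merged model is obtained purely by arithmetic on existing weights, which is what makes the approach training-free.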

5. Measuring General Intelligence with Generated Games

🔑 Keywords: gg-bench, Large Language Model (LLM), Gym environment, reinforcement learning, winrate

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The objective is to evaluate general reasoning capabilities in language models using gg-bench, a benchmark of dynamically generated game environments.

🛠️ Research Methods:

– A synthetic data-generating process is used: a large language model writes natural language game descriptions and implements each game as a Gym environment, and reinforcement learning agents are then trained on the games through self-play.

💬 Research Conclusions:

– State-of-the-art language models, such as GPT-4o and Claude 3.7 Sonnet, achieve relatively low winrates of 7-9% on gg-bench, whereas specialized reasoning models, like o1, o3-mini, and DeepSeek-R1, achieve higher winrates of 31-36%.

– The release of games, data generation processes, and evaluation code aims to support future modeling efforts and enhancement of the benchmark.

👉 Paper link: https://huggingface.co/papers/2505.07215

6. AM-Thinking-v1: Advancing the Frontier of Reasoning at 32B Scale

🔑 Keywords: AM-Thinking-v1, Dense Language Model, Open-source innovation, Reasoning capabilities, Reinforcement Learning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To develop AM-Thinking-v1, a dense language model that advances reasoning capabilities and exemplifies open-source collaboration.

🛠️ Research Methods:

– Utilized a 32B dense language model built from an open-source base, employing a combination of supervised fine-tuning and reinforcement learning within a post-training pipeline.

💬 Research Conclusions:

– AM-Thinking-v1 surpasses models such as DeepSeek-R1 and matches Mixture-of-Experts (MoE) models, with exceptional performance on math and coding tasks. It highlights the potential of the open-source community to achieve high performance and accessibility in mid-scale models.

👉 Paper link: https://huggingface.co/papers/2505.08311

7. TRAIL: Trace Reasoning and Agentic Issue Localization

🔑 Keywords: Agentic Workflows, Error Analysis, Long Context LLMs, TRAIL

💡 Category: AI Systems and Tools

🌟 Research Objective:

– This study aims to develop scalable and systematic evaluation methods for complex traces generated by agentic workflows to address the limitations of manual analysis.

🛠️ Research Methods:

– The authors introduce a formal taxonomy of error types in agentic systems and present a dataset of 148 human-annotated traces, focusing on real-world applications.

💬 Research Conclusions:

– Modern long context LLMs, including the Gemini-2.5-pro model, demonstrate poor performance in trace debugging, achieving only an 11% score on the TRAIL dataset. The dataset and code have been made publicly available to aid further research in this area.

👉 Paper link: https://huggingface.co/papers/2505.08638

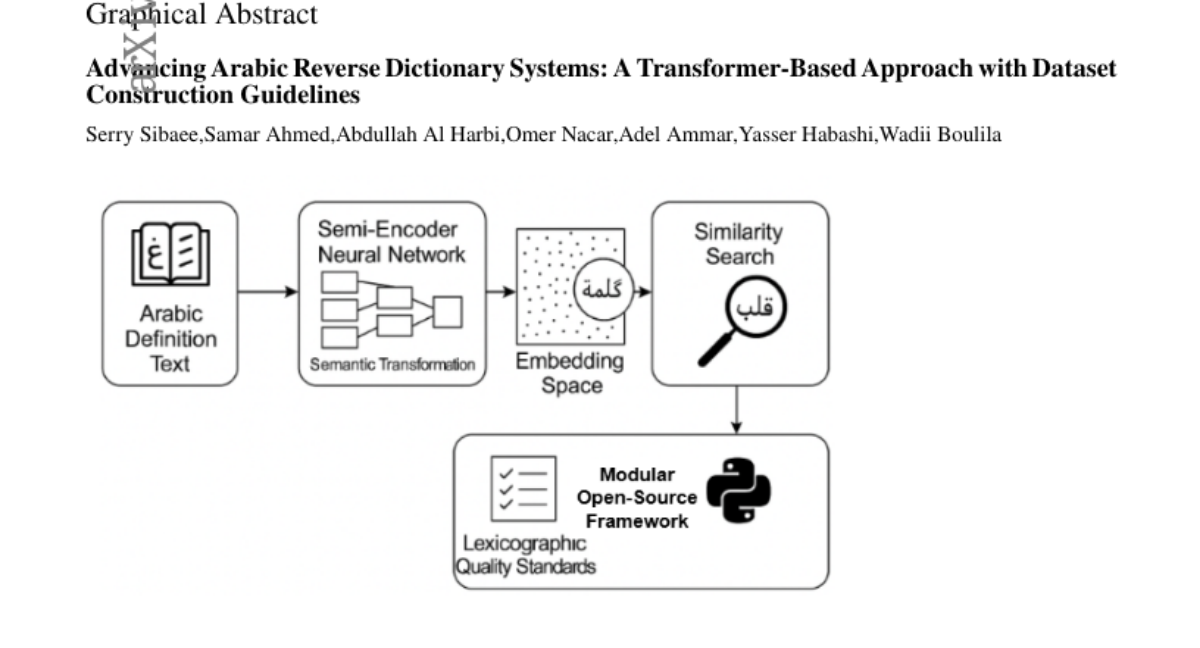

8. Advancing Arabic Reverse Dictionary Systems: A Transformer-Based Approach with Dataset Construction Guidelines

🔑 Keywords: Arabic Reverse Dictionary, Transformer-Based Approach, Semi-Encoder Neural Network, ARBERTv2, Python Library

💡 Category: Natural Language Processing

🌟 Research Objective:

– Develop an effective Arabic Reverse Dictionary system using a novel transformer-based approach to fill a gap in Arabic natural language processing.

🛠️ Research Methods:

– Utilized a semi-encoder neural network with geometrically decreasing layers and established formal quality standards for lexicographic definitions.

💬 Research Conclusions:

– Arabic-specific models significantly outperform multilingual models, and the ARBERTv2 model achieves the highest ranking score. A modular, extensible Python library with configurable training pipelines was developed to aid language learning and communication in Arabic.

👉 Paper link: https://huggingface.co/papers/2504.21475
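
One possible reading of "geometrically decreasing layers" is that each layer is narrower than the previous one by a constant ratio. The sketch below computes such a width schedule; the starting width of 768, the ratio of 0.5, and the function name are assumptions, not values taken from the paper.

```python
def geometric_layer_widths(input_dim, num_layers, ratio=0.5):
    """Return layer widths that shrink by a constant factor per layer."""
    if not 0 < ratio < 1:
        raise ValueError("ratio must be between 0 and 1")
    widths = []
    width = input_dim
    for _ in range(num_layers):
        width = max(1, int(width * ratio))  # never shrink below one unit
        widths.append(width)
    return widths


print(geometric_layer_widths(768, 3))  # [384, 192, 96]
```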

9. SkillFormer: Unified Multi-View Video Understanding for Proficiency Estimation

🔑 Keywords: SkillFormer, parameter-efficient architecture, multi-view integration, adaptive self-calibration

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to address the challenge of assessing human skill levels in complex activities, with applications in areas such as sports, rehabilitation, and training.

🛠️ Research Methods:

– The research introduces SkillFormer, a parameter-efficient architecture built on the TimeSformer backbone. It utilizes a CrossViewFusion module with multi-head cross-attention, learnable gating, and adaptive self-calibration.

💬 Research Conclusions:

– SkillFormer exhibits state-of-the-art accuracy and computational efficiency in multi-view settings, using significantly fewer parameters and requiring fewer training epochs compared to existing baselines.

👉 Paper link: https://huggingface.co/papers/2505.08665

10. Memorization-Compression Cycles Improve Generalization

🔑 Keywords: Information Bottleneck Language Modeling, memorization-compression cycle, Gated Phase Transition, representation entropy, sleep consolidation

💡 Category: Natural Language Processing

🌟 Research Objective:

– To show that generalization improves through both scaling data and compressing internal representations.

🛠️ Research Methods:

– Introduced Information Bottleneck Language Modeling (IBLM), formulated as a constrained optimization problem.

– Proposed a training algorithm, Gated Phase Transition (GAPT), which adapts between memorization and compression phases.

💬 Research Conclusions:

– GAPT reduced representation entropy by 50% and improved cross-entropy by 4.8%.

– Improved out-of-distribution generalization by 35% in pretraining tasks.

– Achieved a 97% improvement in representation separation in a setting that simulates catastrophic forgetting, paralleling the role of sleep consolidation.

👉 Paper link: https://huggingface.co/papers/2505.08727

11. Aya Vision: Advancing the Frontier of Multilingual Multimodality

🔑 Keywords: Multimodal Language Models, Aya Vision, Multilingual, Synthetic Annotation Framework, Catastrophic Forgetting

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop robust techniques for building multilingual multimodal models that align vision and language, curate high-quality instruction data, and address the challenges of data scarcity and catastrophic forgetting.

🛠️ Research Methods:

– Introduced a synthetic annotation framework for creating diverse multilingual multimodal instruction data.

– Proposed a cross-modal model merging technique to prevent catastrophic forgetting while enhancing multimodal generative performance.

💬 Research Conclusions:

– Aya-Vision-8B demonstrated best-in-class performance in comparison to leading multimodal models.

– Aya-Vision-32B outperformed models more than twice its size, highlighting the efficacy of the proposed methods in advancing multilingual multimodal capabilities.

👉 Paper link: https://huggingface.co/papers/2505.08751

12. ViMRHP: A Vietnamese Benchmark Dataset for Multimodal Review Helpfulness Prediction via Human-AI Collaborative Annotation

🔑 Keywords: Multimodal Review Helpfulness Prediction, E-commerce, Linguistic Diversity, AI Assistance, Vietnamese

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to introduce the ViMRHP dataset to address the lack of linguistic diversity in existing datasets for predicting the helpfulness of reviews on E-commerce platforms, specifically targeting the Vietnamese language.

🛠️ Research Methods:

– To create the dataset, the researchers used AI to assist human annotators, cutting annotation time from 90-120 seconds per task to 20-40 seconds per task while maintaining data quality.

💬 Research Conclusions:

– The ViMRHP dataset, consisting of four domains and 46K reviews, enhances linguistic diversity and provides a basis for evaluating AI-generated versus human-verified annotations, demonstrating its practical application in assessing quality differences. The dataset is made publicly available for further research.

👉 Paper link: https://huggingface.co/papers/2505.07416
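
The annotation-time figures above imply a range of speedups rather than a single number. The short calculation below works out that range from the two reported intervals; the helper name is hypothetical, and pairing the interval endpoints this way gives only the widest possible bounds.

```python
def speedup_range(before, after):
    """Return (smallest, largest) speedup implied by two (min, max) ranges."""
    smallest = before[0] / after[1]  # fastest old time vs slowest new time
    largest = before[1] / after[0]   # slowest old time vs fastest new time
    return smallest, largest


low, high = speedup_range((90, 120), (20, 40))
print(f"{low:.2f}x to {high:.1f}x")  # 2.25x to 6.0x
```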

13. Optimizing Retrieval-Augmented Generation: Analysis of Hyperparameter Impact on Performance and Efficiency

🔑 Keywords: Retrieval-augmented generation, hyperparameters, Chroma, Faiss, retrieval accuracy

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to analyze the influence of hyperparameters on the speed and quality of Retrieval-Augmented Generation (RAG) systems.

🛠️ Research Methods:

– The research evaluates various RAG configurations, including vector stores, chunking policies, and re-ranking methods, assessing them on six metrics, among them faithfulness, context precision, and answer relevance.

💬 Research Conclusions:

– The results show a speed-accuracy trade-off between Chroma and Faiss, with naive fixed-length chunking proving efficient. Re-ranking improves retrieval quality only modestly while significantly increasing runtime. The study concludes that RAG systems can achieve high retrieval accuracy through careful hyperparameter tuning, which has direct implications for applications such as clinical decision support in healthcare.

👉 Paper link: https://huggingface.co/papers/2505.08445
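
Naive fixed-length chunking, the policy named in the conclusions above, can be sketched in a few lines. This is an illustration rather than the study's code: the function name, the character-based chunk size, and the optional overlap parameter are assumptions.

```python
def fixed_length_chunks(text, chunk_size=200, overlap=0):
    """Split text into consecutive character windows of at most chunk_size.

    With overlap > 0, each window starts `chunk_size - overlap` characters
    after the previous one, so neighbouring chunks share some context.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, len(text), step)]


print(fixed_length_chunks("abcdefghij", chunk_size=4))
# ['abcd', 'efgh', 'ij']
```

The appeal of this policy is that it needs no parsing or model calls at indexing time, which is consistent with the efficiency the study reports for it.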

14. NavDP: Learning Sim-to-Real Navigation Diffusion Policy with Privileged Information Guidance

🔑 Keywords: Navigation Diffusion Policy, diffusion-based trajectory generation, policy transformer, sim-to-real transfer

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop an efficient framework for robot navigation in dynamic open-world environments without relying on precise localization or costly real-world demonstrations.

🛠️ Research Methods:

– Introduce the Navigation Diffusion Policy (NavDP), which combines diffusion-based trajectory generation with a critic function for trajectory selection, both operating on local observation tokens encoded by a policy transformer.

– Train the model in simulation, generating 2,500 trajectories per GPU per day to build a large-scale navigation dataset covering diverse environments.

💬 Research Conclusions:

– NavDP achieves state-of-the-art performance and excellent generalization across different robot types in varied indoor and outdoor settings.

– Introducing Gaussian Splatting for real-to-sim fine-tuning enhances success rates by 30% without compromising generalization abilities.

👉 Paper link: https://huggingface.co/papers/2505.08712
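
The generate-then-select pattern described in the methods can be sketched as follows: several candidate trajectories are proposed, a critic scores each, and the highest-scoring one is executed. The toy critic here, which simply rewards ending near a goal point, is a hypothetical stand-in for the learned critic, and the hard-coded candidates stand in for diffusion samples.

```python
import math


def select_trajectory(candidates, critic):
    """Return the candidate trajectory with the highest critic score."""
    return max(candidates, key=critic)


def make_goal_critic(goal):
    """Build a toy critic that prefers trajectories ending near `goal`."""
    def critic(trajectory):
        end_x, end_y = trajectory[-1]
        return -math.hypot(goal[0] - end_x, goal[1] - end_y)
    return critic


# Hypothetical candidates; a real system would sample these from the policy.
candidates = [
    [(0, 0), (1, 1), (2, 2)],
    [(0, 0), (2, 0), (4, 0)],
    [(0, 0), (0, 1), (0, 2)],
]
best = select_trajectory(candidates, make_goal_critic(goal=(5, 0)))
print(best)  # [(0, 0), (2, 0), (4, 0)]
```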

15.