AI Native Daily Paper Digest – 20250515

1. BLIP3-o: A Family of Fully Open Unified Multimodal Models-Architecture, Training and Dataset

🔑 Keywords: Unifying image understanding, Image generation, Diffusion transformer, Sequential pretraining strategy, BLIP3-o

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Investigate the optimal architecture and training strategy for a unified framework integrating image understanding and generation.

🛠️ Research Methods:

– Utilization of autoregressive and diffusion models to enhance image representations and training strategies.

– Implementation of a diffusion transformer to generate semantically rich CLIP image features.

– Adoption of a sequential pretraining strategy focusing initially on image understanding, then on image generation.

💬 Research Conclusions:

– The research introduces BLIP3-o, a novel suite of unified multimodal models demonstrating superior performance in both image understanding and generation benchmarks.

– The high-quality instruction-tuning dataset, BLIP3o-60k, supports the development of state-of-the-art models.

– Comprehensive open-sourcing of models, code, and datasets to promote future research advancements.

👉 Paper link: https://huggingface.co/papers/2505.09568

2. DeCLIP: Decoupled Learning for Open-Vocabulary Dense Perception

🔑 Keywords: Vision-Language Models, CLIP, dense prediction, local discriminability, spatial consistency

💡 Category: Computer Vision

🌟 Research Objective:

– To address the limitations of CLIP in dense visual prediction tasks, particularly in improving local discriminability and spatial consistency.

🛠️ Research Methods:

– Introduced DeCLIP, a novel framework that decouples the self-attention module to independently enhance “content” and “context” features, improving aggregative performance in open-vocabulary dense prediction tasks.

💬 Research Conclusions:

– DeCLIP significantly outperforms existing methods in tasks like object detection and semantic segmentation, demonstrating enhanced capability in handling unbounded visual concepts with improved spatial correlations.

👉 Paper link: https://huggingface.co/papers/2505.04410

3. Insights into DeepSeek-V3: Scaling Challenges and Reflections on Hardware for AI Architectures

🔑 Keywords: Hardware-Aware Model Co-Design, Multi-head Latent Attention, Mixture of Experts, FP8 Mixed-Precision Training, Multi-Plane Network Topology

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To address the limitations of current hardware architectures in supporting large language models through a hardware-aware model co-design approach.

🛠️ Research Methods:

– Trained the DeepSeek-V3 model on 2,048 NVIDIA H800 GPUs, utilizing innovations such as Multi-head Latent Attention for memory efficiency, Mixture of Experts for computation-communication trade-offs, FP8 mixed-precision training, and a Multi-Plane Network Topology to reduce network overhead.

💬 Research Conclusions:

– Demonstrated that hardware-aware model co-design can significantly enhance the efficiency of AI training and inference, providing a blueprint for future developments in AI systems to meet the demands of large-scale models.

👉 Paper link: https://huggingface.co/papers/2505.09343

4. Marigold: Affordable Adaptation of Diffusion-Based Image Generators for Image Analysis

🔑 Keywords: deep learning, pretrained models, generative models, latent diffusion, zero-shot generalization

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce Marigold, a family of conditional generative models designed for dense image analysis tasks.

🛠️ Research Methods:

– Utilize pretrained latent diffusion models with minimal architectural modifications and fine-tuning using small synthetic datasets on a single GPU.

💬 Research Conclusions:

– Marigold demonstrates state-of-the-art zero-shot generalization capabilities in tasks like monocular depth estimation and surface normals prediction.

👉 Paper link: https://huggingface.co/papers/2505.09358

5. UniSkill: Imitating Human Videos via Cross-Embodiment Skill Representations

🔑 Keywords: Mimicry, UniSkill, embodiment-agnostic, cross-embodiment video data, robot policies

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop UniSkill framework to enable robots to learn skills from human video prompts in an embodiment-agnostic manner.

🛠️ Research Methods:

– Utilize large-scale cross-embodiment video data without labels to teach robots skills transferable from humans to robots, tested in both simulation and real-world environments.

💬 Research Conclusions:

– Successfully guided robots in selecting appropriate actions with skills extracted from human video prompts, even in scenarios with unseen prompts.

👉 Paper link: https://huggingface.co/papers/2505.08787

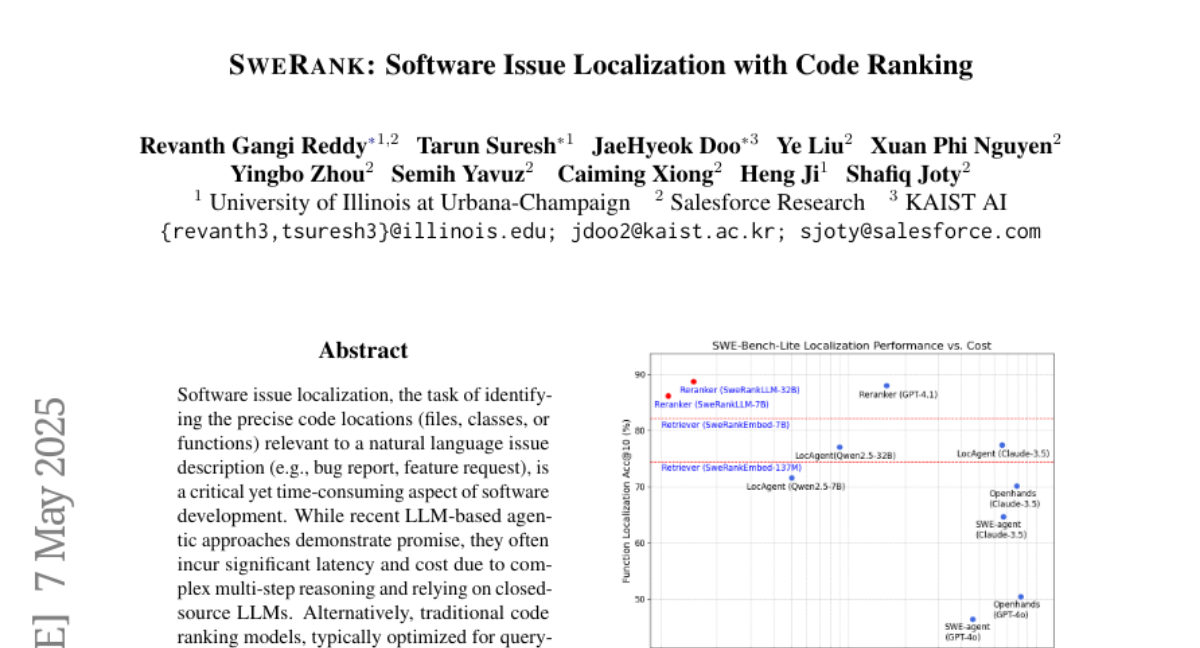

6. SweRank: Software Issue Localization with Code Ranking

🔑 Keywords: Software issue localization, Closed-source LLMs, SweRank, SweLoc, Retrieve-and-rerank framework

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The research introduces SweRank, a novel retrieve-and-rerank framework aimed at improving the efficiency and effectiveness of software issue localization.

🛠️ Research Methods:

– The authors constructed a large-scale dataset named SweLoc, sourced from public GitHub repositories, to facilitate the training of the proposed framework.

💬 Research Conclusions:

– Empirical results demonstrate that SweRank outperforms existing ranking models and closed-source LLM-based systems, establishing SweLoc as a valuable resource for enhancing retriever and reranker models in the domain of issue localization.

👉 Paper link: https://huggingface.co/papers/2505.07849

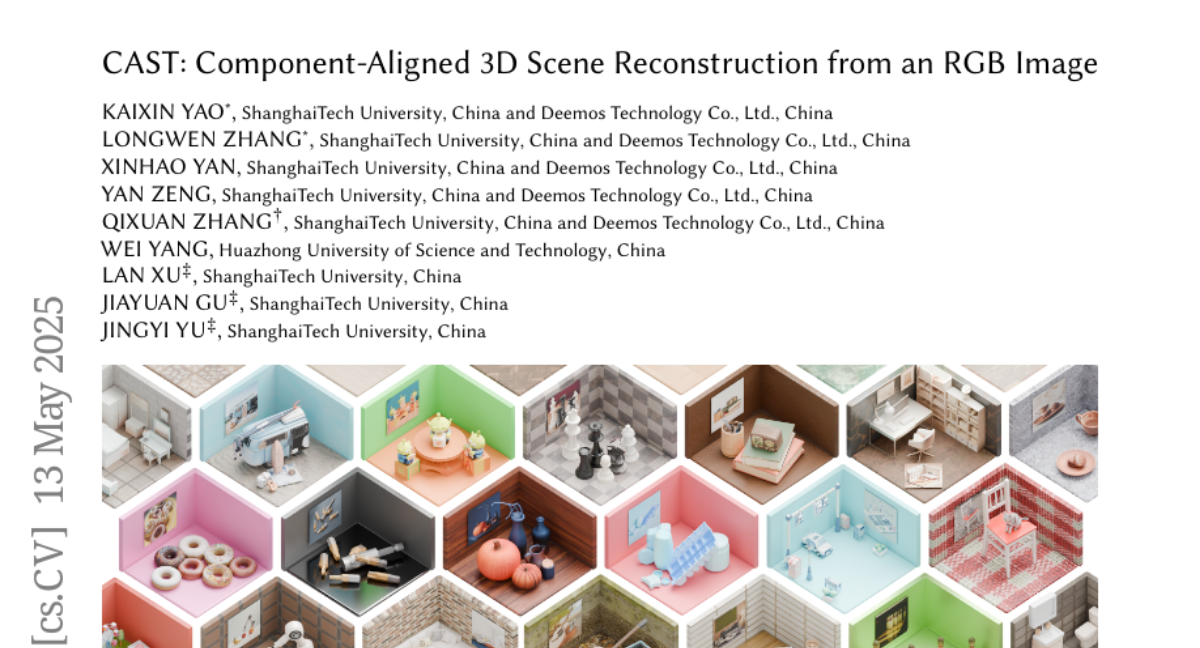

7. CAST: Component-Aligned 3D Scene Reconstruction from an RGB Image

🔑 Keywords: CAST, 3D scene reconstruction, GPT-based model, occlusion-aware, physical consistency

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to recover high-quality 3D scenes from a single RGB image, addressing the challenges of domain-specific limitations and low-quality object generation.

🛠️ Research Methods:

– Utilizes a novel method called CAST for 3D scene reconstruction, which begins with object-level 2D segmentation and depth information extraction.

– Employs a GPT-based model to analyze inter-object spatial relationships for coherent reconstruction and uses an occlusion-aware 3D generation model to independently generate each object’s geometry.

– Incorporates a physics-aware correction step to ensure physical consistency and spatial coherence, with the aid of a fine-grained relation graph and Signed Distance Fields.

💬 Research Conclusions:

– The CAST method provides accurate alignment and integration of generated meshes into the scene’s point cloud, effectively addressing issues like occlusions and floating objects.

– CAST is applicable in robotics, offering efficient real-to-simulation workflows and realistic, scalable simulation environments.

👉 Paper link: https://huggingface.co/papers/2502.12894

8. WavReward: Spoken Dialogue Models With Generalist Reward Evaluators

🔑 Keywords: Spoken Dialogue Models, WavReward, Reinforcement Learning, Audio Language Models, ChatReward-30K

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the evaluation gap in spoken dialogue models by proposing WavReward, which assesses both IQ and EQ through audio-based analysis.

🛠️ Research Methods:

– Utilizes audio language models and reinforcement learning to create WavReward, incorporating deep reasoning and nonlinear reward mechanisms. A new dataset, ChatReward-30K, supports its training process.

💬 Research Conclusions:

– WavReward significantly outperforms previous models in spoken dialogue evaluation, achieving a 91.5% objective accuracy and an 83% improvement in subjective testing, with comprehensive ablation studies validating each model component.

👉 Paper link: https://huggingface.co/papers/2505.09558

9. VCRBench: Exploring Long-form Causal Reasoning Capabilities of Large Video Language Models

🔑 Keywords: Large Video Language Models (LVLMs), causal reasoning, VCRBench, Recognition-Reasoning Decomposition (RRD), video recognition

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To explore and enhance the capabilities of Large Video Language Models in performing video-based causal reasoning, particularly in visually grounded and goal-driven settings, through the introduction of a novel benchmark, VCRBench.

🛠️ Research Methods:

– Introduction of VCRBench, a benchmark using procedural videos with shuffled steps to assess LVLMs’ ability to identify and sequence key causal events.

– Proposal of Recognition-Reasoning Decomposition (RRD), a modular approach dividing causal reasoning into video recognition and causal reasoning tasks.

💬 Research Conclusions:

– State-of-the-art LVLMs struggle with long-form causal reasoning due to difficulties in modeling long-range causal dependencies from visual observations.

– The RRD approach significantly improves model accuracy on VCRBench, with gains up to 25.2%, and highlights that LVLMs mainly rely on language knowledge for complex causal reasoning tasks.

👉 Paper link: https://huggingface.co/papers/2505.08455

10. Omni-R1: Do You Really Need Audio to Fine-Tune Your Audio LLM?

🔑 Keywords: Omni-R1, multi-modal LLM, Qwen2.5-Omni, reinforcement learning, text-based reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper introduces Omni-R1, a model fine-tuned on Qwen2.5-Omni using reinforcement learning to excel at audio question answering.

🛠️ Research Methods:

– Reinforcement learning method GRPO was used to fine-tune Qwen2.5-Omni on an audio dataset, achieving top performance on the MMAU benchmark.

💬 Research Conclusions:

– Omni-R1 demonstrated state-of-the-art performance on multiple audio categories and highlighted the effectiveness of text-based reasoning in enhancing audio-based performance.

👉 Paper link: https://huggingface.co/papers/2505.09439

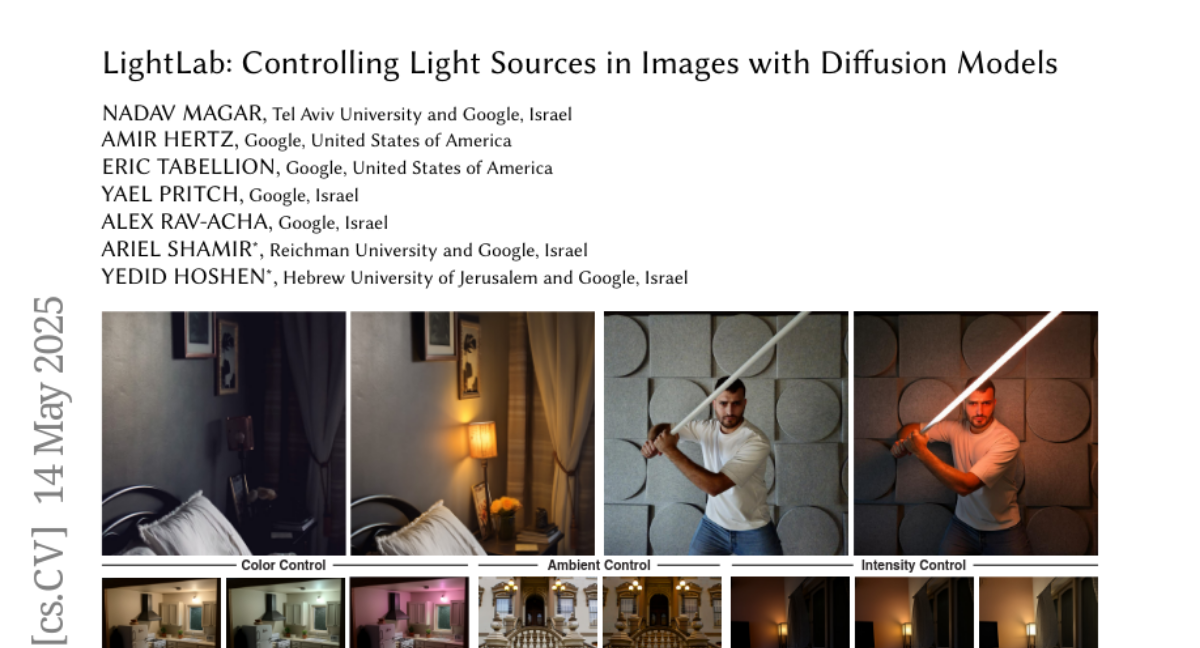

11. LightLab: Controlling Light Sources in Images with Diffusion Models

🔑 Keywords: diffusion-based method, light sources, inverse rendering, relighting methods, photorealistic prior

💡 Category: Generative Models

🌟 Research Objective:

– Introduce a diffusion-based method for fine-grained parametric control over light sources in images, overcoming limitations of existing relighting methods.

🛠️ Research Methods:

– Fine-tune a diffusion model on real raw photograph pairs and synthetically-rendered images to establish photorealistic prior for controlled light changes.

– Leverage the linearity of light to synthesize image pairs for target light source or ambient illumination adjustments.

💬 Research Conclusions:

– The proposed method achieves superior light editing results with explicit control over light intensity and color, outperforming existing methods based on user preferences.

👉 Paper link: https://huggingface.co/papers/2505.09608

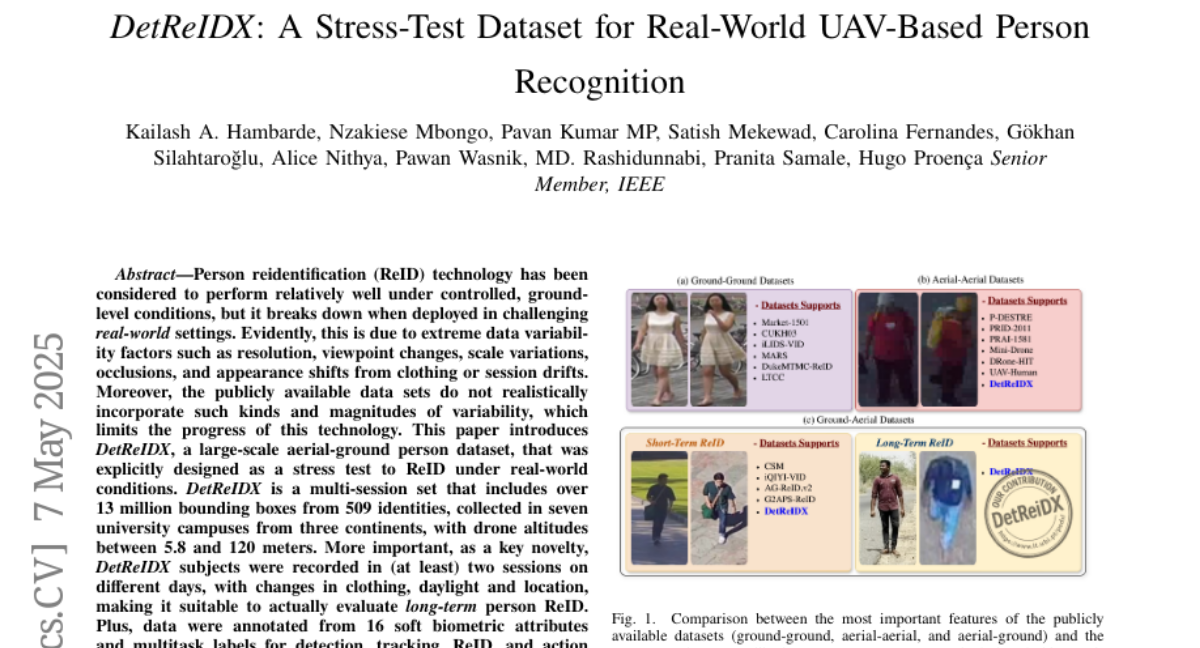

12. DetReIDX: A Stress-Test Dataset for Real-World UAV-Based Person Recognition

🔑 Keywords: Person ReID, Real-world conditions, DetReIDX, Dataset, SOTA methods

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce a large-scale aerial-ground person dataset, DetReIDX, designed as a stress test for person reidentification (ReID) technology under challenging real-world conditions.

🛠️ Research Methods:

– Collection of data including over 13 million bounding boxes from 509 identities on seven university campuses across three continents using drone altitudes of 5.8 to 120 meters.

– Addition of annotations for 16 soft biometric attributes and multitask labels for detection, tracking, ReID, and action recognition.

💬 Research Conclusions:

– DetReIDX highlights the performance decline of State-Of-The-Art (SOTA) methods by up to 80% in detection accuracy and over 70% in Rank-1 ReID under its challenging conditions.

👉 Paper link: https://huggingface.co/papers/2505.04793

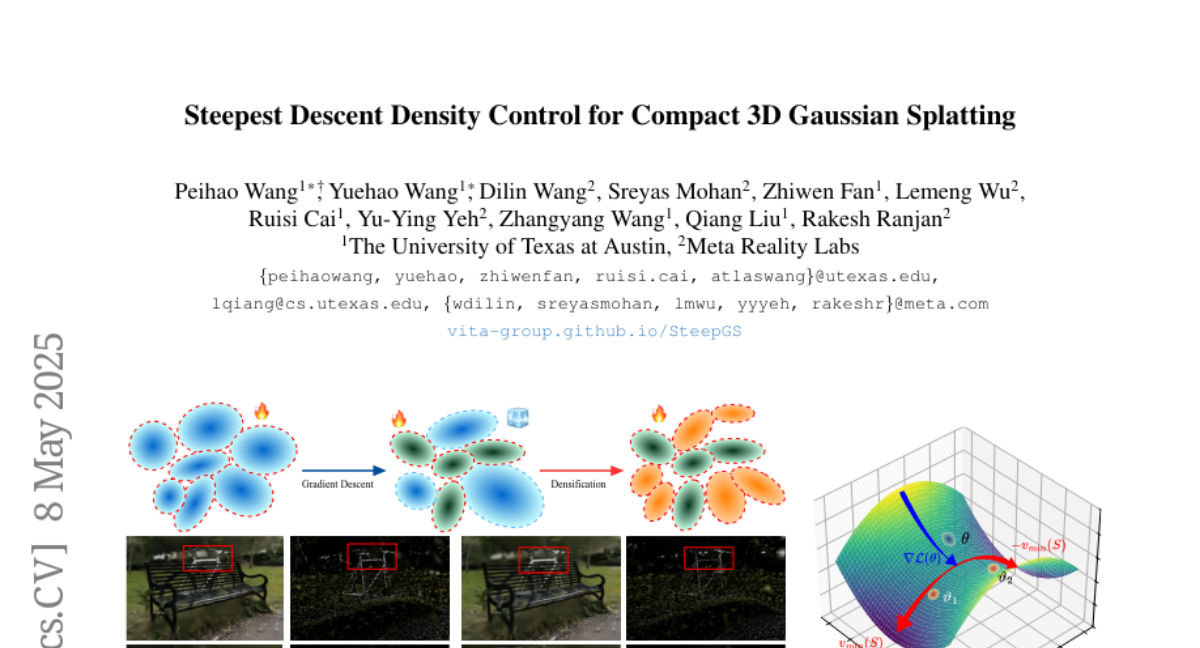

13. Steepest Descent Density Control for Compact 3D Gaussian Splatting

🔑 Keywords: 3D Gaussian Splatting, real-time, novel view synthesis, GPU rasterization pipelines, SteepGS

💡 Category: Computer Vision

🌟 Research Objective:

– To improve efficiency and scalability in real-time, high-resolution novel view synthesis using 3D Gaussian Splatting through better density control.

🛠️ Research Methods:

– Utilizes a theoretical framework for density control including an optimization-theoretic approach, with specific techniques such as splitting and a densification algorithm.

💬 Research Conclusions:

– Introduces SteepGS, a strategy that achieves roughly 50% reduction in Gaussian points, minimizing memory usage and maintaining rendering quality, thus enhancing efficiency and scalability.

👉 Paper link: https://huggingface.co/papers/2505.05587

14. Understanding and Mitigating Toxicity in Image-Text Pretraining Datasets: A Case Study on LLaVA

🔑 Keywords: Pretraining dataset, web-scale corpora, toxicity, LLaVA, image-text pairs

💡 Category: AI Ethics and Fairness

🌟 Research Objective:

– To investigate the prevalence of toxicity in LLaVA image-text pretraining datasets and examine how harmful content manifests in different modalities.

🛠️ Research Methods:

– Conduct a comprehensive analysis of common toxicity categories and propose mitigation strategies, leading to the creation of a refined toxicity-mitigated dataset.

💬 Research Conclusions:

– Findings highlight the importance of identifying and filtering toxic content, such as hate speech, explicit imagery, and targeted harassment, to build responsible and equitable multimodal systems; the resulting dataset is open source for further research.

👉 Paper link: https://huggingface.co/papers/2505.06356

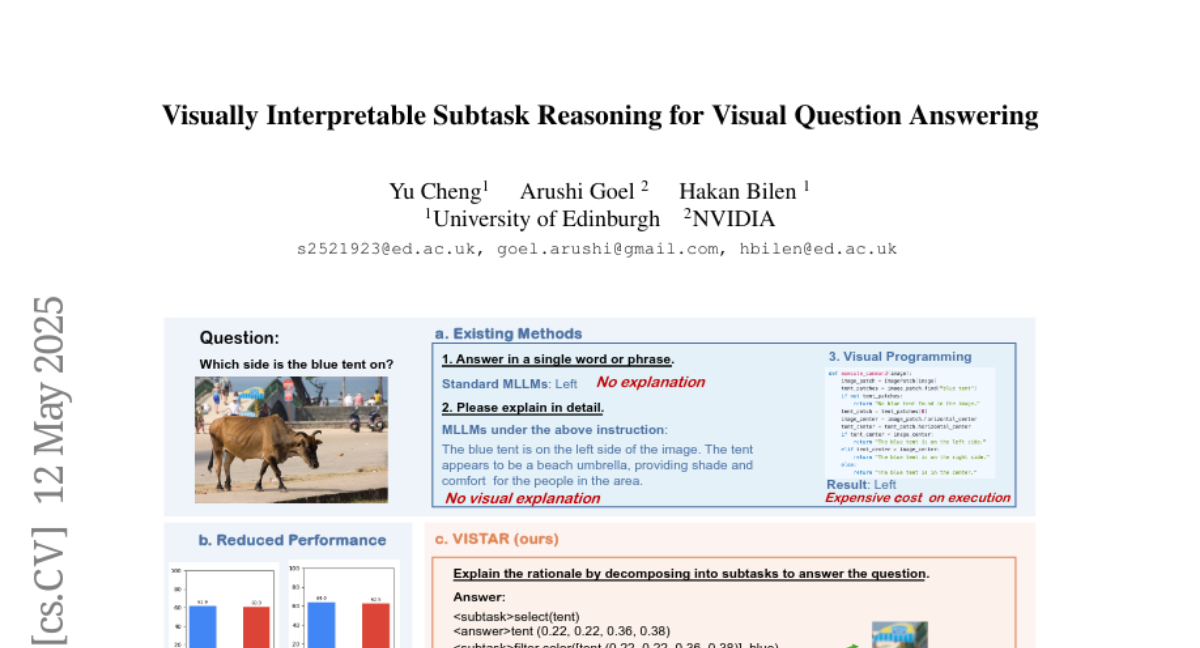

15. Visually Interpretable Subtask Reasoning for Visual Question Answering

🔑 Keywords: multimodal large language models (MLLMs), VISTAR, subtask-driven training framework, interpretability

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance interpretability and reasoning in multimodal large language models by introducing the Visually Interpretable Subtask-Aware Reasoning Model (VISTAR).

🛠️ Research Methods:

– Development of a subtask-driven training framework that generates textual and visual explanations, fine-tuning MLLMs to produce structured Subtask-of-Thought rationales.

💬 Research Conclusions:

– VISTAR consistently improves reasoning accuracy and interpretability when applied to complex visual question answering, as validated by experiments on two benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.08084

16. Behind Maya: Building a Multilingual Vision Language Model

🔑 Keywords: Vision-Language Models, Multilingual, Low-Resource Languages, Cultural Comprehension

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The introduction of Maya, an open-source Multilingual VLM, to improve performance in low-resource languages and varied cultural contexts.

🛠️ Research Methods:

– Creation of a multilingual image-text pretraining dataset in eight languages based on the LLaVA pretraining dataset.

– Development of a multilingual image-text model to support these languages, enhancing cultural and linguistic comprehension.

💬 Research Conclusions:

– Maya advances vision-language tasks across different languages and cultural contexts, showcasing versatility and improved comprehension through a multilingual approach.

👉 Paper link: https://huggingface.co/papers/2505.08910

17.