AI Native Daily Paper Digest – 20250523

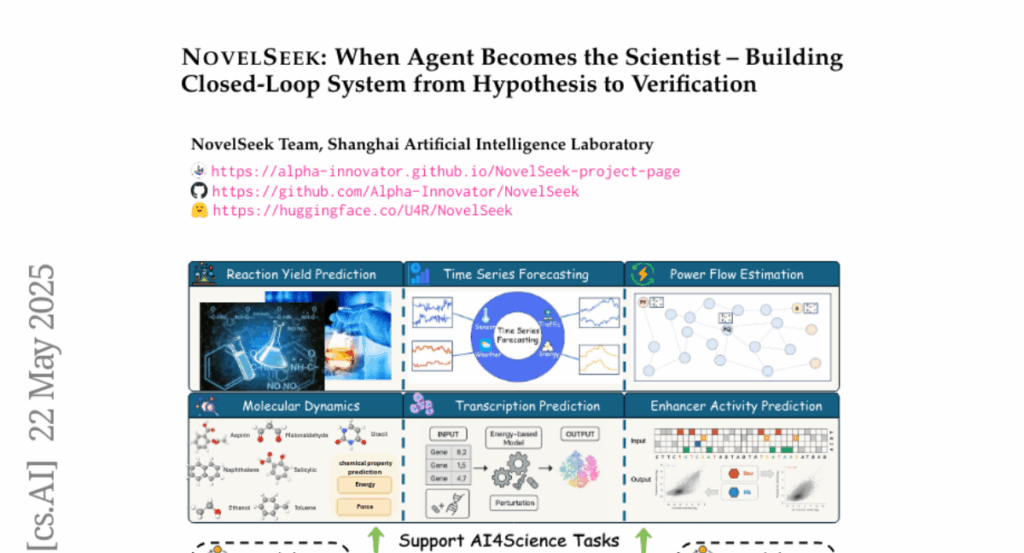

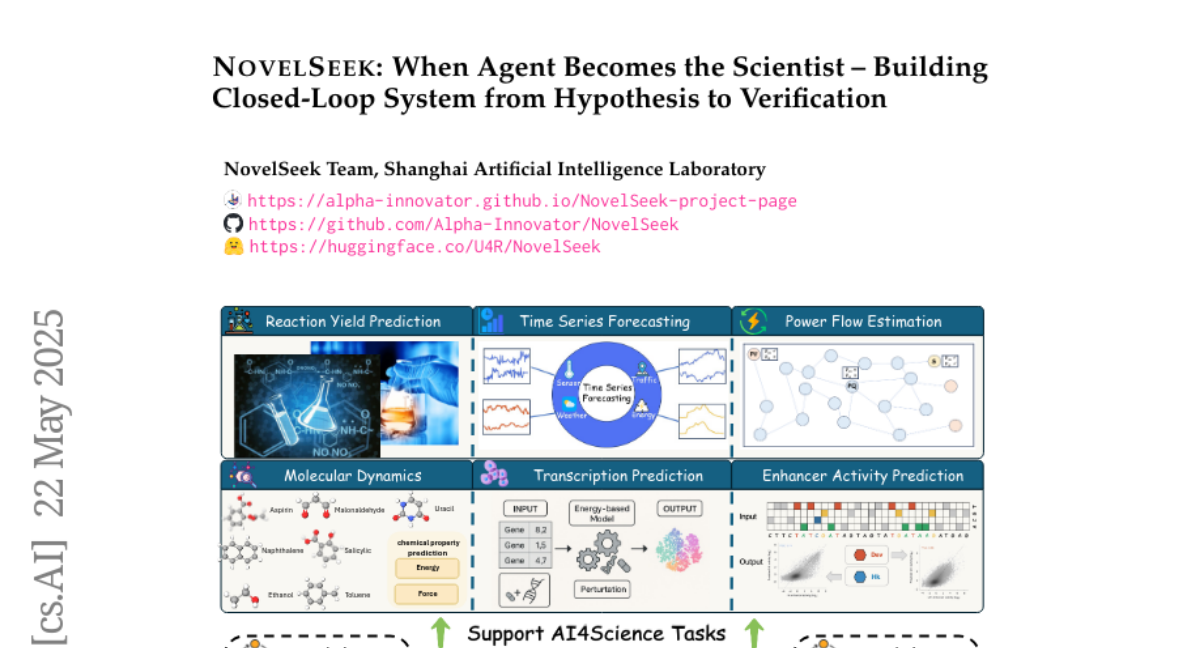

1. NovelSeek: When Agent Becomes the Scientist — Building Closed-Loop System from Hypothesis to Verification

🔑 Keywords: AI, Autonomous Scientific Research, Multi-Agent Framework, NovelSeek, Innovation

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper introduces NovelSeek, a unified closed-loop multi-agent framework designed to conduct Autonomous Scientific Research (ASR) efficiently and innovatively across various scientific fields.

🛠️ Research Methods:

– NovelSeek incorporates scalability, interactivity, and efficiency by facilitating innovative idea generation, expert feedback integration, and multi-agent interactions.

💬 Research Conclusions:

– NovelSeek significantly enhances research efficiency by improving performance metrics in diverse tasks such as reaction yield prediction, enhancer activity prediction, and 2D semantic segmentation, achieving notable performance gains in much less time compared to traditional human efforts.

👉 Paper link: https://huggingface.co/papers/2505.16938

2. Scaling Reasoning, Losing Control: Evaluating Instruction Following in Large Reasoning Models

🔑 Keywords: MathIF, Instruction-following, Reasoning-oriented models, Reinforcement learning, Instruction adherence

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study introduces MathIF, a benchmark for evaluating instruction-following in mathematical reasoning tasks within large language models.

🛠️ Research Methods:

– Empirical analysis was conducted on the tension between reasoning capacity and instruction adherence in models using techniques like reasoning-oriented reinforcement learning and distilled chains-of-thought.

💬 Research Conclusions:

– The findings suggest a consistent tension between scaling reasoning capacity and maintaining controllability, with reasoning-focused models often struggling with instruction adherence, especially with increased generation length. Simple interventions can restore some adherence but decrease reasoning performance.

👉 Paper link: https://huggingface.co/papers/2505.14810

3. Tool-Star: Empowering LLM-Brained Multi-Tool Reasoner via Reinforcement Learning

🔑 Keywords: Tool-Star, RL-based framework, multi-tool collaborative reasoning, hierarchical reward design, tool-use data

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduce Tool-Star, a RL-based framework to enable LLMs to autonomously use multiple tools for stepwise reasoning.

🛠️ Research Methods:

– A general tool-integrated reasoning data synthesis pipeline that combines prompting and hint-based sampling to generate tool-use trajectories.

– A two-stage training framework featuring cold-start fine-tuning and a multi-tool self-critic RL algorithm with hierarchical rewards.

💬 Research Conclusions:

– Tool-Star proves effective and efficient across over 10 challenging reasoning benchmarks.

– Offers a systematic approach for improving tool collaboration within LLMs.

👉 Paper link: https://huggingface.co/papers/2505.16410

4. Pixel Reasoner: Incentivizing Pixel-Space Reasoning with Curiosity-Driven Reinforcement Learning

🔑 Keywords: Vision-Language Models, Pixel-Space Reasoning, Reinforcement Learning, Curiosity-Driven Reward Scheme

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce pixel-space reasoning in Vision-Language Models (VLMs) to enhance their performance on visually intensive tasks.

🛠️ Research Methods:

– Implement a two-phase training approach, including instruction tuning on synthesized reasoning traces and a curiosity-driven reinforcement learning phase.

💬 Research Conclusions:

– The proposed framework significantly improves VLM performance across diverse visual reasoning benchmarks, achieving the highest accuracy in open-source models.

👉 Paper link: https://huggingface.co/papers/2505.15966

5. KRIS-Bench: Benchmarking Next-Level Intelligent Image Editing Models

🔑 Keywords: KRIS-Bench, Knowledge-based Reasoning, Knowledge Plausibility, Multi-modal Generative Models

💡 Category: Generative Models

🌟 Research Objective:

– Introduce KRIS-Bench to assess knowledge-based reasoning in image editing models.

🛠️ Research Methods:

– Categorize tasks using a taxonomy of Factual, Conceptual, and Procedural knowledge.

– Design 22 tasks across 7 reasoning dimensions with 1,267 annotated instances.

– Propose a Knowledge Plausibility metric for nuanced evaluation.

💬 Research Conclusions:

– Identify significant gaps in reasoning performance of current models.

– Emphasize the importance of knowledge-centric benchmarks for progress in intelligent image editing systems.

👉 Paper link: https://huggingface.co/papers/2505.16707

6. QuickVideo: Real-Time Long Video Understanding with System Algorithm Co-Design

🔑 Keywords: Long-video understanding, Real-time performance, QuickVideo, AI-generated summary, VideoLLMs

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To accelerate the understanding of long videos for real-time applications like video surveillance and sports broadcasting by addressing computational bottlenecks.

🛠️ Research Methods:

– QuickVideo incorporates a parallelized CPU-based video decoder for a 2-3 times speedup, a memory-efficient prefilling method using KV-cache pruning, and overlaps CPU video decoding with GPU inference to reduce inference time.

💬 Research Conclusions:

– QuickVideo enables scalable, high-quality video understanding on limited hardware, supporting various durations and sampling rates, making long-video processing feasible in practice.

👉 Paper link: https://huggingface.co/papers/2505.16175

7. GoT-R1: Unleashing Reasoning Capability of MLLM for Visual Generation with Reinforcement Learning

🔑 Keywords: reinforcement learning, semantic-spatial reasoning, visual generation, AI Native, T2I-CompBench

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance visual generation by improving semantic-spatial reasoning using reinforcement learning, thereby surpassing existing models in handling complex compositional tasks.

🛠️ Research Methods:

– The researchers developed a framework, GoT-R1, that uses reinforcement learning combined with a dual-stage multi-dimensional reward system to enable effective reasoning strategies in visual generation.

💬 Research Conclusions:

– GoT-R1 significantly improves performance on the T2I-CompBench benchmark, particularly in tasks that require precise spatial relationships and attribute binding, advancing state-of-the-art image generation. The code and models are publicly available for further research.

👉 Paper link: https://huggingface.co/papers/2505.17022

8. LLaDA-V: Large Language Diffusion Models with Visual Instruction Tuning

🔑 Keywords: LLaDA-V, Multimodal Large Language Model, diffusion-based, visual instruction tuning, multimodal understanding

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce LLaDA-V, a diffusion-based Multimodal Large Language Model with visual instruction tuning to improve multimodal task performance.

🛠️ Research Methods:

– Integration of visual instruction tuning with masked diffusion models, incorporating a vision encoder and MLP connector for effective multimodal alignment.

💬 Research Conclusions:

– LLaDA-V shows promising multimodal performance, competitive with existing models, and achieves state-of-the-art results in multimodal understanding, suggesting large language diffusion models’ potential in multimodal contexts.

👉 Paper link: https://huggingface.co/papers/2505.16933

9. Scaling Diffusion Transformers Efficiently via μP

🔑 Keywords: Maximal Update Parametrization, Diffusion Transformers, Hyperparameter Transferability, Text-to-Image Generation

💡 Category: Generative Models

🌟 Research Objective:

– To extend Maximal Update Parametrization (μP) to diffusion Transformers and demonstrate its efficiency in hyperparameter transferability and reduction of tuning costs.

🛠️ Research Methods:

– Generalization of standard μP to diffusion Transformers and validation through large-scale experiments, including the use of models like DiT and PixArt-alpha.

💬 Research Conclusions:

– μP applied to diffusion Transformers, such as DiT and U-ViT, proves effective by significantly reducing tuning costs while achieving faster convergence and superior performance in tasks like text-to-image generation.

👉 Paper link: https://huggingface.co/papers/2505.15270

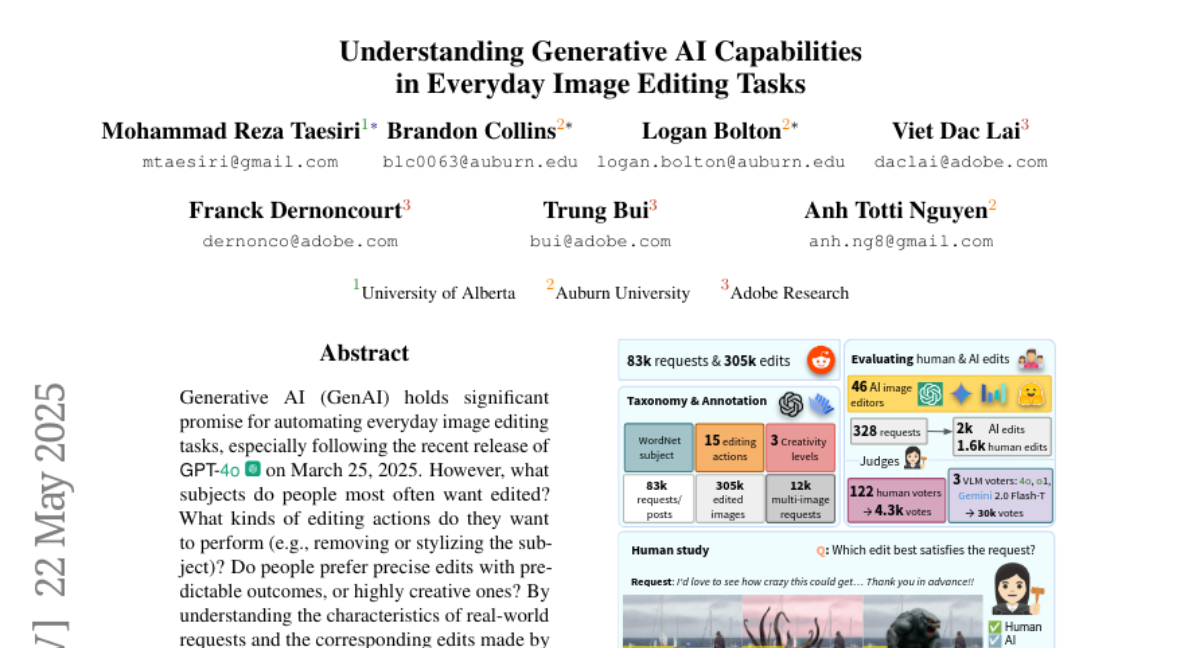

10. Understanding Generative AI Capabilities in Everyday Image Editing Tasks

🔑 Keywords: Generative AI, AI Editors, GPT-4o, Low-Creativity Tasks, VLM Judges

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to understand the kinds of image editing requests users have and if AI editors, like GPT-4o, can effectively handle these tasks.

🛠️ Research Methods:

– Analysis of 83k image editing requests from a Reddit community over 12 years to evaluate the performance of AI editors and compare human and VLM judge preferences.

💬 Research Conclusions:

– AI editors struggle with precise, low-creativity tasks and excel more in open-ended tasks. Human judges rate AI edits as fulfilling only 33% of requests, while VLM judges might prefer AI edits over human edits.

👉 Paper link: https://huggingface.co/papers/2505.16181

11. Risk-Averse Reinforcement Learning with Itakura-Saito Loss

🔑 Keywords: Risk-averse Reinforcement Learning, Itakura-Saito divergence, Exponential Utility, Numerical Stability, Action-value Functions

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance numerical stability in risk-averse reinforcement learning using a proposed Itakura-Saito divergence-based loss function.

🛠️ Research Methods:

– Derivation of Bellman equations with a focus on exponential utility functions, and implementation of reinforcement learning algorithms with minimal modifications. Theoretical and empirical evaluation of the proposed loss function in various financial scenarios.

💬 Research Conclusions:

– The proposed Itakura-Saito divergence-based loss function provides improved numerical stability and outperforms established alternatives in experiments across multiple financial scenarios.

👉 Paper link: https://huggingface.co/papers/2505.16925

12. Let LLMs Break Free from Overthinking via Self-Braking Tuning

🔑 Keywords: Self-Braking Tuning, overthinking, reasoning models, computational overhead

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To develop a framework (Self-Braking Tuning) that reduces overthinking and unnecessary computational overhead in large reasoning models by enabling them to self-regulate their reasoning process.

🛠️ Research Methods:

– Constructing a set of overthinking identification metrics and designing a systematic method to detect redundant reasoning.

– Developing a strategy for constructing data with adaptive reasoning lengths and introducing a braking prompt mechanism.

💬 Research Conclusions:

– The proposed method reduces token consumption by up to 60% while maintaining accuracy comparable to unconstrained models across mathematical benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.14604

13. Mind the Gap: Bridging Thought Leap for Improved Chain-of-Thought Tuning

🔑 Keywords: Chain-of-Thought, Thought Leaps, CoT-Bridge, ScaleQM+, Generalization

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The main objective is to detect and generate missing intermediate steps in mathematical Chain-of-Thought reasoning to enhance performance and generalization.

🛠️ Research Methods:

– The researchers developed the CoT Thought Leap Bridge Task and constructed a specialized training dataset, ScaleQM+, to train the CoT-Bridge model which facilitates filling in reasoning gaps.

💬 Research Conclusions:

– Models fine-tuned with the newly devised bridged datasets show consistent improvements over those trained on original datasets, with significant performance enhancements in mathematical reasoning benchmarks. The approach also improves data distillation and serves as an effective starting point for reinforcement learning, demonstrating improved generalization to out-of-domain tasks.

👉 Paper link: https://huggingface.co/papers/2505.14684

14. AceReason-Nemotron: Advancing Math and Code Reasoning through Reinforcement Learning

🔑 Keywords: Reinforcement Learning, Reasoning, Distillation, Math-only RL, Code-only RL

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to demonstrate that large-scale reinforcement learning (RL) enhances the reasoning capabilities of small and mid-sized models more effectively than distillation, achieving superior results on math and code benchmarks.

🛠️ Research Methods:

– The authors implement an approach that involves starting with math-only prompts and then progressing to code-only prompts for RL training, supported by a robust data curation pipeline and verification-based RL.

💬 Research Conclusions:

– The findings reveal that this large-scale RL approach significantly outperforms state-of-the-art distillation-based models in reasoning tasks, improves performance on math and code benchmarks, and enhances foundational reasoning abilities.

👉 Paper link: https://huggingface.co/papers/2505.16400

15. VideoGameQA-Bench: Evaluating Vision-Language Models for Video Game Quality Assurance

🔑 Keywords: VideoGameQA-Bench, Vision-Language Models, Quality Assurance, Automation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces VideoGameQA-Bench to assess Vision-Language Models in video game quality assurance tasks, optimizing development workflows in the industry.

🛠️ Research Methods:

– VideoGameQA-Bench serves as a comprehensive benchmark, covering tasks such as visual unit testing, visual regression testing, needle-in-a-haystack tasks, glitch detection, and bug report generation.

💬 Research Conclusions:

– The benchmark addresses the need for standardized evaluation tools in video game QA, emphasizing the potential of Vision-Language Models to automate and improve labor-intensive QA processes.

👉 Paper link: https://huggingface.co/papers/2505.15952

16. Backdoor Cleaning without External Guidance in MLLM Fine-tuning

🔑 Keywords: Attention Entropy, Multimodal Large Language Models, Backdoor Defense, Fine-tuning-as-a-Service, Self-supervised

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop a defense framework, Believe Your Eyes (BYE), to identify and filter backdoor samples in fine-tuned multimodal large language models without additional labels or model changes.

🛠️ Research Methods:

– Implement a three-stage pipeline: extracting attention maps, computing entropy scores with bimodal separation, and performing unsupervised clustering to filter suspicious samples.

💬 Research Conclusions:

– BYE effectively counters backdoor threats in MLLMs, achieving near-zero attack success rates while maintaining performance on clean tasks, without requiring clean supervision or model modifications.

👉 Paper link: https://huggingface.co/papers/2505.16916

17. Dimple: Discrete Diffusion Multimodal Large Language Model with Parallel Decoding

🔑 Keywords: DMLLM, Discrete Diffusion, Autoregressive, Confident Decoding, Structure Priors

💡 Category: Generative Models

🌟 Research Objective:

– Introduce Dimple, the first Discrete Diffusion Multimodal Large Language Model (DMLLM) to address training instability and performance issues in purely discrete diffusion approaches.

🛠️ Research Methods:

– A hybrid training paradigm combining an initial autoregressive phase with a subsequent diffusion phase to enhance efficiency and performance.

– Implement confident decoding to dynamically adjust the number of tokens generated, and explore the use of structure priors for controlled response output.

💬 Research Conclusions:

– The Dimple-7B model surpasses LLaVA-NEXT by 3.9% in performance, demonstrating that DMLLM can achieve comparable performance to autoregressive models.

– Confident decoding significantly reduces generation iterations and offers speedup benefits.

– Structure priors enable fine-grained control over response format and length, enhancing inference efficiency and controllability.

👉 Paper link: https://huggingface.co/papers/2505.16990

18. Training-Free Efficient Video Generation via Dynamic Token Carving

🔑 Keywords: video Diffusion Transformer, Jenga, dynamic attention carving, progressive resolution generation

💡 Category: Generative Models

🌟 Research Objective:

– The paper presents Jenga, an inference pipeline designed to accelerate video Diffusion Transformer models while maintaining high generation quality.

🛠️ Research Methods:

– Jenga combines dynamic attention carving with progressive resolution generation, leveraging 3D space-filling curves and a block-wise attention mechanism.

💬 Research Conclusions:

– Jenga significantly speeds up video generation by achieving an 8.83x speedup with minimal performance drop, and it facilitates practical usage on modern hardware without requiring model retraining.

👉 Paper link: https://huggingface.co/papers/2505.16864

19. Fixing Data That Hurts Performance: Cascading LLMs to Relabel Hard Negatives for Robust Information Retrieval

🔑 Keywords: false negatives, LLM prompts, retrieval models, nDCG@10, human annotation

💡 Category: Natural Language Processing

🌟 Research Objective:

– To improve the effectiveness of retrieval and reranker models by identifying and relabeling false negatives in datasets using cascading LLM prompts.

🛠️ Research Methods:

– Utilization of cascading LLM prompts to relabel datasets and examine their impact on model performance. Evaluation metrics include nDCG@10 on the BEIR benchmark and zero-shot AIR-Bench evaluation.

💬 Research Conclusions:

– Relabeling false negatives leads to significant improvements in retrieval models’ performance metrics, such as nDCG@10. The reliability of this approach is further confirmed through human annotation results showing higher agreement with GPT-4o compared to GPT-4o-mini.

👉 Paper link: https://huggingface.co/papers/2505.16967

20. SophiaVL-R1: Reinforcing MLLMs Reasoning with Thinking Reward

🔑 Keywords: Multimodal Language Models, Rule-based Reinforcement Learning, Thinking Process Rewards, Generalization, SophiaVL-R1

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Enhance reasoning and generalization in Multimodal Large Language Models (MLLMs) through the integration of thinking process rewards.

🛠️ Research Methods:

– Developed SophiaVL-R1 by incorporating a thinking reward model and the Trust-GRPO method to enhance MLLM reasoning strategies.

– Implemented an annealing training strategy to adjust reliance on thinking rewards versus rule-based outcome rewards.

💬 Research Conclusions:

– SophiaVL-R1 achieved superior performance on various benchmarks, outperforming larger models like LLaVA-OneVision-72B, demonstrating its effectiveness in reasoning and generalization.

👉 Paper link: https://huggingface.co/papers/2505.17018

21. TinyV: Reducing False Negatives in Verification Improves RL for LLM Reasoning

🔑 Keywords: TinyV, Large Language Models, Reinforcement Learning, False Negatives, Convergence

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To address the issue of false negatives in RL training of large language models, enhancing reward accuracy and convergence speed.

🛠️ Research Methods:

– Analysis of the Big-Math-RL-Verified dataset to identify the prevalence of false negatives.

– Proposal and implementation of TinyV, a lightweight LLM-based verifier to improve reward estimates.

💬 Research Conclusions:

– TinyV significantly improves pass rates and accelerates convergence by reducing false negatives compared to existing rule-based methods.

– The study underscores the importance of addressing verifier false negatives for effective RL-based fine-tuning of LLMs.

👉 Paper link: https://huggingface.co/papers/2505.14625

22. LaViDa: A Large Diffusion Language Model for Multimodal Understanding

🔑 Keywords: Vision-Language Models, Discrete Diffusion Models, AI Native, Multimodal Instruction Following, Bidirectional Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary aim is to develop LaViDa, a family of vision-language models based on Discrete Diffusion Models (DMs) to enhance speed, controllability, and bidirectional reasoning in multimodal tasks.

🛠️ Research Methods:

– LaViDa utilizes a vision encoder integrated with DMs, complemented by techniques such as complementary masking, prefix KV cache, and timestep shifting for effective training and efficient inference.

💬 Research Conclusions:

– LaViDa demonstrates competitive or superior performance to autoregressive VLMs on multimodal benchmarks, significantly surpassing models like Open-LLaVa-Next-8B on COCO captioning with increased speed and flexibility. It is a strong alternative to autoregressive vision-language models with unique advantages in speed and bidirectional reasoning.

👉 Paper link: https://huggingface.co/papers/2505.16839

23. SpatialScore: Towards Unified Evaluation for Multimodal Spatial Understanding

🔑 Keywords: Multimodal large language models, spatial understanding, SpatialAgent, VGBench, SpatialScore

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To investigate whether existing multimodal large language models possess 3D spatial perception and understanding abilities.

🛠️ Research Methods:

– Introduced VGBench for assessing visual geometry perception.

– Developed SpatialScore, integrating data from 11 datasets for comprehensive spatial understanding benchmarking.

– Created SpatialAgent, a multi-agent system with specialized tools for spatial reasoning.

💬 Research Conclusions:

– The study reveals persistent challenges in spatial reasoning.

– Demonstrates the effectiveness of SpatialAgent in improving spatial understanding tasks.

👉 Paper link: https://huggingface.co/papers/2505.17012

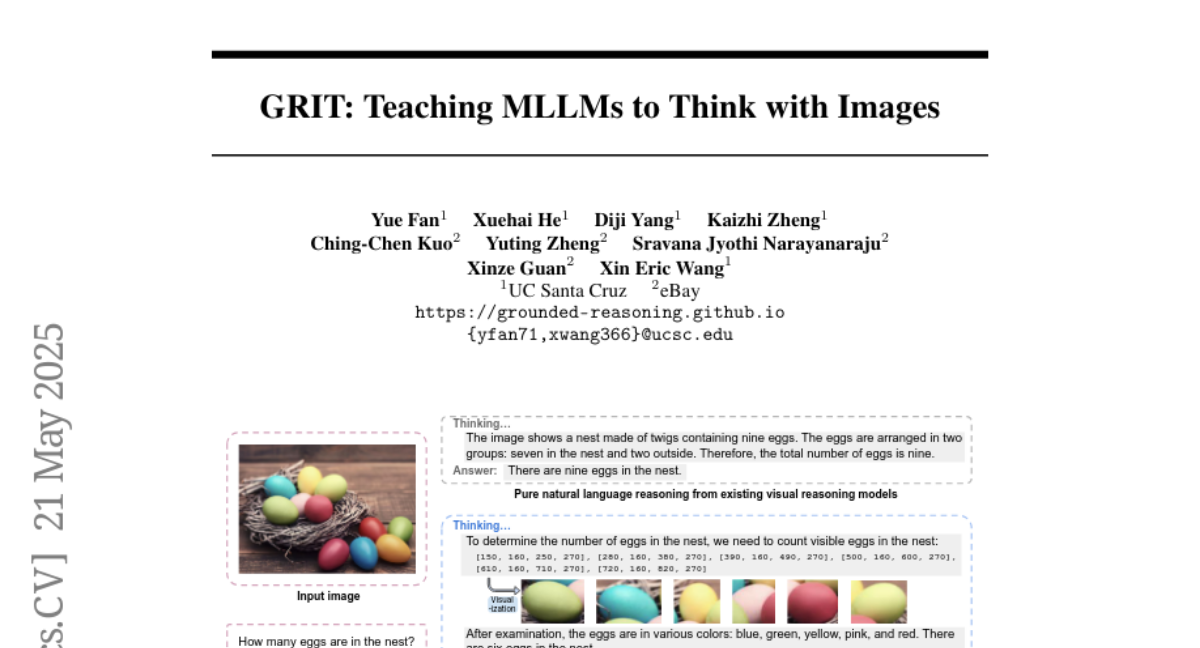

24. GRIT: Teaching MLLMs to Think with Images

🔑 Keywords: GRIT, Visual Reasoning, MLLMs, Reinforcement Learning, reasoning chains

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance visual reasoning in MLLMs by generating reasoning chains that integrate natural language and visual information using bounding box coordinates.

🛠️ Research Methods:

– Development of a novel method called GRIT, which uses a reinforcement learning approach (GRPO-GR) to train models in generating interleaved reasoning chains without the need for reasoning chain annotations or bounding box labels.

💬 Research Conclusions:

– GRIT effectively improves data efficiency, requiring minimal data inputs while achieving coherent and visually grounded reasoning chains, unifying reasoning and grounding abilities in MLLMs.

👉 Paper link: https://huggingface.co/papers/2505.15879

25. Training-Free Reasoning and Reflection in MLLMs

🔑 Keywords: FRANK Model, Multimodal LLMs, Reasoning LLMs, Reinforcement Learning, Hierarchical Weight Merging

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to enhance Multimodal LLMs with reasoning and reflection abilities without the costs of retraining, through the introduction of the FRANK Model.

🛠️ Research Methods:

– A decoupling of perception and reasoning across MLLM decoder layers is proposed, utilizing a hierarchical weight merging approach. This includes layer-wise, Taylor-derived closed-form fusion to integrate reasoning capacity while preserving visual grounding.

💬 Research Conclusions:

– The FRANK Model demonstrated superior performance on multimodal reasoning benchmarks, achieving a higher accuracy than existing models, including surpassing the GPT-4o model on the MMMU benchmark.

👉 Paper link: https://huggingface.co/papers/2505.16151

26. WebAgent-R1: Training Web Agents via End-to-End Multi-Turn Reinforcement Learning

🔑 Keywords: WebAgent-R1, Multi-turn Interactions, Reinforcement Learning, Thinking-based Prompting Strategy, Chain-of-Thought Reasoning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Develop an effective end-to-end multi-turn RL framework to train web agents in dynamic and complex web environments.

🛠️ Research Methods:

– Implemented WebAgent-R1 to asynchronously generate diverse trajectories using binary rewards, evaluated through the WebArena-Lite benchmark.

💬 Research Conclusions:

– WebAgent-R1 significantly increased task success rates and outperformed existing methods; highlighted the importance of thinking-based prompting and incorporating chain-of-thought reasoning.

👉 Paper link: https://huggingface.co/papers/2505.16421

27. Think or Not? Selective Reasoning via Reinforcement Learning for Vision-Language Models

🔑 Keywords: AI-generated summary, Reinforcement Learning, vision-language models, Group Relative Policy Optimization

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance reasoning in vision-language models by reducing unnecessary reasoning steps without compromising performance through a novel two-stage training strategy, called TON.

🛠️ Research Methods:

– TON combines supervised fine-tuning with ‘thought dropout’ and Group Relative Policy Optimization to decide when reasoning is necessary, and maximize task-aware outcomes.

💬 Research Conclusions:

– Experimental results demonstrate that TON can significantly reduce completion length by up to 90% compared to standard GRPO, while maintaining or improving performance across diverse vision-language tasks and models.

👉 Paper link: https://huggingface.co/papers/2505.16854

28. Training Step-Level Reasoning Verifiers with Formal Verification Tools

🔑 Keywords: AI Native, Process Reward Models, Large Language Models, step-level error labels, formal verification

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To address the challenges of automatic dataset creation and the generalization of PRMs to diverse reasoning tasks.

🛠️ Research Methods:

– Introduction of FoVer, a method using formal verification tools like Z3 and Isabelle to automatically annotate step-level error labels for training PRMs.

💬 Research Conclusions:

– PRMs trained with FoVer show improved cross-task generalization and outperform human-annotated methods on various reasoning benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.15960

29. OViP: Online Vision-Language Preference Learning

🔑 Keywords: OViP, hallucination, diffusion model, multi-modal capabilities, contrastive training

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The main objective is to reduce hallucinations in large vision-language models while maintaining their multi-modal capabilities.

🛠️ Research Methods:

– Implement a framework called Online Vision-language Preference Learning (OViP) which uses a diffusion model to dynamically create contrastive training data based on the model’s own hallucinated outputs to provide real-time relevant supervision signals.

💬 Research Conclusions:

– Through experiments on hallucination and general benchmarks, OViP effectively reduces hallucinations and preserves core multi-modal capabilities by aligning textual and visual preferences adaptively.

👉 Paper link: https://huggingface.co/papers/2505.15963

30. VLM-R^3: Region Recognition, Reasoning, and Refinement for Enhanced Multimodal Chain-of-Thought

🔑 Keywords: VLM-R3, multi-modal language models, region recognition, reasoning, reinforcement policy optimization

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introducing VLM-R^3 to enhance multi-modal language models with region recognition and reasoning to improve visual question answering tasks.

🛠️ Research Methods:

– Utilization of Region-Conditioned Reinforcement Policy Optimization (R-GRPO) for dynamic region selection and integration of visual content into reasoning processes.

💬 Research Conclusions:

– VLM-R^3 achieves state-of-the-art performance in visual question answering benchmarks, especially in zero-shot and few-shot settings requiring intricate spatial reasoning.

👉 Paper link: https://huggingface.co/papers/2505.16192

31. AGENTIF: Benchmarking Instruction Following of Large Language Models in Agentic Scenarios

🔑 Keywords: Large Language Models, agentic applications, instruction following, complex constraints, tool specifications

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce AgentIF, a benchmark designed to evaluate the ability of Large Language Models to follow complex instructions within realistic agentic scenarios.

🛠️ Research Methods:

– Development of AgentIF from 50 real-world agentic applications with human-annotated instructions, evaluating LLMs through code-based, LLM-based, and hybrid evaluations.

💬 Research Conclusions:

– Current advanced LLMs generally display poor performance in managing complex constraints and tool specifications. The study also provides insights into failure modes through error analysis and the impact of instruction length and meta constraints.

👉 Paper link: https://huggingface.co/papers/2505.16944

32. Reinforcement Learning Finetunes Small Subnetworks in Large Language Models

🔑 Keywords: Reinforcement Learning, Large Language Models, Parameter Update Sparsity, KL Regularization, Policy Distribution

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To explore how reinforcement learning improves performance and alignment of large language models through minimal parameter updates affecting only a small subnetwork.

🛠️ Research Methods:

– Experiments employing 7 widely used RL algorithms across 10 different large language models, focusing on the effects of parameter update sparsity without explicit sparsity techniques.

💬 Research Conclusions:

– Significant task performance improvement is achieved via updating only 5 to 30 percent of the parameters. This intrinsic sparsity occurs without regularizations, and fine-tuning the subnetwork can recover test accuracy similarly to full fine-tuning processes.

👉 Paper link: https://huggingface.co/papers/2505.11711

33. SafeKey: Amplifying Aha-Moment Insights for Safety Reasoning

🔑 Keywords: Large Reasoning Models, safety aha moment, Dual-Path Safety Head, Query-Mask Modeling, jailbreak prompts

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance the safety of large reasoning models against harmful queries and adversarial attacks by introducing SafeKey.

🛠️ Research Methods:

– Implementing SafeKey which includes a Dual-Path Safety Head and Query-Mask Modeling to activate safety aha moments within key sentences.

💬 Research Conclusions:

– SafeKey significantly improves safety generalization across various benchmarks, reduces the average harmfulness rate by 9.6%, and retains the models’ general capabilities.

👉 Paper link: https://huggingface.co/papers/2505.16186

34. Think-RM: Enabling Long-Horizon Reasoning in Generative Reward Models

🔑 Keywords: Reinforcement Learning, Human Feedback, Generative Models, Long-Horizon Reasoning, Pairwise RLHF Pipeline

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The research aims to enhance generative reward models (GenRMs) by introducing Think-RM, which incorporates long-horizon reasoning and a novel pairwise RLHF pipeline to align large language models with human preferences.

🛠️ Research Methods:

– The framework utilizes an internal thinking process to produce flexible self-guided reasoning traces, supplemented by supervised fine-tuning over long chain-of-thought data and rule-based reinforcement learning.

💬 Research Conclusions:

– Think-RM achieves state-of-the-art results on RM-Bench, showing an 8% improvement over BT RM and vertically scaled GenRM, and demonstrating superior end-policy performance with the novel pairwise RLHF pipeline compared to traditional approaches.

👉 Paper link: https://huggingface.co/papers/2505.16265

35. Robo2VLM: Visual Question Answering from Large-Scale In-the-Wild Robot Manipulation Datasets

🔑 Keywords: Robo2VLM, Vision-Language Models (VLMs), Visual Question Answering (VQA), Robot Trajectory Data, Interaction Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Presenting Robo2VLM, a framework that utilizes robot trajectory data to generate Visual Question Answering datasets for enhancing and evaluating Vision-Language Models (VLMs).

🛠️ Research Methods:

– Robo2VLM derives ground-truth data from multi-modal sensory modalities such as end-effector pose, gripper aperture, and force sensing to segment robot trajectories and generate VQA queries based on these properties.

💬 Research Conclusions:

– The curated Robo2VLM-1 dataset, containing over 684,710 questions, benchmarks and improves VLM capabilities in spatial and interaction reasoning tasks.

👉 Paper link: https://huggingface.co/papers/2505.15517

36. Multi-SpatialMLLM: Multi-Frame Spatial Understanding with Multi-Modal Large Language Models

🔑 Keywords: Multi-modal large language models, multi-frame spatial understanding, depth perception, visual correspondence, dynamic perception

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance Multi-modal large language models (MLLMs) with robust multi-frame spatial understanding for improved performance in multi-frame reasoning tasks.

🛠️ Research Methods:

– Integration of depth perception, visual correspondence, and dynamic perception using the MultiSPA dataset with over 27 million samples and a comprehensive benchmark for diverse spatial tasks.

💬 Research Conclusions:

– The Multi-SpatialMLLM framework achieves significant improvements over existing models, demonstrating scalable and generalizable capabilities, and functions as a multi-frame reward annotator for robotics.

👉 Paper link: https://huggingface.co/papers/2505.17015

37. Let Androids Dream of Electric Sheep: A Human-like Image Implication Understanding and Reasoning Framework

🔑 Keywords: Image Implication Understanding, GPT-4o-mini, Vision-Language Reasoning, Human-AI Interaction, Contextual Gaps

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop a novel framework for enhancing image implication understanding and reasoning across different languages and question types using GPT-4o-mini.

🛠️ Research Methods:

– Implementation of a three-stage framework (Perception, Search, Reasoning) to convert visual information, integrate cross-domain knowledge, and generate context-alignment image implications.

💬 Research Conclusions:

– Achieved state-of-the-art performance on English benchmarks and significant improvement on Chinese benchmarks, offering insights for advancing vision-language reasoning and human-AI interaction.

👉 Paper link: https://huggingface.co/papers/2505.17019

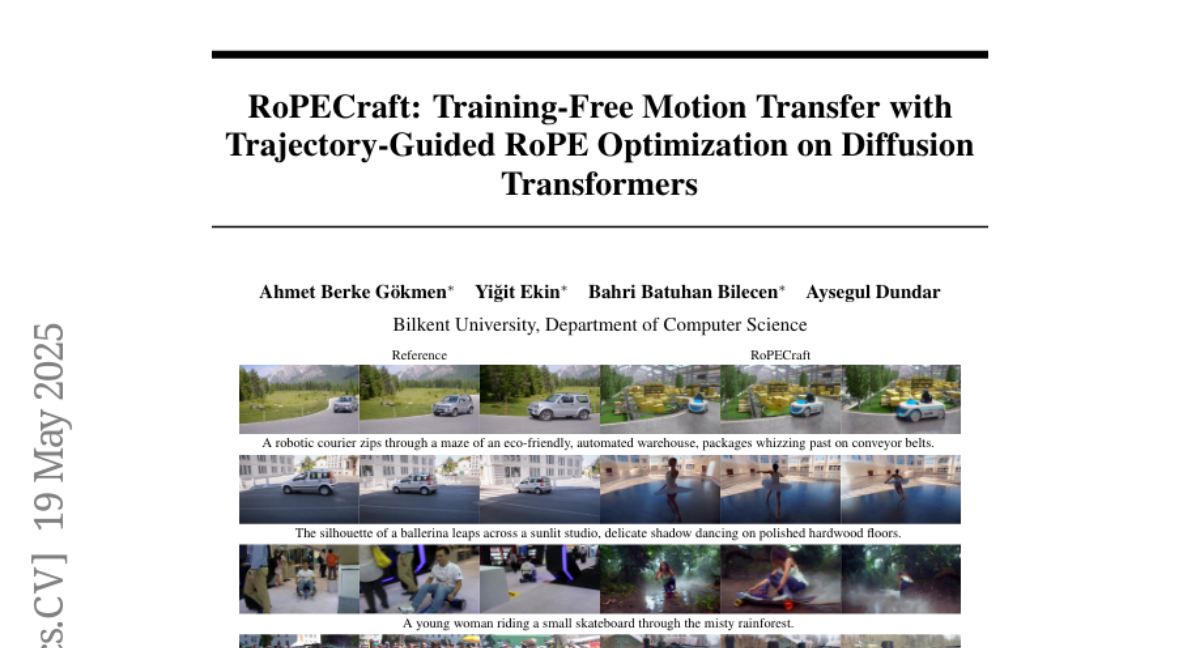

38. RoPECraft: Training-Free Motion Transfer with Trajectory-Guided RoPE Optimization on Diffusion Transformers

🔑 Keywords: RoPECraft, diffusion transformers, rotary positional embeddings, motion transfer

💡 Category: Generative Models

🌟 Research Objective:

– Enhance text-guided video generation by transferring motion from reference videos using a training-free approach.

🛠️ Research Methods:

– Modifying rotary positional embeddings (RoPE) in diffusion transformers to encode motion using dense optical flow.

– Optimization through trajectory alignment and a regularization term based on phase components to reduce artifacts.

💬 Research Conclusions:

– RoPECraft outperforms recent methods in video generation both qualitatively and quantitatively, according to benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.13344

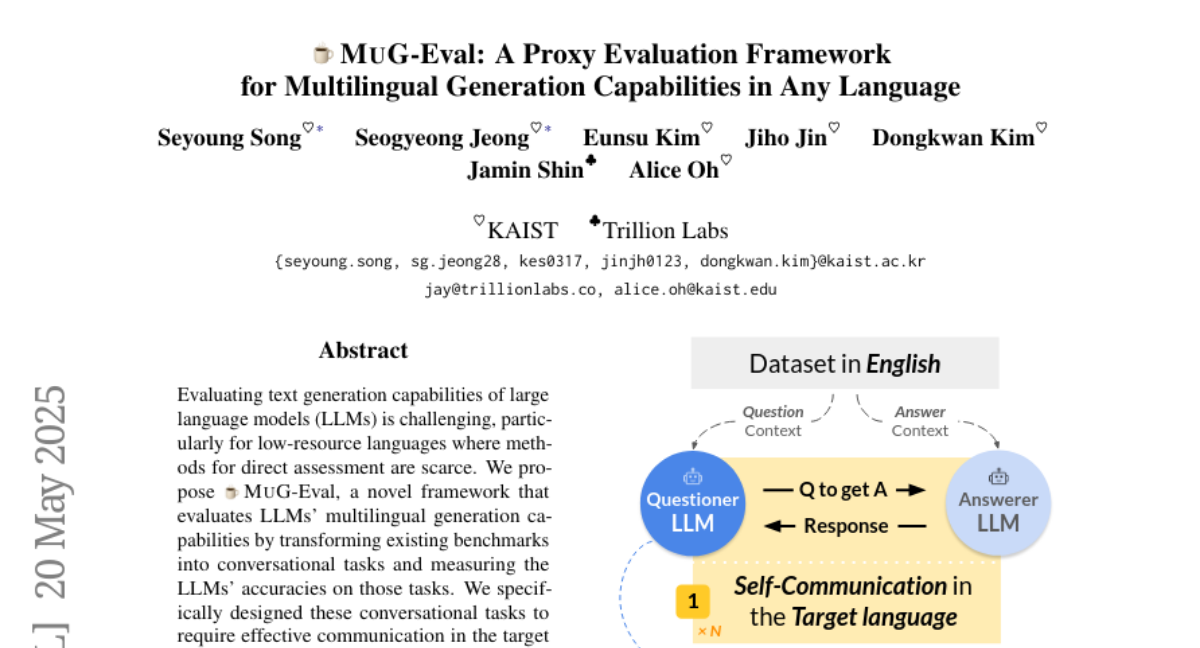

39. MUG-Eval: A Proxy Evaluation Framework for Multilingual Generation Capabilities in Any Language

🔑 Keywords: Multilingual Generation, LLMs, Low-Resource Languages, Conversational Tasks, NLP Tools

💡 Category: Natural Language Processing

🌟 Research Objective:

– To evaluate the multilingual generation capabilities of large language models (LLMs) by transforming benchmarks into conversational tasks.

🛠️ Research Methods:

– Developed MUG-Eval, a framework that converts existing benchmarks into conversational tasks to assess LLM accuracies without relying on language-specific NLP tools or annotated datasets.

💬 Research Conclusions:

– MUG-Eval demonstrates strong correlation with established benchmarks (r > 0.75) and facilitates standardized comparisons across languages, offering a resource-efficient evaluation for multilingual generation.

👉 Paper link: https://huggingface.co/papers/2505.14395

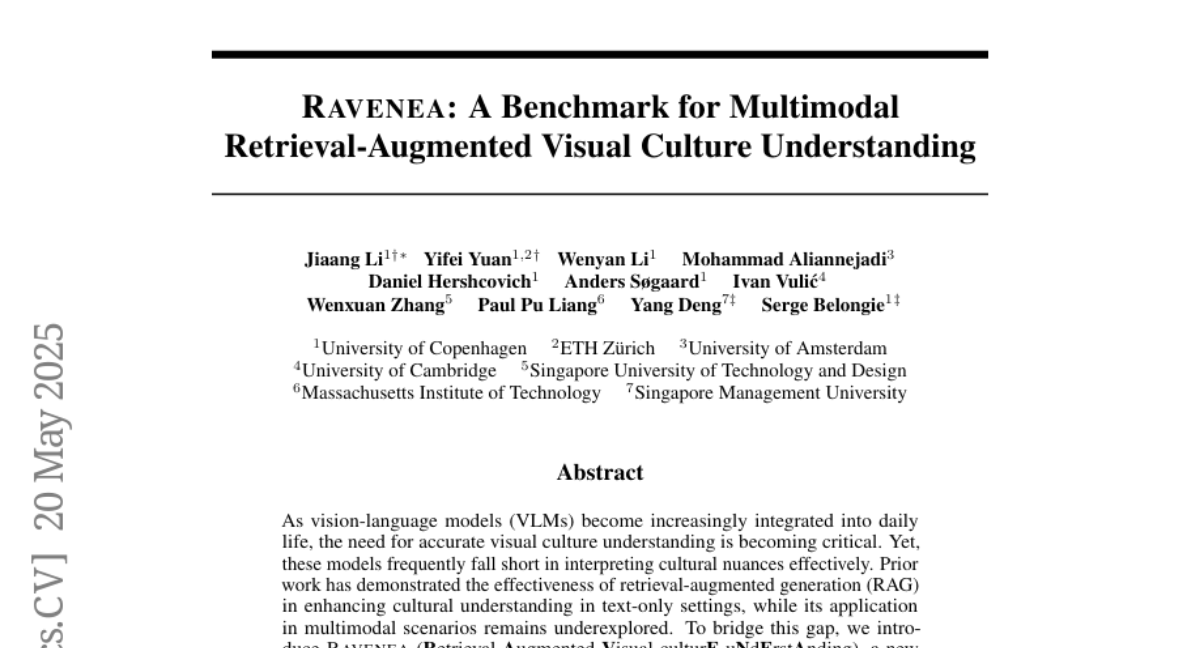

40. RAVENEA: A Benchmark for Multimodal Retrieval-Augmented Visual Culture Understanding

🔑 Keywords: RAVENEA, Retrieval-Augmented Visual Understanding, Vision-Language Models, Cultural Understanding, Multimodal Retirevers

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance visual culture understanding in vision-language models using a retrieval-augmented benchmark named RAVENEA.

🛠️ Research Methods:

– Introduction of RAVENEA, which focuses on culture-focused visual question answering (cVQA) and culture-informed image captioning (cIC), integrating over 10,000 curated Wikipedia documents.

💬 Research Conclusions:

– Culture-aware retrieval-augmented vision-language models outperform non-augmented models, achieving at least a 3.2% improvement on cVQA and a 6.2% improvement on cIC.

👉 Paper link: https://huggingface.co/papers/2505.14462

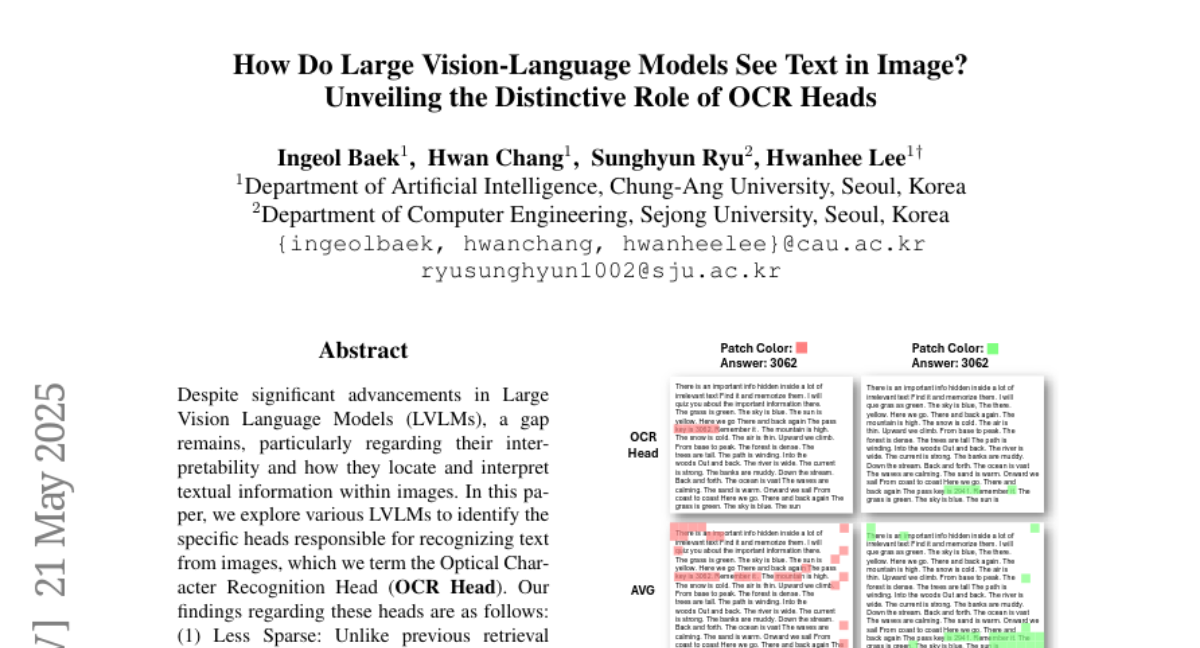

41. How Do Large Vision-Language Models See Text in Image? Unveiling the Distinctive Role of OCR Heads

🔑 Keywords: OCR Head, Large Vision Language Models, Optical Character Recognition, Chain-of-Thought

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to explore and identify specific heads, termed Optical Character Recognition Heads, within Large Vision Language Models that are responsible for recognizing text from images.

🛠️ Research Methods:

– The study involves analyzing and comparing the activation patterns and roles of OCR Heads with general retrieval heads, and it validates findings through applying Chain-of-Thought to both OCR and conventional retrieval heads, alongside experiments on masking and redistributing sink-token values.

💬 Research Conclusions:

– The research concludes that OCR Heads are less sparse, qualitatively distinct, and their activation frequency aligns with OCR scores. Improving performance is possible by redistributing sink-token values, providing insights into the internal mechanisms of how LVLMs process textual information.

👉 Paper link: https://huggingface.co/papers/2505.15865

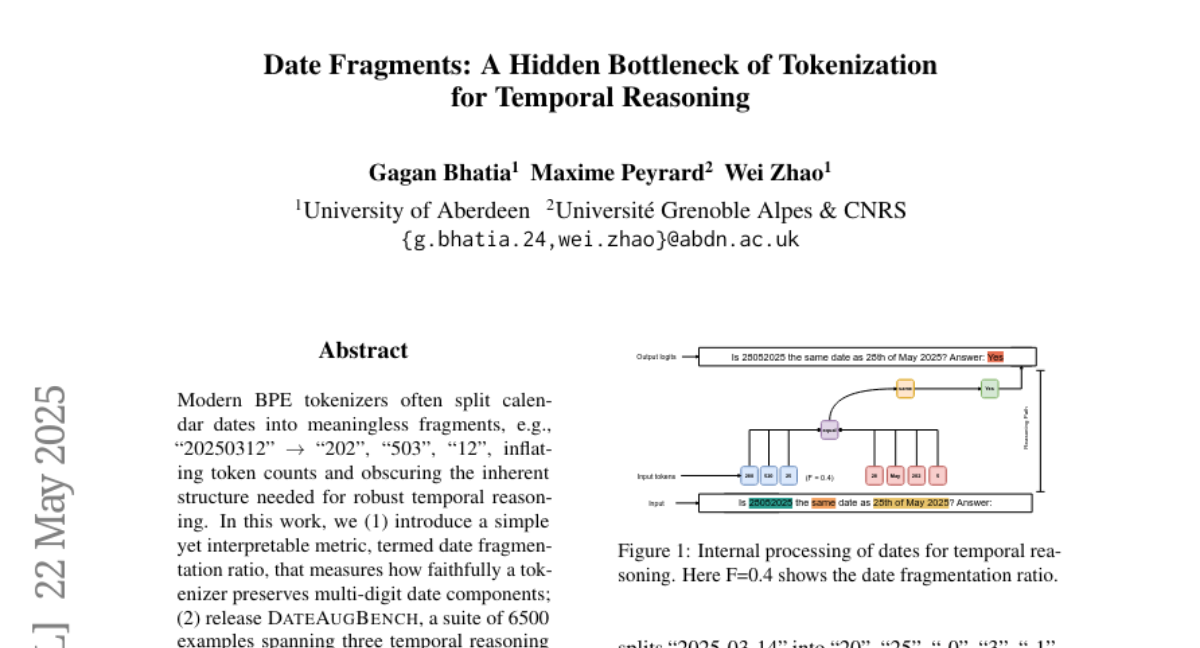

42. Date Fragments: A Hidden Bottleneck of Tokenization for Temporal Reasoning

🔑 Keywords: DateAugBench, Temporal Reasoning, Large Language Models, BPE Tokenizers

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to evaluate how modern BPE tokenizers fragment calendar dates and impact the accuracy of temporal reasoning tasks in large language models.

🛠️ Research Methods:

– Introduced the date fragmentation ratio as a metric to measure tokenizer efficiency in preserving date structure, released DateAugBench with 6500 examples across various temporal reasoning tasks, and employed layer-wise probing and causal attention-hop analyses.

💬 Research Conclusions:

– Excessive date fragmentation can lead to accuracy drops of up to 10 points in temporal reasoning tasks, particularly with rare dates. Larger models can faster reconstruct fragmented dates, following a reasoning path distinct from human interpretation.

👉 Paper link: https://huggingface.co/papers/2505.16088

43. When Do LLMs Admit Their Mistakes? Understanding the Role of Model Belief in Retraction

🔑 Keywords: Retract, Internal Belief, Supervised Fine-Tuning, Self-Verification

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore the capability of large language models (LLMs) in retracting incorrect answers that contradict their internal beliefs.

🛠️ Research Methods:

– Construction of model-specific datasets to evaluate retraction capabilities.

– Steering experiments to assess the impact of internal beliefs on retraction behavior.

💬 Research Conclusions:

– LLMs infrequently retract incorrect answers due to strong internal beliefs, but supervised fine-tuning can enhance retraction by refining these beliefs.

– Improved internal belief accuracy encourages verification and affects attention during self-verification processes.

👉 Paper link: https://huggingface.co/papers/2505.16170

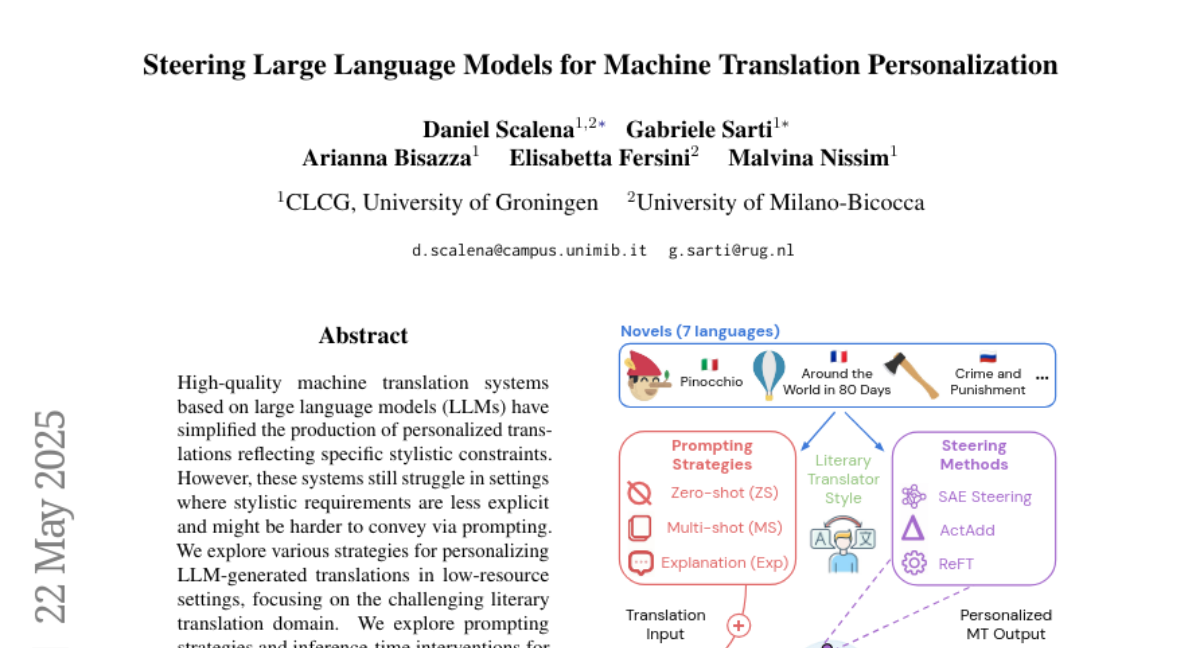

44. Steering Large Language Models for Machine Translation Personalization

🔑 Keywords: LLMs, Personalized Translations, Contrastive Framework, Sparse Autoencoders, Steering

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore strategies that personalize translations generated by LLMs in low-resource settings, particularly in the domain of literary translation.

🛠️ Research Methods:

– Utilizing prompting strategies and inference-time interventions to guide model generations towards personalized styles.

– Introducing a contrastive framework using latent concepts from sparse autoencoders to identify salient personalization properties.

💬 Research Conclusions:

– Steering achieves strong personalization while maintaining translation quality.

– Steering and multi-shot prompting affect LLM layers similarly, implying a common underlying mechanism for personalization.

👉 Paper link: https://huggingface.co/papers/2505.16612

45. gen2seg: Generative Models Enable Generalizable Instance Segmentation

🔑 Keywords: Generative Models, Stable Diffusion, MAE, Instance Segmentation, Zero-Shot Generalization

💡 Category: Generative Models

🌟 Research Objective:

– To explore how generative models can be fine-tuned for category-agnostic instance segmentation and exhibit strong zero-shot generalization capabilities.

🛠️ Research Methods:

– Fine-tuning Stable Diffusion and MAE frameworks using an instance coloring loss on a narrow set of categories to demonstrate zero-shot capability in unseen object types and styles.

💬 Research Conclusions:

– The fine-tuned models demonstrate superior zero-shot performance compared to discriminative models and can accurately segment various unseen objects and styles. This indicates that generative models possess an intrinsic ability to understand object boundaries and compositions, which transfers across different categories and domains.

👉 Paper link: https://huggingface.co/papers/2505.15263

46. SPhyR: Spatial-Physical Reasoning Benchmark on Material Distribution

🔑 Keywords: Large Language Models, Topology Optimization, Physical Reasoning, Spatial Reasoning, Material Distribution

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The objective is to introduce a novel dataset for benchmarking the physical and spatial reasoning capabilities of Large Language Models (LLMs) using topology optimization tasks.

🛠️ Research Methods:

– This dataset involves LLMs being provided with 2D boundary conditions, applied forces, and supports. The models are challenged to reason about optimal material distribution without using simulation tools or explicit physical models.

💬 Research Conclusions:

– Indicates the potential of LLMs to perform structural stability and spatial organization reasoning, providing a complementary perspective to traditional language and logic benchmarks.

👉 Paper link: https://huggingface.co/papers/2505.16048

47. SAKURA: On the Multi-hop Reasoning of Large Audio-Language Models Based on Speech and Audio Information

🔑 Keywords: Large audio-language models, multimodal understanding, multi-hop reasoning, SAKURA, speech/audio representations

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces SAKURA to evaluate the multi-hop reasoning abilities of large audio-language models (LALMs), focusing on their integration of speech and audio representations.

🛠️ Research Methods:

– The researchers developed a benchmark, SAKURA, to assess LALMs’ reasoning, specifically their ability to recall and integrate multiple facts using speech and audio information.

💬 Research Conclusions:

– The findings reveal that LALMs struggle with integrating speech/audio representations for multi-hop reasoning, even when extracting relevant information correctly, highlighting a fundamental challenge in multimodal reasoning.

👉 Paper link: https://huggingface.co/papers/2505.13237

48.