AI Native Daily Paper Digest – 20250610

1. Reinforcement Pre-Training

🔑 Keywords: Reinforcement Pre-Training, language model accuracy, scalable method, next-token prediction, reinforcement learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To introduce Reinforcement Pre-Training (RPT) as a new scaling paradigm for large language models and reinforcement learning.

🛠️ Research Methods:

– Reframed next-token prediction as a reasoning task trained using reinforcement learning to receive verifiable rewards.

💬 Research Conclusions:

– RPT significantly improves language modeling accuracy and provides a strong pre-trained foundation for further reinforcement fine-tuning. The scaling curves indicate that increased computational training enhances next-token prediction accuracy, positioning RPT as an effective and promising scaling paradigm.

👉 Paper link: https://huggingface.co/papers/2506.08007

2. Lingshu: A Generalist Foundation Model for Unified Multimodal Medical Understanding and Reasoning

🔑 Keywords: Multimodal Large Language Model, medical knowledge, data curation, reasoning capabilities, reinforcement learning

💡 Category: AI in Healthcare

🌟 Research Objective:

– To address the limitations of existing medical MLLMs by enhancing data coverage, reducing hallucinations, and improving reasoning capabilities in medical applications.

🛠️ Research Methods:

– Implementation of a comprehensive data curation procedure and multi-stage training to enrich and embed medical expertise.

– Exploration of reinforcement learning with verifiable rewards to boost medical reasoning.

– Development of MedEvalKit for standardized evaluation.

💬 Research Conclusions:

– Lingshu, the medical-specialized multimodal large language model, outperforms existing open-source models in tasks like multimodal QA, text-based QA, and medical report generation.

👉 Paper link: https://huggingface.co/papers/2506.07044

3. Saffron-1: Towards an Inference Scaling Paradigm for LLM Safety Assurance

🔑 Keywords: SAFFRON, LLM safety, inference scaling, multifurcation reward model, safety assurance

💡 Category: Natural Language Processing

🌟 Research Objective:

– To pioneer an inference scaling approach for LLM safety against emerging threats.

🛠️ Research Methods:

– Proposed a novel inference scaling paradigm, SAFFRON, which includes a multifurcation reward model (MRM), a partial supervision training objective, a conservative exploration constraint, and a Trie-based key-value caching strategy.

💬 Research Conclusions:

– SAFFRON significantly enhances LLM safety by reducing the computational overhead associated with frequent reward model evaluations. The approach was validated through extensive experiments, and key resources have been released publicly to promote further research.

👉 Paper link: https://huggingface.co/papers/2506.06444

4. MiniCPM4: Ultra-Efficient LLMs on End Devices

🔑 Keywords: MiniCPM4, sparse attention, InfLLM v2, UltraClean, UltraChat v2

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to develop MiniCPM4, a highly efficient large language model optimized for end-side devices using innovations in model architecture, training data, training algorithms, and inference systems.

🛠️ Research Methods:

– Introduction of a trainable sparse attention mechanism called InfLLM v2 and the proposal of UltraClean and UltraChat v2 for efficient pre-training and supervised fine-tuning datasets. Deployment of ModelTunnel v2 and innovative post-training methods including chunk-wise rollout and data-efficient tenary LLM.

💬 Research Conclusions:

– MiniCPM4 demonstrates superior performance and efficiency compared to similar-sized open-source models, with significant speed improvements in processing long sequences and broad applicability in various applications.

👉 Paper link: https://huggingface.co/papers/2506.07900

5. OneIG-Bench: Omni-dimensional Nuanced Evaluation for Image Generation

🔑 Keywords: Text-to-Image, Benchmark Framework, Reasoning, Text Rendering, Diversity

💡 Category: Generative Models

🌟 Research Objective:

– The paper introduces OneIG-Bench, a comprehensive benchmark framework designed to evaluate Text-to-Image (T2I) models across multiple dimensions such as reasoning, text rendering, and diversity.

🛠️ Research Methods:

– OneIG-Bench structures evaluations for fine-grained analysis, allowing focused evaluation on specific dimensions like prompt-image alignment and stylization, facilitating flexible and in-depth model performance analysis.

💬 Research Conclusions:

– The comprehensive design of OneIG-Bench aids researchers and practitioners in identifying strengths and weaknesses in T2I models. The available codebase and dataset support reproducible evaluations and cross-model comparisons within the T2I research community.

👉 Paper link: https://huggingface.co/papers/2506.07977

6. SpatialLM: Training Large Language Models for Structured Indoor Modeling

🔑 Keywords: SpatialLM, 3D point cloud, multimodal LLM, layout estimation, embodied robotics

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To process 3D point cloud data and generate structured 3D scene understanding outputs using a new multimodal large language model named SpatialLM.

🛠️ Research Methods:

– Utilization of a large-scale, high-quality synthetic dataset with point clouds and ground-truth 3D annotations. Fine-tuning of open-source large language models within a standard multimodal LLM architecture.

💬 Research Conclusions:

– Achieved state-of-the-art performance in layout estimation and competitive results in 3D object detection, demonstrating enhanced spatial understanding capabilities for modern LLMs applicable in augmented reality and embodied robotics.

👉 Paper link: https://huggingface.co/papers/2506.07491

7. Image Reconstruction as a Tool for Feature Analysis

🔑 Keywords: Vision encoders, image reconstruction, feature representations, orthogonal rotations, SigLIP

💡 Category: Computer Vision

🌟 Research Objective:

– To explore how vision encoders represent features internally using a novel image reconstruction approach.

🛠️ Research Methods:

– Comparison of model families SigLIP and SigLIP2 based on their training objectives.

– Analysis of image information retention in encoders trained on image-based versus non-image tasks.

💬 Research Conclusions:

– Vision encoders pre-trained on image tasks retain more image information than those trained on tasks like contrastive learning.

– Orthogonal rotations in feature space, rather than spatial transformations, control color encoding in reconstructed images.

– The approach can be applied to any vision encoder to better understand the structure of its feature space.

👉 Paper link: https://huggingface.co/papers/2506.07803

8. Astra: Toward General-Purpose Mobile Robots via Hierarchical Multimodal Learning

🔑 Keywords: AI Native, Multimodal LLM, Self-supervised learning, Transformer encoder, Robotics and Autonomous Systems

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop Astra, a dual-model architecture designed to enhance mobile robot navigation in diverse indoor environments.

🛠️ Research Methods:

– Utilized Astra-Global, a multimodal LLM, for global localization via a hybrid topological-semantic graph.

– Employed Astra-Local, which uses a multitask network with a 4D spatial-temporal encoder trained through self-supervised learning.

💬 Research Conclusions:

– Astra demonstrates high end-to-end mission success rates in diverse indoor conditions, surpassing traditional visual place recognition methods.

👉 Paper link: https://huggingface.co/papers/2506.06205

9. Pre-trained Large Language Models Learn Hidden Markov Models In-context

🔑 Keywords: In-context learning, Large language models, Hidden Markov Models, Predictive accuracy, Scaling trends

💡 Category: Natural Language Processing

🌟 Research Objective:

– Explore the effectiveness of pre-trained large language models in modeling data generated by Hidden Markov Models through in-context learning.

🛠️ Research Methods:

– Evaluate large language models on synthetic HMMs, using in-context learning to infer patterns and predict sequences.

💬 Research Conclusions:

– Large language models achieve near-optimal predictive accuracy on HMM-generated sequences, revealing new scaling trends.

– The study demonstrates the potential of in-context learning as a diagnostic tool for complex scientific data, with notable performance on real-world animal decision-making tasks.

👉 Paper link: https://huggingface.co/papers/2506.07298

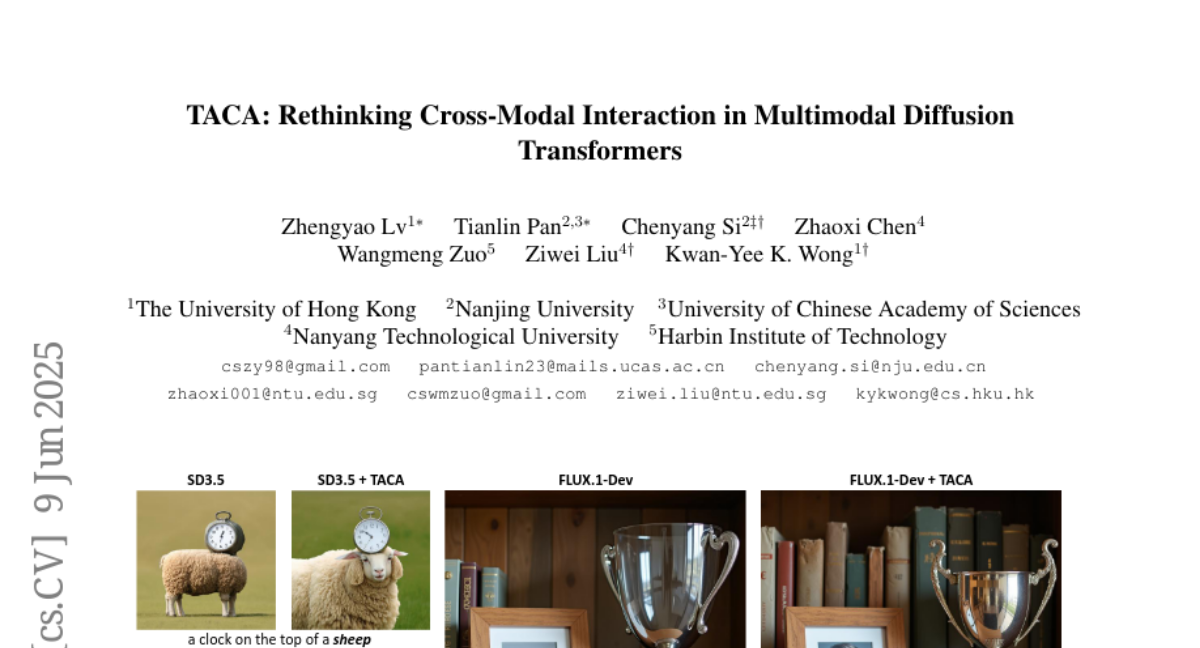

10. Rethinking Cross-Modal Interaction in Multimodal Diffusion Transformers

🔑 Keywords: TACA, Cross-modal Attention, Temperature Scaling, Text-to-Image Diffusion Models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Enhance text-image alignment in diffusion models through a new parameter-efficient method.

🛠️ Research Methods:

– Introduced Temperature-Adjusted Cross-modal Attention (TACA) using temperature scaling and timestep-dependent adjustment.

💬 Research Conclusions:

– TACA significantly improves image-text alignment in terms of object appearance, attribute binding, and spatial relationships, demonstrating its effectiveness on models like FLUX and SD3.5.

👉 Paper link: https://huggingface.co/papers/2506.07986

11. BitVLA: 1-bit Vision-Language-Action Models for Robotics Manipulation

🔑 Keywords: BitVLA, VLA models, distillation-aware training, memory footprint, robotics manipulation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research aims to develop a 1-bit VLA model named BitVLA for robotics manipulation with ternary parameters, reducing memory usage while maintaining performance.

🛠️ Research Methods:

– The study introduces distillation-aware training to compress a full-precision encoder into 1.58-bit weights, aligning latent representations using a full-precision encoder as a teacher model.

💬 Research Conclusions:

– BitVLA matches the performance of the state-of-the-art model OpenVLA-OFT on the LIBERO benchmark but uses 29.8% less memory, making it viable for memory-constrained edge devices.

👉 Paper link: https://huggingface.co/papers/2506.07530

12. GTR-CoT: Graph Traversal as Visual Chain of Thought for Molecular Structure Recognition

🔑 Keywords: GTR-Mol-VLM, Optical Chemical Structure Recognition, Graph Traversal, Data-centric, Cheminformatics

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce GTR-Mol-VLM to improve Optical Chemical Structure Recognition (OCSR) by accurately parsing molecular graphs and handling abbreviated structures.

🛠️ Research Methods:

– Developed a novel framework using Graph Traversal as a Visual Chain of Thought mechanism and a data-centric principle called “Faithfully Recognize What You’ve Seen.”

– Constructed GTR-CoT-1.3M, a large-scale instruction-tuning dataset, and introduced MolRec-Bench for evaluation.

💬 Research Conclusions:

– GTR-Mol-VLM outperforms existing models in both SMILES and graph-based metrics, particularly with molecular images involving functional group abbreviations.

– Demonstrates superior results compared to specialist models and commercial general-purpose VLMs.

– Aims to advance OCSR technology to better meet real-world needs in cheminformatics and AI for science.

👉 Paper link: https://huggingface.co/papers/2506.07553

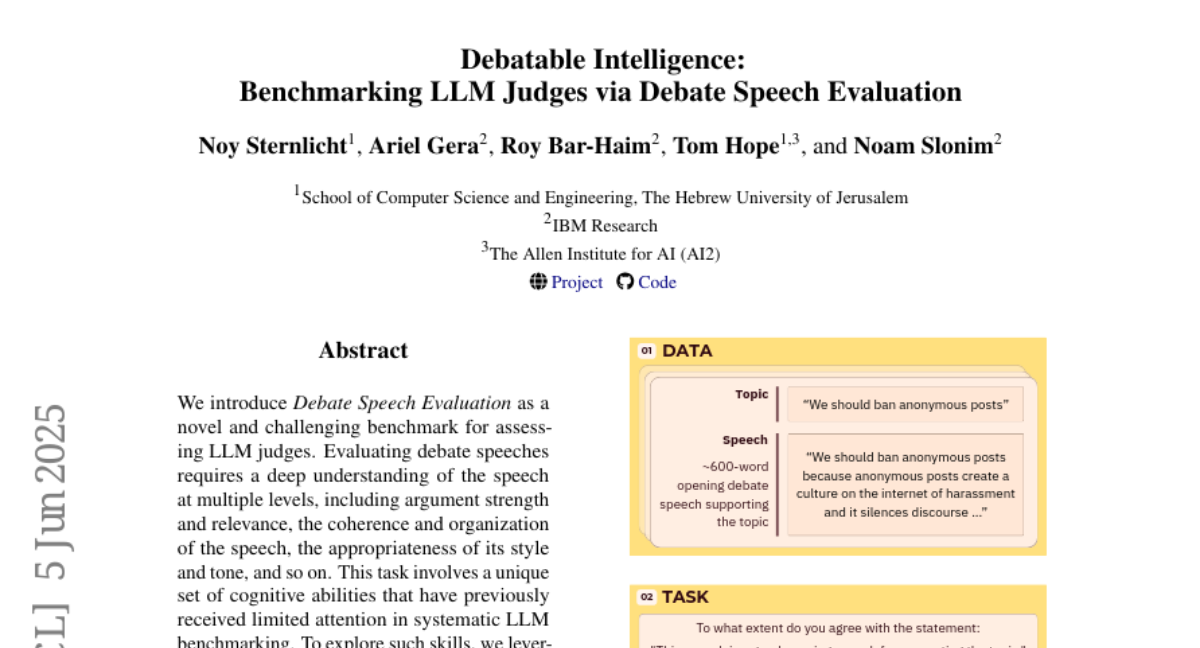

13. Debatable Intelligence: Benchmarking LLM Judges via Debate Speech Evaluation

🔑 Keywords: Debate Speech Evaluation, LLM, argument strength, coherence, human judges

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study introduces a novel benchmark called Debate Speech Evaluation to assess the performance of large language models (LLMs) in evaluating debate speeches compared to human judges.

🛠️ Research Methods:

– Utilized a dataset of over 600 meticulously annotated debate speeches to compare state-of-the-art LLMs with human judges.

💬 Research Conclusions:

– Larger models can approximate individual human judgments in specific aspects but vary significantly in overall judgment behavior.

– Frontier LLMs have the potential to generate persuasive, opinionated speeches at a human level.

👉 Paper link: https://huggingface.co/papers/2506.05062

14. Bootstrapping World Models from Dynamics Models in Multimodal Foundation Models

🔑 Keywords: Foundation models, Dynamics model, Vision-and-language, Fine-tuning, Action-centric image editing

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study investigates how vision-and-language foundation models can possess a realistic world model and dynamics model when actions are expressed through language.

🛠️ Research Methods:

– The research relies on fine-tuning foundation models to develop dynamics models.

– Dynamics models are used to bootstrap world models using weakly supervised learning from synthetic data and inference time verification.

💬 Research Conclusions:

– Dynamics models effectively annotate actions for unlabelled video frames, expanding training data.

– Achieved state-of-the-art performance in action-centric image editing on Aurora-Bench, improving by 15% on real-world subsets.

👉 Paper link: https://huggingface.co/papers/2506.06006

15. The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity

🔑 Keywords: Large Reasoning Models, reasoning traces, exact computation, puzzle environments

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To investigate the capabilities, scaling properties, and limitations of Large Reasoning Models (LRMs) using controllable puzzle environments.

🛠️ Research Methods:

– Systematic analysis and experiments in controllable puzzle environments to evaluate LRMs’ reasoning processes and performance across varying task complexities.

💬 Research Conclusions:

– LRMs demonstrate a complete accuracy collapse at higher complexities and exhibit a counterintuitive scaling limit. They show differing performance across low, medium, and high complexity tasks, and have limitations in exact computation and consistent reasoning.

👉 Paper link: https://huggingface.co/papers/2506.06941

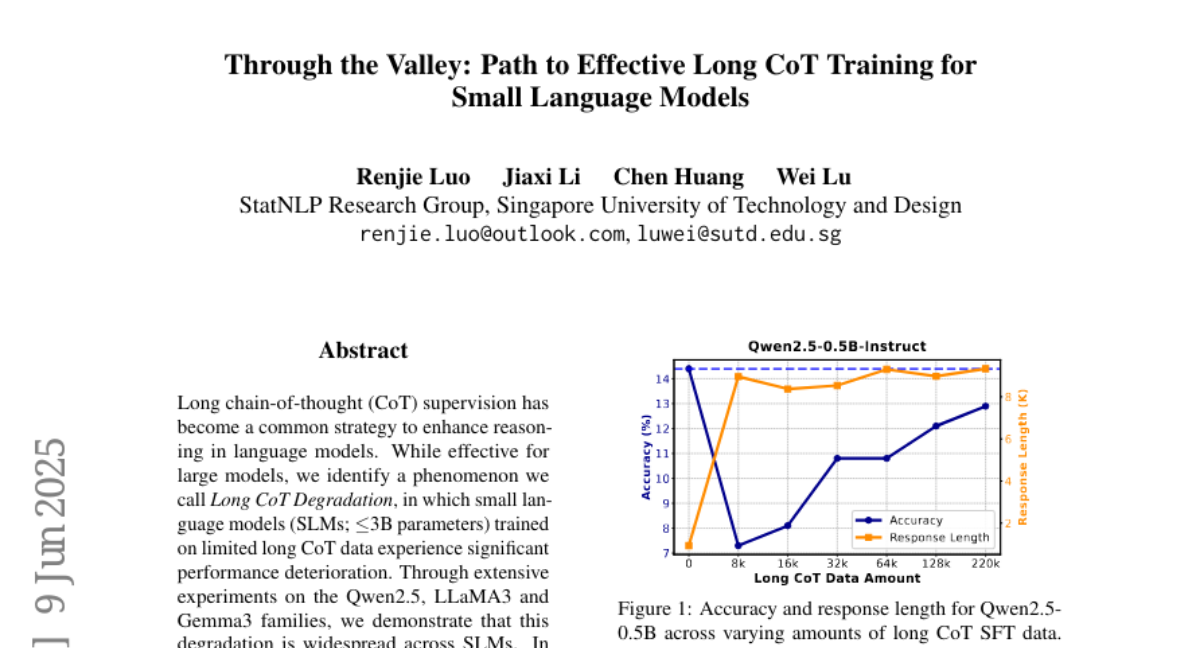

16. Through the Valley: Path to Effective Long CoT Training for Small Language Models

🔑 Keywords: Small language models, Long chain-of-thought, Error accumulation, Reinforcement learning, Supervised fine-tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– Examine the performance impact of Long CoT training on small language models and its broader effects on reasoning and downstream tasks.

🛠️ Research Methods:

– Conduct extensive experiments on language model families like Qwen2.5, LLaMA3, and Gemma3 to evaluate model performance and the phenomenon of Long CoT Degradation.

💬 Research Conclusions:

– Small language models face significant performance declines with Long CoT data due to error accumulation, affecting reinforcement learning, though improvements are possible with effective supervised fine-tuning.

👉 Paper link: https://huggingface.co/papers/2506.07712

17. Vision Transformers Don’t Need Trained Registers

🔑 Keywords: Vision Transformers, high-norm activations, attention maps, interpretability, training-free approach

💡 Category: Computer Vision

🌟 Research Objective:

– Investigate the mechanism behind high-norm token emergence causing noisy attention maps in Vision Transformers.

🛠️ Research Methods:

– Developed a training-free method that shifts high-norm activations to an untrained token to enhance attention maps and model performance.

💬 Research Conclusions:

– The method produces cleaner attention and feature maps, improves performance in visual tasks, and enhances interpretability in vision-language models without retraining.

👉 Paper link: https://huggingface.co/papers/2506.08010

18. Model Immunization from a Condition Number Perspective

🔑 Keywords: Model immunization, Hessian matrix, Condition number, Linear models, Deep-nets

💡 Category: Machine Learning

🌟 Research Objective:

– The objective is to propose an algorithm with regularization terms based on the Hessian matrix’s condition number to achieve model immunization, particularly for linear models and deep-nets.

🛠️ Research Methods:

– A framework utilizing the condition number of a Hessian matrix is developed to analyze and enhance model immunization. An algorithm with specific regularization terms is designed to maintain a controlled condition number during pre-training.

💬 Research Conclusions:

– The proposed algorithm was empirically validated to be effective in achieving model immunization across both linear models and deep-nets.

👉 Paper link: https://huggingface.co/papers/2505.23760

19. ConfQA: Answer Only If You Are Confident

🔑 Keywords: ConfQA, hallucination, Large Language Models, knowledge graphs, confidence calibration

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to reduce factual statement hallucination in Large Language Models (LLMs) by using a fine-tuning strategy called ConfQA.

🛠️ Research Methods:

– The ConfQA strategy utilizes a dampening prompt to guide LLM behavior, combined with factual statements from knowledge graphs to improve confidence calibration.

💬 Research Conclusions:

– ConfQA reduces hallucination rates from 20-40% to under 5% and enhances accuracy beyond 95%, while reducing unnecessary external retrievals by over 30%.

👉 Paper link: https://huggingface.co/papers/2506.07309

20. CCI4.0: A Bilingual Pretraining Dataset for Enhancing Reasoning in Large Language Models

🔑 Keywords: Bilingual Pre-training, Data Quality, Reasoning Trajectory, CoT Extraction, LLMs

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduction of CCI4.0, a bilingual dataset designed to enhance data quality and diversify reasoning patterns for language models.

🛠️ Research Methods:

– Utilization of a novel pipeline involving two-stage deduplication, multiclassifier quality scoring, and domain-aware fluency filtering to ensure high-quality data processing.

💬 Research Conclusions:

– Pre-training with CCI4.0 significantly improves performance in downstream tasks, particularly in math and code reflection, demonstrating the importance of rigorous data curation and human thinking templates.

👉 Paper link: https://huggingface.co/papers/2506.07463

21. Well Begun is Half Done: Low-resource Preference Alignment by Weak-to-Strong Decoding

🔑 Keywords: Large Language Models, alignment, Weak-to-Strong Decoding, GenerAlign, Pilot-3B

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance the alignment capability of large language models (LLMs) with human preferences through a new framework called Weak-to-Strong Decoding.

🛠️ Research Methods:

– Utilization of a small aligned model to draft responses initially, followed by continuation by a large base model controlled through an auto-switch mechanism.

– Introduction of a new dataset, GenerAlign, for fine-tuning a small-sized model, Pilot-3B, to serve as the draft model in this framework.

💬 Research Conclusions:

– The Weak-to-Strong Decoding (WSD) framework effectively improves alignment in LLMs without causing degradation, referred to as alignment tax, in downstream tasks.

– Extensive experiments demonstrate the framework’s capability to outperform baseline methods while analyzing the efficiency and intrinsic mechanisms involved.

👉 Paper link: https://huggingface.co/papers/2506.07434

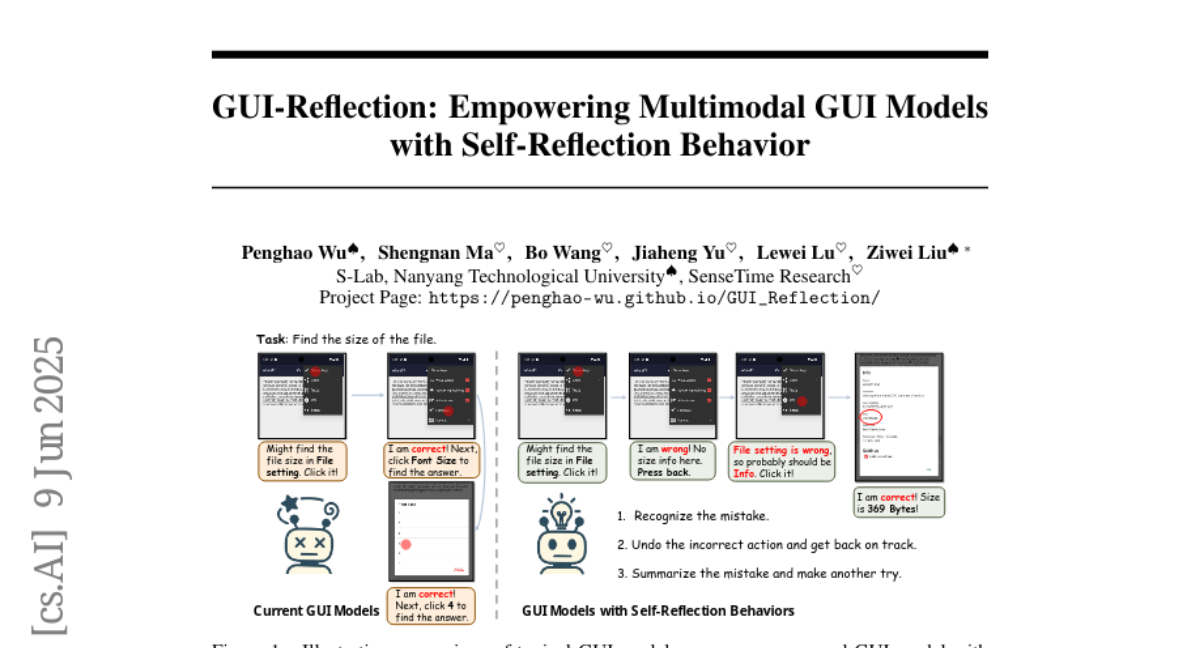

22. GUI-Reflection: Empowering Multimodal GUI Models with Self-Reflection Behavior

🔑 Keywords: GUI-Reflection, self-reflection, error correction, GUI-specific pre-training, online reflection tuning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study introduces GUI-Reflection, a framework designed to enhance GUI automation by integrating self-reflection and error correction capabilities into multimodal GUI models.

🛠️ Research Methods:

– The framework involves several dedicated training stages including GUI-specific pre-training, offline supervised fine-tuning, and innovative online reflection tuning.

– A scalable data pipeline is proposed to automatically generate data for reflection and error correction from successful trajectories.

– A diverse environment for online training and data collection on mobile devices is developed.

💬 Research Conclusions:

– The GUI-Reflection framework allows for the development of more robust and adaptable GUI automation systems by endowing GUI agents with self-reflection and correction abilities. All related data, models, and tools are to be made publicly available.

👉 Paper link: https://huggingface.co/papers/2506.08012

23. Play to Generalize: Learning to Reason Through Game Play

🔑 Keywords: Multimodal Reasoning, Visual Game Learning, Reinforcement Learning, MLLMs, Cognitive Skills

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance out-of-domain generalization of multimodal reasoning in MLLMs through a post-training paradigm involving arcade-like games.

🛠️ Research Methods:

– The method involves post-training a 7B-parameter multimodal large language model using reinforcement learning on simple arcade-like games, such as Snake.

💬 Research Conclusions:

– The study demonstrates that Visual Game Learning enhances multimodal reasoning skills and outperforms specialist models while preserving performance on general benchmarks.

👉 Paper link: https://huggingface.co/papers/2506.08011

24. ExpertLongBench: Benchmarking Language Models on Expert-Level Long-Form Generation Tasks with Structured Checklists

🔑 Keywords: ExpertLongBench, CLEAR, long-form outputs, domain-specific requirements, large language models

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to introduce ExpertLongBench, a benchmark designed to evaluate expert-level workflows with long-form tasks across various domains.

🛠️ Research Methods:

– ExpertLongBench consists of 11 tasks drawn from 9 domains requiring long-form outputs and adherence to detailed rubrics evaluated via the CLEAR framework.

– CLEAR supports the evaluation by deriving checklists from model outputs and references, which are compared for a grounded assessment of correctness.

💬 Research Conclusions:

– Existing large language models show a need for improvement in handling expert-level tasks, with current top performers scoring only 26.8% F1.

– Although models can generate the required aspects of content, the accuracy is often lacking.

– The CLEAR framework demonstrates potential for scalable and cost-effective evaluation using open-weight models.

👉 Paper link: https://huggingface.co/papers/2506.01241

25. SynthesizeMe! Inducing Persona-Guided Prompts for Personalized Reward Models in LLMs

🔑 Keywords: Large Language Models, personalized reward models, user interactions, synthetic user personas, reasoning

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce SynthesizeMe to generate personalized prompts from user interactions to enhance the accuracy of LLMs in assessing chatbot performances without relying heavily on identity information.

🛠️ Research Methods:

– Develop synthetic user personas through user interactions and reasoning.

– Filter informative prior user interactions to create personalized prompts for individual users.

💬 Research Conclusions:

– SynthesizeMe improves personalized LLM-as-a-judge accuracy by 4.4% in Chatbot Arena.

– Combining SynthesizeMe prompts with a reward model achieves top performance on PersonalRewardBench, which includes data from 854 users via Chatbot Arena and PRISM.

👉 Paper link: https://huggingface.co/papers/2506.05598

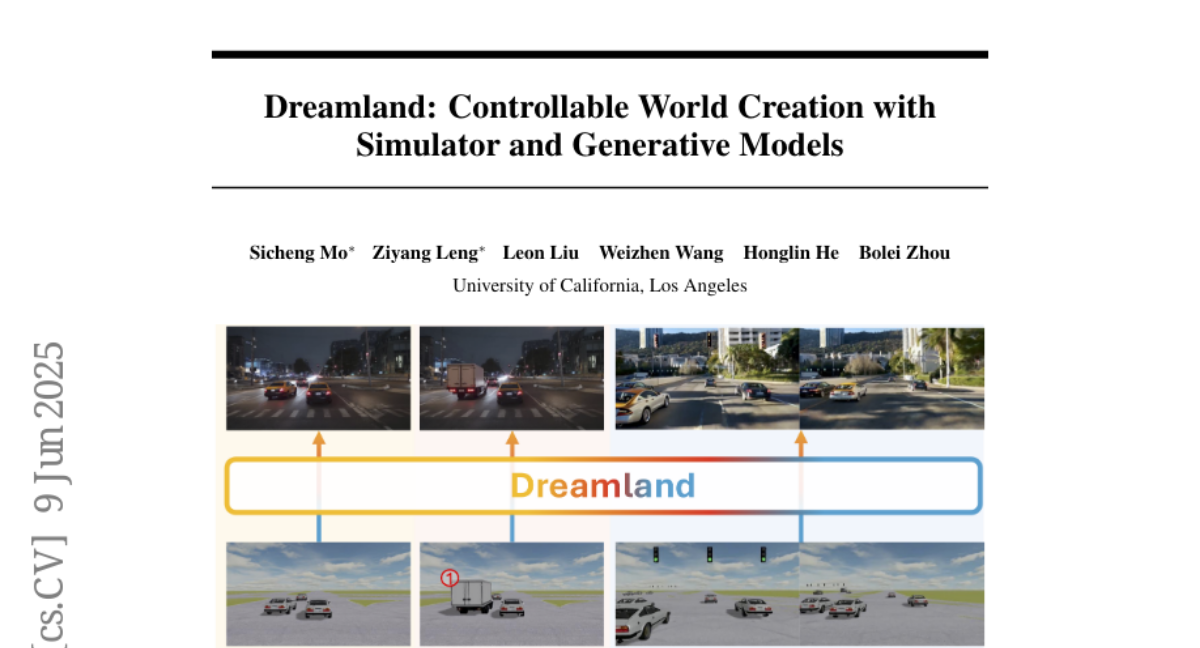

26. Dreamland: Controllable World Creation with Simulator and Generative Models

🔑 Keywords: Video Generative Models, Physics-based Simulator, Photorealistic Content, Layered World Abstraction, Embodied Agent Training

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to enhance controllability and image quality in video generation by integrating physics-based simulators with generative models.

🛠️ Research Methods:

– Dreamland, a hybrid framework, is proposed using a layered world abstraction that bridges a physics-based simulator and large-scale pretrained generative models for improved controllability and realism.

💬 Research Conclusions:

– Dreamland significantly improves image quality by 50.8% and controllability by 17.9% compared to existing baselines, and shows potential for enhancing embodied agent training. Code and data will be released for further exploration.

👉 Paper link: https://huggingface.co/papers/2506.08006

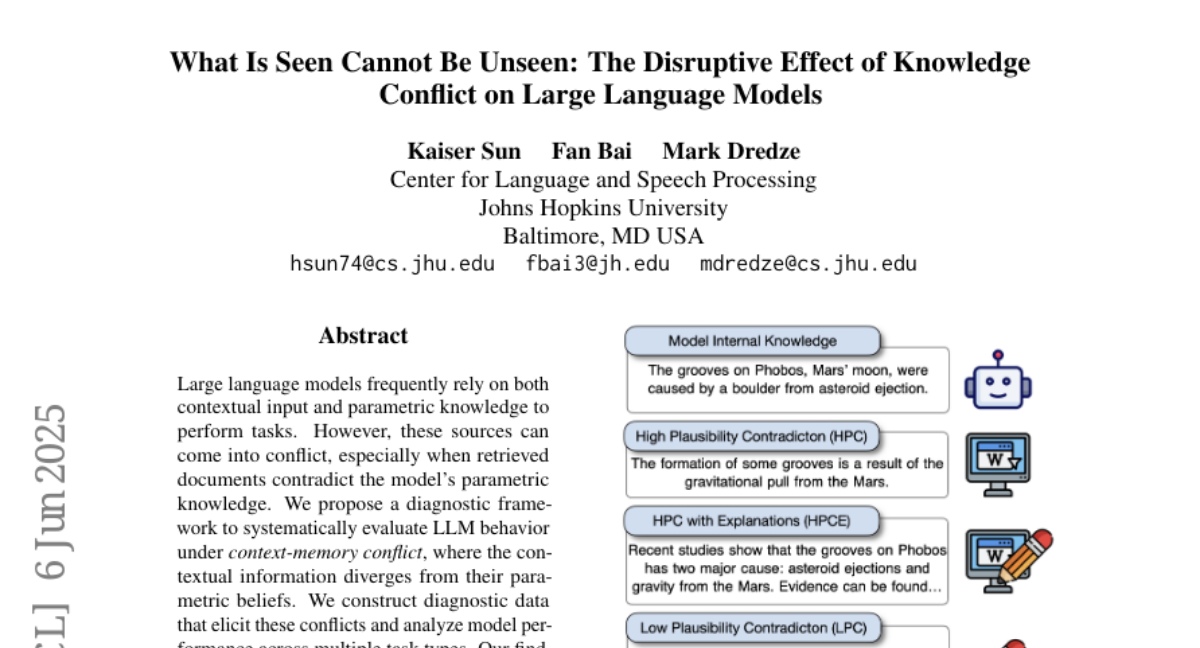

27. What Is Seen Cannot Be Unseen: The Disruptive Effect of Knowledge Conflict on Large Language Models

🔑 Keywords: Large language models, contextual input, parametric knowledge, diagnostic framework, context-memory conflict

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose a diagnostic framework to evaluate LLM behavior under context-memory conflict where contextual information diverges from parametric beliefs.

🛠️ Research Methods:

– Construction of diagnostic data to highlight conflicts and analysis of model performance across multiple task types.

💬 Research Conclusions:

– Knowledge conflict minimally impacts tasks not requiring knowledge utilization.

– Model performance is higher when contextual and parametric knowledge are aligned.

– Models struggle to suppress internal knowledge when instructed.

– Providing rationales for conflicts increases reliance on contexts.

👉 Paper link: https://huggingface.co/papers/2506.06485

28. Agents of Change: Self-Evolving LLM Agents for Strategic Planning

🔑 Keywords: LLM agents, strategic planning, multi-agent architecture, self-evolving, adaptive reasoning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Investigate the self-improvement capacity of LLM agents in strategic environments like Settlers of Catan.

🛠️ Research Methods:

– Utilized the open-source Catanatron framework to benchmark LLM agents, introducing a multi-agent architecture with roles like Analyzer and Coder for collaborative gameplay analysis and strategy development.

💬 Research Conclusions:

– Self-evolving agents, using models like Claude 3.7 and GPT-4o, outperform static baselines by autonomously adopting strategies and demonstrating adaptive reasoning over iterations.

👉 Paper link: https://huggingface.co/papers/2506.04651

29. Cartridges: Lightweight and general-purpose long context representations via self-study

🔑 Keywords: KV cache, Cartridge, Self-study, Context-distillation, In-context learning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To reduce serving costs and match in-context learning (ICL) performance by training a smaller, offline KV cache called Cartridge.

🛠️ Research Methods:

– The Cartridge is trained with a novel technique called self-study, using a context-distillation objective to create synthetic conversations for training.

💬 Research Conclusions:

– Cartridges trained with self-study replicate ICL functionality while being cheaper, using 38.6x less memory, and allowing 26.4x higher throughput. They also extend the model’s effective context length and can be composed at inference time without retraining.

👉 Paper link: https://huggingface.co/papers/2506.06266

30. NetPress: Dynamically Generated LLM Benchmarks for Network Applications

🔑 Keywords: Large Language Models, Network Operations, Benchmark Generation, AI-generated, Infrastructure-centric Domains

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The research introduces NetPress, a dynamic benchmark generation framework designed to evaluate large language model agents specifically in network applications, addressing current limitations of static benchmarking.

🛠️ Research Methods:

– NetPress utilizes a unified abstraction with state and action to enable dynamic generation of diverse query sets and integrates with network emulators to provide realistic environment feedback.

💬 Research Conclusions:

– NetPress successfully bridges the gap between benchmark performance and real-world deployment by offering scalable, realistic testing in infrastructure-centric domains, revealing fine-grained differences in agent behavior often missed by static benchmarks.

👉 Paper link: https://huggingface.co/papers/2506.03231

31. Self-Adapting Improvement Loops for Robotic Learning

🔑 Keywords: Self-Adapting Improvement Loop, video model, internet-scale pretrained video model, self-produced data, novel robotic tasks

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To design agents that can self-improve continuously in an online manner using SAIL, enhancing video model performance on robotic tasks with self-produced data.

🛠️ Research Methods:

– Iterative updates using self-collected behaviors and adaptation with internet-scale pretrained video models applied to MetaWorld tasks and real robot arm manipulations.

💬 Research Conclusions:

– The SAIL loop demonstrates continuous performance improvement on novel tasks not seen during initial training, showing robustness in handling self-collected experience and leveraging internet-scale data.

👉 Paper link: https://huggingface.co/papers/2506.06658

32. Learning What Reinforcement Learning Can’t: Interleaved Online Fine-Tuning for Hardest Questions

🔑 Keywords: Reinforcement Learning, Supervised Fine-Tuning, ReLIFT, Large Language Model, Reasoning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The goal is to enhance large language model reasoning by combining reinforcement learning with supervised fine-tuning, addressing the limitations of reinforcement learning alone.

🛠️ Research Methods:

– A novel method called ReLIFT is implemented, which interleaves reinforcement learning with supervised fine-tuning, particularly for challenging questions where high-quality solutions are collected for fine-tuning.

💬 Research Conclusions:

– ReLIFT achieves over +5.2 points improvement on competition and out-of-distribution benchmarks compared to zero-RL models, demonstrating scalability and overcoming RL limitations using only 13% of the demonstration data.

👉 Paper link: https://huggingface.co/papers/2506.07527

33. SAFEFLOW: A Principled Protocol for Trustworthy and Transactional Autonomous Agent Systems

🔑 Keywords: SAFEFLOW, autonomous agents, information flow control, reliability, multi-agent coordination

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce SAFEFLOW to establish a secure framework for building trustworthy LLM/VLM-based autonomous agents by enforcing fine-grained information flow control and ensuring reliability and coordination.

🛠️ Research Methods:

– Implement SAFEFLOW with transactional execution, conflict resolution, and secure scheduling, reinforced by mechanisms like write-ahead logging, rollback, and secure caches.

– Develop SAFEFLOWBENCH as a benchmark suite to evaluate agent reliability against adversarial, noisy, and concurrent conditions.

💬 Research Conclusions:

– SAFEFLOW enhances task performance and security in hostile environments, surpassing state-of-the-art solutions and establishing a robust, secure ecosystem for autonomous agents.

👉 Paper link: https://huggingface.co/papers/2506.07564

34. τ^2-Bench: Evaluating Conversational Agents in a Dual-Control Environment

🔑 Keywords: Conversational AI, dual-control, coordination, communication, Telecom

💡 Category: Human-AI Interaction

🌟 Research Objective:

– To introduce a dual-control benchmark named tau²-bench for conversational AI agents in telecommunications, addressing the need for both AI and user to actively participate in real-world scenarios.

🛠️ Research Methods:

– Employed a novel Telecom dual-control domain as a Dec-POMDP to simulate shared environments and tasks, utilized a compositional task generator for diverse task creation, and implemented a reliable user simulator to enhance simulation fidelity.

💬 Research Conclusions:

– Highlighted challenges faced by AI agents in scenarios requiring coordination with active user involvement, evidenced by performance drops in dual-control settings, thereby providing a valuable testbed for advancing both reasoning and user guidance capabilities.

👉 Paper link: https://huggingface.co/papers/2506.07982

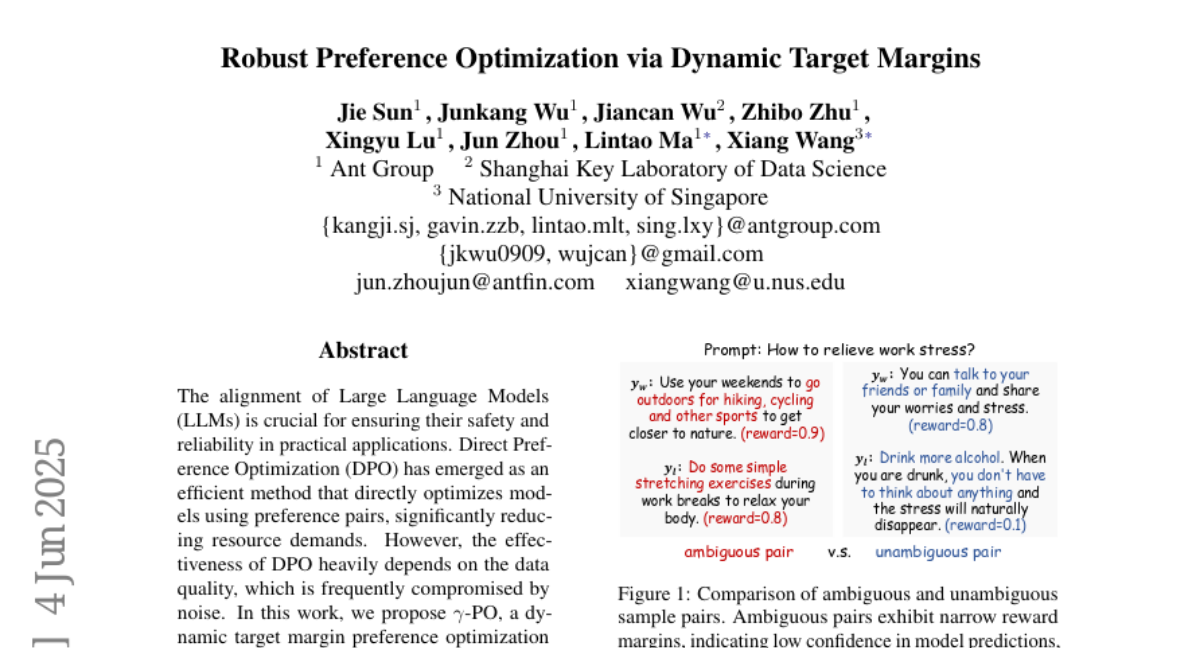

35. Robust Preference Optimization via Dynamic Target Margins

🔑 Keywords: γ-PO, Dynamic Target Margin, Large Language Models, Preference Optimization, Reward Margins

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance the alignment of Large Language Models by adjusting reward margins dynamically at the pairwise level using the γ-PO algorithm.

🛠️ Research Methods:

– Introduced a novel dynamic target margin preference optimization algorithm named gamma-PO which calibrates margins instance-specifically, prioritizing high-confidence pairs while mitigating noise from ambiguous pairs.

💬 Research Conclusions:

– Gamma-PO demonstrated a 4.4% improvement in alignment performance across benchmarks like AlpacaEval2 and Arena-Hard, requiring minimal code changes and having negligible impact on training efficiency.

👉 Paper link: https://huggingface.co/papers/2506.03690

36. MegaHan97K: A Large-Scale Dataset for Mega-Category Chinese Character Recognition with over 97K Categories

🔑 Keywords: Mega-category recognition, OCR, GB18030-2022, Long-tail distribution, Zero-shot learning

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce MegaHan97K, a large-scale dataset for recognizing over 97,000 Chinese characters to address mega-category recognition challenges in OCR.

🛠️ Research Methods:

– Development of MegaHan97K, which includes three subsets: handwritten, historical, and synthetic. This dataset supports the latest GB18030-2022 standard and provides comprehensive coverage to tackle the long-tail distribution problem.

💬 Research Conclusions:

– MegaHan97K surpasses existing datasets in category coverage, addresses long-tail distribution, and reveals new challenges such as storage demands, similar character recognition, and zero-shot learning, contributing to cultural heritage preservation and digital application development.

👉 Paper link: https://huggingface.co/papers/2506.04807

37. GeometryZero: Improving Geometry Solving for LLM with Group Contrastive Policy Optimization

🔑 Keywords: Reinforcement Learning, Group Contrastive Policy Optimization, Geometric Reasoning, AI Native, Auxiliary Construction

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance geometric reasoning in large language models through the introduction of a new reinforcement learning framework, Group Contrastive Policy Optimization (GCPO).

🛠️ Research Methods:

– Developed a framework featuring Group Contrastive Masking for adaptive reward signaling and a length reward to promote longer reasoning chains.

– Introduced GeometryZero models to implement these methods efficiently on geometric benchmarks.

💬 Research Conclusions:

– GeometryZero models, built on the GCPO framework, consistently surpass existing models like GRPO on geometric benchmarks, with an average improvement of 4.29%.

👉 Paper link: https://huggingface.co/papers/2506.07160

38. Overclocking LLM Reasoning: Monitoring and Controlling Thinking Path Lengths in LLMs

🔑 Keywords: LLMs, reasoning process, progress encoding, inference, overthinking

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore and improve how LLMs regulate the length of their reasoning stage to enhance accuracy and efficiency.

🛠️ Research Methods:

– Introduced an interactive progress bar visualization to reveal planning dynamics and manipulated internal progress encoding during inference to streamline reasoning.

💬 Research Conclusions:

– The technique of “overclocking” reduces overthinking, improves answer accuracy, and decreases inference latency, demonstrating the potential for optimizing LLMs’ reasoning processes.

👉 Paper link: https://huggingface.co/papers/2506.07240

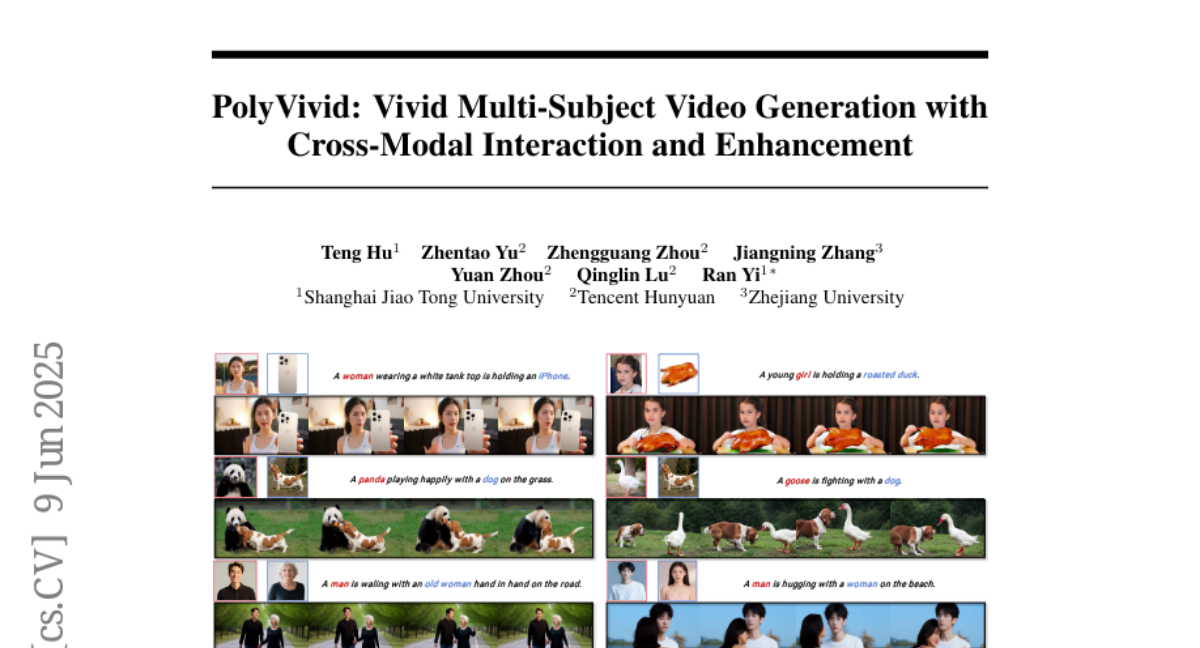

39. PolyVivid: Vivid Multi-Subject Video Generation with Cross-Modal Interaction and Enhancement

🔑 Keywords: text-image fusion, 3D-RoPE enhancement, identity injection, video realism, subject alignment

💡 Category: Generative Models

🌟 Research Objective:

– The primary aim is to provide fine-grained controllability in multi-subject video customization, ensuring consistent identity and interaction through a novel framework called PolyVivid.

🛠️ Research Methods:

– The framework employs a VLLM-based text-image fusion module, a 3D-RoPE-based enhancement module, and an attention-inherited identity injection technique to achieve precise subject grounding and identity consistency.

– An MLLM-based data pipeline is constructed to enhance subject distinction and reduce ambiguity in multi-subject video generation tasks.

💬 Research Conclusions:

– PolyVivid outperforms existing open-source and commercial models, excelling in identity fidelity, video realism, and subject alignment.

👉 Paper link: https://huggingface.co/papers/2506.07848

40. EVOREFUSE: Evolutionary Prompt Optimization for Evaluation and Mitigation of LLM Over-Refusal to Pseudo-Malicious Instructions

🔑 Keywords: EVOREFUSE, Pseudo-Malicious Instructions, Evolutionary Algorithm, LLM Refusal Probability, Over-refusals

💡 Category: Natural Language Processing

🌟 Research Objective:

– The main goal is to optimize LLM refusal training by generating diverse pseudo-malicious instructions without compromising user safety.

🛠️ Research Methods:

– Introduction of EVOREFUSE using an evolutionary algorithm to explore instruction space with mutation strategies and recombination for prompt optimization.

💬 Research Conclusions:

– EVOREFUSE leads to improved benchmarks with a 140.41% higher refusal rate and enhanced lexical diversity and response confidence in LLMs compared to existing methods. Models fine-tuned on created datasets reduce over-refusals significantly.

👉 Paper link: https://huggingface.co/papers/2505.23473

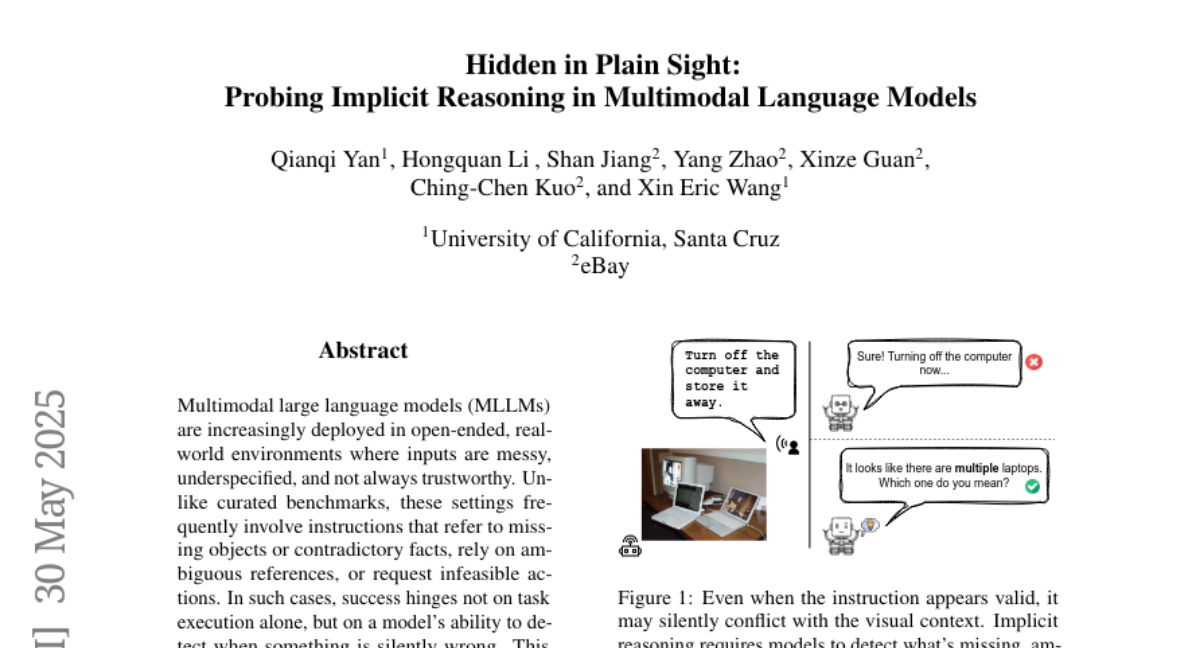

41. Hidden in Plain Sight: Probing Implicit Reasoning in Multimodal Language Models

🔑 Keywords: Multimodal Large Language Models, Implicit Reasoning, Cautious Prompting, Clarifying Questions

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To analyze how current Multimodal Large Language Models (MLLMs) handle implicit reasoning scenarios in real-world, messy inputs.

🛠️ Research Methods:

– Systematic analysis using a curated diagnostic suite spanning four categories of real-world failure modes, evaluating six MLLMs including o3 and GPT-4o.

💬 Research Conclusions:

– Found a gap between reasoning competence and user compliance in MLLMs, but performance can improve with cautious prompting and requiring clarifying questions.

👉 Paper link: https://huggingface.co/papers/2506.00258

42. Training-Free Tokenizer Transplantation via Orthogonal Matching Pursuit

🔑 Keywords: Orthogonal Matching Pursuit, tokenizers, pretrained large language models, zero-shot preservation

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop a training-free method for transplanting tokenizers in pretrained large language models using Orthogonal Matching Pursuit.

🛠️ Research Methods:

– Utilize Orthogonal Matching Pursuit to reconstruct unseen token embeddings as a sparse linear combination of shared anchor tokens, effectively preserving the model’s performance across different tokenizers without gradient updates.

💬 Research Conclusions:

– OMP achieves superior zero-shot preservation of the base model’s performance across multiple benchmarks compared to other approaches, addressing large tokenizer discrepancies and enabling effective cross-tokenizer knowledge distillation and domain-specific vocabulary adaptation.

👉 Paper link: https://huggingface.co/papers/2506.06607

43. Evaluating LLMs Robustness in Less Resourced Languages with Proxy Models

🔑 Keywords: Large language models, Low-resource languages, Perturbations, Proxy model, Multilingual

💡 Category: Natural Language Processing

🌟 Research Objective:

– To reveal vulnerabilities in large language models (LLMs) across various languages, especially in low-resource languages like Polish, through character and word-level attacks.

🛠️ Research Methods:

– Utilizing a proxy model for word importance calculation to create cost-effective attacks by altering minimal characters, validating these methods on Polish and showing applicability to other languages.

💬 Research Conclusions:

– The study demonstrates that these tailored attacks can significantly alter LLM predictions, highlighting potential vulnerabilities in their safety mechanisms, and emphasizes the need for comprehensive multilingual safety evaluations.

👉 Paper link: https://huggingface.co/papers/2506.07645

44. Improving large language models with concept-aware fine-tuning

🔑 Keywords: Large Language Models, Concept-Aware Fine-Tuning, Multi-token Learning, Post-training Phase, Broader Implications

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to enhance the coherent understanding and performance of large language models (LLMs) through Concept-Aware Fine-Tuning (CAFT), which allows multi-token learning in the fine-tuning phase.

🛠️ Research Methods:

– The researchers introduced a novel multi-token training approach, redefined as CAFT, enabling the sequence learning that spans multiple tokens to improve concept-aware learning.

💬 Research Conclusions:

– CAFT significantly improves LLM performance across various tasks, including text summarization and protein design. It democratizes multi-token prediction benefits by applying them in the post-training phase and shows broader implications for the machine learning research community.

👉 Paper link: https://huggingface.co/papers/2506.07833

45. CyberV: Cybernetics for Test-time Scaling in Video Understanding

🔑 Keywords: Multimodal Large Language Models, adaptive systems, self-monitoring, self-correction, test-time adaptive scaling

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to enhance Multimodal Large Language Models (MLLMs) for video understanding by developing an adaptive framework that addresses current limitations such as computational demands, lack of robustness, and limited accuracy.

🛠️ Research Methods:

– A novel cybernetic-inspired framework, CyberV, is proposed, incorporating a Sensor and Controller system for self-monitoring and self-correction during inference, enhancing existing MLLMs without retraining.

💬 Research Conclusions:

– Experiments show that CyberV significantly improves the performance of models on various benchmarks, surpassing competitive models like GPT-4o and achieving human-expert comparable results, demonstrating its effectiveness and generalization capabilities.

👉 Paper link: https://huggingface.co/papers/2506.07971

46. Dynamic View Synthesis as an Inverse Problem

🔑 Keywords: Dynamic View Synthesis, Monocular Videos, Pre-trained Video Diffusion Model, K-order Recursive Noise Representation, Stochastic Latent Modulation

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to achieve high-fidelity dynamic view synthesis from monocular videos using a training-free approach.

🛠️ Research Methods:

– Redesigning noise initialization in a pre-trained diffusion model with K-order Recursive Noise Representation.

– Introduction of Stochastic Latent Modulation to efficiently align and fill occluded regions resulting from camera motion.

💬 Research Conclusions:

– The paper concludes that structured latent manipulation in the noise initialization phase can effectively enable dynamic view synthesis without additional training or auxiliary modules.

👉 Paper link: https://huggingface.co/papers/2506.08004

47. Proactive Assistant Dialogue Generation from Streaming Egocentric Videos

🔑 Keywords: Real-time systems, Conversational AI, Streaming video inputs, Data curation pipeline, End-to-end model

💡 Category: Human-AI Interaction

🌟 Research Objective:

– To develop a comprehensive framework for real-time, proactive conversational AI task guidance using streaming video inputs.

🛠️ Research Methods:

– Introduction of a novel data curation pipeline to synthesize dialogues from annotated egocentric videos, creating a large-scale synthetic dialogue dataset.

– Development of automatic evaluation metrics validated through human studies.

– Proposal of an end-to-end model that processes streaming video inputs to produce contextually appropriate responses.

💬 Research Conclusions:

– The framework addresses challenges in real-time perceptual task guidance by automating data synthesis and evaluation, thus laying the groundwork for the development of proactive AI assistants capable of assisting users with diverse tasks.

👉 Paper link: https://huggingface.co/papers/2506.05904

48. Meta-Adaptive Prompt Distillation for Few-Shot Visual Question Answering

🔑 Keywords: Meta-Learning, Soft Prompts, Attention-Mapper Module, Few-Shot Capabilities, Visual Question Answering

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance few-shot capabilities in Large Multimodal Models (LMMs) by addressing inconsistent performance in in-context learning (ICL).

🛠️ Research Methods:

– A meta-learning approach is proposed, using fixed soft prompts distilled from task-relevant image features, integrated with an attention-mapper module adaptable at test time.

💬 Research Conclusions:

– This method consistently outperforms in-context learning and related prompt-tuning approaches, especially under image perturbations, improving task induction and reasoning in visual question answering tasks.

👉 Paper link: https://huggingface.co/papers/2506.06905

49.