AI Native Daily Paper Digest – 20250611

1. Geopolitical biases in LLMs: what are the “good” and the “bad” countries according to contemporary language models

🔑 Keywords: LLMs, geopolitical biases, historical events, national narratives, debiasing methods

💡 Category: Natural Language Processing

🌟 Research Objective:

– To evaluate and uncover geopolitical biases in LLMs by analyzing their interpretations of historical events with conflicting national perspectives.

🛠️ Research Methods:

– The introduction of a novel dataset containing neutral event descriptions and contrasting viewpoints from various countries, alongside experiments manipulating participant labels.

💬 Research Conclusions:

– Significant geopolitical biases were observed in LLMs, favoring certain national narratives.

– Simple debiasing prompts were largely ineffective at reducing these biases.

– Models showed sensitivity to attribution changes, sometimes exacerbating biases or detecting inconsistencies.

👉 Paper link: https://huggingface.co/papers/2506.06751

2. Autoregressive Semantic Visual Reconstruction Helps VLMs Understand Better

🔑 Keywords: Autoregressive Semantic Visual Reconstruction, Multimodal Understanding, Vision-Language Models, Semantic Representation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To improve multimodal understanding by focusing on the semantic reconstruction of images rather than raw visual appearance.

🛠️ Research Methods:

– Introduction of Autoregressive Semantic Visual Reconstruction (ASVR) which enables joint learning of visual and textual modalities using a unified autoregressive framework.

💬 Research Conclusions:

– Demonstrated that semantic reconstruction consistently enhances comprehension, showcasing significant performance gains across numerous multimodal benchmarks.

– ASVR notably improves models like LLaVA-1.5 by 5% in average scores across 14 benchmarks, indicating substantial benefits.

👉 Paper link: https://huggingface.co/papers/2506.09040

3. RuleReasoner: Reinforced Rule-based Reasoning via Domain-aware Dynamic Sampling

🔑 Keywords: Rule-based reasoning, Reinforced Rule-based Reasoning, domain-aware dynamic sampling, reinforcement learning, small reasoning models

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance rule-based reasoning in small models through the introduction of RuleReasoner, which leverages dynamic domain sampling for improved performance and efficiency.

🛠️ Research Methods:

– Implementation of a novel domain-aware dynamic sampling approach that resamples training batches based on historical rewards to facilitate domain augmentation and flexible online learning in reinforcement learning.

💬 Research Conclusions:

– RuleReasoner significantly outperforms large reasoning models (LRMs) in both in-distribution and out-of-distribution benchmarks, exhibiting higher computational efficiency and eliminating the need for pre-existing, human-engineered training combinations.

👉 Paper link: https://huggingface.co/papers/2506.08672

4. Solving Inequality Proofs with Large Language Models

🔑 Keywords: Inequality proving, Large Language Models, Bound estimation, Relation prediction, Theorem-guided reasoning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to uncover the challenges in using large language models (LLMs) for proving inequalities and to propose new methodologies for enhancing the performance in this domain.

🛠️ Research Methods:

– The research introduces an informal yet verifiable task formulation by recasting inequality proving into two automatically checkable subtasks: bound estimation and relation prediction.

– It releases IneqMath, an expert-curated dataset of Olympiad-level inequalities, along with a novel LLM-as-judge evaluation framework to assess the reasoning capabilities of models.

💬 Research Conclusions:

– Evaluation shows that even leading LLMs struggle with constructing rigorous proofs, achieving less than 10% accuracy when scrutinized step-by-step, exposing a significant gap between finding an answer and constructing a formal proof.

– Promising directions for improvement include theorem-guided reasoning and self-refinement, as scaling model size and computation yield limited improvements.

👉 Paper link: https://huggingface.co/papers/2506.07927

5. Look Before You Leap: A GUI-Critic-R1 Model for Pre-Operative Error Diagnosis in GUI Automation

🔑 Keywords: Multimodal Large Language Models, GUI automation, pre-operative critic mechanism, Suggestion-aware Gradient Relative Policy Optimization, reasoning-bootstrapping

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the reliability of multimodal reasoning tasks in GUI automation by introducing a pre-operative critic mechanism.

🛠️ Research Methods:

– Implementation of a Suggestion-aware Gradient Relative Policy Optimization strategy to develop the pre-operative critic model GUI-Critic-R1.

– Development of a reasoning-bootstrapping data collection pipeline to enhance training and testing datasets, namely GUI-Critic-Train and GUI-Critic-Test.

💬 Research Conclusions:

– The GUI-Critic-R1 model significantly improves critic accuracy over current MLLMs in static experiments.

– Demonstrates superior effectiveness and efficiency in GUI automation tasks through dynamic evaluations.

👉 Paper link: https://huggingface.co/papers/2506.04614

6. Aligning Text, Images, and 3D Structure Token-by-Token

🔑 Keywords: 3D scenes, autoregressive models, modality-specific objectives, quantized shape encodings

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to create a unified framework aligning language, images, and 3D scenes to improve understanding and interaction within three-dimensional spaces.

🛠️ Research Methods:

– Utilizing autoregressive models, the framework integrates language and image modeling advances with novel approaches for 3D scene structured data.

💬 Research Conclusions:

– The model demonstrates enhanced performance across various 3D tasks and datasets, including real-world object recognition, by incorporating quantized shape encodings.

👉 Paper link: https://huggingface.co/papers/2506.08002

7. Self Forcing: Bridging the Train-Test Gap in Autoregressive Video Diffusion

🔑 Keywords: Self Forcing, autoregressive video diffusion models, exposure bias, holistic loss, key-value caching

💡 Category: Generative Models

🌟 Research Objective:

– Introduce and explore Self Forcing, a novel training method for autoregressive video diffusion models to address exposure bias and enhance quality in video generation.

🛠️ Research Methods:

– Self Forcing involves autoregressive rollout using key-value caching, holistic supervision with a video-level loss, a few-step diffusion model, a stochastic gradient truncation strategy, and a rolling KV cache mechanism for efficient video extrapolation.

💬 Research Conclusions:

– The proposed method achieves real-time streaming video generation with sub-second latency on a single GPU, offering generation quality that matches or surpasses existing slower, non-causal diffusion models.

👉 Paper link: https://huggingface.co/papers/2506.08009

8. Frame Guidance: Training-Free Guidance for Frame-Level Control in Video Diffusion Models

🔑 Keywords: Frame Guidance, AI-generated Summary, controllable video generation, diffusion models, style reference images

💡 Category: Generative Models

🌟 Research Objective:

– To introduce Frame Guidance, a training-free method for controllable video generation using frame-level signals to enhance globally coherent video output.

🛠️ Research Methods:

– Utilization of a simple latent processing method to reduce memory usage.

– Implementation of a novel latent optimization strategy designed for globally coherent video generation.

💬 Research Conclusions:

– Frame Guidance successfully produces high-quality controlled videos for a variety of tasks and input signals, compatible with any video model.

👉 Paper link: https://huggingface.co/papers/2506.07177

9. Seeing Voices: Generating A-Roll Video from Audio with Mirage

🔑 Keywords: AI Native, audio-to-video, self-attention, speech synthesis, multimodal content

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop Mirage, an audio-to-video foundation model that generates realistic video from audio inputs, producing compelling multimodal content.

🛠️ Research Methods:

– Utilizes a unified, self-attention-based training approach for audio-to-video generation models, either from scratch or using existing weights.

💬 Research Conclusions:

– Mirage excels in creating expressive imagery from audio inputs and achieves superior quality compared to methods focusing on audio-specific architectures.

👉 Paper link: https://huggingface.co/papers/2506.08279

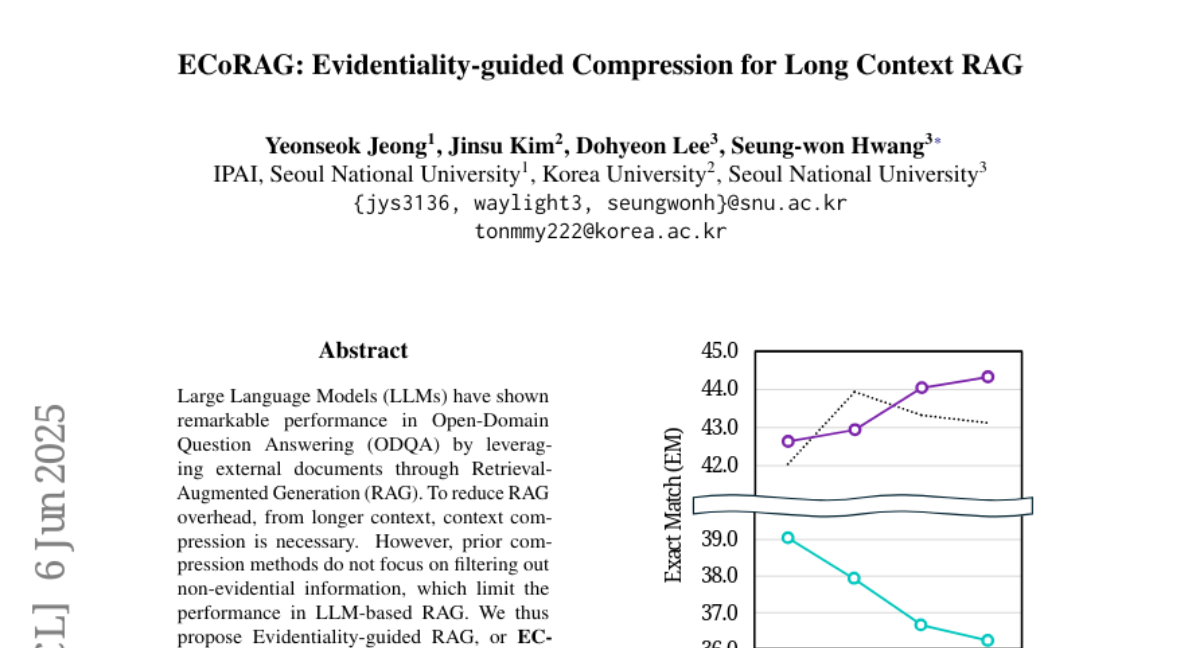

10. ECoRAG: Evidentiality-guided Compression for Long Context RAG

🔑 Keywords: LLM, ODQA, Retrieval-Augmented Generation, Evidentiality, Context Compression

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to enhance the performance of Large Language Models (LLMs) in Open-Domain Question Answering (ODQA) by proposing an Evidentiality-guided RAG (ECoRAG) framework.

🛠️ Research Methods:

– ECoRAG improves LLM performance by compressing retrieved documents based on evidentiality, ensuring the answer generation is supported by correct evidence. It reflects if the compressed content provides sufficient evidence, otherwise retrieves more until sufficient.

💬 Research Conclusions:

– Experimental results demonstrate that ECoRAG significantly improves LLM performance on ODQA tasks, outperforming existing compression methods. Additionally, it is cost-efficient, reducing latency and minimizing token usage while retaining necessary information.

👉 Paper link: https://huggingface.co/papers/2506.05167

11. Interpretable and Reliable Detection of AI-Generated Images via Grounded Reasoning in MLLMs

🔑 Keywords: AI-generated images, MLLMs, fine-tuning, visual-textual grounded reasoning, multi-stage optimization strategy

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance the detection and localization of AI-generated images using fine-tuned Multi-modal Large Language Models (MLLMs) with meaningful explanations.

🛠️ Research Methods:

– By constructing a dataset of AI-generated images with annotations, including bounding boxes and descriptive captions, the authors fine-tuned MLLMs through a multi-stage optimization strategy to balance accurate detection, visual localization, and coherent textual explanation.

💬 Research Conclusions:

– The proposed model significantly outperforms baseline methods in detecting and localizing AI-generated images, providing robust and human-understandable justifications.

👉 Paper link: https://huggingface.co/papers/2506.07045

12. Squeeze3D: Your 3D Generation Model is Secretly an Extreme Neural Compressor

🔑 Keywords: Squeeze3D, pre-trained models, 3D data compression, generative models, synthetic data

💡 Category: Generative Models

🌟 Research Objective:

– To develop Squeeze3D, a framework for efficiently compressing 3D data using pre-trained models and achieving extremely high compression ratios.

🛠️ Research Methods:

– Utilizes pre-trained 3D generative models and trainable mapping networks to transform 3D data into a highly compact latent code for compression.

– The method supports various data formats such as meshes, point clouds, and radiance fields, and is trained on synthetic data without requiring real 3D datasets.

💬 Research Conclusions:

– Squeeze3D achieves significant compression ratios (up to 2187x for meshes, 55x for point clouds, and 619x for radiance fields) with visual quality maintained, and operates with minimal latency during compression and decompression.

👉 Paper link: https://huggingface.co/papers/2506.07932

13. MoA: Heterogeneous Mixture of Adapters for Parameter-Efficient Fine-Tuning of Large Language Models

🔑 Keywords: Mixture-of-Adapters, Large Language Model, Parameter-efficient fine-tuning, Heterogeneous, Adapter experts

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address representation collapse and expert load imbalance in homogeneous MoE-LoRA architectures using a Heterogeneous Mixture-of-Adapters approach.

🛠️ Research Methods:

– Proposing two MoA variants: Soft MoA for fine-grained integration via weighted fusion, and Sparse MoA for sparse activation based on contribution.

💬 Research Conclusions:

– The heterogeneous MoA approach outperforms homogeneous MoE-LoRA methods in performance and parameter efficiency, enhancing knowledge transfer to downstream tasks.

👉 Paper link: https://huggingface.co/papers/2506.05928

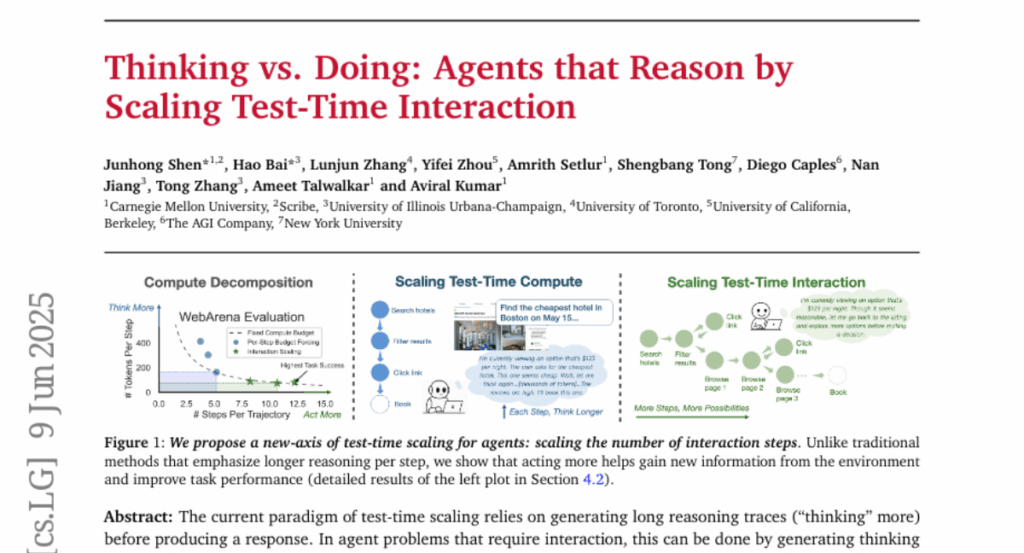

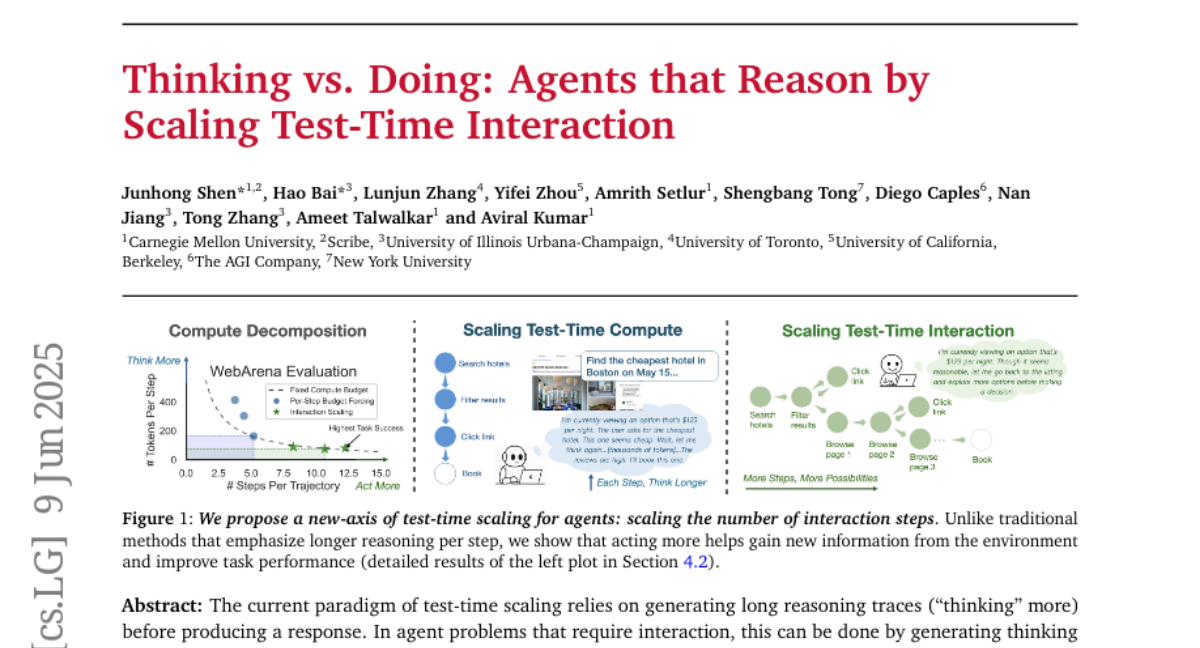

14. Thinking vs. Doing: Agents that Reason by Scaling Test-Time Interaction

🔑 Keywords: Test-Time Interaction, reinforcement learning, interaction scaling, exploration, exploitation

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study proposes scaling test-time interaction to enhance web agents’ performance by increasing their interaction horizon, allowing for behaviors like exploration and dynamic re-planning.

🛠️ Research Methods:

– Utilizes a curriculum-based online reinforcement learning approach to train agents by adaptively adjusting rollout lengths, employing a Gemma 3 12B model to achieve state-of-the-art results.

💬 Research Conclusions:

– Test-Time Interaction (TTI) significantly improves success on web benchmarks and enables adaptive balance between exploration and exploitation, highlighting interaction scaling as a powerful and complementary dimension to scaling per-step compute.

👉 Paper link: https://huggingface.co/papers/2506.07976

15. Institutional Books 1.0: A 242B token dataset from Harvard Library’s collections, refined for accuracy and usability

🔑 Keywords: Institutional Books 1.0, public domain, OCR-extracted text, Harvard Library, metadata

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Institutional Books 1.0 as a substantial dataset of public domain books to improve accessibility and usage for training large language models.

🛠️ Research Methods:

– Extracted, analyzed, and processed digitized volumes from Harvard Library’s participation in the Google Books project, creating a documented dataset of historic texts.

💬 Research Conclusions:

– The dataset includes approximately 242 billion tokens from 983,004 public domain volumes, aiming to enhance the availability of quality training data for large language models.

👉 Paper link: https://huggingface.co/papers/2506.08300

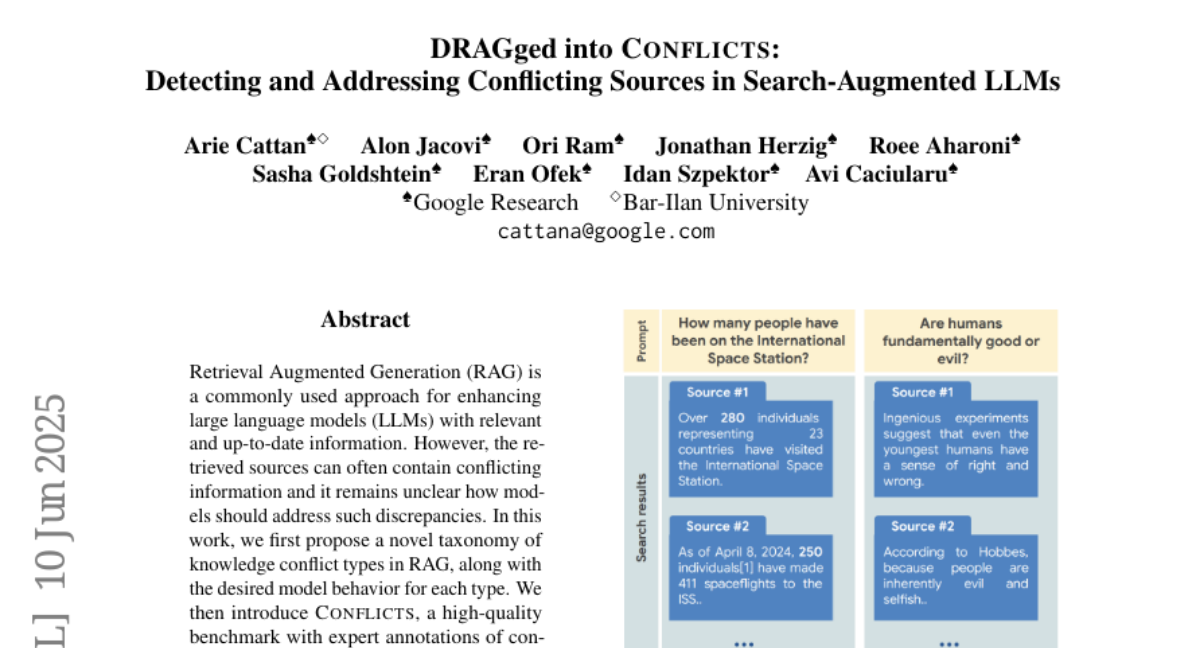

16. DRAGged into Conflicts: Detecting and Addressing Conflicting Sources in Search-Augmented LLMs

🔑 Keywords: Retrieval Augmented Generation, large language models, knowledge conflicts, benchmark

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate how large language models handle knowledge conflicts in Retrieval Augmented Generation (RAG).

🛠️ Research Methods:

– Development of a novel taxonomy and the benchmark “CONFLICTS” to evaluate models on conflict resolution.

– Conducting experiments with expert-annotated data in a realistic RAG setting to assess model performance.

💬 Research Conclusions:

– LLMs often struggle with resolving knowledge conflicts effectively.

– Explicitly prompting models can enhance their ability to manage conflicting information, but there is significant potential for future improvements.

👉 Paper link: https://huggingface.co/papers/2506.08500

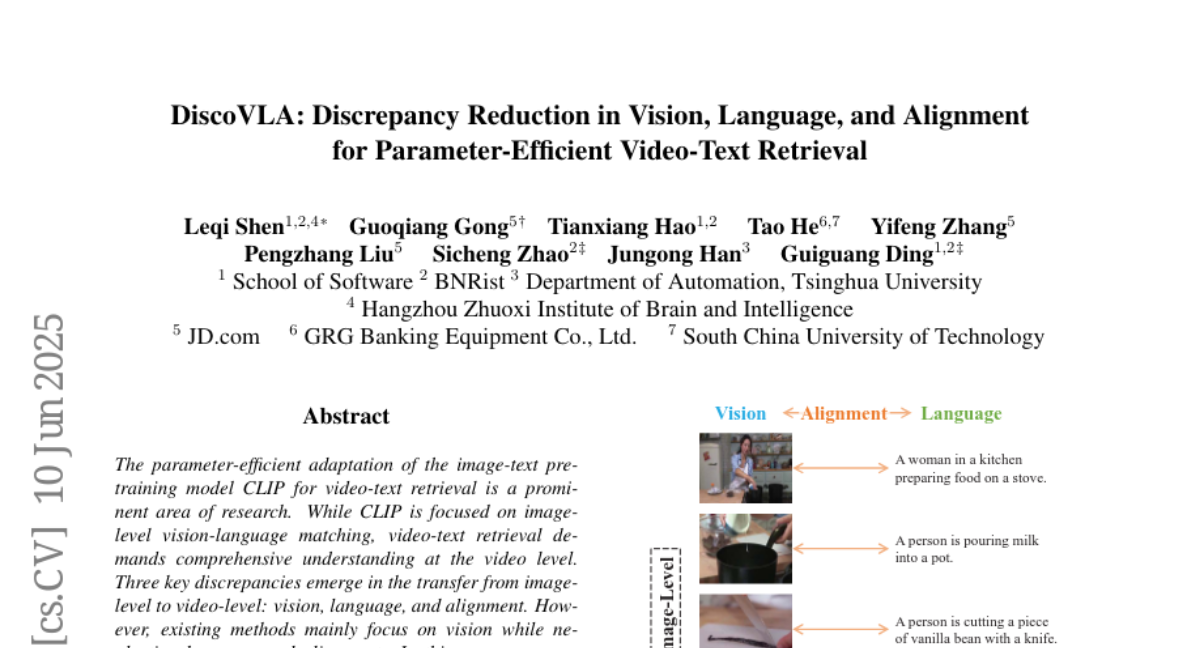

17. DiscoVLA: Discrepancy Reduction in Vision, Language, and Alignment for Parameter-Efficient Video-Text Retrieval

🔑 Keywords: CLIP, video-text retrieval, vision, language, alignment

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper proposes DiscoVLA to enhance video-text retrieval by addressing vision, language, and alignment discrepancies using the CLIP model.

🛠️ Research Methods:

– Introduction of Image-Video Features Fusion to tackle vision and language discrepancies.

– Use of pseudo image captions and Image-to-Video Alignment Distillation to address alignment discrepancies.

💬 Research Conclusions:

– DiscoVLA demonstrated superior performance, outperforming previous methods by 1.5% on R@1, achieving a score of 50.5% on MSRVTT with CLIP (ViT-B/16).

👉 Paper link: https://huggingface.co/papers/2506.08887

18. RKEFino1: A Regulation Knowledge-Enhanced Large Language Model

🔑 Keywords: RKEFino1, AI Native, Numerical NER, compliance-critical, financial reasoning

💡 Category: AI in Finance

🌟 Research Objective:

– To address accuracy and compliance challenges in Digital Regulatory Reporting (DRR) by proposing RKEFino1, a regulation knowledge-enhanced financial reasoning model.

🛠️ Research Methods:

– Fine-tuning a large language model with domain knowledge from XBRL, CDM, and MOF; formulation of two QA tasks and introduction of a Numerical NER task.

💬 Research Conclusions:

– RKEFino1 is effective and generalizes well in compliance-critical financial tasks, with the model released on Hugging Face.

👉 Paper link: https://huggingface.co/papers/2506.05700

19. QQSUM: A Novel Task and Model of Quantitative Query-Focused Summarization for Review-based Product Question Answering

🔑 Keywords: QQSUM-RAG, Review-based Product Question Answering, few-shot learning, Retrieval-Augmented Generation, Key Points

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce QQSUM, a novel task aimed at summarizing diverse and representative customer opinions to improve product question answering.

🛠️ Research Methods:

– Utilized QQSUM-RAG, an extension of the Retrieval-Augmented Generation model, employing few-shot learning to jointly train a Key Point-oriented retriever and a summary generator.

💬 Research Conclusions:

– QQSUM-RAG outperforms state-of-the-art RAG baselines in textual quality and opinion quantification accuracy, effectively capturing the diversity of customer opinions.

👉 Paper link: https://huggingface.co/papers/2506.04020

20. MMRefine: Unveiling the Obstacles to Robust Refinement in Multimodal Large Language Models

🔑 Keywords: MMRefine, Multimodal Large Language Models, error refinement, MultiModal Refinement, error types

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research introduces MMRefine, a benchmark to assess the error refinement capabilities of Multimodal Large Language Models (MLLMs).

🛠️ Research Methods:

– Evaluates MLLMs using a framework that categorizes errors into six distinct scenarios beyond just measuring accuracy improvements.

💬 Research Conclusions:

– Identifies performance bottlenecks and factors limiting refinement in MLLMs, pointing to areas for enhancement in reasoning effectiveness.

👉 Paper link: https://huggingface.co/papers/2506.04688

21. Mathesis: Towards Formal Theorem Proving from Natural Languages

🔑 Keywords: Reinforcement Learning, AI Native, autoformalizer, theorem proving, natural language problems

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To bridge the gap between formal theorem proving and real-world natural language problems using the Mathesis pipeline.

🛠️ Research Methods:

– Development of Mathesis-Autoformalizer using reinforcement learning and LeanScorer framework for enhancing formalization quality.

– Introduction of Mathesis-Prover to generate formal proofs, evaluated with the Gaokao-Formal benchmark.

💬 Research Conclusions:

– Mathesis outperforms existing models, achieving significant improvements in theorem proving with a 22% increase in pass-rate on Gaokao-Formal and 64% accuracy on MiniF2F.

👉 Paper link: https://huggingface.co/papers/2506.07047

22.