AI Native Daily Paper Digest – 20250630

1. BlenderFusion: 3D-Grounded Visual Editing and Generative Compositing

🔑 Keywords: BlenderFusion, diffusion model, source masking, simulated object jittering, AI-generated summary

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to present BlenderFusion, a framework for generative visual compositing, enhancing scene editing and composition by synthesizing new scenes with a layering-editing-compositing pipeline.

🛠️ Research Methods:

– The research utilizes a pre-trained diffusion model extended for parallel processing of scenes and fine-tuned on video frames using source masking and simulated object jittering.

💬 Research Conclusions:

– BlenderFusion shows significant improvement over previous methods in complex compositional scene editing tasks.

👉 Paper link: https://huggingface.co/papers/2506.17450

2. LLaVA-Scissor: Token Compression with Semantic Connected Components for Video LLMs

🔑 Keywords: LLaVA-Scissor, Semantic Connected Components, token compression, video multimodal large language models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce LLaVA-Scissor, a training-free token compression strategy tailored for video multimodal large language models, aiming to enhance semantic coverage and minimize token redundancy.

🛠️ Research Methods:

– Utilize Semantic Connected Components (SCC) to assign tokens to distinct semantic regions for comprehensive coverage, implementing a spatio-temporal token compression strategy that operates in both spatial and temporal domains.

💬 Research Conclusions:

– LLaVA-Scissor outperforms existing token compression methods in video understanding benchmarks, demonstrating superior performance, especially at low token retention ratios.

👉 Paper link: https://huggingface.co/papers/2506.21862

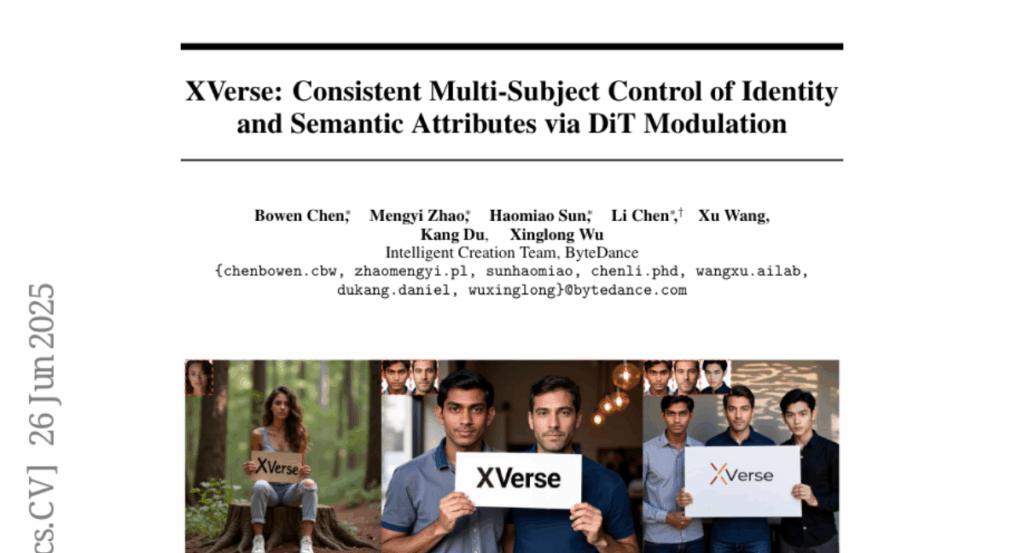

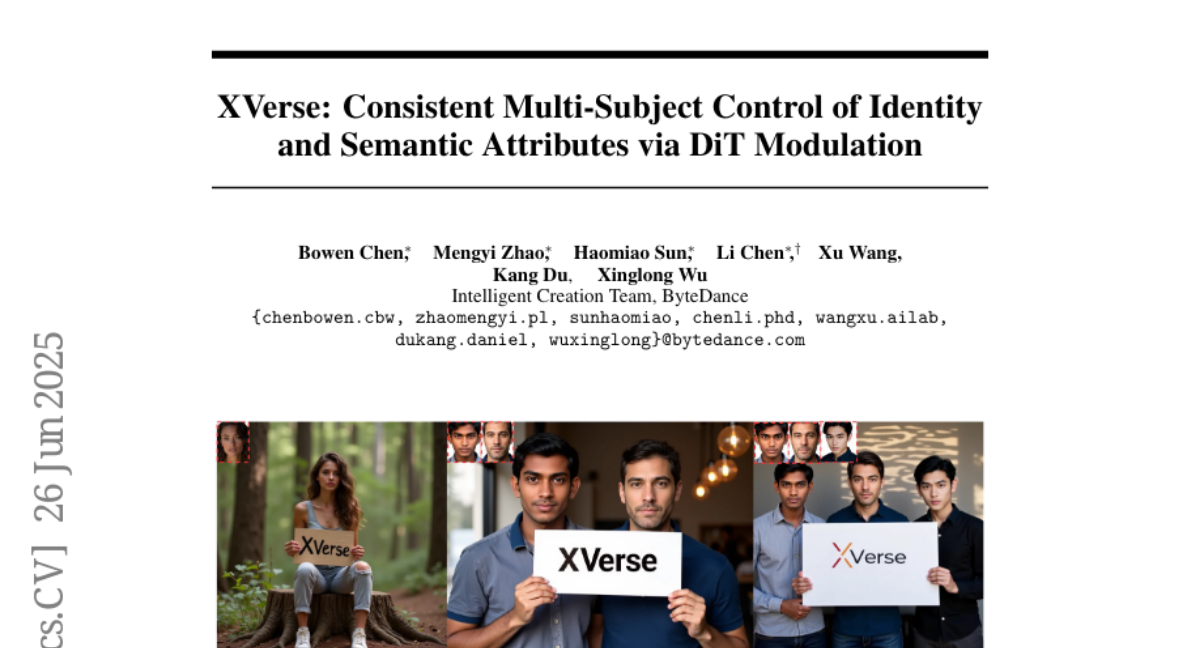

3. XVerse: Consistent Multi-Subject Control of Identity and Semantic Attributes via DiT Modulation

🔑 Keywords: AI-generated summary, text-to-image generation, Diffusion Transformers (DiTs), token-specific text-stream modulation, multi-subject image synthesis

💡 Category: Generative Models

🌟 Research Objective:

– The paper proposes the XVerse model to achieve fine-grained control over multiple subjects’ identity and semantic attributes in text-to-image generation.

🛠️ Research Methods:

– The method involves transforming reference images into offsets for token-specific text-stream modulation to allow independent control without disturbing image latents or features.

💬 Research Conclusions:

– XVerse offers high-fidelity, editable multi-subject image synthesis with robust control over individual subject characteristics, enhancing personalized and complex scene generation capabilities.

👉 Paper link: https://huggingface.co/papers/2506.21416

4. ShotBench: Expert-Level Cinematic Understanding in Vision-Language Models

🔑 Keywords: AI-driven cinematic understanding, Vision-Language Models, ShotBench, ShotQA, ShotVL

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance AI’s capabilities in understanding and generating nuanced cinematic language by developing datasets and models specifically focused on cinematic language comprehension.

🛠️ Research Methods:

– Introduction of ShotBench as a benchmark with over 3.5k expert-annotated QA pairs from films.

– Evaluation of 24 Vision-Language Models to assess their limitations.

– Creation of ShotQA, a large-scale multimodal dataset with approximately 70k cinematic QA pairs.

– Development of ShotVL through supervised fine-tuning and Group Relative Policy Optimization.

💬 Research Conclusions:

– ShotVL significantly outperforms existing models on ShotBench, establishing state-of-the-art performance in AI-driven cinematic understanding and generation.

– Open-sourcing of models, data, and code to promote advancements in this area.

👉 Paper link: https://huggingface.co/papers/2506.21356

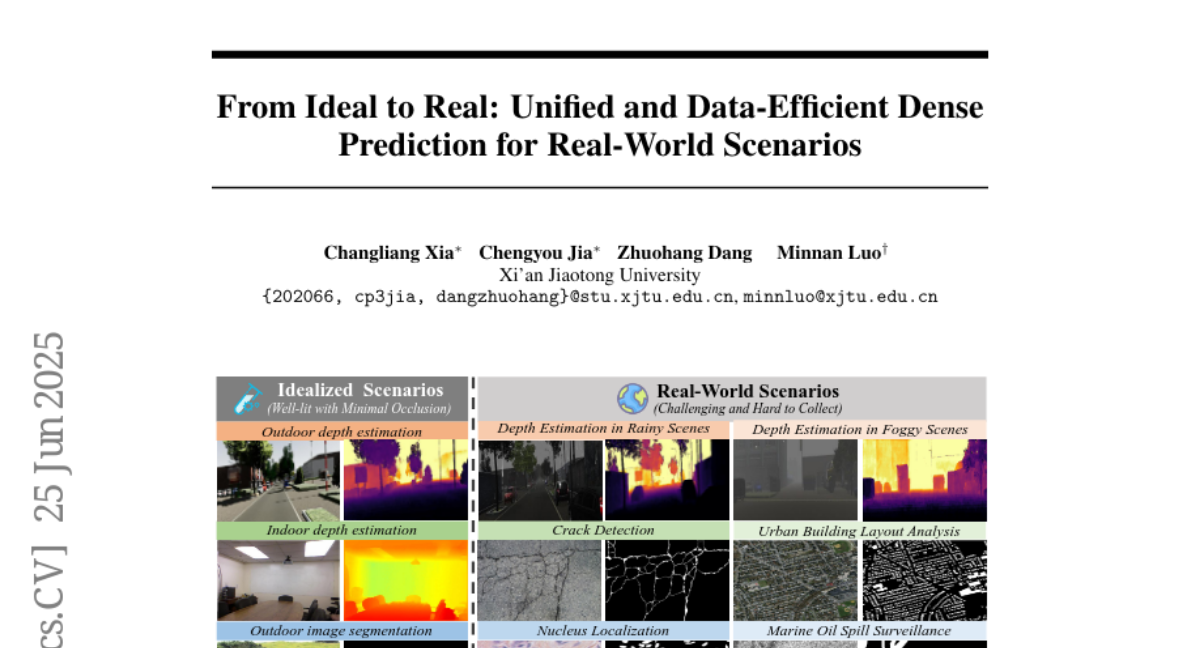

5. From Ideal to Real: Unified and Data-Efficient Dense Prediction for Real-World Scenarios

🔑 Keywords: DenseDiT, Generative Models, Dense Prediction, Computer Vision

💡 Category: Computer Vision

🌟 Research Objective:

– The study introduces DenseDiT, a generative model-based approach, aimed at enhancing performance in real-world dense prediction tasks using minimal training data.

🛠️ Research Methods:

– DenseDiT employs a parameter-reuse mechanism and two lightweight branches that integrate multi-scale context, maximizing the use of visual priors from generative models.

💬 Research Conclusions:

– DenseDiT demonstrates superior performance using less than 0.01% of the training data compared to existing methods, highlighting its efficacy and practical value for real-world deployment.

👉 Paper link: https://huggingface.co/papers/2506.20279

6. Pangu Pro MoE: Mixture of Grouped Experts for Efficient Sparsity

🔑 Keywords: Mixture of Grouped Experts, Ascend NPUs, Pangu Pro MoE, Expert Load Balancing, Sparse model

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce and implement the Mixture of Grouped Experts (MoGE) for large language models to improve expert load balancing and execution efficiency, particularly on Ascend NPUs.

🛠️ Research Methods:

– The development of Pangu Pro MoE, a model based on MoGE with extensive system simulation studies, optimized for Ascend 300I Duo and 800I A2 NPUs, involving sparse models to enhance computational load balancing across devices.

💬 Research Conclusions:

– MoGE results in better expert load balancing and more efficient execution for model training and inference, achieving significant throughput improvements and a favorable cost-to-performance ratio, outperforming comparable Dense models.

👉 Paper link: https://huggingface.co/papers/2505.21411

7. Ark: An Open-source Python-based Framework for Robot Learning

🔑 Keywords: Robotics, AI Native, Imitation Learning, Python-first, ARK Framework

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The main objective is to bridge the gap between hardware advancements in robotics and its lagging software capabilities by introducing ARK, a Python-first open-source framework.

🛠️ Research Methods:

– ARK provides a Gym-style environment interface, integrates imitation-learning algorithms, and supports seamless switching between simulations and physical robots.

– It employs a lightweight client-server architecture with networked communication and includes optional C/C++ bindings for real-time performance.

💬 Research Conclusions:

– ARK lowers the entry barriers of robotic software development, accelerates research and commercial deployment, and demonstrates with comprehensive documentation and case studies that unify robotics and AI practices.

👉 Paper link: https://huggingface.co/papers/2506.21628

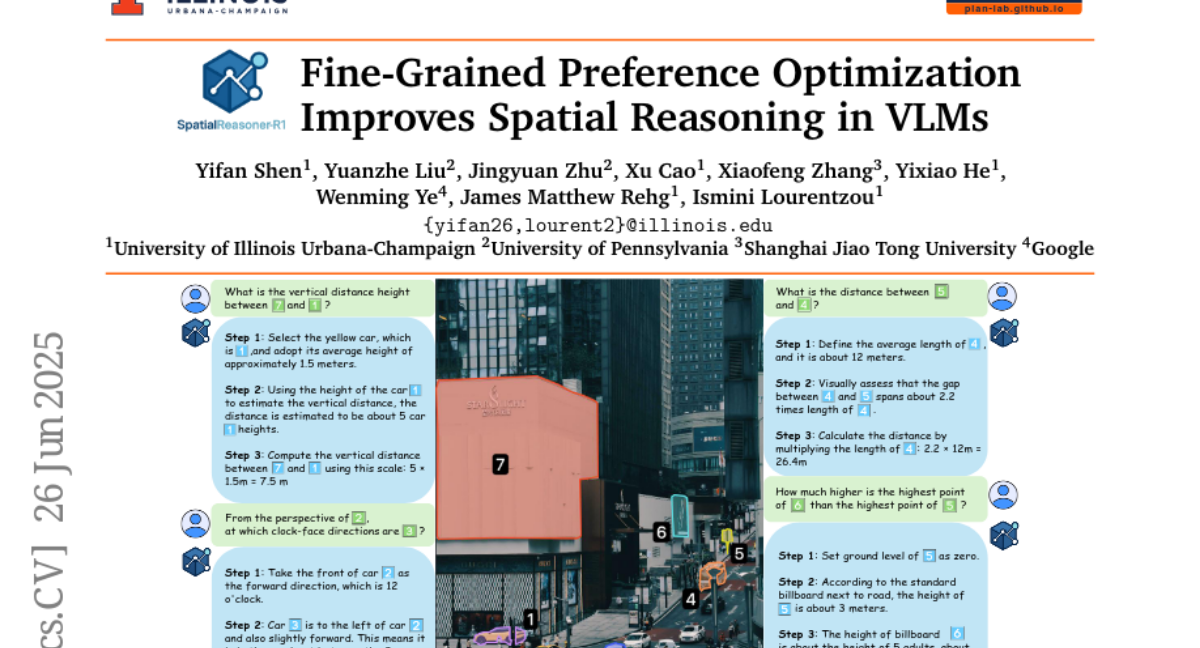

8. Fine-Grained Preference Optimization Improves Spatial Reasoning in VLMs

🔑 Keywords: SpatialReasoner-R1, Multi-Model Monte Carlo Tree Search, Direct Preference Optimization, Spatial Grounding, Vision-Language Models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance fine-grained spatial reasoning in Vision-Language Models by introducing SpatialReasoner-R1.

🛠️ Research Methods:

– Developed a Multi-Model Monte Carlo Tree Search method for generating diverse and consistent reasoning trajectories.

– Implemented fine-grained Direct Preference Optimization to improve descriptive grounding and logical reasoning, guided by a spatial reward mechanism.

💬 Research Conclusions:

– SpatialReasoner-R1 shows an average improvement of 4.1% over standard models in spatial quality tasks and a 9.0% gain in spatial quantity tasks.

– It sets a new state-of-the-art on SPATIALRGPT-Bench, outperforming the previous benchmark by 9.8% in average accuracy.

👉 Paper link: https://huggingface.co/papers/2506.21656

9. Shape-for-Motion: Precise and Consistent Video Editing with 3D Proxy

🔑 Keywords: 3D proxy, Dual-Propagation Strategy, video diffusion model, pose editing, object composition

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce Shape-for-Motion, a framework for precise and consistent video editing using 3D proxy meshes and a decoupled video diffusion model.

🛠️ Research Methods:

– Develop a method that converts target objects in input videos to time-consistent 3D proxies, enabling direct editing on proxies.

– Design a Dual-Propagation Strategy to automatically propagate edits from a single frame to others, enhancing editing consistency.

💬 Research Conclusions:

– The framework facilitates various manipulations such as pose editing, rotation, and texture modification, achieving high-quality and controlled video editing.

👉 Paper link: https://huggingface.co/papers/2506.22432

10. MiCo: Multi-image Contrast for Reinforcement Visual Reasoning

🔑 Keywords: Self-supervised learning, Vision-Language Models, Image triplets, Reasoning, Reinforcement learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance the reasoning ability of Vision-Language Models (VLMs) on multi-image tasks without using human-annotated question-answer pairs.

🛠️ Research Methods:

– The research utilizes self-supervised learning with image triplets and adapts rule-based reinforcement learning to facilitate visual comparison tasks.

💬 Research Conclusions:

– The approach demonstrates significant improvements in multi-image reasoning and general vision tasks, effectively applying reasoning without human annotations.

👉 Paper link: https://huggingface.co/papers/2506.22434

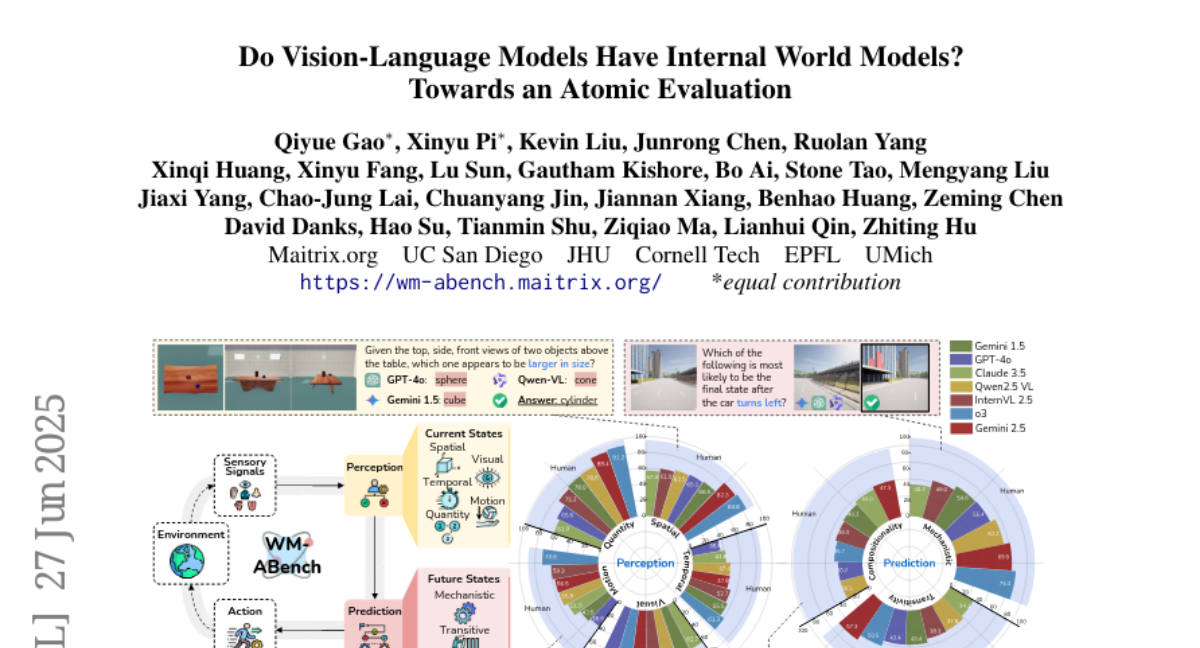

11. Do Vision-Language Models Have Internal World Models? Towards an Atomic Evaluation

🔑 Keywords: Vision-Language Models, World Modeling, Perception, Prediction, WM-ABench

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To evaluate the world modeling capabilities of Vision-Language Models by identifying their limitations in perception and prediction.

🛠️ Research Methods:

– A two-stage framework assessing Perception and Prediction capabilities, including a large-scale benchmark named WM-ABench, with experiments conducted across 15 VLMs in 6 simulated environments.

💬 Research Conclusions:

– VLMs demonstrate significant limitations in basic world modeling abilities, such as near-random accuracy in distinguishing motion trajectories and lack of disentangled understanding, revealing a gap with human-level world modeling.

👉 Paper link: https://huggingface.co/papers/2506.21876

12. The Automated LLM Speedrunning Benchmark: Reproducing NanoGPT Improvements

🔑 Keywords: Automated LLM Speedrunning Benchmark, NanoGPT, AI agents, Reproduction of Scientific Results, High-level Algorithmic Advancements

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper introduces the Automated LLM Speedrunning Benchmark to assess AI agents’ ability to reproduce results in active scientific research through NanoGPT speedrun tasks.

🛠️ Research Methods:

– The benchmark uses 19 tasks providing training scripts and optional hints, ranging from pseudocode to detailed descriptions, to evaluate AI agents’ efficiency in retraining a GPT-2 model.

💬 Research Conclusions:

– Recent reasoning LLMs face challenges in reimplementing known improvements despite detailed guidance, indicating limitations in automating scientific reproduction.

👉 Paper link: https://huggingface.co/papers/2506.22419

13. Spatial Mental Modeling from Limited Views

🔑 Keywords: MindCube, Vision Language Models, spatial mental models, cognitive maps, reinforcement learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To evaluate the ability of Vision Language Models (VLMs) to develop spatial mental models and improve understanding of unseen spaces using the MindCube benchmark.

🛠️ Research Methods:

– Systematic evaluation through cognitive mapping, perspective-taking, and mental simulation.

– Exploration of methods such as intermediate views, natural language reasoning chains, and cognitive maps.

– Implementation of the “map-then-reason” approach and reinforcement learning to enhance performance.

💬 Research Conclusions:

– Synergistic training that combines cognitive mapping and reasoning significantly improved VLMs’ accuracy from 37.8% to 60.8%.

– Applying reinforcement learning further increased accuracy to 70.7%.

– Constructing and using internal spatial mental models enhance understanding of unobservable spaces.

👉 Paper link: https://huggingface.co/papers/2506.21458