AI Native Daily Paper Digest – 20250707

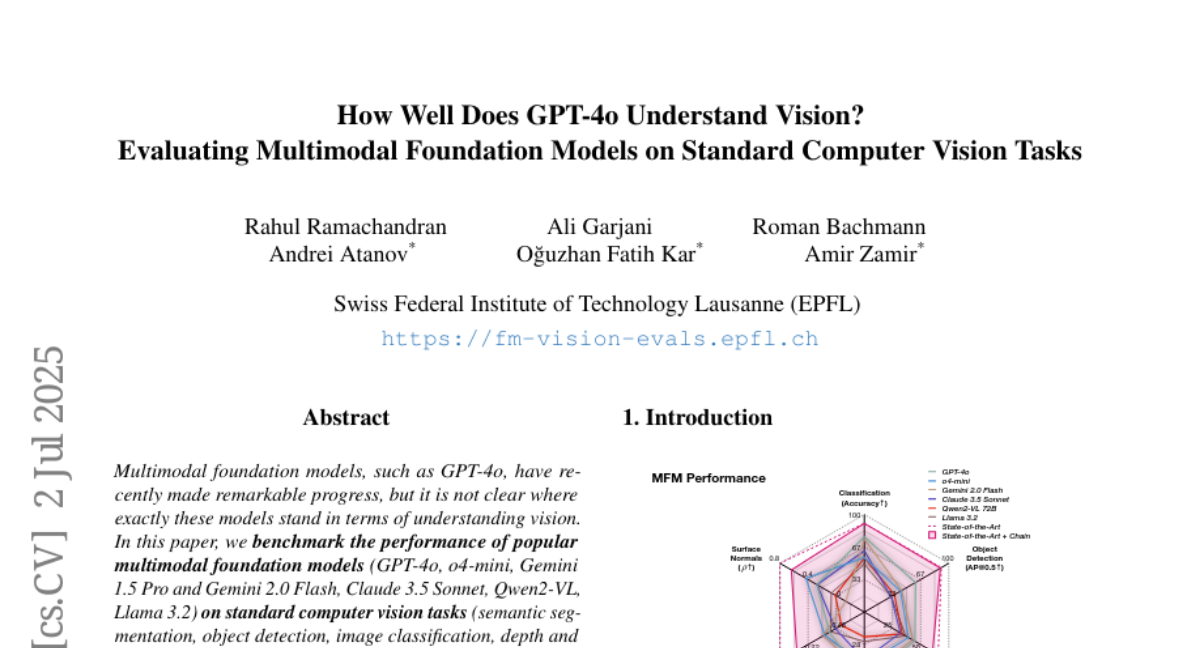

1. How Well Does GPT-4o Understand Vision? Evaluating Multimodal Foundation Models on Standard Computer Vision Tasks

🔑 Keywords: Multimodal Foundation Models, prompt chaining, computer vision, GPT-4o, reasoning models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To benchmark the performance of popular multimodal foundation models on standard computer vision tasks and assess their understanding of vision.

🛠️ Research Methods:

– Translation of standard vision tasks into text-promptable and API-compatible tasks through prompt chaining to develop a standardized benchmarking framework.

💬 Research Conclusions:

– The models, though generalists, do not match the performance of state-of-the-art specialized models.

– They excel in semantic tasks over geometric ones.

– GPT-4o performed best among non-reasoning models.

– Reasoning models show improvements in geometric tasks.

– Models with native image generation may exhibit quirks like hallucinations and spatial misalignments.

👉 Paper link: https://huggingface.co/papers/2507.01955

2. Lost in Latent Space: An Empirical Study of Latent Diffusion Models for Physics Emulation

🔑 Keywords: latent space diffusion, dynamical systems, AI-generated summary, inference, autoencoder

💡 Category: Generative Models

🌟 Research Objective:

– The study investigates the application of latent space diffusion models as emulators for dynamical systems, focusing on their computational efficiency and prediction accuracy compared to non-generative approaches.

🛠️ Research Methods:

– The research explores generating in the latent space of an autoencoder to mitigate the computational costs typically associated with diffusion models during inference.

💬 Research Conclusions:

– Latent space emulations maintain high accuracy across diverse compression rates (up to 1000x) and provide more accurate and diverse predictions than non-generative models. The study highlights practical design choices, from architectures to optimizers, that are crucial for training these emulators.

👉 Paper link: https://huggingface.co/papers/2507.02608

3. Eka-Eval : A Comprehensive Evaluation Framework for Large Language Models in Indian Languages

🔑 Keywords: Multilingual Evaluation, Large Language Models, Indic-specific datasets, Distributed Inference, Open-source

💡 Category: Natural Language Processing

🌟 Research Objective:

– Develop EKA-EVAL, a multilingual evaluation framework to assess Large Language Models across diverse, linguistically significant benchmarks beyond English.

🛠️ Research Methods:

– Integrate over 35 benchmarks with specific focus on Indic datasets and utilize features such as distributed inference, quantization, and multi-GPU usage.

💬 Research Conclusions:

– EKA-EVAL establishes itself as a comprehensive, open-source evaluation suite for global and Indic LLMs, enhancing multilingual benchmarking capabilities. The framework aims to expand significantly, contributing to a robust evaluation ecosystem.

👉 Paper link: https://huggingface.co/papers/2507.01853

4. LitBench: A Benchmark and Dataset for Reliable Evaluation of Creative Writing

🔑 Keywords: LitBench, Creative Writing, AI-generated content, Zero-shot judges, Reward models

💡 Category: Natural Language Processing

🌟 Research Objective:

– To establish LitBench, a standardized benchmark and dataset for evaluating creative writing generated by language models.

🛠️ Research Methods:

– Conducted benchmarking of zero-shot language model judges using LitBench.

– Trained Bradley Terry and generative reward models for improved evaluation accuracy.

– Conducted an online human study to validate the rankings of reward models on newly generated stories.

💬 Research Conclusions:

– LitBench identifies Claude-3.7-Sonnet as the strongest off-the-shelf judge with 73% alignment to human preferences.

– Bradley Terry and generative reward models achieved a higher accuracy of 78%, outperforming all off-the-shelf judges.

– The online human study confirmed that trained reward models consistently aligned with human preferences.

– LitBench and the reward models are now available on Hugging Face for further research and application.

👉 Paper link: https://huggingface.co/papers/2507.00769

5.