AI Native Daily Paper Digest – 20250709

1. SingLoRA: Low Rank Adaptation Using a Single Matrix

🔑 Keywords: SingLoRA, Low-Rank Adaptation, parameter-efficient, fine-tuning, common sense reasoning

💡 Category: Foundations of AI

🌟 Research Objective:

– To enhance parameter-efficient fine-tuning by reformulating low-rank adaptation through the development of SingLoRA.

🛠️ Research Methods:

– SingLoRA is introduced as a method that learns a single low-rank matrix update, substantially reducing parameter counts and optimizing stability.

– Analysis is conducted within the infinite-width neural network framework to ensure stable feature learning.

💬 Research Conclusions:

– SingLoRA achieves superior accuracy in fine-tuning tasks such as MNLI, with only 60% of the parameter budget compared to existing methods.

– In image generation tasks, SingLoRA improves image fidelity, demonstrating superior performance in DreamBooth with higher DINO similarity scores.

👉 Paper link: https://huggingface.co/papers/2507.05566

2. A Survey on Latent Reasoning

🔑 Keywords: Latent reasoning, Large Language Models, Chain-of-Thought (CoT), Multi-step inference, Neural network layers

💡 Category: Natural Language Processing

🌟 Research Objective:

– To provide a comprehensive overview of latent reasoning in large language models and outline future research directions.

🛠️ Research Methods:

– Examining the role of neural network layers in reasoning.

– Exploring diverse latent reasoning methodologies like activation-based recurrence and hidden state propagation.

– Investigating advanced paradigms such as infinite-depth latent reasoning via masked diffusion models.

💬 Research Conclusions:

– Latent reasoning enhances the efficiency and expressiveness of large language models by moving beyond token-level supervision, allowing for globally consistent and reversible reasoning processes.

👉 Paper link: https://huggingface.co/papers/2507.06203

3. Agent KB: Leveraging Cross-Domain Experience for Agentic Problem Solving

🔑 Keywords: Agent KB, Knowledge Transfer, Error Correction, Experience Reuse, Reason-Retrieve-Refine

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To introduce Agent KB, a hierarchical experience framework designed to enhance complex language agent problem solving through experience reuse and effective error correction across domains.

🛠️ Research Methods:

– Utilized a Reason-Retrieve-Refine pipeline that allows agents to share and learn from each other’s experiences, capturing high-level strategies and detailed execution logs.

💬 Research Conclusions:

– Agent KB significantly improved success rates on the GAIA benchmark and various tasks, demonstrating its potential as a modular, framework-agnostic infrastructure enabling agents to generalize successful strategies to new tasks.

👉 Paper link: https://huggingface.co/papers/2507.06229

4. OmniPart: Part-Aware 3D Generation with Semantic Decoupling and Structural Cohesion

🔑 Keywords: OmniPart, 3D object generation, autoregressive structure planning module, semantic decoupling, spatially-conditioned rectified flow model

💡 Category: Generative Models

🌟 Research Objective:

– To create a framework for part-aware 3D object generation with high semantic decoupling and robust structural cohesion.

🛠️ Research Methods:

– Utilization of an autoregressive structure planning module to generate and control variable-length sequences of 3D part bounding boxes.

– Implementation of a spatially-conditioned rectified flow model adapted from a pre-trained holistic 3D generator for simultaneous 3D part synthesis.

💬 Research Conclusions:

– OmniPart achieves state-of-the-art performance in creating interpretable, editable, and versatile 3D content, facilitating more interactive applications.

👉 Paper link: https://huggingface.co/papers/2507.06165

5. How to Train Your LLM Web Agent: A Statistical Diagnosis

🔑 Keywords: LLM-based web agents, Supervised Fine-Tuning, On-Policy Reinforcement Learning, Compute Allocation, Performance Optimization

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To explore the effectiveness of combining supervised fine-tuning with on-policy reinforcement learning for post-training LLM-based web agents, aiming to improve performance and reduce computational costs.

🛠️ Research Methods:

– Utilized a two-stage pipeline, training a Llama 3.1 8B student to imitate a Llama 3.3 70B teacher using supervised fine-tuning, followed by on-policy reinforcement learning.

– Conducted statistical experiments by sampling 1,370 hyperparameter configurations and using bootstrapping to estimate effective hyperparameters.

💬 Research Conclusions:

– The combination of supervised fine-tuning and on-policy RL consistently outperforms either method alone in terms of performance and cost efficiency.

– The strategy requires only 55% of the compute resources to achieve peak performance, thereby effectively shifting the compute-performance Pareto frontier.

– This approach is the only one capable of narrowing the gap with closed-source models.

👉 Paper link: https://huggingface.co/papers/2507.04103

6. StreamVLN: Streaming Vision-and-Language Navigation via SlowFast Context Modeling

🔑 Keywords: StreamVLN, Vision-and-Language Navigation, Video-LLMs, multi-modal reasoning, 3D-aware token pruning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop StreamVLN, a streaming VLN framework that balances visual understanding, context modeling, and efficiency in real-world environments.

🛠️ Research Methods:

– Implemented a hybrid slow-fast context modeling strategy, integrating fast-streaming dialogue context with slow-updating memory context using 3D-aware token pruning.

💬 Research Conclusions:

– StreamVLN demonstrates state-of-the-art performance on VLN-CE benchmarks, achieves low latency, and ensures robustness and efficiency in real-world settings.

👉 Paper link: https://huggingface.co/papers/2507.05240

7. CriticLean: Critic-Guided Reinforcement Learning for Mathematical Formalization

🔑 Keywords: CriticLean, Reinforcement Learning, CriticLeanGPT, Automated Theorem Proving, Semantic Evaluation

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study introduces CriticLean, a reinforcement learning framework aimed at enhancing semantic evaluation in automated theorem proving by transitioning the critic from a passive validator to an active learning component.

🛠️ Research Methods:

– The research employs CriticLeanGPT, a model trained through supervised fine-tuning and reinforcement learning to assess semantic fidelity. Additionally, CriticLeanBench is introduced as a benchmark to evaluate models’ capabilities in distinguishing correct from incorrect formalizations.

💬 Research Conclusions:

– The findings emphasize the importance of optimizing the critic phase to produce reliable formalizations. Trained models significantly outperform existing baselines, and the approach provides valuable insights for advancing formal mathematical reasoning.

👉 Paper link: https://huggingface.co/papers/2507.06181

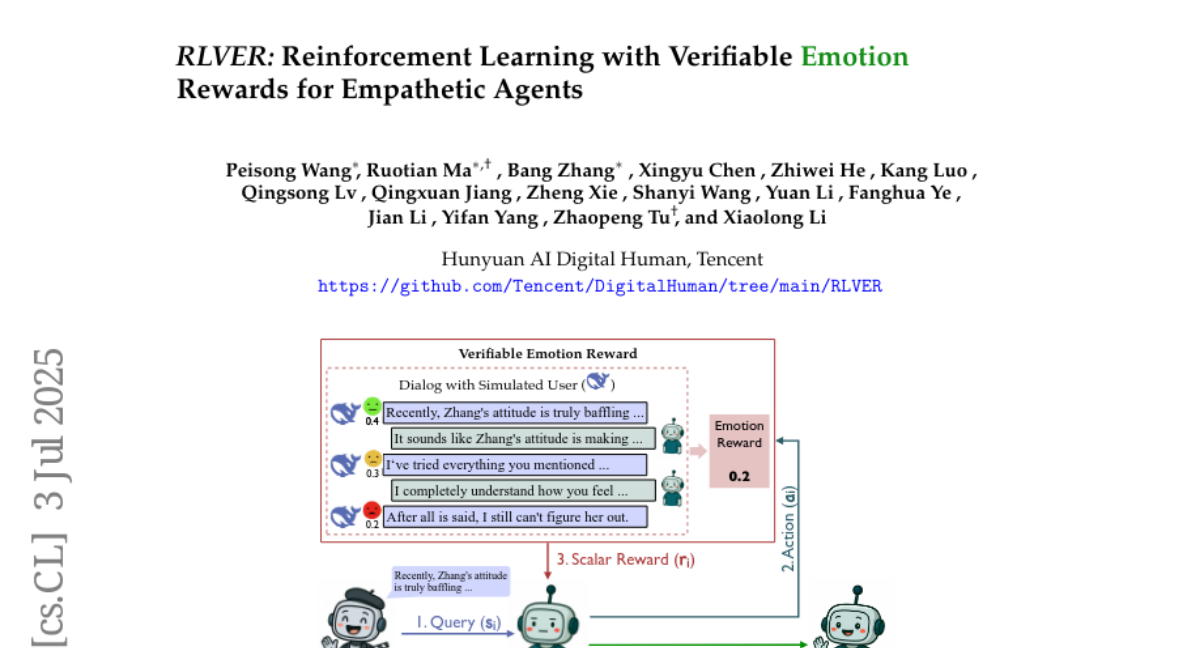

8. RLVER: Reinforcement Learning with Verifiable Emotion Rewards for Empathetic Agents

🔑 Keywords: Large language models, reinforcement learning, emotional intelligence, simulated users, RLVER

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance emotional intelligence in large language models through an end-to-end reinforcement learning framework using verifiable emotion rewards from simulated users.

🛠️ Research Methods:

– Utilized RLVER, a novel reinforcement learning framework, involving affective simulated users and deterministic emotion scores to guide language model training, specifically fine-tuning Qwen2.5-7B-Instruct with PPO.

💬 Research Conclusions:

– RLVER significantly improves dialogue capabilities, enhances empathy and insight in thinking models, and shows that moderate environments can yield stronger outcomes compared to more challenging ones.

👉 Paper link: https://huggingface.co/papers/2507.03112

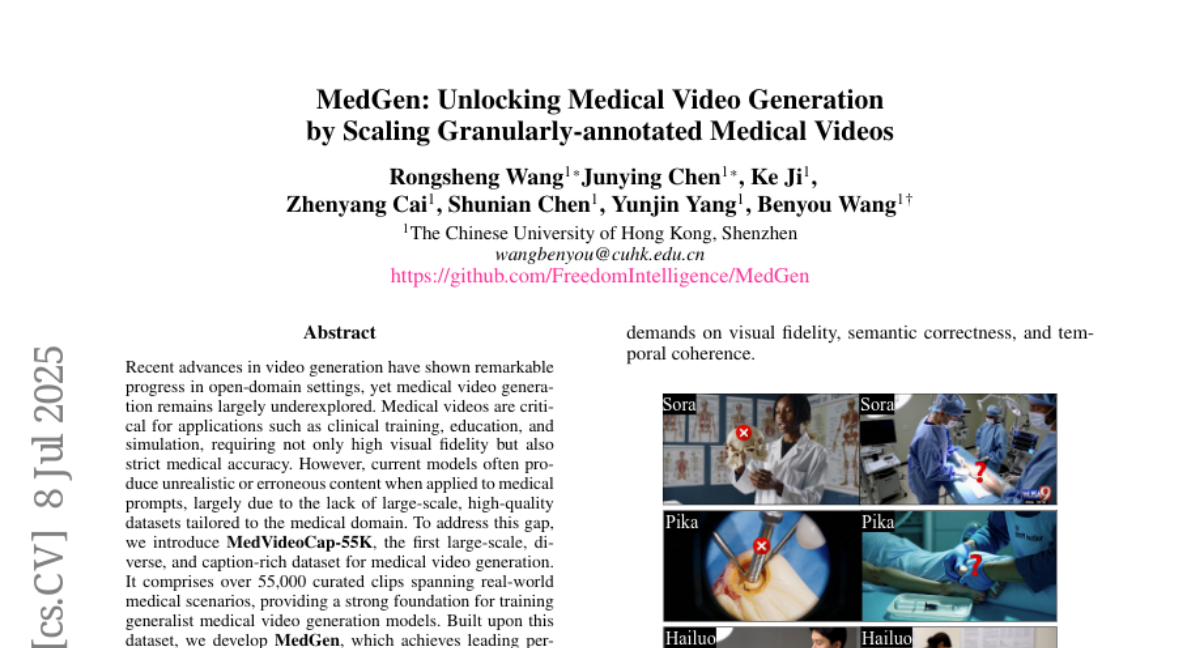

9. MedGen: Unlocking Medical Video Generation by Scaling Granularly-annotated Medical Videos

🔑 Keywords: MedGen, MedVideoCap-55K, medical video generation, visual quality, medical accuracy

💡 Category: AI in Healthcare

🌟 Research Objective:

– To develop a model called MedGen, utilizing the MedVideoCap-55K dataset, aimed at improving medical video generation by balancing visual quality and medical accuracy.

🛠️ Research Methods:

– Construction of MedVideoCap-55K, a large-scale and diverse dataset with over 55,000 caption-rich medical video clips as a basis for training.

💬 Research Conclusions:

– MedGen achieves leading performance in medical video generation compared to existing open-source and commercial models, enhancing both visual fidelity and medical precision.

👉 Paper link: https://huggingface.co/papers/2507.05675

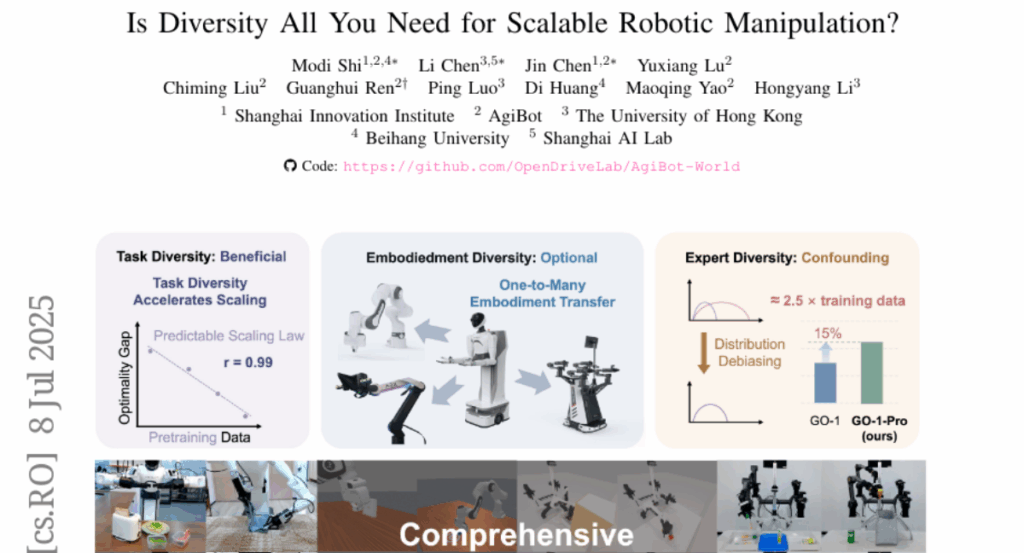

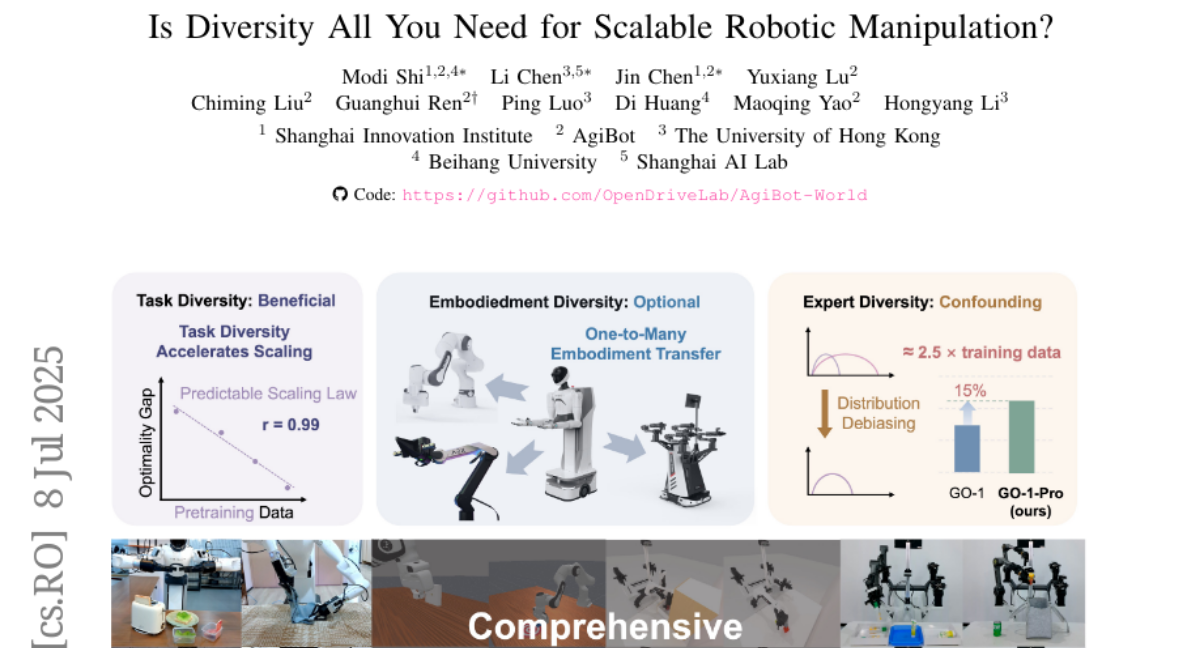

10. Is Diversity All You Need for Scalable Robotic Manipulation?

🔑 Keywords: Data Diversity, Task Diversity, Multi-embodiment, Distribution Debiasing

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The study aims to investigate the impact of data diversity on robotic manipulation, focusing on task, embodiment, and expert dimensions.

🛠️ Research Methods:

– Conducted extensive experiments on various robot platforms to evaluate the importance of task diversity, the necessity of multi-embodiment data, and the effects of expert diversity.

💬 Research Conclusions:

– Task diversity is more critical than the number of demonstrations per task, aiding in the transfer to novel scenarios.

– Multi-embodiment data is not essential; high-quality single-embodiment data can enable effective cross-platform transfer.

– Expert diversity can hinder policy learning due to variations in demonstrations, prompting the need for a distribution debiasing method that improved performance by 15%.

👉 Paper link: https://huggingface.co/papers/2507.06219

11. Nile-Chat: Egyptian Language Models for Arabic and Latin Scripts

🔑 Keywords: Nile-Chat, Branch-Train-MiX, Egyptian dialect, MoE model, dual-script languages

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce Nile-Chat models that outperform existing multilingual and Arabic LLMs, specifically for Egyptian dialects in both Arabic and Latin scripts.

🛠️ Research Methods:

– Deploying the Branch-Train-MiX strategy to design and merge script-specialized experts into a single Mixture of Experts (MoE) model.

💬 Research Conclusions:

– The Nile-Chat models achieve significant performance improvements over leading models on Egyptian benchmarks, including a 14.4% performance gain on Latin-script tasks against Qwen2.5-14B-Instruct, offering a new methodology for adapting LLMs to dual-script languages.

👉 Paper link: https://huggingface.co/papers/2507.04569

12. GTA1: GUI Test-time Scaling Agent

🔑 Keywords: GUI, Test-time Scaling, Reinforcement Learning, Visual Grounding, State-of-the-art

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to tackle task planning ambiguity and improve visual grounding in GUI interactions using test-time scaling and reinforcement learning.

🛠️ Research Methods:

– The researchers employed a test-time scaling method to select optimal action proposals and utilized reinforcement learning to enhance the accuracy of grounding actions in complex interfaces.

💬 Research Conclusions:

– The proposed method achieved state-of-the-art performance on multiple benchmarks, emphasizing the effectiveness of test-time scaling and reinforcement learning in task execution within GUI environments.

👉 Paper link: https://huggingface.co/papers/2507.05791

13. Coding Triangle: How Does Large Language Model Understand Code?

🔑 Keywords: Large language models, Code Triangle framework, Editorial analysis, Code implementation, Test case generation

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To systematically evaluate large language models (LLMs) using the Code Triangle framework across editorial analysis, code implementation, and test case generation.

🛠️ Research Methods:

– Conducted extensive experiments on competitive programming benchmarks to analyze LLM capabilities and limitations in programming tasks.

💬 Research Conclusions:

– LLMs demonstrate consistent systems but lack diversity and robustness compared to human programmers.

– Identified significant distribution shifts due to training data biases and limited reasoning transfer.

– Suggested enhancements through human-generated content and model mixtures to improve LLM performance and robustness.

– Highlighted both consistency and inconsistency in LLM cognition that can facilitate self-reflection and self-improvement in coding models.

👉 Paper link: https://huggingface.co/papers/2507.06138

14. Critiques of World Models

🔑 Keywords: World Model, Artificial General Intelligence, Hypothetical Thinking, Generative Models

💡 Category: Foundations of AI

🌟 Research Objective:

– Critique existing schools of thought on world modeling to define the primary goal as simulating all actionable possibilities for reasoning and acting.

🛠️ Research Methods:

– Propose a new architecture featuring hierarchical, multi-level, mixed continuous/discrete representations.

– Integrate generative and self-supervised learning frameworks.

💬 Research Conclusions:

– Envision a Physical, Agentic, and Nested (PAN) AGI system enabled by the proposed world model.

👉 Paper link: https://huggingface.co/papers/2507.05169

15. Efficiency-Effectiveness Reranking FLOPs for LLM-based Rerankers

🔑 Keywords: LLM-based rerankers, E2R-FLOPs, efficiency-effectiveness tradeoff, FLOPs estimator

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper proposes E2R-FLOPs, a metric to evaluate LLM-based rerankers’ efficiency and effectiveness by measuring relevance and throughput per PetaFLOP.

🛠️ Research Methods:

– The study introduces hardware-agnostic metrics like RPP and QPP for evaluating rerankers and uses a FLOPs estimator for model assessments without experiments.

💬 Research Conclusions:

– Comprehensive experiments highlight the efficiency-effectiveness trade-off of various LLM-based rerankers, urging further research focus on this area.

👉 Paper link: https://huggingface.co/papers/2507.06223

16. SAMed-2: Selective Memory Enhanced Medical Segment Anything Model

🔑 Keywords: Medical Image Segmentation, Temporal Adapter, Confidence-driven Memory, AI in Healthcare

💡 Category: AI in Healthcare

🌟 Research Objective:

– To develop SAMed-2, a foundation model for medical image segmentation, by enhancing SAM-2 with features that address challenges unique to medical data.

🛠️ Research Methods:

– Integration of a temporal adapter in the image encoder and a confidence-driven memory mechanism to handle complex medical image data and mitigate catastrophic forgetting.

– Training and evaluation using the MedBank-100k dataset across multiple imaging modalities and tasks.

💬 Research Conclusions:

– SAMed-2 outperforms state-of-the-art baselines in multi-task scenarios, showcasing superior performance across both internal and external datasets.

👉 Paper link: https://huggingface.co/papers/2507.03698

17. PRING: Rethinking Protein-Protein Interaction Prediction from Pairs to Graphs

🔑 Keywords: Deep learning, Protein-Protein Interactions, Graph-level perspective, PRING, Biological network

💡 Category: Machine Learning

🌟 Research Objective:

– The research introduces PRING, a benchmark for evaluating protein-protein interaction (PPI) prediction from a graph-level perspective, emphasizing the importance of PPI network reconstruction.

🛠️ Research Methods:

– PRING curates a robust multi-species PPI network dataset, implementing strategies to tackle data redundancy and leakage, and establishes two evaluation paradigms: topology-oriented and function-oriented tasks.

💬 Research Conclusions:

– Extensive experiments reveal the limitations of current PPI models in capturing PPI networks’ structural and functional properties, indicating a gap in supporting real-world biological applications.

👉 Paper link: https://huggingface.co/papers/2507.05101

18. High-Resolution Visual Reasoning via Multi-Turn Grounding-Based Reinforcement Learning

🔑 Keywords: Multi-modal models, Reinforcement learning, Visual grounding, End-to-end framework

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research aims to enhance large multi-modal models’ capabilities to focus on critical visual regions without the need for additional grounding annotations, improving performance across various benchmarks.

🛠️ Research Methods:

– Utilizes an end-to-end reinforcement learning framework called Multi-turn Grounding-based Policy Optimization (MGPO), allowing models to automatically crop sub-images based on model-predicted grounding coordinates.

💬 Research Conclusions:

– MGPO significantly boosts grounding abilities, leading to a performance improvement over standard techniques, surpassing existing models such as OpenAI’s o1 and GPT-4o in out-of-distribution evaluations.

👉 Paper link: https://huggingface.co/papers/2507.05920

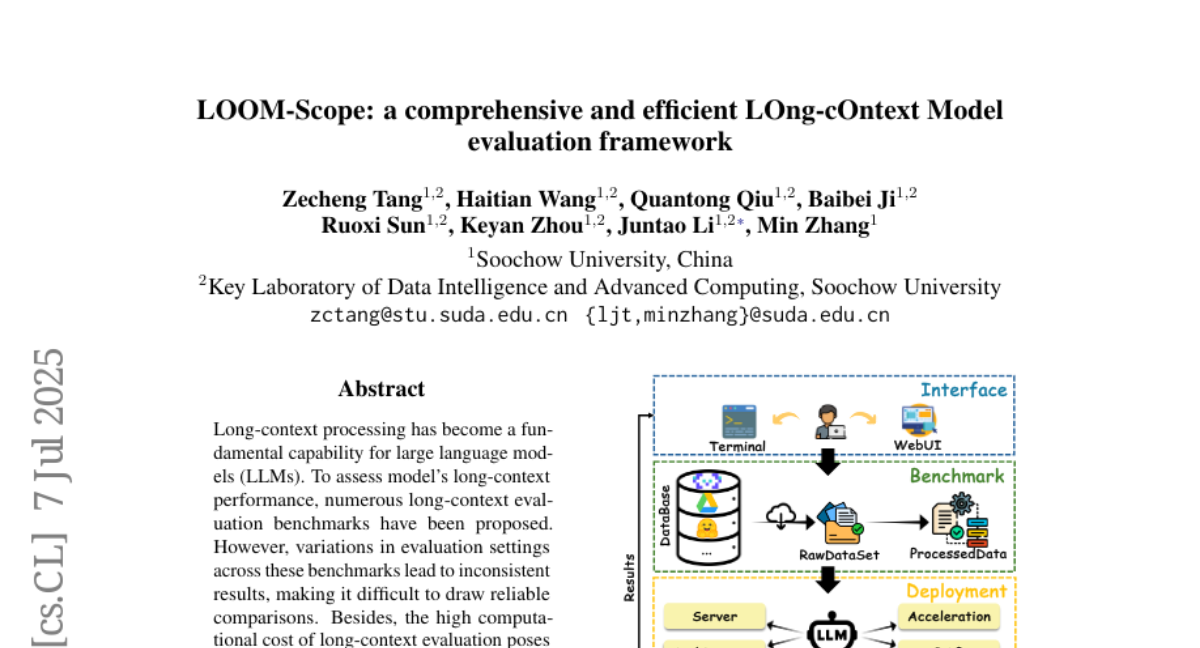

19. LOOM-Scope: a comprehensive and efficient LOng-cOntext Model evaluation framework

🔑 Keywords: Long-context processing, Evaluation benchmarks, LOOM-Scope

💡 Category: Natural Language Processing

🌟 Research Objective:

– To provide a standardized and efficient framework for assessing long-context performance in large language models.

🛠️ Research Methods:

– The development of LOOM-Scope, which standardizes evaluation settings and incorporates efficient inference acceleration methods.

💬 Research Conclusions:

– LOOM-Scope enables comprehensive and lightweight benchmarking of models, facilitating more reliable comparisons across various benchmarks.

👉 Paper link: https://huggingface.co/papers/2507.04723

20. Tora2: Motion and Appearance Customized Diffusion Transformer for Multi-Entity Video Generation

🔑 Keywords: motion-guided video generation, Tora2, decoupled personalization extractor, gated self-attention mechanism, contrastive loss

💡 Category: Generative Models

🌟 Research Objective:

– Tora2 is designed to enhance motion-guided video generation through improvements in appearance and motion customization, building upon the previous Tora model.

🛠️ Research Methods:

– Introduces a decoupled personalization extractor to generate comprehensive personalization embeddings, a gated self-attention mechanism for better integration of multimodal information, and a contrastive loss to optimize trajectory dynamics and entity consistency.

💬 Research Conclusions:

– Tora2 achieves competitive performance with state-of-the-art customization methods, providing advanced motion control capabilities and marking a significant advancement in multi-condition video generation.

👉 Paper link: https://huggingface.co/papers/2507.05963

21. Differential Mamba

🔑 Keywords: Mamba, differential mechanism, selective state-space layers, retrieval capabilities, AI-generated summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– This study aims to explore the application of differential design techniques to Mamba, improving its retrieval capabilities and performance while addressing overallocation issues.

🛠️ Research Methods:

– The paper introduces a novel differential mechanism for the Mamba architecture.

– Empirical validation is conducted on language modeling benchmarks.

– Extensive ablation studies and empirical analyses are performed to justify design choices.

💬 Research Conclusions:

– The study demonstrates that the novel differential mechanism significantly enhances retrieval capabilities and performance over the vanilla Mamba by addressing the overallocation problem effectively.

– The findings suggest that careful architectural modifications are necessary for the application of differential design techniques to Mamba-based models.

👉 Paper link: https://huggingface.co/papers/2507.06204