AI Native Daily Paper Digest – 20250710

1. 4KAgent: Agentic Any Image to 4K Super-Resolution

🔑 Keywords: agentic super-resolution, Profiling, Perception Agent, Restoration Agent, low-level vision tasks

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce 4KAgent, a comprehensive super-resolution system, to upscale any low-resolution image to 4K resolution and beyond.

🛠️ Research Methods:

– Utilize a three-component system: Profiling, Perception Agent using vision-language models, and a Restoration Agent following a recursive execution-reflection paradigm.

💬 Research Conclusions:

– Achieves state-of-the-art performance across 11 task categories and 26 benchmarks, improving both perceptual and fidelity metrics, especially in multiple image domains, and encourages innovation in vision-centric autonomous agents.

👉 Paper link: https://huggingface.co/papers/2507.07105

2. Go to Zero: Towards Zero-shot Motion Generation with Million-scale Data

🔑 Keywords: Zero-Shot Text-to-Motion Generation, MotionMillion, Scalable Architecture, Zero-Shot Generalization, AI-Generated Summary

💡 Category: Generative Models

🌟 Research Objective:

– To enhance zero-shot text-to-motion generation capabilities through the introduction of a large-scale dataset and a comprehensive evaluation framework.

🛠️ Research Methods:

– Development of an efficient annotation pipeline and creation of MotionMillion, the largest human motion dataset, alongside scaling a model to 7 billion parameters.

💬 Research Conclusions:

– Demonstrated strong generalization to out-of-domain and complex compositional motions, advancing towards robust zero-shot human motion generation.

👉 Paper link: https://huggingface.co/papers/2507.07095

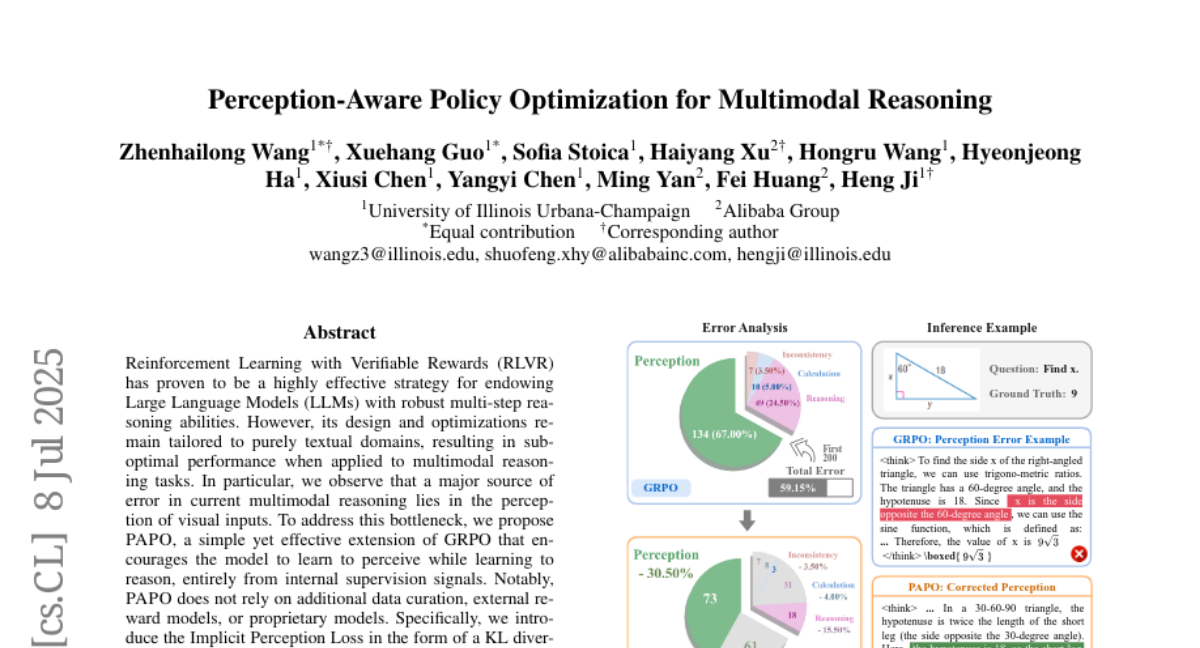

3. Perception-Aware Policy Optimization for Multimodal Reasoning

🔑 Keywords: Perception-Aware Policy Optimization, Reinforcement Learning with Verifiable Rewards, multimodal reasoning, Implicit Perception Loss, visually grounded reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Enhance reinforcement learning to improve visual perception and reasoning by integrating implicit perception loss.

🛠️ Research Methods:

– Introduce Perception-Aware Policy Optimization (PAPO) with an Implicit Perception Loss using KL divergence, without additional data curation or proprietary models.

💬 Research Conclusions:

– Achieved significant improvements in multimodal benchmarks, with a 4.4% overall enhancement and up to 8.0% in vision-dependent tasks, alongside a 30.5% reduction in perception errors. Identified and mitigated a loss hacking issue using Double Entropy Loss.

👉 Paper link: https://huggingface.co/papers/2507.06448

4. Rethinking Verification for LLM Code Generation: From Generation to Testing

🔑 Keywords: Human-LLM collaboration, Code-generation benchmarks, Test-case generation, Verifier accuracy, Reinforcement learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance code-generation test case generation, improving reliability and detection rates in code evaluation benchmarks using a human-LLM collaborative method called SAGA.

🛠️ Research Methods:

– Investigation of test-case generation using multi-dimensional metrics to quantify test-suite thoroughness, leveraging human programming expertise with LLM reasoning capability.

💬 Research Conclusions:

– The SAGA method demonstrates significant improvements with a detection rate of 90.62% and verifier accuracy of 32.58% on TCGBench. It outperforms existing benchmarks, showing a verifier accuracy improvement of 10.78% over LiveCodeBench-v6.

👉 Paper link: https://huggingface.co/papers/2507.06920

5. A Systematic Analysis of Hybrid Linear Attention

🔑 Keywords: linear attention mechanisms, hybrid architectures, selective gating, hierarchical recurrence, full attention layers

💡 Category: Natural Language Processing

🌟 Research Objective:

– To evaluate various linear attention models and their integration with full attention in Transformers, and to identify key mechanisms like selective gating and hierarchical recurrence that enhance recall performance.

🛠️ Research Methods:

– Systematic evaluation of 72 models trained and open-sourced, covering six linear attention variants across five hybridization ratios, with benchmarking on standard language modeling and recall tasks.

💬 Research Conclusions:

– Superior standalone linear models do not necessarily excel in hybrids. Enhanced recall is achieved with increased full attention layers, particularly below a 3:1 linear-to-full ratio, with selective gating and hierarchical recurrence being critical for effective hybrid models. Recommended architectures include HGRN-2 or GatedDeltaNet.

👉 Paper link: https://huggingface.co/papers/2507.06457

6. First Return, Entropy-Eliciting Explore

🔑 Keywords: FR3E, Reinforcement Learning, Large Language Models, structured exploration, reasoning trajectories

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper aims to enhance LLM reasoning by using a structured exploration framework called FR3E, tailored to identify and explore high-uncertainty points in reasoning processes.

🛠️ Research Methods:

– FR3E employs targeted rollouts to provide semantically grounded intermediate feedback, guiding the exploration without requiring dense supervision.

💬 Research Conclusions:

– Empirical results on mathematical reasoning benchmarks demonstrate that FR3E improves training stability, promotes the generation of coherent and lengthier responses, and increases the proportion of fully correct reasoning trajectories.

👉 Paper link: https://huggingface.co/papers/2507.07017

7. AutoTriton: Automatic Triton Programming with Reinforcement Learning in LLMs

🔑 Keywords: Reinforcement Learning, Triton, AutoTriton, Kernel Performance, AI Systems

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce AutoTriton, a model designed to optimize Triton programming using reinforcement learning for enhancing kernel performance in AI systems.

🛠️ Research Methods:

– Implements supervised fine-tuning (SFT) and reinforcement learning with Group Relative Policy Optimization (GRPO) algorithm for tuning Triton programming parameters.

💬 Research Conclusions:

– AutoTriton matches the performance of mainstream large models, demonstrating the effectiveness of reinforcement learning in generating high-performance kernels. This advancement lays the groundwork for constructing more efficient AI systems.

👉 Paper link: https://huggingface.co/papers/2507.05687

8. Towards Solving More Challenging IMO Problems via Decoupled Reasoning and Proving

🔑 Keywords: Automated Theorem Proving, Large Language Models, informal reasoning, Prover, modular design

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The goal is to enhance formal proving performance in Automated Theorem Proving (ATP) by decoupling reasoning and proving to solve complex mathematical challenges, especially International Mathematical Olympiad (IMO) problems.

🛠️ Research Methods:

– A novel framework is introduced that separates high-level reasoning from proof generation using two specialized models: a general-purpose Reasoner for generating strategic subgoal lemmas and an efficient Prover for verification, evaluated on post-2000 IMO problems.

💬 Research Conclusions:

– The decoupled framework successfully solves 5 challenging IMO problems, showcasing a substantial advancement in automated reasoning for difficult mathematical challenges, with released datasets facilitating future research.

👉 Paper link: https://huggingface.co/papers/2507.06804

9. A Survey on Vision-Language-Action Models for Autonomous Driving

🔑 Keywords: Vision-Language-Action, Autonomous Driving, Multimodal Large Language Models, AI-generated summary, Architectural Components

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To provide a comprehensive overview of Vision-Language-Action paradigms for Autonomous Driving, highlighting architectural components, model evolution, and future challenges.

🛠️ Research Methods:

– Formalizing architectural building blocks, tracing the evolution of VLA models, and comparing over 20 models in the domain of autonomous driving. Additionally, consolidating existing datasets and benchmarks.

💬 Research Conclusions:

– Offers insights into the progress and fragmentation in VLA for autonomous driving, presents consolidated datasets and benchmarks for driving safety, accuracy, and explanation quality, and highlights open challenges like robustness and real-time efficiency.

👉 Paper link: https://huggingface.co/papers/2506.24044

10. DiffSpectra: Molecular Structure Elucidation from Spectra using Diffusion Models

🔑 Keywords: DiffSpectra, Diffusion Models, SE(3)-equivariant architecture, SpecFormer, Multi-Modal Spectral Reasoning

💡 Category: Generative Models

🌟 Research Objective:

– To develop a generative framework, DiffSpectra, for accurately inferring both 2D and 3D molecular structures from multi-modal spectral data.

🛠️ Research Methods:

– Utilization of diffusion models with SE(3)-equivariant architecture and a SpecFormer spectral encoder to integrate topological and geometric information while capturing intra- and inter-spectral dependencies.

💬 Research Conclusions:

– DiffSpectra demonstrates high accuracy with 16.01% top-1 and 96.86% top-20 accuracy rates, benefiting significantly from 3D geometric modeling and multi-modal conditioning, marking it as the first framework to unify multi-modal spectral reasoning and joint 2D/3D generative modeling for de novo molecular structure elucidation.

👉 Paper link: https://huggingface.co/papers/2507.06853

11. ModelCitizens: Representing Community Voices in Online Safety

🔑 Keywords: toxic language detection, conversational context, MODELCITIZENS, LLaMA and Gemma models, community-informed annotation

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to improve toxic language detection by incorporating diverse community perspectives and conversational context into models and datasets.

🛠️ Research Methods:

– Introduction of MODELCITIZENS, a dataset including social media posts and toxicity annotations across diverse groups, augmented with conversational scenarios.

– Development and fine-tuning of LLaMA- and Gemma-based models, specifically LLAMACITIZEN-8B and GEMMACITIZEN-12B, on the MODELCITIZENS dataset.

💬 Research Conclusions:

– The new models outperform existing systems like GPT-o4-mini by 5.5% in in-distribution evaluations, underlining the importance of community-informed annotations and context inclusion for effective content moderation.

👉 Paper link: https://huggingface.co/papers/2507.05455

12. SRT-H: A Hierarchical Framework for Autonomous Surgery via Language Conditioned Imitation Learning

🔑 Keywords: Hierarchical Framework, High-Level Task Planning, Surgical Autonomy, Minimally Invasive Procedure, Clinical Deployment

💡 Category: AI in Healthcare

🌟 Research Objective:

– The paper aims to develop a hierarchical framework that integrates high-level task planning with low-level trajectory generation to enhance the autonomy of surgical procedures.

🛠️ Research Methods:

– The research utilizes a hierarchical approach combining high-level policies for task planning in language space with low-level policies for robot trajectory generation, validated through ex vivo experiments on cholecystectomy.

💬 Research Conclusions:

– The proposed framework achieved a 100% success rate in autonomous surgical procedures on eight unseen gallbladders, demonstrating significant progress toward the clinical deployment of autonomous surgical systems.

👉 Paper link: https://huggingface.co/papers/2505.10251

13. Evaluating the Critical Risks of Amazon’s Nova Premier under the Frontier Model Safety Framework

🔑 Keywords: Nova Premier, Multimodal foundation model, Frontier Model Safety Framework, Automated AI R&D

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Evaluate Nova Premier, Amazon’s advanced multimodal foundation model, for safety under the Frontier Model Safety Framework across high-risk domains.

🛠️ Research Methods:

– Utilized automated benchmarks, expert red-teaming, and uplift studies to assess the safety of the model in domains like Chemical, Biological, Radiological & Nuclear, Offensive Cyber Operations, and Automated AI R&D.

💬 Research Conclusions:

– Nova Premier is deemed safe for public release, exceeding safety thresholds as evaluated at the 2025 Paris AI Safety Summit. Ongoing enhancements in safety evaluations and mitigation plans are mentioned for future frontier model risks.

👉 Paper link: https://huggingface.co/papers/2507.06260

14. PERK: Long-Context Reasoning as Parameter-Efficient Test-Time Learning

🔑 Keywords: PERK, Long-context reasoning, Meta-learning, Memory-intensive, Gradient updates

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study introduces PERK, a scalable approach to enhance long-context reasoning by utilizing parameter-efficient adapters during test time.

🛠️ Research Methods:

– The approach involves meta-training with two nested optimization loops; the inner loop encodes contexts into a low-rank adapter, while the outer loop uses the adapter to improve reasoning over encoded contexts.

💬 Research Conclusions:

– PERK significantly outperforms prompt-based methods in long-context reasoning tasks, showing substantial performance improvements, particularly for smaller models.

– While memory-intensive during training, PERK scales more efficiently during inference than traditional prompt-based methods.

👉 Paper link: https://huggingface.co/papers/2507.06415

15. Video-RTS: Rethinking Reinforcement Learning and Test-Time Scaling for Efficient and Enhanced Video Reasoning

🔑 Keywords: Video-RTS, Reinforcement Learning, Adaptive Test-Time Scaling, Data Efficiency

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to enhance video reasoning efficiency and accuracy using a novel approach called Video-RTS that reduces data and computational costs.

🛠️ Research Methods:

– The method integrates data-efficient reinforcement learning with a video-adaptive test-time scaling strategy to improve reasoning without extensive fine-tuning.

💬 Research Conclusions:

– Video-RTS delivers significant accuracy improvements over existing models, with minimal training data, demonstrated by surpassing benchmarks like Video-Holmes and MMVU.

👉 Paper link: https://huggingface.co/papers/2507.06485

16. Decoder-Hybrid-Decoder Architecture for Efficient Reasoning with Long Generation

🔑 Keywords: Gated Memory Unit, Hybrid Decoder Architectures, Differential Attention, State Space Models, AI-generated summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce the Gated Memory Unit (GMU) for efficient memory sharing in hybrid decoder architectures to enhance language modeling tasks.

🛠️ Research Methods:

– Implement GMUs in the SambaY architecture, a decoder-hybrid-decoder model, to share memory readout states and improve decoding efficiency without explicit positional encoding.

– Conduct extensive scaling experiments to demonstrate the model’s performance.

💬 Research Conclusions:

– SambaY significantly enhances decoding efficiency and boosts long-context performance while maintaining linear pre-filling time complexity.

– The model demonstrates superior performance on reasoning tasks and achieves up to 10x higher decoding throughput compared to a YOCO baseline.

– The training codebase is released openly for further research and development.

👉 Paper link: https://huggingface.co/papers/2507.06607

17. FlexOlmo: Open Language Models for Flexible Data Use

🔑 Keywords: FlexOlmo, distributed training, data-flexible inference, mixture-of-experts, data licensing

💡 Category: Natural Language Processing

🌟 Research Objective:

– The primary aim is to develop FlexOlmo, a language model that allows distributed training with data privacy, enabling data owners to maintain control over their datasets.

🛠️ Research Methods:

– Utilizes a mixture-of-experts architecture where individual experts are trained on closed datasets, integrated using domain-informed routing without joint training.

💬 Research Conclusions:

– FlexOlmo achieves significant performance improvements, with a 41% relative enhancement in task performance, allowing selective data inclusion based on licensing, and outperforming prior model merging methods by 10.1%.

👉 Paper link: https://huggingface.co/papers/2507.07024

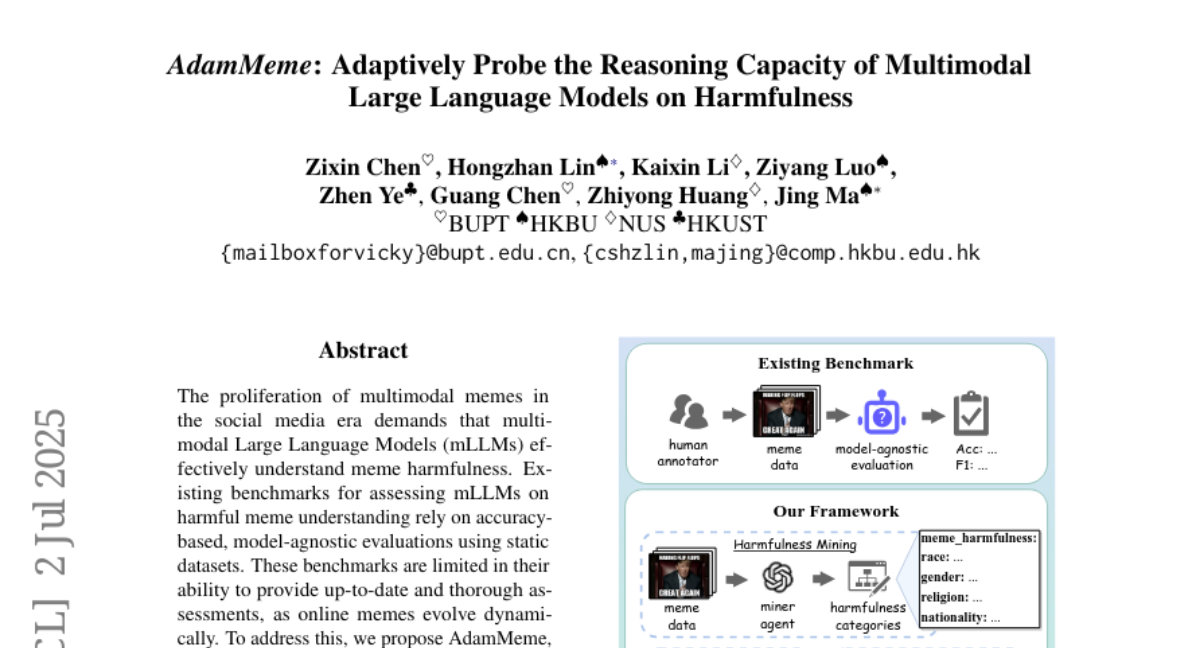

18. AdamMeme: Adaptively Probe the Reasoning Capacity of Multimodal Large Language Models on Harmfulness

🔑 Keywords: AdamMeme, Multimodal Large Language Models, agent-based framework, multi-agent collaboration, model-specific weaknesses

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop an adaptive agent-based framework, AdamMeme, for evaluating the understanding of harmful memes by multimodal Large Language Models.

🛠️ Research Methods:

– Utilization of multi-agent collaboration to iteratively update meme datasets, enabling in-depth evaluation of mLLMs’ capabilities and limitations in interpreting meme harmfulness.

💬 Research Conclusions:

– AdamMeme effectively exposes the specific weaknesses in different multimodal Large Language Models, providing comprehensive and fine-grained analysis of their performance on harmful meme interpretation.

👉 Paper link: https://huggingface.co/papers/2507.01702

19. Towards Multimodal Understanding via Stable Diffusion as a Task-Aware Feature Extractor

🔑 Keywords: Text-to-image diffusion models, CLIP, Instruction-aware, Visual encoder, Diffusion features

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Investigate whether pre-trained text-to-image diffusion models can serve as instruction-aware visual encoders to enhance image-based question-answering.

🛠️ Research Methods:

– Analyzed the internal representations of diffusion models for semantic richness and image-text alignment.

– Explored aligning diffusion features with large language models and addressed the leakage phenomenon with a mitigation strategy.

💬 Research Conclusions:

– Demonstrated that diffusion models complement CLIP, improving spatial and compositional reasoning in visual understanding tasks.

– Proposed a fusion strategy using both CLIP and conditional diffusion features, showing promise in VQA and MLLM benchmarks.

👉 Paper link: https://huggingface.co/papers/2507.07106

20.