AI Native Daily Paper Digest – 20250715

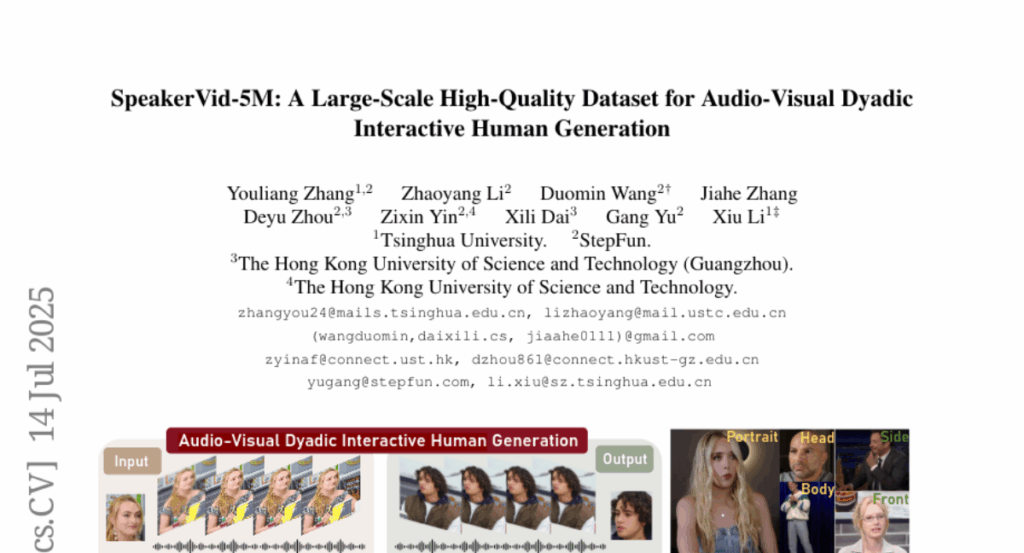

1. SpeakerVid-5M: A Large-Scale High-Quality Dataset for Audio-Visual Dyadic Interactive Human Generation

🔑 Keywords: SpeakerVid-5M, audio-visual, virtual human, large-scale dataset, dyadic interaction

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce SpeakerVid-5M, the first large-scale, high-quality dataset for audio-visual dyadic interactive virtual human generation.

🛠️ Research Methods:

– The dataset is categorized into four interaction types (dialogue branch, single branch, listening branch, and multi-turn branch) and divided into pre-training and high-quality subsets, with a focus on 2D virtual human tasks.

💬 Research Conclusions:

– SpeakerVid-5M provides a robust resource for virtual human tasks, alongside an autoregressive-based video chat baseline and benchmark VidChatBench. The dataset and processing code will be public, facilitating future research in this domain.

👉 Paper link: https://huggingface.co/papers/2507.09862

2. Reasoning or Memorization? Unreliable Results of Reinforcement Learning Due to Data Contamination

🔑 Keywords: LLM reasoning, Reinforcement Learning, reward signals, data contamination, synthetic datasets

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance reasoning capabilities of large language models using Reinforcement Learning (RL) with accurate reward signals, in the context of potential data contamination.

🛠️ Research Methods:

– Introduction of a generator to produce fully synthetic arithmetic problems, creating a clean dataset named RandomCalculation to evaluate the effects of reward signals without data contamination.

💬 Research Conclusions:

– Accurate reward signals consistently improve LLM performance, while noisy or incorrect signals do not.

– Studies indicate unreliability of current benchmarks due to data contamination, advocating for the use of uncontaminated benchmarks across diverse model families.

👉 Paper link: https://huggingface.co/papers/2507.10532

3. Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Computation

🔑 Keywords: Mixture-of-Recursions, Recursive Transformer, parameter efficiency, adaptive token-level thinking, few-shot accuracy

💡 Category: Natural Language Processing

🌟 Research Objective:

– The aim is to achieve both parameter and computational efficiency in large language models through shared layers and adaptive recursion depths.

🛠️ Research Methods:

– Introduced Mixture-of-Recursions (MoR), a framework combining parameter sharing and adaptive computation within Recursive Transformers. Utilizes lightweight routers for dynamic recursion depth assignments to tokens.

💬 Research Conclusions:

– Mixture-of-Recursions forms a new Pareto frontier, showing reduced validation perplexity and improved few-shot accuracy, indicating it as a cost-effective path towards enhanced large-model quality and throughput.

👉 Paper link: https://huggingface.co/papers/2507.10524

4. EmbRACE-3K: Embodied Reasoning and Action in Complex Environments

🔑 Keywords: Embodied Settings, Vision-Language Models, Spatial Reasoning, Long-Horizon Planning, Reinforcement Learning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The paper introduces EmRACE-3K, a dataset designed to evaluate the embodied reasoning capabilities of Vision-Language Models (VLMs) in settings that require spatial reasoning and long-horizon planning.

🛠️ Research Methods:

– The study benchmarks VLMs using EmRACE-3K, which features over 3,000 tasks in photorealistic environments involving navigation, object manipulation, and multi-stage goal execution. It assesses models under zero-shot settings and fine-tunes them using supervised and reinforcement learning.

💬 Research Conclusions:

– The research reveals the current limitations of VLMs in interactive environments, with models achieving below 20% success in zero-shot settings. However, fine-tuning with EmRACE-3K markedly improves their performance, showcasing the dataset’s value in advancing embodied reasoning.

👉 Paper link: https://huggingface.co/papers/2507.10548

5. REST: Stress Testing Large Reasoning Models by Asking Multiple Problems at Once

🔑 Keywords: Large Reasoning Models, REST, stress-testing framework, contextual priority allocation, cognitive load management

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Evaluate Large Reasoning Models under multi-context pressure to reveal performance differences not apparent in single-question tests.

🛠️ Research Methods:

– Introduction of REST, a stress-testing framework that concurrently challenges models with multiple problems to assess capabilities such as contextual priority allocation and cognitive load management.

💬 Research Conclusions:

– The study found that state-of-the-art models demonstrate significant performance degradation under REST, emphasizing REST’s superior ability to differentiate model performance beyond conventional benchmarks. Models using “long2short” technique showed better adaptation under stress, outperforming standard-trained peers.

👉 Paper link: https://huggingface.co/papers/2507.10541

6. LayerCake: Token-Aware Contrastive Decoding within Large Language Model Layers

🔑 Keywords: token-aware, layer-localized, contrastive decoding, factual accuracy, Large Language Models

💡 Category: Natural Language Processing

🌟 Research Objective:

– To improve factual accuracy in Large Language Models by using a token-aware, layer-localized contrastive decoding method.

🛠️ Research Methods:

– Introduced a method aligning specific token types with influential transformer layers, using empirical attention analysis to understand token attention dynamics.

– Implemented a strategy to selectively suppress attention to specific token types at respective depths without additional training or model modification.

💬 Research Conclusions:

– The proposed method consistently enhances factuality across multiple Large Language Models and various benchmarks, demonstrating improved controlled factual generation.

👉 Paper link: https://huggingface.co/papers/2507.04404

7. CompassJudger-2: Towards Generalist Judge Model via Verifiable Rewards

🔑 Keywords: Generalist Judge Model, Task-Driven, Verifiable Rewards, Margin Policy Gradient, Cross-Domain Judgment Accuracy

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce CompassJudger-2, a generalist judge model designed to overcome limitations of current judge models in terms of narrow specialization and robustness.

🛠️ Research Methods:

– Employ a task-driven, multi-domain data curation strategy.

– Implement supervision of judgment tasks using verifiable rewards and apply rejection sampling to guide critical reasoning.

– Utilize a refined learning objective with margin policy gradient loss to enhance judgment capabilities.

💬 Research Conclusions:

– CompassJudger-2 achieves superior results across multiple benchmarks, demonstrating competitive accuracy with larger models.

– Introduce JudgerBenchV2 as a comprehensive benchmark to evaluate cross-domain judgment accuracy and rank consistency, setting new standards for judge model evaluation.

👉 Paper link: https://huggingface.co/papers/2507.09104

8. MoVieS: Motion-Aware 4D Dynamic View Synthesis in One Second

🔑 Keywords: MoVieS, Gaussian primitives, time-varying motion, view synthesis, zero-shot applications

💡 Category: Computer Vision

🌟 Research Objective:

– The objective is to synthesize 4D dynamic novel views from monocular videos using a model that unifies the modeling of appearance, geometry, and motion.

🛠️ Research Methods:

– Utilizes a feed-forward model representing dynamic 3D scenes with pixel-aligned grids of Gaussian primitives, incorporating time-varying motion supervision to enable tasks like view synthesis and 3D point tracking.

💬 Research Conclusions:

– MoVieS achieves competitive performance across multiple tasks with significant speed improvements and supports zero-shot applications, validated by extensive experiments.

👉 Paper link: https://huggingface.co/papers/2507.10065

9. From KMMLU-Redux to KMMLU-Pro: A Professional Korean Benchmark Suite for LLM Evaluation

🔑 Keywords: Large Language Models, Benchmarks, KMMLU-Redux, KMMLU-Pro, Industrial Knowledge

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Korean expert-level benchmarks, KMMLU-Redux and KMMLU-Pro, to evaluate Large Language Models in both academic and industrial contexts.

🛠️ Research Methods:

– Reconstruct KMMLU-Redux from Korean National Technical Qualification exams, removing critical errors to improve reliability.

– Develop KMMLU-Pro based on Korean National Professional Licensure exams to represent professional knowledge.

💬 Research Conclusions:

– The benchmarks comprehensively represent industrial knowledge in Korea, enhancing the evaluation of LLMs’ applicability in real-world scenarios. The dataset is publicly released.

👉 Paper link: https://huggingface.co/papers/2507.08924

10. DreamPoster: A Unified Framework for Image-Conditioned Generative Poster Design

🔑 Keywords: Text-to-Image generation, Seedream3.0, data annotation pipeline, usability rate

💡 Category: Generative Models

🌟 Research Objective:

– Introduce DreamPoster, a framework for generating high-quality posters from images and text prompts with flexible resolution and layout.

🛠️ Research Methods:

– Develop a systematic data annotation pipeline to annotate textual content and typographic hierarchy in poster images.

– Implement a progressive training strategy using the Seedream3.0 model to enhance multi-task generation capabilities.

💬 Research Conclusions:

– DreamPoster outperforms existing methods, achieving a usability rate of 88.55%, significantly higher than GPT-4o and SeedEdit3.0.

– The framework is set to be available on platforms like Jimeng and other Bytedance Apps.

👉 Paper link: https://huggingface.co/papers/2507.04218

11. A Practical Two-Stage Recipe for Mathematical LLMs: Maximizing Accuracy with SFT and Efficiency with Reinforcement Learning

🔑 Keywords: Large Language Models, Mathematical Reasoning, Supervised Fine-Tuning, Reinforcement Learning, AI Mathematical Olympiad

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance the mathematical reasoning capabilities of Large Language Models (LLMs) through a combination of extended Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL) from online inference.

🛠️ Research Methods:

– A systematic integration of extended SFT and RL, specifically using a method called GRPO from online inference. The approach involves prolonging the SFT phase to maximize model accuracy before utilizing GRPO to improve token efficiency and optimize solution length.

💬 Research Conclusions:

– The methodology effectively achieves top-tier performance on mathematical benchmarks, including the AI Mathematical Olympiad, and presents a validated training blueprint for developing state-of-the-art mathematical reasoning models with high accuracy and efficiency. The entire framework, including code and training resources, will be open-sourced for reproducibility and future research.

👉 Paper link: https://huggingface.co/papers/2507.08267

12. Favicon Trojans: Executable Steganography Via Ico Alpha Channel Exploitation

🔑 Keywords: Executable Steganography, JavaScript Payloads, Favicon, Covert Channel, Alpha Transparency

💡 Category: Computer Vision

🌟 Research Objective:

– The study introduces a method for embedding and delivering self-decompressing JavaScript payloads within web browsers using the alpha transparency layer of ICO image files.

🛠️ Research Methods:

– Utilizes the least significant bit (LSB) of non-transparent alpha layer image values to conceal JavaScript code without affecting visual fidelity. Implements a proof-of-concept demonstrating this capability in a 64×64 ICO image, extracted and executed through native JavaScript APIs.

💬 Research Conclusions:

– Confirms successful and silent execution across multiple browsers. Highlights stealth attack surface that circumvents detection mechanisms like content security policies and antivirus scanners, offering insights into vulnerabilities in standard web behaviors.

👉 Paper link: https://huggingface.co/papers/2507.09074

13. Sound and Complete Neuro-symbolic Reasoning with LLM-Grounded Interpretations

🔑 Keywords: Large Language Models, Natural Language Understanding, Logical Consistency, Formal Semantics, Paraconsistent Logic

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The objective is to integrate Large Language Models (LLMs) into formal semantics for paraconsistent logic to maintain logical soundness and completeness while utilizing LLM’s extensive knowledge.

🛠️ Research Methods:

– The research introduces a method that incorporates an LLM into the interpretation function of formal semantics specifically for paraconsistent logic. This method is evaluated using datasets from short-form factuality benchmarks.

💬 Research Conclusions:

– The study presents a theoretical framework for neuro-symbolic reasoning that effectively harnesses an LLM’s knowledge, ensuring the preservation of soundness and completeness in logical systems.

👉 Paper link: https://huggingface.co/papers/2507.09751

14.