AI Native Daily Paper Digest – 20250716

1. Vision-Language-Vision Auto-Encoder: Scalable Knowledge Distillation from Diffusion Models

🔑 Keywords: Vision-Language Models, VLV auto-encoder, semantic understanding, fine-tuning, cost-efficiency

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce the Vision-Language-Vision (VLV) auto-encoder framework to create an efficient and cost-effective captioning system.

🛠️ Research Methods:

– Leverages pretrained components like vision encoders, Text-to-Image (T2I) diffusion models, and Large Language Models (LLMs) to construct a comprehensive captioning pipeline.

– Implements an information bottleneck by freezing the pretrained T2I diffusion decoder and fine-tunes an LLM for detailed description decoding.

💬 Research Conclusions:

– The method shows remarkable cost-efficiency and effectively reduces data requirements by primarily utilizing single-modal images and existing pretrained models, cutting down the need for large paired image-text datasets while maintaining high-quality semantic understanding.

👉 Paper link: https://huggingface.co/papers/2507.07104

2. EXAONE 4.0: Unified Large Language Models Integrating Non-reasoning and Reasoning Modes

🔑 Keywords: AI Native, multilingual capabilities, high performance, on-device applications, Reasoning mode

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce EXAONE 4.0, which combines non-reasoning and reasoning capabilities with multilingual features to enhance the functions of previous versions and prepare for the agentic AI era.

🛠️ Research Methods:

– Integration of both Non-reasoning and Reasoning modes, developing mid-size and small-size models for high-performance and on-device usage.

💬 Research Conclusions:

– EXAONE 4.0 exhibits superior performance to open-weight models and remains competitive against frontier-class models, providing accessible multilingual support for research purposes.

👉 Paper link: https://huggingface.co/papers/2507.11407

3. Scaling Laws for Optimal Data Mixtures

🔑 Keywords: Scaling laws, Large foundation models, Data mixtures, Performance prediction, Optimal domain weights

💡 Category: Foundations of AI

🌟 Research Objective:

– The study aims to propose a systematic method for determining the optimal data mixture for large foundation models using scaling laws, enhancing efficiency and accuracy across different domains and scales.

🛠️ Research Methods:

– The research employs scaling laws to predict model loss when trained with different domain data proportions, validated in extensive frameworks like large language models, native multimodal models, and large vision models.

💬 Research Conclusions:

– The study demonstrates the universality and predictive power of these scaling laws, showing their applicability in estimating performance for new data mixtures and scales, offering a cost-effective and reliable alternative to traditional trial-and-error approaches.

👉 Paper link: https://huggingface.co/papers/2507.09404

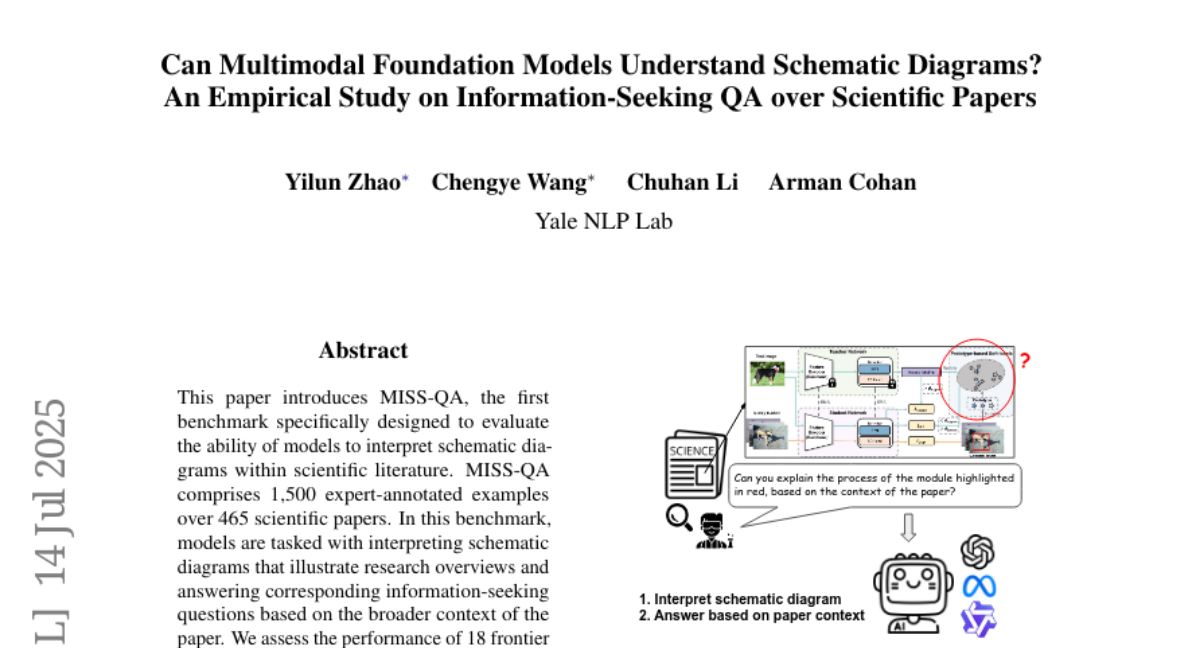

4. Can Multimodal Foundation Models Understand Schematic Diagrams? An Empirical Study on Information-Seeking QA over Scientific Papers

🔑 Keywords: MISS-QA, multimodal foundation models, schematic diagrams, scientific literature, error analysis

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce and evaluate MISS-QA, a benchmark designed to assess models’ abilities to interpret scientific schematic diagrams and answer related questions.

🛠️ Research Methods:

– Assessed 18 frontier multimodal foundation models using 1,500 expert-annotated examples from 465 scientific papers to identify performance gaps and insights for improvement.

💬 Research Conclusions:

– There exists a significant performance gap between current models and human experts, with insights gained from error analysis indicating strengths and limitations in model performance on schematic diagrams.

👉 Paper link: https://huggingface.co/papers/2507.10787

5. OpenCodeReasoning-II: A Simple Test Time Scaling Approach via Self-Critique

🔑 Keywords: Large Language Models, code generation, distillation, reasoning, dataset

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce OpenCodeReasoning-II, a large-scale dataset for enhancing code generation and critique tasks.

🛠️ Research Methods:

– Implement a two-stage supervised fine-tuning strategy focusing on code generation and the joint training of generation and critique models.

💬 Research Conclusions:

– The fine-tuned Qwen2.5-Instruct models deliver superior or on-par code generation performance compared to previous models, with notable improvements in competitive coding, and an extended C++ benchmark in LiveCodeBench for enhanced LLM evaluation.

👉 Paper link: https://huggingface.co/papers/2507.09075

6. Token-based Audio Inpainting via Discrete Diffusion

🔑 Keywords: Audio inpainting, Discrete diffusion modeling, Tokenized audio representations, Pre-trained audio tokenizer, Generative process

💡 Category: Generative Models

🌟 Research Objective:

– The study introduces a novel inpainting method for reconstructing long gaps in corrupted audio recordings using discrete diffusion modeling based on tokenized audio representations.

🛠️ Research Methods:

– The method involves operating over tokenized audio representations produced by a pre-trained audio tokenizer and evaluates the process on datasets like MusicNet and MTG for gap durations up to 500 ms.

💬 Research Conclusions:

– The proposed method demonstrates competitive or superior performance compared to existing baselines, particularly for longer gaps, providing a robust solution for restoring degraded musical recordings.

👉 Paper link: https://huggingface.co/papers/2507.08333

7. AgentsNet: Coordination and Collaborative Reasoning in Multi-Agent LLMs

🔑 Keywords: AgentsNet, Multi-Agent Systems, Self-Organization, Communication, Network Topology

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The objective of the research is to introduce AgentsNet, a benchmark designed to evaluate multi-agent systems’ capability to self-organize, communicate, and solve problems across various network sizes.

🛠️ Research Methods:

– The study utilizes AgentsNet, drawing from distributed systems and graph theory, to measure the ability of multi-agent systems to collaborate effectively, self-organize, and communicate within a given network topology.

💬 Research Conclusions:

– Some frontier LLMs perform well in small network settings but their efficacy declines as network size increases. AgentsNet supports scaling with systems involving up to 100 agents, exceeding the capacity of existing benchmarks which support only 2-5 agents.

👉 Paper link: https://huggingface.co/papers/2507.08616

8. LLMalMorph: On The Feasibility of Generating Variant Malware using Large-Language-Models

🔑 Keywords: Large Language Models, LLMalMorph, malware variants, syntactical code comprehension, antivirus engines

💡 Category: Generative Models

🌟 Research Objective:

– This research aims to explore the feasibility of utilizing Large Language Models (LLMs) to modify malware source code for generating new variants, potentially reducing detection rates by antivirus engines.

🛠️ Research Methods:

– The study introduces a semi-automated framework called LLMalMorph, which harnesses LLMs’ semantic and syntactical code comprehension. The framework uses function-level information from source code and custom-engineered prompts, along with strategically defined code transformations, to generate malware variants efficiently.

💬 Research Conclusions:

– Experimental results revealed that LLMalMorph can effectively reduce detection rates of generated malware variants while maintaining their functionalities. These variants also exhibited notable success against ML-based malware classifiers, highlighting both the potential and limitations of current LLM capabilities in malware variant generation.

👉 Paper link: https://huggingface.co/papers/2507.09411

9. Orchestrator-Agent Trust: A Modular Agentic AI Visual Classification System with Trust-Aware Orchestration and RAG-Based Reasoning

🔑 Keywords: Agentic AI, zero-shot visual classification, multimodal agents, trust-aware orchestration, Retrieval-Augmented Generation (RAG)

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop a modular Agentic AI framework that enhances trust and accuracy in zero-shot visual classification tasks, such as diagnosing apple leaf diseases.

🛠️ Research Methods:

– Integration of generalist multimodal agents with a non-visual reasoning orchestrator and a Retrieval-Augmented Generation (RAG) module.

– Benchmarking three configurations: zero-shot with confidence-based orchestration, fine-tuned agents, and trust-calibrated orchestration with CLIP-based image retrieval.

– Use of confidence calibration metrics (ECE, OCR, CCC) for modulating trust across agents.

💬 Research Conclusions:

– Achieved 77.94% accuracy improvement in zero-shot setting, reaching 85.63% overall accuracy through trust-aware orchestration and RAG.

– System supports scalability and interpretability, applicable to trust-critical domains such as diagnostics and biology.

– Full release of source code and components on GitHub for community benchmarking and transparency.

👉 Paper link: https://huggingface.co/papers/2507.10571

10. Planted in Pretraining, Swayed by Finetuning: A Case Study on the Origins of Cognitive Biases in LLMs

🔑 Keywords: Large language models, Cognitive biases, Pretraining, Finetuning, Instruction tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– To identify the main sources of cognitive biases in large language models, distinguishing the impact of pretraining from other factors like finetuning and training randomness.

🛠️ Research Methods:

– A two-step causal experimental approach is used: finetuning models multiple times with different random seeds, and introducing cross-tuning by swapping instruction datasets to isolate bias sources.

💬 Research Conclusions:

– The study finds that while training randomness introduces some variability, cognitive biases in large language models are primarily shaped by pretraining. Models with identical pretraining backbones exhibit similar bias patterns compared to those only sharing finetuning data.

👉 Paper link: https://huggingface.co/papers/2507.07186

11. UGC-VideoCaptioner: An Omni UGC Video Detail Caption Model and New Benchmarks

🔑 Keywords: UGC-VideoCap, AI-generated summary, omnimodal captioning, audio-visual integration, TikTok

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce UGC-VideoCap to improve detailed omnimodal captioning for user-generated videos by integrating audio-visual content effectively.

🛠️ Research Methods:

– Developed a benchmark using 1000 TikTok videos annotated through a human-in-the-loop pipeline, integrating audio and visual content.

– Proposed a 3B parameter captioning model, UGC-VideoCaptioner(3B), using a novel two-stage training strategy involving supervised fine-tuning and Group Relative Policy Optimization (GRPO).

💬 Research Conclusions:

– The benchmark and model set a foundation for advancing omnimodal video captioning in real-world user-generated content scenarios, providing a data-efficient solution with competitive performance.

👉 Paper link: https://huggingface.co/papers/2507.11336

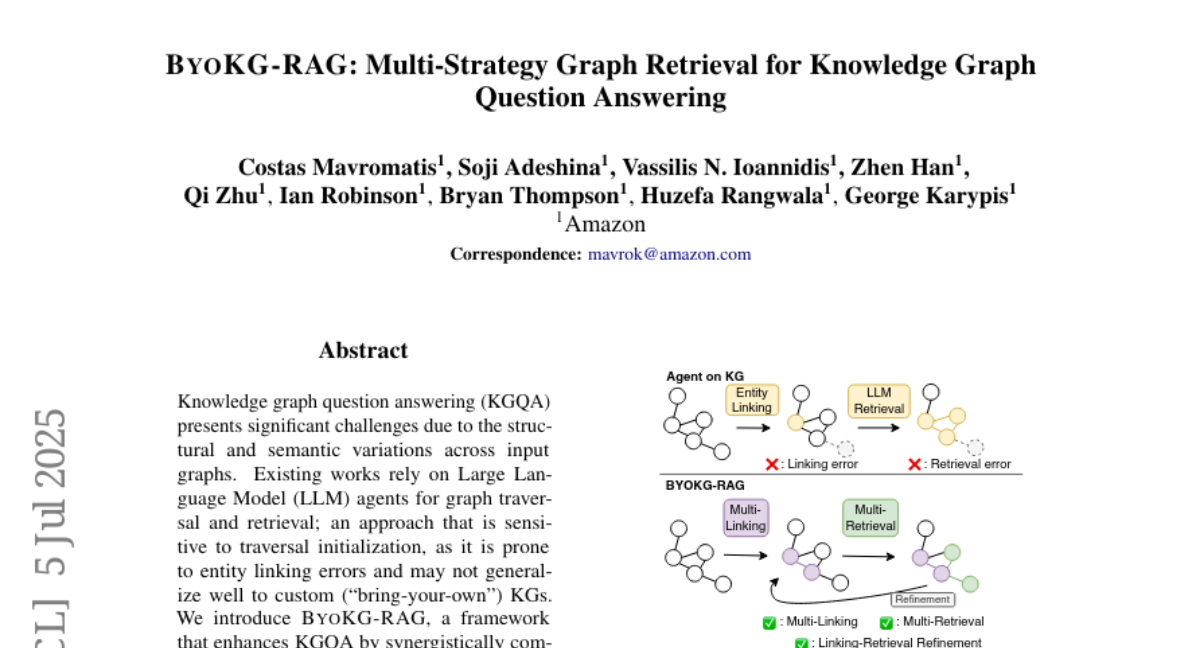

12. BYOKG-RAG: Multi-Strategy Graph Retrieval for Knowledge Graph Question Answering

🔑 Keywords: Knowledge graph question answering, Large Language Model, graph retrieval tools, BYOKG-RAG, custom KGs

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The research introduces BYOKG-RAG, a framework designed to enhance KGQA by combining LLMs with specialized graph retrieval tools to improve generalization and performance over custom knowledge graphs.

🛠️ Research Methods:

– BYOKG-RAG utilizes LLMs to generate crucial graph artifacts and specialized tools to link these artifacts to the knowledge graphs, allowing retrieval of relevant graph context to iteratively refine graph linking and retrieval.

💬 Research Conclusions:

– Experiments demonstrate that BYOKG-RAG outperforms existing graph retrieval methods by 4.5% and shows better generalization to custom KGs. The framework is open-sourced for wider accessibility and usage.

👉 Paper link: https://huggingface.co/papers/2507.04127

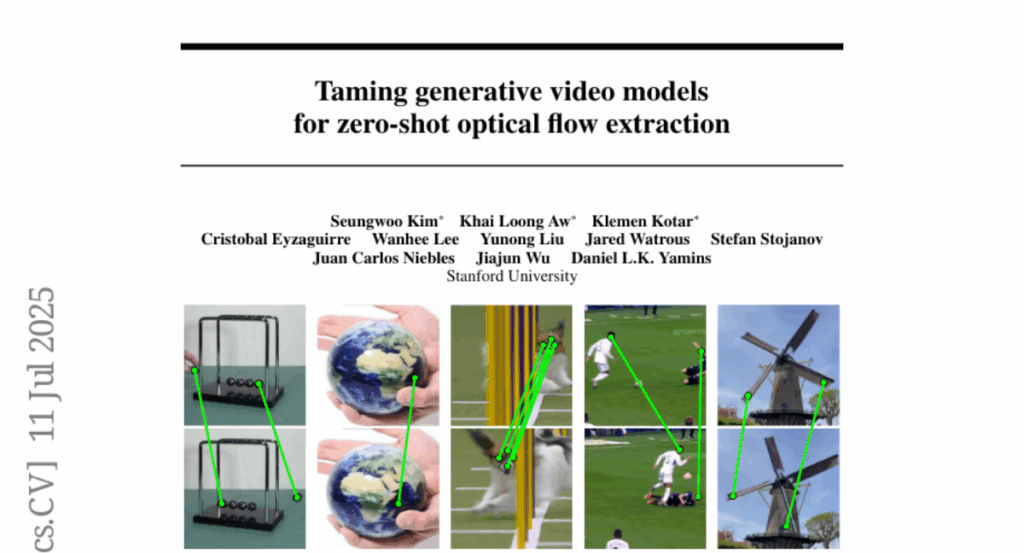

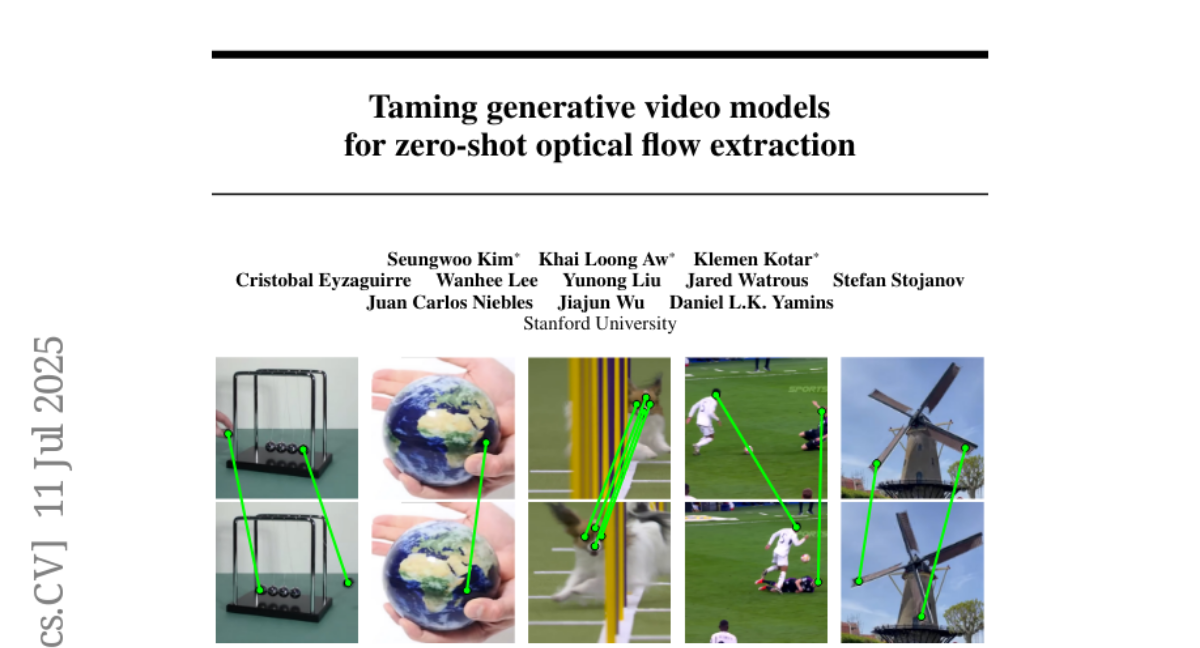

13. Taming generative video models for zero-shot optical flow extraction

🔑 Keywords: Optical Flow, Generative Video Models, KL-tracing, Counterfactual World Model

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to investigate if frozen self-supervised video models can generate optical flow without fine-tuning for future frame prediction.

🛠️ Research Methods:

– The researchers utilized a test-time procedure named KL-tracing, based on the Counterfactual World Model paradigm, applying it to the Local Random Access Sequence (LRAS) architecture to outperform existing methods on both real and synthetic datasets.

💬 Research Conclusions:

– The proposed method outperforms state-of-the-art models in generating high-quality flow, presenting a scalable alternative to traditional supervised approaches, achieving significant improvements on the TAP-Vid DAVIS and TAP-Vid Kubric datasets.

👉 Paper link: https://huggingface.co/papers/2507.09082

14.