AI Native Daily Paper Digest – 20250723

1. Beyond Context Limits: Subconscious Threads for Long-Horizon Reasoning

🔑 Keywords: Thread Inference Model, TIMRUN, long-horizon reasoning, reasoning trees, key-value states

💡 Category: Natural Language Processing

🌟 Research Objective:

– To overcome the context and memory limitations of large language models (LLMs) by proposing the Thread Inference Model (TIM) and its runtime (TIMRUN) for enhanced reasoning accuracy and efficiency.

🛠️ Research Methods:

– Introduces a reasoning framework using reasoning trees that model natural language tasks with thoughts, recursive subtasks, and conclusions to support virtually unlimited working memory and multi-hop tool calls.

💬 Research Conclusions:

– TIM and TIMRUN enhance inference throughput and deliver accurate reasoning, particularly in mathematical tasks and information retrieval, by sustaining a high manipulation capacity of GPU memory and maintaining relevant context tokens.

👉 Paper link: https://huggingface.co/papers/2507.16784

2. Step-Audio 2 Technical Report

🔑 Keywords: Multi-Modal Large Language Model, Automatic Speech Recognition, Reinforcement Learning, Retrieval-Augmented Generation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop Step-Audio 2, an end-to-end multi-modal model for advanced audio understanding and speech conversation.

🛠️ Research Methods:

– Integration of a latent audio encoder and reasoning-centric reinforcement learning.

– Use of retrieval-augmented generation (RAG) for improved responsiveness and mitigating hallucination.

💬 Research Conclusions:

– Step-Audio 2 delivers state-of-the-art performance in audio understanding and conversational benchmarks, surpassing existing solutions.

👉 Paper link: https://huggingface.co/papers/2507.16632

3. MegaScience: Pushing the Frontiers of Post-Training Datasets for Science Reasoning

🔑 Keywords: MegaScience, Scientific Reasoning, Dataset, Evaluation System, Llama3.1

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To fill the gap in open, large-scale scientific reasoning datasets by introducing MegaScience and TextbookReasoning to enhance AI model performance and training efficiency.

🛠️ Research Methods:

– Introduction of an extensive mixture of datasets totaling 1.25 million instances through systematic ablation studies.

– Development of a comprehensive evaluation system covering diverse subjects and benchmarks.

💬 Research Conclusions:

– MegaScience datasets achieve superior performance with increased efficiency and concise response lengths.

– Models like Llama3.1 and Qwen series trained on MegaScience significantly outperform corresponding official models.

– MegaScience shows a scaling benefit for scientific tuning, suggesting effectiveness for larger models.

👉 Paper link: https://huggingface.co/papers/2507.16812

4. Upsample What Matters: Region-Adaptive Latent Sampling for Accelerated Diffusion Transformers

🔑 Keywords: Diffusion transformers, Image and video generation, Region-Adaptive Latent Upsampling, Scalability, Temporal acceleration

💡 Category: Generative Models

🌟 Research Objective:

– To propose Region-Adaptive Latent Upsampling (RALU) as a framework to accelerate inference in diffusion transformers for high-fidelity image and video generation without degrading image quality.

🛠️ Research Methods:

– Implementation of a training-free, three-stage mixed-resolution sampling process involving low-resolution denoising, region-adaptive upsampling for artifact-prone areas, and full-resolution latent upsampling for detailed refinement.

💬 Research Conclusions:

– RALU significantly reduces computation by achieving up to 7.0x speed-up on FLUX and 3.0x on Stable Diffusion 3 while maintaining image quality. It is also complementary to existing temporal acceleration methods, allowing for further reduction in inference latency.

👉 Paper link: https://huggingface.co/papers/2507.08422

5. Zebra-CoT: A Dataset for Interleaved Vision Language Reasoning

🔑 Keywords: Visual Chain of Thought, Multimodal Models, Zebra-CoT, Fine-tuning, Visual Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to address challenges in training multimodal models using a new dataset, Zebra-CoT, for enhancing visual chain of thought (Visual CoT) performance.

🛠️ Research Methods:

– Introduced a large-scale dataset with 182,384 samples of interleaved text-image reasoning traces, focusing on tasks like scientific questions, 2D and 3D reasoning tasks, and strategic games.

– Fine-tuned models such as Anole-7B and Bagel-7B on the Zebra-CoT dataset to improve accuracy and performance in visual reasoning tasks.

💬 Research Conclusions:

– Fine-tuning on the Zebra-CoT dataset improves test-set accuracy by +12% and increases performance by up to +13% on standard benchmarks.

– The open-sourcing of the dataset and models supports further development and evaluation of multimodal reasoning abilities in Visual CoT.

👉 Paper link: https://huggingface.co/papers/2507.16746

6. Semi-off-Policy Reinforcement Learning for Vision-Language Slow-thinking Reasoning

🔑 Keywords: Vision-Language models, Reinforcement Learning, slow-thinking reasoning, SOPHIA, multimodal tasks

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance large vision-language models (LVLMs) with slow-thinking reasoning capability for solving complex multimodal tasks.

🛠️ Research Methods:

– The paper introduces SOPHIA, a Semi-Off-Policy RL framework that combines on-policy visual understanding from LVLMs with off-policy slow-thinking reasoning from a language model, using outcome-based and propagated visual rewards.

💬 Research Conclusions:

– SOPHIA significantly improves the performance of large LVLMs such as InternVL3.0, achieving state-of-the-art results on various multimodal reasoning benchmarks and outperforming some closed-source models like GPT-4.1, by offering better policy initialization over traditional methods.

👉 Paper link: https://huggingface.co/papers/2507.16814

7. ThinkAct: Vision-Language-Action Reasoning via Reinforced Visual Latent Planning

🔑 Keywords: Vision-language-action, Reinforced visual latent planning, Few-shot adaptation, Long-horizon planning, AI Native

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance vision-language-action tasks by developing ThinkAct, a dual-system framework that integrates high-level reasoning with robust action execution.

🛠️ Research Methods:

– ThinkAct employs reinforced visual latent planning that trains a multimodal large language model to generate embodied reasoning plans, guided by action-aligned visual rewards for improved planning and adaptation.

💬 Research Conclusions:

– Experiments demonstrate that ThinkAct supports few-shot adaptation, enables long-horizon planning, and promotes self-correction behaviors in complex embodied AI tasks.

👉 Paper link: https://huggingface.co/papers/2507.16815

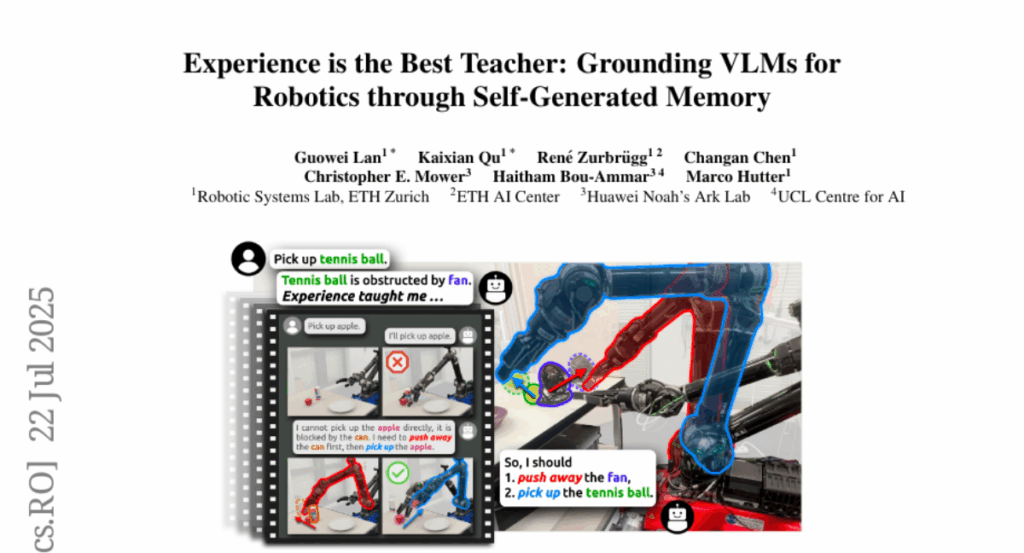

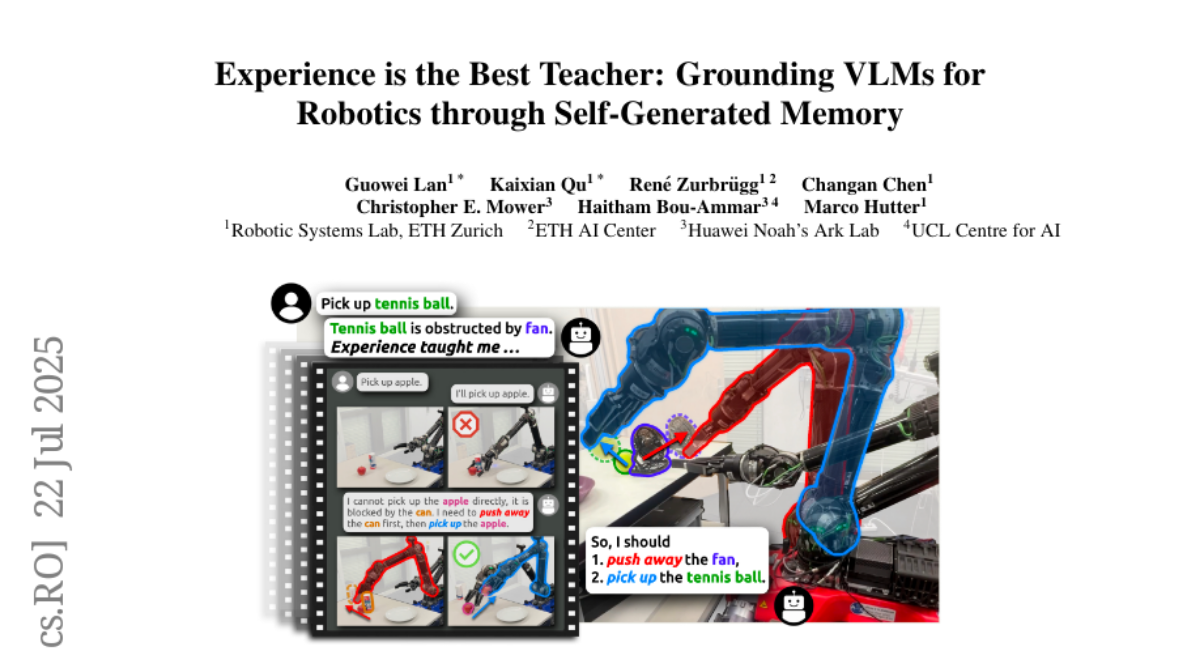

8. Experience is the Best Teacher: Grounding VLMs for Robotics through Self-Generated Memory

🔑 Keywords: Vision-language models, Robotics, Autonomous planning, ExpTeach, Long-term memory

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop a framework (ExpTeach) that grounds Vision-Language Models (VLMs) to diverse real-world robots by building self-generated memory of real-world experiences.

🛠️ Research Methods:

– The framework enables VLMs to autonomously plan actions, verify outcomes, reflect on failures, and adapt robot behaviors in a closed loop. It incorporates a retrieval-augmented generation (RAG) mechanism and an on-demand image annotation module.

💬 Research Conclusions:

– Experiments demonstrate significant improvements in task success rates, showcasing the framework’s effectiveness and generalizability, especially in enhancing intelligent object interactions and achieving high single-trial success rates in real-world tests.

👉 Paper link: https://huggingface.co/papers/2507.16713

9. RefCritic: Training Long Chain-of-Thought Critic Models with Refinement Feedback

🔑 Keywords: Large Language Models, Critic Modules, Reinforcement Learning, Model Refinement

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Develop effective critic modules to enhance critique capabilities of Large Language Models using a novel approach called RefCritic

🛠️ Research Methods:

– Introduced RefCritic, a long-chain-of-thought critic module employing reinforcement learning with dual rule-based rewards for solution judgment correctness and refinement accuracies

💬 Research Conclusions:

– RefCritic consistently outperforms traditional methods in critique and refinement across multiple benchmarks, showing notable gains and effectiveness in guiding model refinement

👉 Paper link: https://huggingface.co/papers/2507.15024

10. HOComp: Interaction-Aware Human-Object Composition

🔑 Keywords: HOComp, MLLMs, human-object interactions, Region-based Pose Guidance, Detail-Consistent Appearance Preservation

💡 Category: Computer Vision

🌟 Research Objective:

– The research introduces HOComp, a novel method aimed at facilitating seamless human-object interactions within image compositing, ensuring consistent appearances.

🛠️ Research Methods:

– The research implements MLLMs-driven Region-based Pose Guidance to identify and constrain interaction regions and types, and employs Detail-Consistent Appearance Preservation to maintain shape and texture consistency through advanced attention mechanisms and loss strategies.

💬 Research Conclusions:

– HOComp successfully enhances human-object interaction compositions with consistency and outperforms existing methods both qualitatively and quantitatively, demonstrated through experiments on the newly proposed IHOC dataset.

👉 Paper link: https://huggingface.co/papers/2507.16813

11. SPAR: Scholar Paper Retrieval with LLM-based Agents for Enhanced Academic Search

🔑 Keywords: Large Language Models, Scholarly Retrieval, RefChain, Query Decomposition

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce SPAR, a multi-agent framework that enhances flexibility and effectiveness in academic literature retrieval using LLMs.

🛠️ Research Methods:

– Incorporates RefChain-based query decomposition and query evolution

– Constructs SPARBench, a benchmark with expert-annotated relevance labels

💬 Research Conclusions:

– SPAR significantly outperforms existing strong baselines, achieving up to +56% F1 on AutoScholar and +23% F1 on SPARBench.

– SPAR and SPARBench provide a scalable, interpretable, and high-performing foundation for research in scholarly retrieval.

👉 Paper link: https://huggingface.co/papers/2507.15245

12. PrefPalette: Personalized Preference Modeling with Latent Attributes

🔑 Keywords: PrefPalette, attribute dimensions, preference prediction, social community values, human-interpretable

💡 Category: Human-AI Interaction

🌟 Research Objective:

– The paper introduces PrefPalette, a framework that decomposes user preferences into comprehensible attribute dimensions and aligns predictions with the values of different social communities.

🛠️ Research Methods:

– PrefPalette employs counterfactual attribute synthesis to generate synthetic data and uses attention-based preference modeling to understand attribute weighting within social communities.

💬 Research Conclusions:

– PrefPalette enhances prediction accuracy by 46.6% over GPT-4o and provides community-specific insights, highlighting differing preference priorities among scholarly, conflict-oriented, and support-based communities.

👉 Paper link: https://huggingface.co/papers/2507.13541

13. Task-Specific Zero-shot Quantization-Aware Training for Object Detection

🔑 Keywords: Zero-shot Quantization, Synthetic Data, Object Detection, Task-specific

💡 Category: Computer Vision

🌟 Research Objective:

– The paper introduces a novel task-specific Zero-shot Quantization (ZSQ) framework for enhancing object detection networks without the need for real training data.

🛠️ Research Methods:

– Two key stages are proposed: a strategy for synthesizing a task-specific calibration set using bounding box and category sampling, and integrating task-specific training into the knowledge distillation process.

💬 Research Conclusions:

– The proposed method demonstrates efficient and state-of-the-art performance on the MS-COCO and Pascal VOC datasets, highlighting its effectiveness in restoring quantized detection networks.

👉 Paper link: https://huggingface.co/papers/2507.16782

14. Does More Inference-Time Compute Really Help Robustness?

🔑 Keywords: Inference-time computation, Open-source models, Security risk, Reasoning chains, AI Native

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To explore the impact of increasing inference-time computation on the robustness of smaller-scale, open-source reasoning models.

🛠️ Research Methods:

– Utilized a budget forcing strategy to examine inference-time scaling in models like DeepSeek R1 and Qwen3, and evaluated the assumption of hidden intermediate reasoning steps.

💬 Research Conclusions:

– Identified a security risk in exposing intermediate reasoning steps leading to reduced robustness due to an inverse scaling law. Highlighted the necessity for careful consideration of adversarial settings before deploying inference-time scaling in security-sensitive applications.

👉 Paper link: https://huggingface.co/papers/2507.15974

15. ObjectGS: Object-aware Scene Reconstruction and Scene Understanding via Gaussian Splatting

🔑 Keywords: ObjectGS, 3D scene reconstruction, semantic understanding, neural Gaussians, mesh extraction

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to unify 3D scene reconstruction with semantic understanding by proposing ObjectGS, an object-aware framework.

🛠️ Research Methods:

– ObjectGS models individual objects as neural Gaussians and dynamically manages these anchors through growth and pruning, utilizing one-hot ID encoding with classification loss for semantic constraints.

💬 Research Conclusions:

– ObjectGS outperforms state-of-the-art methods in segmentation tasks and integrates effectively with applications like mesh extraction and scene editing.

👉 Paper link: https://huggingface.co/papers/2507.15454

16. Steering Out-of-Distribution Generalization with Concept Ablation Fine-Tuning

🔑 Keywords: Concept Ablation Fine-Tuning, interpretability tools, large language models, fine-tuning, misaligned responses

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce Concept Ablation Fine-Tuning (CAFT) to control LLMs’ generalization without altering training data.

🛠️ Research Methods:

– Utilize interpretability tools and linear projections to ablate undesired concepts during fine-tuning.

💬 Research Conclusions:

– CAFT significantly reduces misaligned responses by 10x, maintaining performance on training distribution.

👉 Paper link: https://huggingface.co/papers/2507.16795

17.