AI Native Daily Paper Digest – 20250724

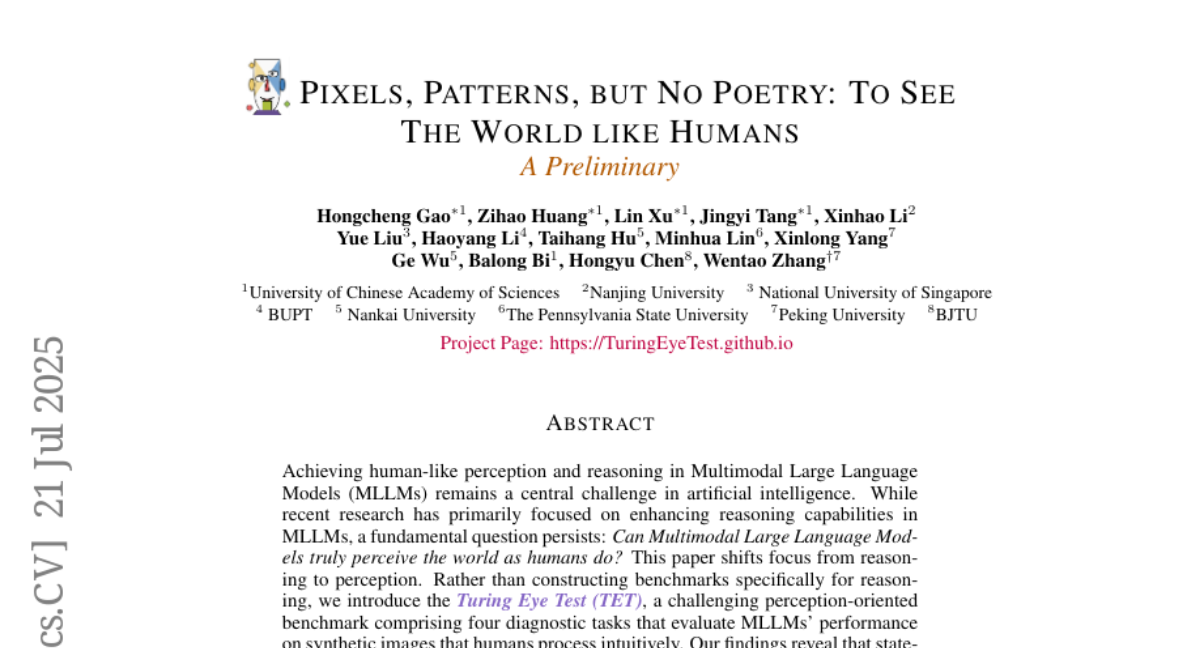

1. Pixels, Patterns, but No Poetry: To See The World like Humans

🔑 Keywords: Turing Eye Test, Multimodal Large Language Models, AI Native, Vision Tower, Human Perception

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To evaluate the perceptual abilities of Multimodal Large Language Models (MLLMs) compared to human perception, using the Turing Eye Test.

🛠️ Research Methods:

– Introduction of a perception-oriented benchmark called the Turing Eye Test, which includes four diagnostic tasks assessing MLLMs’ performance on synthetic images.

💬 Research Conclusions:

– Current state-of-the-art MLLMs face significant challenges in perceptual tasks that are trivial for humans.

– In-context learning and language backbone training do not improve MLLMs’ performance on these tasks, while fine-tuning the vision tower shows promise for adaptation, highlighting a key gap in vision tower generalization.

👉 Paper link: https://huggingface.co/papers/2507.16863

2. Yume: An Interactive World Generation Model

🔑 Keywords: Masked Video Diffusion Transformer, AI-generated, model acceleration, Anti-Artifact Mechanism, Time Travel Sampling

💡 Category: Generative Models

🌟 Research Objective:

– The project aims to create an interactive, realistic, and dynamic video world from images, text, or videos, allowing users to explore and control it via peripheral devices or neural signals.

🛠️ Research Methods:

– A framework comprising camera motion quantization, a video generation architecture, an advanced sampler, and model acceleration was introduced.

– Utilization of Masked Video Diffusion Transformer with a memory module for infinite video generation.

– Anti-Artifact Mechanism and Time Travel Sampling using Stochastic Differential Equations to enhance visual quality and control.

– Model acceleration through adversarial distillation and caching mechanisms.

💬 Research Conclusions:

– The method achieves impressive results in diverse scenes and applications and the data, code, and models are made publicly available for ongoing monthly updates.

👉 Paper link: https://huggingface.co/papers/2507.17744

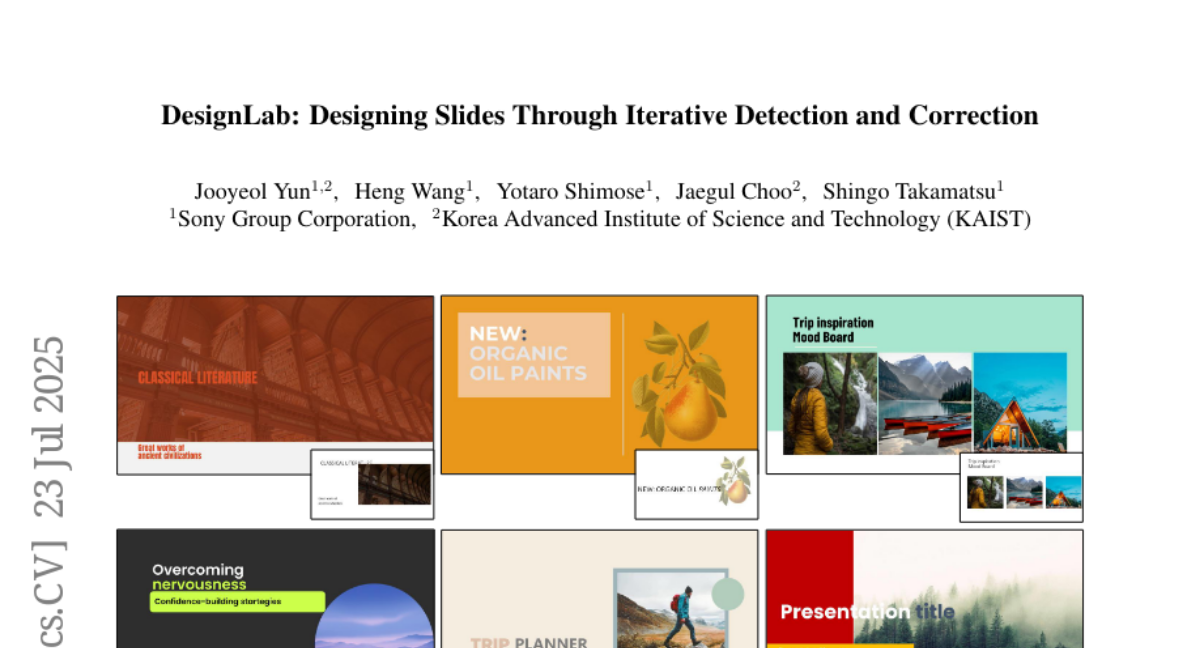

3. DesignLab: Designing Slides Through Iterative Detection and Correction

🔑 Keywords: DesignLab, large language models, design reviewer, design contributor

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To develop an iterative design tool, DesignLab, for enhancing presentation slide creation by separating the process into roles: design reviewer and design contributor.

🛠️ Research Methods:

– DesignLab fine-tunes large language models to simulate intermediate drafts and introduce controlled perturbations, allowing the roles to learn from design errors and corrections.

💬 Research Conclusions:

– DesignLab outperforms existing design-generation tools, including commercial options, by embracing an iterative process that results in highly polished and professional slides.

👉 Paper link: https://huggingface.co/papers/2507.17202

4. RAVine: Reality-Aligned Evaluation for Agentic Search

🔑 Keywords: RAVine, agentic search, iterative process, evaluation frameworks, agentic LLMs

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To propose RAVine, a novel evaluation framework aimed at improving the assessment of agentic search systems by aligning evaluations with realistic user queries and enhancing fine-grained accuracy.

🛠️ Research Methods:

– Implementation of RAVine focusing on multi-point queries and long-form answers, introducing an attributable ground truth construction to improve evaluation accuracy, and evaluating models’ iterative processes and interaction with search tools.

💬 Research Conclusions:

– RAVine offers a set of advancements that can drive improvements in agentic search systems, providing insights through benchmarking various models, with publicly available code and datasets to aid further research and development.

👉 Paper link: https://huggingface.co/papers/2507.16725

5. Can One Domain Help Others? A Data-Centric Study on Multi-Domain Reasoning via Reinforcement Learning

🔑 Keywords: Reinforcement Learning, Verifiable Rewards, Multi-Domain Reasoning, GRPO algorithm, Curriculum Learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to systematically investigate multi-domain reasoning within the Reinforcement Learning with Verifiable Rewards (RLVR) framework, focusing on mathematical reasoning, code generation, and logical puzzle solving.

🛠️ Research Methods:

– The research utilizes the GRPO algorithm and the Qwen-2.5-7B model family to evaluate improvements in domain-specific and cross-domain generalization capabilities.

– An exploration of interactions during combined cross-domain training is performed to identify mutual enhancements and conflicts.

– Analysis of performance differences between base and instruct models under identical RL configurations is conducted.

– The study delves into RL training aspects, examining curriculum learning strategies, reward design variations, and language-specific factors systematically.

💬 Research Conclusions:

– The results provide significant insights into domain interaction dynamics, revealing key factors that influence specialized and generalizable reasoning performance.

– These insights guide the optimization of RL methodologies, enhancing comprehensive, multi-domain reasoning capabilities in LLMs.

👉 Paper link: https://huggingface.co/papers/2507.17512

6. Re:Form — Reducing Human Priors in Scalable Formal Software Verification with RL in LLMs: A Preliminary Study on Dafny

🔑 Keywords: Formal language-based reasoning, Reinforcement Learning, Dafny, Auto-formalized specifications, Generalization

💡 Category: Generative Models

🌟 Research Objective:

– To enhance the reliability and scalability of Large Language Models (LLMs) in generating verifiable programs using formal language-based reasoning.

🛠️ Research Methods:

– Implemented a systematic pipeline using Dafny as the main environment for reducing human priors and integrated automatic and scalable data curation with RL designs.

💬 Research Conclusions:

– Developed a benchmark called DafnyComp for evaluating compositional formal programs, demonstrating that even small models can generate valid and verifiable Dafny code, surpassing proprietary models through supervised fine-tuning and RL with regularization.

👉 Paper link: https://huggingface.co/papers/2507.16331

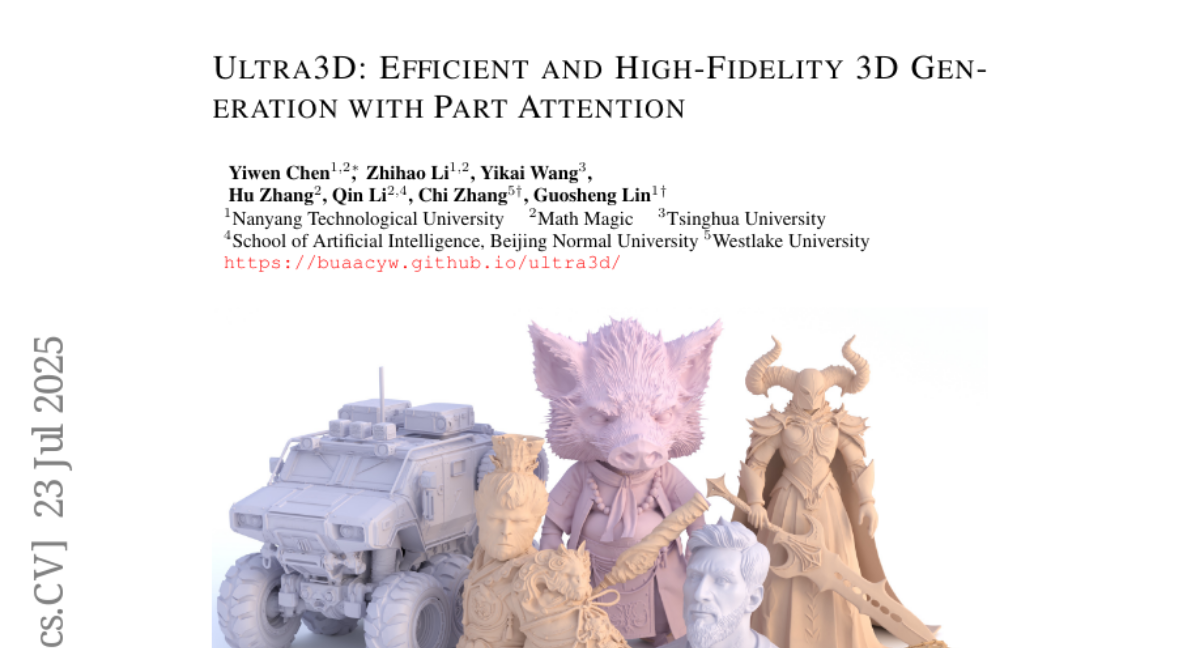

7. Ultra3D: Efficient and High-Fidelity 3D Generation with Part Attention

🔑 Keywords: Ultra3D, sparse voxel representations, VecSet, Part Attention, geometry-aware localized attention

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to accelerate 3D voxel generation while maintaining high quality and resolution using the Ultra3D framework.

🛠️ Research Methods:

– Ultra3D leverages VecSet for efficient coarse object layout generation, reducing token count and accelerating voxel prediction.

– Utilizes Part Attention for refining per-voxel latent features by implementing geometry-aware localized attention within semantically consistent regions.

💬 Research Conclusions:

– Ultra3D achieves up to 6.7x speed-up in latent generation and supports high-resolution 3D generation at 1024 resolution, setting a new state-of-the-art in visual fidelity and user preference.

👉 Paper link: https://huggingface.co/papers/2507.17745

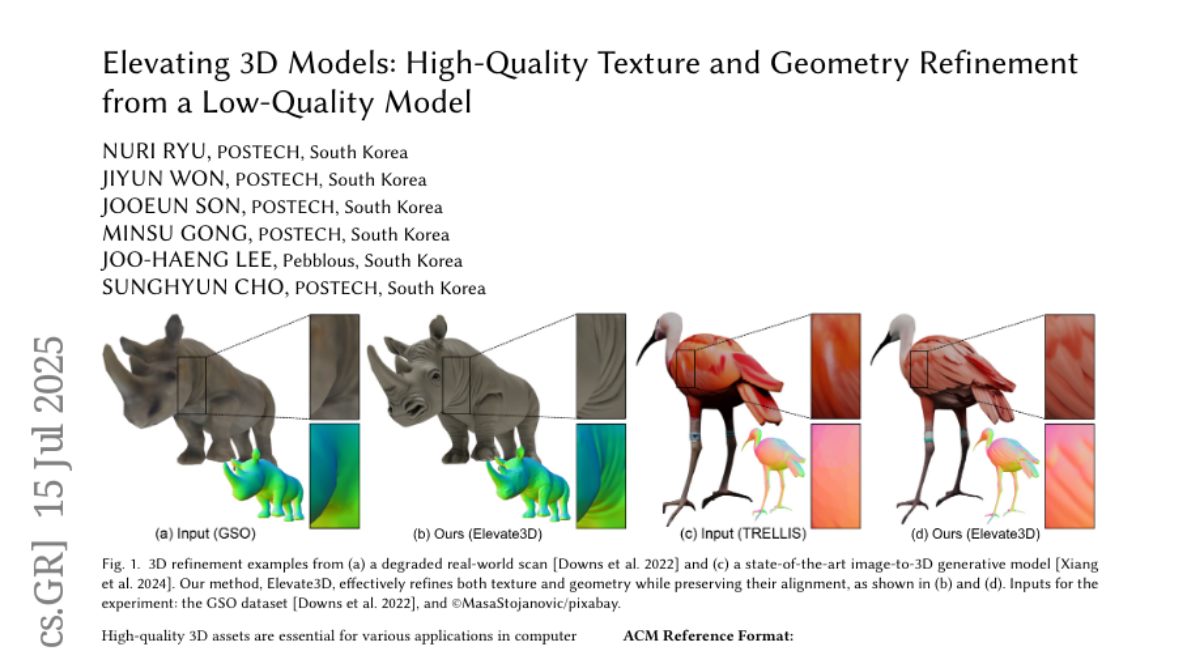

8. Elevating 3D Models: High-Quality Texture and Geometry Refinement from a Low-Quality Model

🔑 Keywords: Elevate3D, Texture Enhancement, Geometry Refinement, 3D Assets, HFS-SDEdit

💡 Category: Computer Vision

🌟 Research Objective:

– The primary goal of this research is to improve the texture and geometry refinement of low-quality 3D assets through a novel framework called Elevate3D.

🛠️ Research Methods:

– Utilizes HFS-SDEdit for high-quality texture enhancement while maintaining the original appearance and geometry.

– Employs monocular geometry predictors to refine geometry details in a view-by-view manner.

💬 Research Conclusions:

– Elevate3D outperforms recent methods by achieving state-of-the-art quality in 3D model refinement, addressing the scarcity of high-quality open-source 3D assets.

👉 Paper link: https://huggingface.co/papers/2507.11465

9. Finding Dori: Memorization in Text-to-Image Diffusion Models Is Less Local Than Assumed

🔑 Keywords: Text-to-image diffusion models, Data privacy, Pruning, Memorization locality, Adversarial fine-tuning

💡 Category: Generative Models

🌟 Research Objective:

– Explore the effectiveness of pruning-based defenses in text-to-image diffusion models and address memorization issues.

🛠️ Research Methods:

– Analyze robustness of pruning methods and introduce a novel adversarial fine-tuning technique to enhance model robustness against data replication.

💬 Research Conclusions:

– Existing pruning-based strategies are inadequate as minor changes can re-trigger memorization, stressing the need for true removal methods. Introduced adversarial fine-tuning offers a foundation for more robust and compliant generative AI.

👉 Paper link: https://huggingface.co/papers/2507.16880

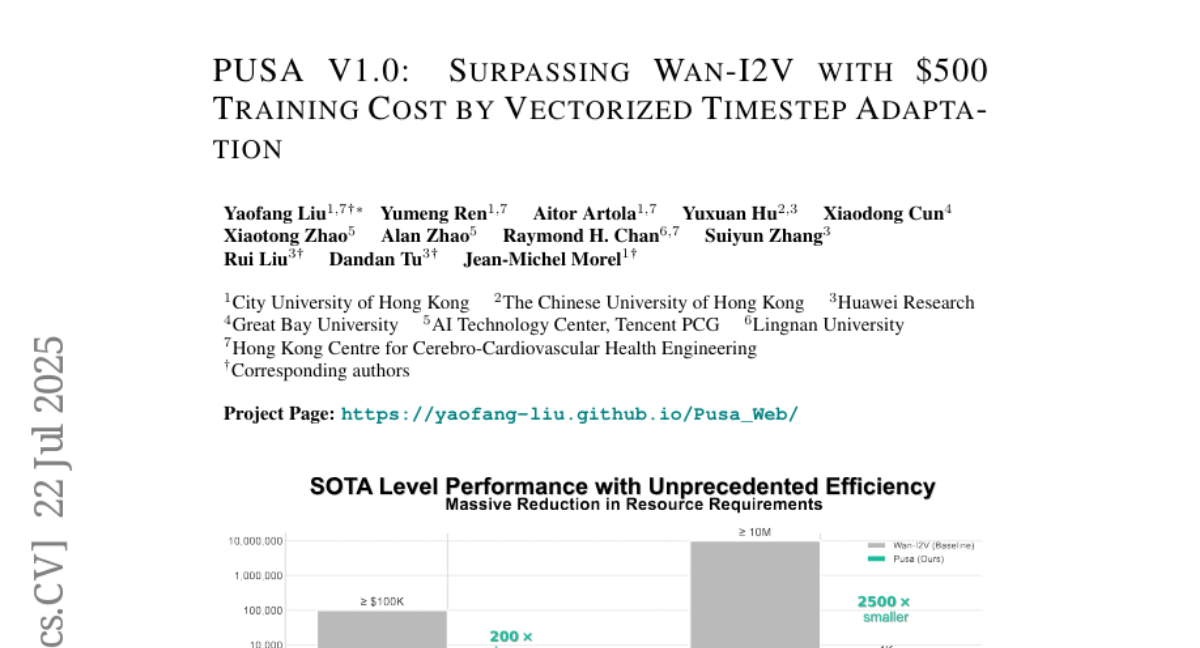

10. PUSA V1.0: Surpassing Wan-I2V with $500 Training Cost by Vectorized Timestep Adaptation

🔑 Keywords: Video Diffusion Models, Temporal Modeling, Vectorized Timestep Adaptation, Zero-shot Multi-task Capabilities, Text-to-Video Generation

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to enhance video diffusion models using a novel vectorized timestep adaptation approach, known as Pusa, to improve video generation efficiency and versatility.

🛠️ Research Methods:

– The approach leverages vectorized timestep adaptation (VTA) within the video diffusion framework, enabling fine-grained temporal control while preserving the capabilities of the base model.

💬 Research Conclusions:

– Pusa achieves significant improvements in video generation efficiency, outperforming existing models such as Wan-I2V-14B with remarkably low training costs and dataset size, and demonstrates versatile zero-shot multi-task capabilities, including text-to-video generation, without task-specific training.

👉 Paper link: https://huggingface.co/papers/2507.16116

11. Promptomatix: An Automatic Prompt Optimization Framework for Large Language Models

🔑 Keywords: Large Language Models, prompt engineering, Promptomatix, automatic prompt optimization, AI Systems and Tools

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce Promptomatix, an automatic framework for optimizing prompts in large language models without manual effort or domain expertise.

🛠️ Research Methods:

– Utilization of a meta-prompt-based optimizer and a DSPy-powered compiler within a modular framework that supports future extensions.

– Analysis of user intent, generation of synthetic training data, selection of prompting strategies, and refinement of prompts using cost-aware objectives.

💬 Research Conclusions:

– Promptomatix delivers competitive or superior performance across five task categories compared to existing libraries, while also reducing prompt length and computational overhead.

👉 Paper link: https://huggingface.co/papers/2507.14241

12.