AI Native Daily Paper Digest – 20250725

1. nablaNABLA: Neighborhood Adaptive Block-Level Attention

🔑 Keywords: NABLA, Video Diffusion Transformers, Block-Level Attention, Sparsity Patterns, AI-generated

💡 Category: Generative Models

🌟 Research Objective:

– Introduce NABLA, a Neighborhood Adaptive Block-Level Attention mechanism to enhance computational efficiency without compromising generative quality in video diffusion transformers.

🛠️ Research Methods:

– Employ block-wise attention with adaptive sparsity-driven threshold to reduce computational overhead.

– Seamlessly integrate with PyTorch’s Flex Attention operator.

💬 Research Conclusions:

– NABLA achieves up to 2.7x faster training and inference compared to baseline while maintaining quantitative metrics and visual quality.

👉 Paper link: https://huggingface.co/papers/2507.13546

2. Group Sequence Policy Optimization

🔑 Keywords: GSPO, Reinforcement Learning, Sequence-level clipping, Mixture-of-Experts, Qwen3 models

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The introduction of Group Sequence Policy Optimization (GSPO) as a reinforcement learning algorithm for training large language models.

🛠️ Research Methods:

– Use of sequence likelihood for defining importance ratio with sequence-level clipping, rewarding, and optimization within GSPO.

💬 Research Conclusions:

– GSPO achieves superior training efficiency and performance compared to previous algorithms, stabilizes MoE RL training, and simplifies RL infrastructure design, leading to improvements in Qwen3 models.

👉 Paper link: https://huggingface.co/papers/2507.18071

3. MUR: Momentum Uncertainty guided Reasoning for Large Language Models

🔑 Keywords: Large Language Models, Test-Time Scaling, Momentum Uncertainty-guided Reasoning, reasoning efficiency, gamma-control

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To dynamically optimize reasoning budgets in Large Language Models for enhanced accuracy and reduced computation during inference.

🛠️ Research Methods:

– Introduced Momentum Uncertainty-guided Reasoning (MUR), using stepwise uncertainty tracking, and gamma-control as a tuning mechanism without additional training.

💬 Research Conclusions:

– MUR significantly reduces computation by over 50% on average and enhances accuracy by 0.62-3.37% across various benchmarks using different Qwen3 model sizes.

👉 Paper link: https://huggingface.co/papers/2507.14958

4. LAPO: Internalizing Reasoning Efficiency via Length-Adaptive Policy Optimization

🔑 Keywords: Length-Adaptive Policy Optimization, Reinforcement Learning, Reasoning Models, Mathematical Reasoning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduce Length-Adaptive Policy Optimization (LAPO) to transform reasoning length control into an intrinsic model capability.

🛠️ Research Methods:

– Use a two-stage reinforcement learning process to teach models natural reasoning patterns and meta-cognitive guidance for efficient reasoning.

💬 Research Conclusions:

– LAPO reduces token usage by up to 40.9% and improves accuracy by 2.3%, with models developing the ability to allocate computational resources effectively.

👉 Paper link: https://huggingface.co/papers/2507.15758

5. Captain Cinema: Towards Short Movie Generation

🔑 Keywords: Captain Cinema, AI-generated summary, Multimodal Diffusion Transformers, keyframe planning, video synthesis

💡 Category: Generative Models

🌟 Research Objective:

– Captain Cinema aims to generate high-quality short movies from textual descriptions using an advanced framework for narrative and visual coherence.

🛠️ Research Methods:

– Employs top-down keyframe planning and bottom-up video synthesis alongside an interleaved training strategy for Multimodal Diffusion Transformers, utilizing a curated cinematic dataset.

💬 Research Conclusions:

– Demonstrates efficiency and high-quality performance in creating visually coherent and narratively consistent short movies.

👉 Paper link: https://huggingface.co/papers/2507.18634

6. Hierarchical Budget Policy Optimization for Adaptive Reasoning

🔑 Keywords: Hierarchical Budget Policy Optimization, reinforcement learning, reasoning models, computational efficiency

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The research aims to optimize reasoning models by learning problem-specific depths without losing capability.

🛠️ Research Methods:

– The study introduces Hierarchical Budget Policy Optimization (HBPO), a reinforcement learning framework, utilizing hierarchical budget exploration and differentiated reward mechanisms to allocate computational resources efficiently while retaining the model’s capacity for complex tasks.

💬 Research Conclusions:

– HBPO reduces token usage by up to 60.6% and improves accuracy by 3.14% on reasoning benchmarks, demonstrating that reasoning efficiency and capability can be optimized together without conflict.

👉 Paper link: https://huggingface.co/papers/2507.15844

7. TTS-VAR: A Test-Time Scaling Framework for Visual Auto-Regressive Generation

🔑 Keywords: TTS-VAR, test-time scaling, visual auto-regressive models, clustering, resampling

💡 Category: Generative Models

🌟 Research Objective:

– To introduce TTS-VAR, a framework designed to improve visual auto-regressive models’ generation quality by applying test-time scaling.

🛠️ Research Methods:

– Utilization of an adaptive descending batch size schedule and integration of clustering and resampling techniques to enhance model efficiency and performance.

💬 Research Conclusions:

– TTS-VAR achieves a notable improvement in generation quality, demonstrated by an 8.7% increase in GenEval score, highlighting the impact of early-stage structural features and varying resampling efficacy across scales.

👉 Paper link: https://huggingface.co/papers/2507.18537

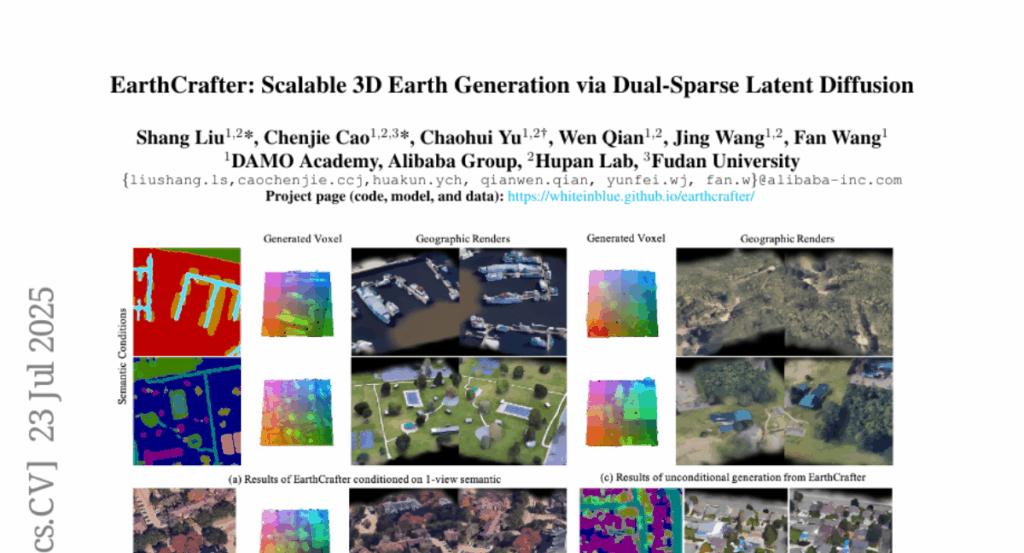

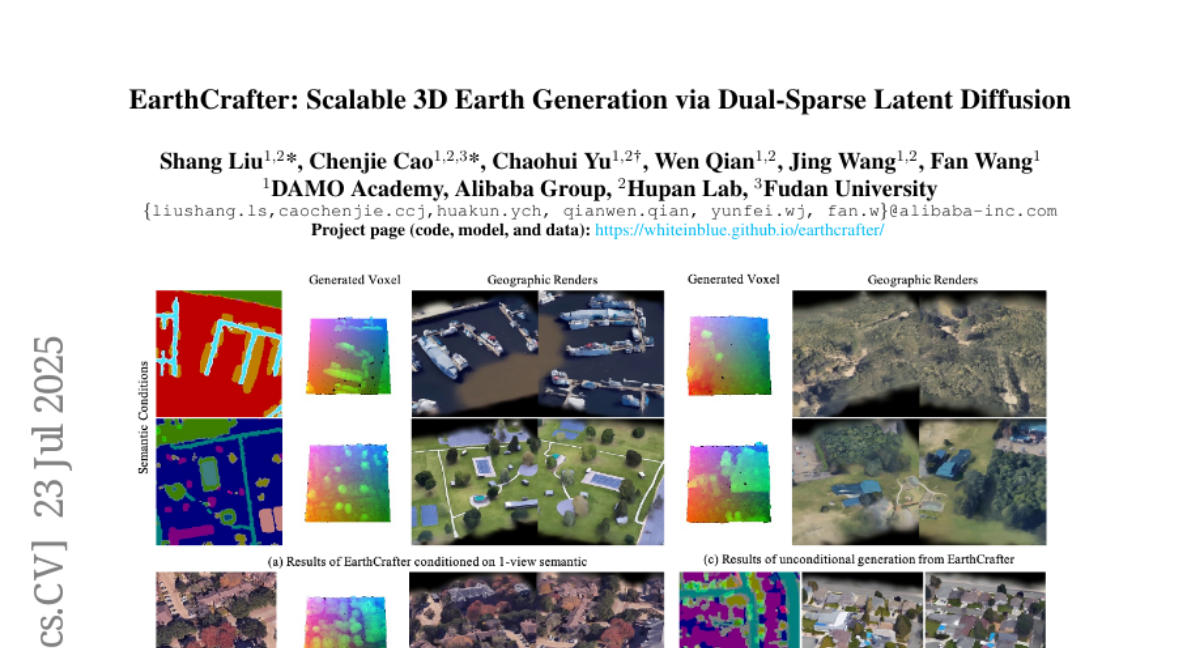

8. EarthCrafter: Scalable 3D Earth Generation via Dual-Sparse Latent Diffusion

🔑 Keywords: 3D generation, Aerial-Earth3D, EarthCrafter, latent diffusion

💡 Category: Generative Models

🌟 Research Objective:

– Address the challenge of scaling 3D modeling methods to cover vast geographic areas.

🛠️ Research Methods:

– Introduction of Aerial-Earth3D, the largest 3D aerial dataset, featuring 50k curated scenes from the U.S. mainland.

– Development of EarthCrafter framework, utilizing sparse-decoupled latent diffusion for efficient and detailed large-scale 3D Earth generation.

💬 Research Conclusions:

– EarthCrafter significantly enhances performance in generating extremely large-scale 3D models while supporting a range of applications like semantic-guided urban layout generation.

👉 Paper link: https://huggingface.co/papers/2507.16535

9. DriftMoE: A Mixture of Experts Approach to Handle Concept Drifts

🔑 Keywords: DriftMoE, Mixture-of-Experts, Concept Drift, Neural Router, Expert Specialization

💡 Category: Machine Learning

🌟 Research Objective:

– To introduce DriftMoE, an online Mixture-of-Experts architecture with a compact neural router, for adapting to concept drift in data streams efficiently.

🛠️ Research Methods:

– Utilized a novel co-training framework involving a neural router co-trained with incremental Hoeffding tree experts.

– Employed a symbiotic learning loop for expert specialization and accurate predictions across different data stream benchmarks.

💬 Research Conclusions:

– DriftMoE achieves competitive results with state-of-the-art stream learning adaptive ensembles.

– Demonstrates a principled and efficient approach to adapting concept drift across various data stream benchmarks.

👉 Paper link: https://huggingface.co/papers/2507.18464

10. DMOSpeech 2: Reinforcement Learning for Duration Prediction in Metric-Optimized Speech Synthesis

🔑 Keywords: DMOSpeech 2, speech synthesis, duration prediction, reinforcement learning, teacher-guided sampling

💡 Category: Generative Models

🌟 Research Objective:

– Optimize the duration predictor component within DMOSpeech 2 for improved speech synthesis performance using reinforcement learning.

🛠️ Research Methods:

– Utilizes a novel duration policy framework involving group relative preference optimization (GRPO) with speaker similarity and word error rate as reward signals.

– Introduces teacher-guided sampling, leveraging a hybrid approach between teacher and student models to enhance output diversity.

💬 Research Conclusions:

– Demonstrates superior performance across all metrics compared to previous systems.

– Achieves a reduction in sampling steps by half without quality degradation, marking a significant advancement in metric-optimized speech synthesis pipelines.

👉 Paper link: https://huggingface.co/papers/2507.14988

11. GLiNER2: An Efficient Multi-Task Information Extraction System with Schema-Driven Interface

🔑 Keywords: GLiNER2, Information Extraction, Named Entity Recognition, Multi-task Composition, Transformer Model

💡 Category: Natural Language Processing

🌟 Research Objective:

– To present GLiNER2, a unified framework enhancing NLP task support using a single efficient model.

🛠️ Research Methods:

– Utilizes a pretrained transformer encoder architecture to integrate named entity recognition, text classification, and hierarchical structured data extraction.

💬 Research Conclusions:

– GLiNER2 offers competitive performance in extraction and classification tasks and is more accessible for deployment than large language models.

👉 Paper link: https://huggingface.co/papers/2507.18546

12. Technical Report of TeleChat2, TeleChat2.5 and T1

🔑 Keywords: Supervised Fine-Tuning, Direct Preference Optimization, Reinforcement Learning, Transformer-based architectures, Chain-of-Thought

💡 Category: Natural Language Processing

🌟 Research Objective:

– To upgrade the TeleChat series with enhanced training strategies focusing on both performance and speed improvements.

🛠️ Research Methods:

– Implemented advanced training methodologies, including Supervised Fine-Tuning, Direct Preference Optimization, and reinforcement learning.

– Employed a large-scale pretraining on 10 trillion tokens and domain-specific datasets in continual pretraining phases.

💬 Research Conclusions:

– Demonstrated superior performance in reasoning and task execution, with the T1 model showing substantial improvements in mathematical and coding capabilities.

– Released models, TeleChat2, TeleChat2.5, and T1, publicly with parameter variations to support diverse applications.

👉 Paper link: https://huggingface.co/papers/2507.18013

13. A New Pair of GloVes

🔑 Keywords: GloVe, AI-generated summary, word embeddings, NER, Wikipedia

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to document, describe, and evaluate new 2024 English GloVe models to improve upon the 2014 versions, focusing on enhanced performance in Named Entity Recognition tasks.

🛠️ Research Methods:

– Two sets of word embeddings were trained using updated datasets from Wikipedia, Gigaword, and Dolma subset, accompanied by thorough documentation of data versions and preprocessing.

💬 Research Conclusions:

– The 2024 GloVe models incorporate culturally and linguistically relevant vocabulary, maintain structural task performance, and demonstrate improved results on recent, temporally dependent NER datasets, particularly non-Western newswire data.

👉 Paper link: https://huggingface.co/papers/2507.18103

14. SegDT: A Diffusion Transformer-Based Segmentation Model for Medical Imaging

🔑 Keywords: SegDT, diffusion transformer, skin lesion segmentation, inference speeds, AI in Healthcare

💡 Category: AI in Healthcare

🌟 Research Objective:

– Introduce SegDT, a diffusion transformer model for skin lesion segmentation in medical applications.

🛠️ Research Methods:

– Evaluated on three benchmarking datasets and compared with existing models, focusing on performance and speed.

💬 Research Conclusions:

– Achieves state-of-the-art results with fast inference, enhancing deep learning’s role in medical image analysis.

👉 Paper link: https://huggingface.co/papers/2507.15595

15. Discovering and using Spelke segments

🔑 Keywords: SpelkeNet, Spelke objects, motion affordance map, expected-displacement map, AI Native

💡 Category: Computer Vision

🌟 Research Objective:

– To introduce and benchmark the Spelke object concept through the SpelkeBench dataset to improve the understanding and handling of Spelke objects in images.

🛠️ Research Methods:

– Development and training of SpelkeNet, a visual world model designed to predict motion distributions.

– Utilization of motion affordance maps and expected-displacement maps for statistical counterfactual probing to identify Spelke segments.

💬 Research Conclusions:

– SpelkeNet outperforms supervised baselines like SegmentAnything on the SpelkeBench dataset.

– The Spelke concept significantly enhances performance on the 3DEditBench benchmark for physical object manipulation, proving practical use in various object manipulation models.

👉 Paper link: https://huggingface.co/papers/2507.16038

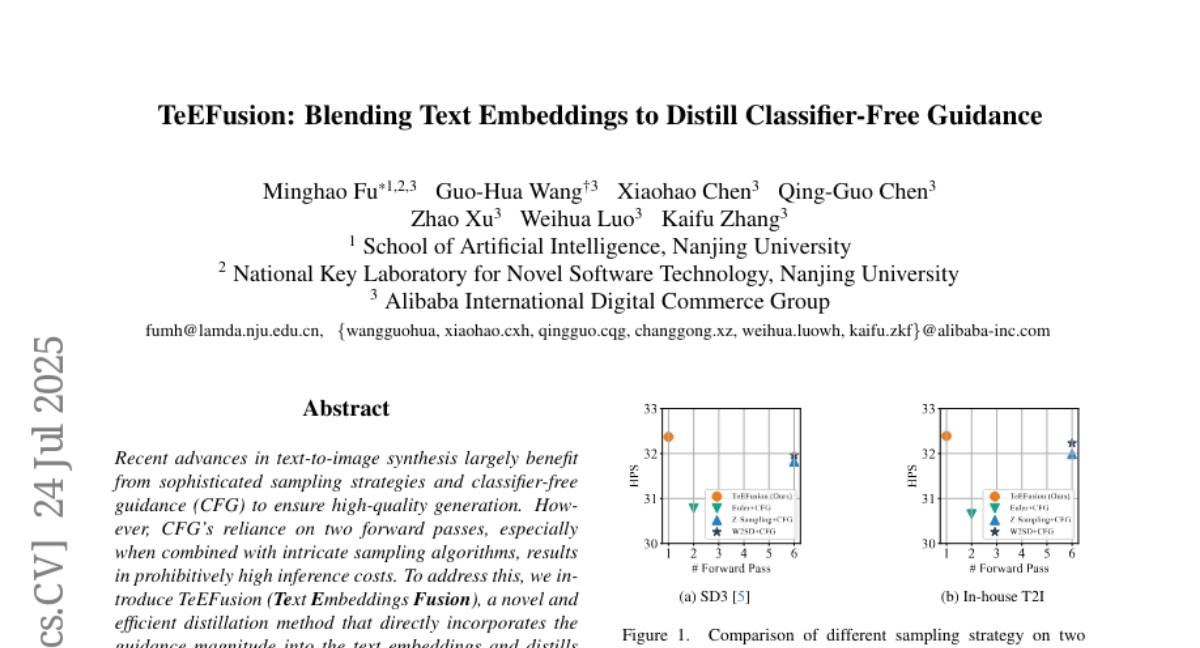

16. TeEFusion: Blending Text Embeddings to Distill Classifier-Free Guidance

🔑 Keywords: Text-to-Image Synthesis, Classifier-Free Guidance, Text Embeddings, Distillation Method, Linear Operations

💡 Category: Generative Models

🌟 Research Objective:

– To enhance text-to-image synthesis by integrating classifier-free guidance into text embeddings efficiently, reducing inference costs without losing image quality.

🛠️ Research Methods:

– Introduce TeEFusion, a novel efficient distillation method that directly incorporates guidance magnitude into text embeddings and simplifies complex sampling strategies using linear operations.

💬 Research Conclusions:

– TeEFusion enables the student model to mimic the teacher model’s performance while being up to 6 times faster during inference and maintaining comparable image quality.

👉 Paper link: https://huggingface.co/papers/2507.18192

17. Agentar-Fin-R1: Enhancing Financial Intelligence through Domain Expertise, Training Efficiency, and Advanced Reasoning

🔑 Keywords: Large Language Models, trustworthiness assurance, financial large language models, domain specialization, sophisticated reasoning capabilities

💡 Category: AI in Finance

🌟 Research Objective:

– Introduce the Agentar-Fin-R1 series to enhance reasoning capabilities, reliability, and domain specialization in financial applications through a trustworthiness assurance framework.

🛠️ Research Methods:

– Engineered based on the Qwen3 foundation model with an optimization approach that integrates a high-quality systematic financial task label system and a comprehensive multi-layered trustworthiness assurance framework.

– Utilized label-guided automated difficulty-aware optimization, two-stage training pipeline, and dynamic attribution systems to improve training efficiency.

💬 Research Conclusions:

– Achieves state-of-the-art performance on both financial and general reasoning tasks.

– Validates effectiveness as a trustworthy solution for high-stakes financial applications through comprehensive evaluations on mainstream financial benchmarks and the innovative Finova evaluation benchmark.

👉 Paper link: https://huggingface.co/papers/2507.16802

18. Iwin Transformer: Hierarchical Vision Transformer using Interleaved Windows

🔑 Keywords: Iwin Transformer, hierarchical vision transformer, position-embedding-free, interleaved window attention, depthwise separable convolution

💡 Category: Computer Vision

🌟 Research Objective:

– The study introduces the Iwin Transformer, a hierarchical vision transformer design that eliminates the need for position embeddings, aiming to enhance global information exchange in tasks such as image classification, semantic segmentation, and video action recognition.

🛠️ Research Methods:

– Utilization of interleaved window attention and depthwise separable convolution to efficiently connect distant and neighboring tokens for global information exchange. Extensive experiments were conducted to demonstrate its effectiveness.

💬 Research Conclusions:

– Iwin Transformer achieves strong performance in visual tasks with notable top-1 accuracy on ImageNet-1K and exhibits flexibility in replacing the self-attention module in specific image generation tasks, showcasing potential for future research like Iwin 3D Attention in video generation.

👉 Paper link: https://huggingface.co/papers/2507.18405

19. True Multimodal In-Context Learning Needs Attention to the Visual Context

🔑 Keywords: Multimodal Large Language Models, Multimodal In-Context Learning, Dynamic Attention Reallocation, TrueMICL

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to enhance Multimodal In-Context Learning (MICL) by addressing its current limitations in leveraging visual information effectively.

🛠️ Research Methods:

– The authors introduce Dynamic Attention Reallocation (DARA) as a fine-tuning strategy to balance attention between visual and textual tokens.

– They present a dedicated dataset, TrueMICL, which requires integrating multimodal information for task completion.

💬 Research Conclusions:

– The proposed solutions result in substantial improvements in the true multimodal in-context learning capabilities, indicating a better adaptation to multimodal tasks.

👉 Paper link: https://huggingface.co/papers/2507.15807

20. HLFormer: Enhancing Partially Relevant Video Retrieval with Hyperbolic Learning

🔑 Keywords: HLFormer, Hyperbolic Modeling, Lorentz Attention Block, Video-Text Retrieval, Cross-modal Matching

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary aim of the paper is to enhance video-text retrieval by proposing HLFormer, which addresses hierarchical and partial relevance issues often mishandled by existing Euclidean space methods.

🛠️ Research Methods:

– The study introduces a hyperbolic modeling framework that incorporates Lorentz and Euclidean attention blocks alongside a Mean-Guided Adaptive Interaction Module to effectively encode video embeddings in hybrid spaces.

– A Partial Order Preservation Loss is introduced to enforce text-video hierarchy using Lorentzian cone constraints to improve partial relevance matching.

💬 Research Conclusions:

– HLFormer demonstrates superior performance over state-of-the-art methods in partially relevant video retrieval, significantly improving the cross-modal matching between video content and text queries.

👉 Paper link: https://huggingface.co/papers/2507.17402

21. Deep Learning-Based Age Estimation and Gender Deep Learning-Based Age Estimation and Gender Classification for Targeted Advertisement

🔑 Keywords: CNN, Facial Features, Shared Representations, Gender Classification, Data Augmentation

💡 Category: Computer Vision

🌟 Research Objective:

– Develop a custom CNN architecture to simultaneously classify age and gender from facial images, enhancing targeted advertising effectiveness.

🛠️ Research Methods:

– Employ a CNN architecture optimized to leverage correlations between age and gender, using a large, diverse dataset pre-processed for robustness.

💬 Research Conclusions:

– Achieved significant improvement in gender classification accuracy at 95% and a competitive mean absolute error of 5.77 years for age estimation. Identified challenges in estimating ages of younger individuals, suggesting the need for targeted data augmentation for further model refinement.

👉 Paper link: https://huggingface.co/papers/2507.18565

22.